Hands-On Tutorial: Sync Recipe¶

Over the next two lessons, we’ll learn about two simple visual methods for moving data to a database: the Sync and Prepare recipes.

Prerequisites¶

In order to complete the hands-on lessons for this course, you will need to have configured connection to an SQL database. The example here demonstrates using a PostgreSQL connection called PostgreSQL_tshirt.

Note

If you have not already configured an SQL connection, please see the course on Integration with SQL Databases.

Create Your Project¶

The first step is to create a new project.

From the Dataiku DSS homepage, click +New Project > DSS Tutorials > Code > SQL in Dataiku DSS (Tutorial).

Note

You can also download the starter project from this website and import it as a zip file.

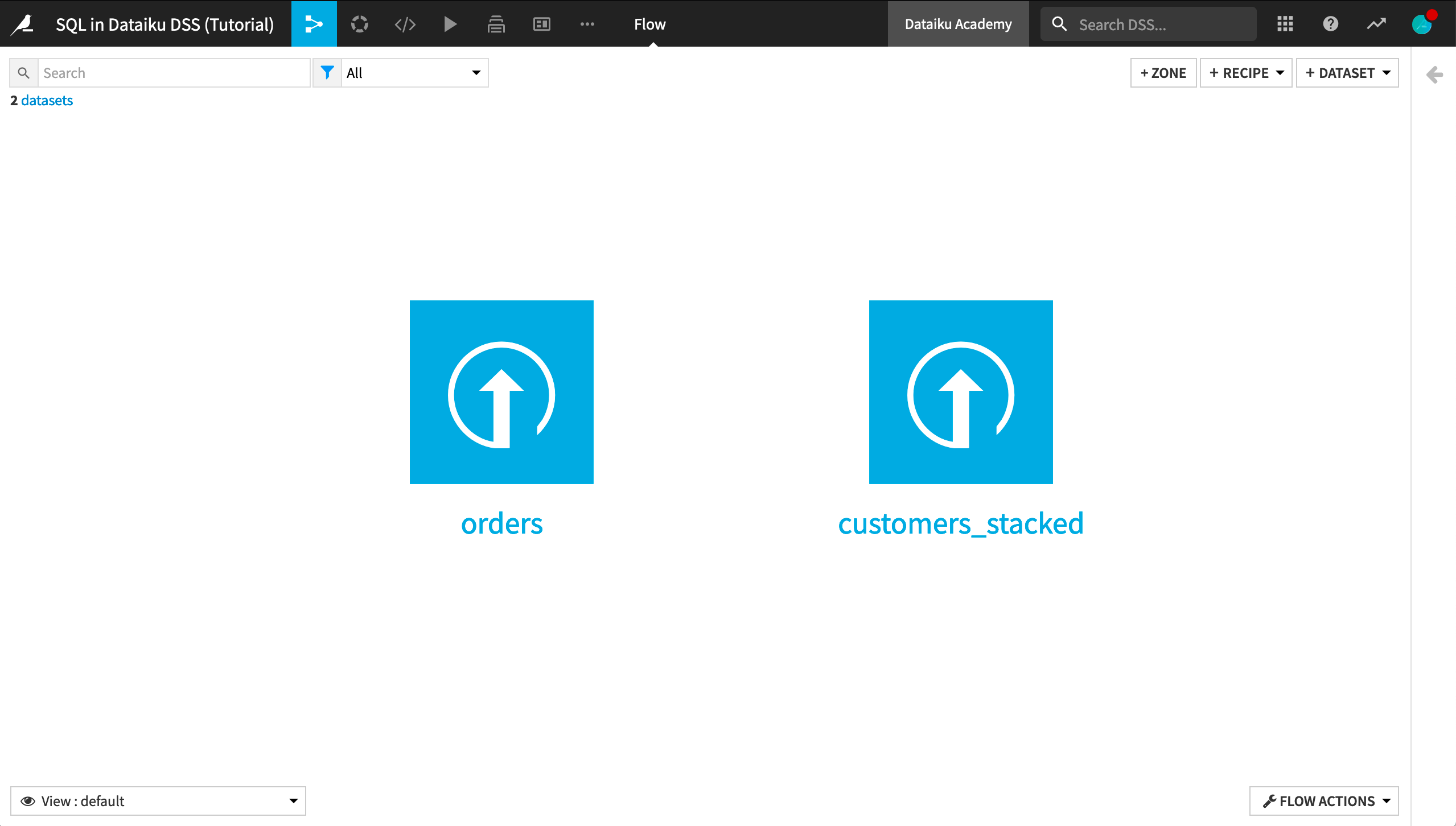

In the Flow, you see the uploaded Haiku T-Shirt orders and customer data. Further, the labeled and unlabeled customer data has been stacked into a single dataset.

Multiple Ways to Move Data to Your Database¶

First, we want to get the uploaded data into the SQL database. We’ll highlight a couple ways to do this.

Sync Recipe¶

Tip

A screencast below walks through the actions described here.

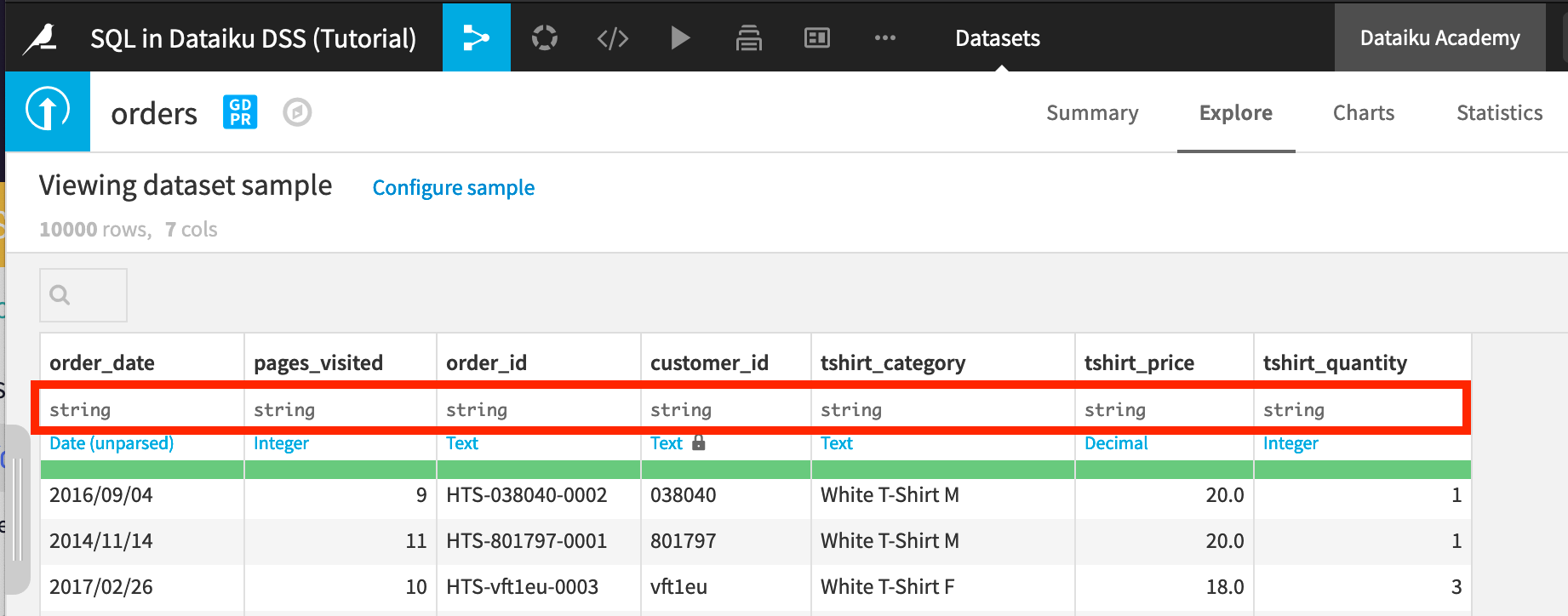

Let’s start by opening the orders dataset. It is a CSV file that has been uploaded into Dataiku DSS. CSV files do not contain any kind of typing information. Thus, the columns of this dataset are for the moment not specifically typed, and so Dataiku DSS assumes by default that all columns have a string storage type.

However, when we sync this dataset to the database, we want pages_visited, tshirt_price, and tshirt_quantity to have integer, double, and integer storage types, respectively.

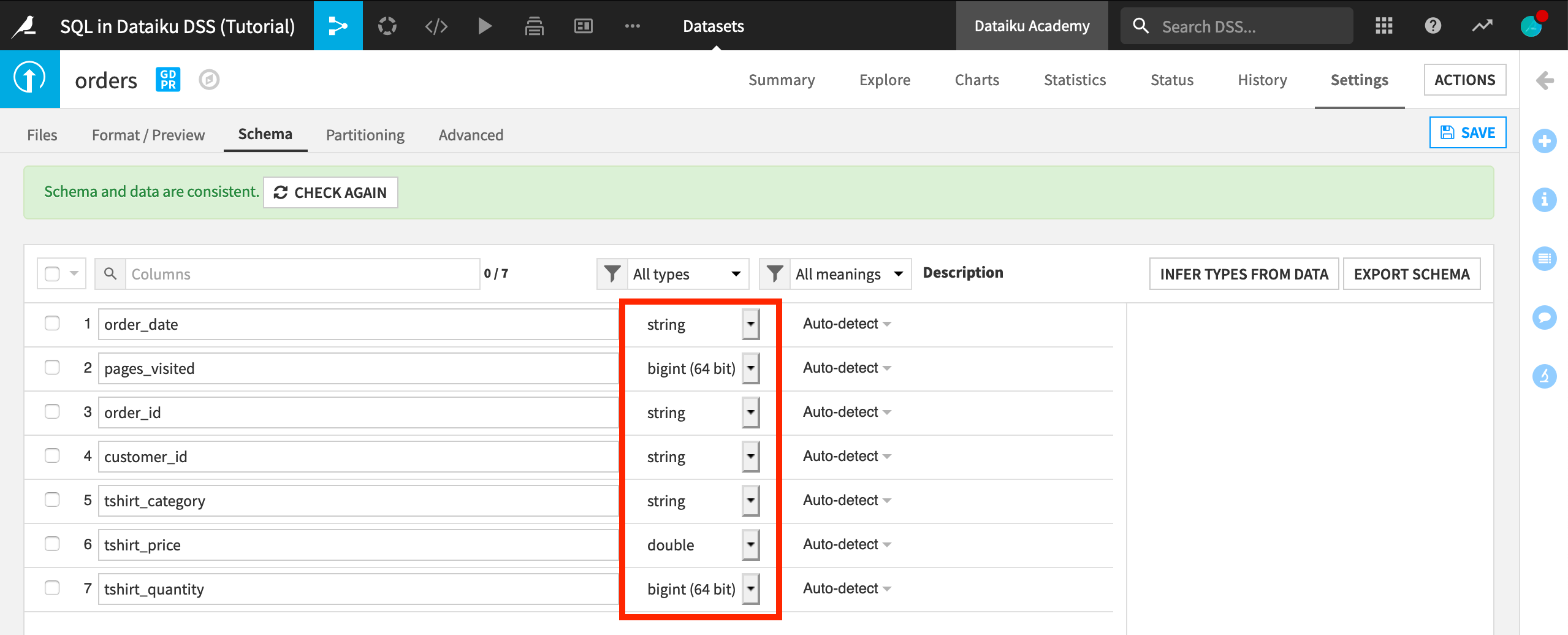

The Sync recipe maps the storage type in the input dataset to a similar type in the output database. So let us first set the column types in the input dataset. One way to handle this is to infer the storage types from the data and save the updated schema.

Note

Note that the type inference is performed against a sample of the data, and you should check that the inferred types correspond to your actual data.

Open the Settings tab of the orders dataset.

In the Schema subtab, click Check Now to confirm the schema is consistent.

Then click Infer types from data, confirm, and then save your dataset.

Return to the Explore tab to confirm the new storage types.

With the updated storage types, let’s sync the dataset to the database.

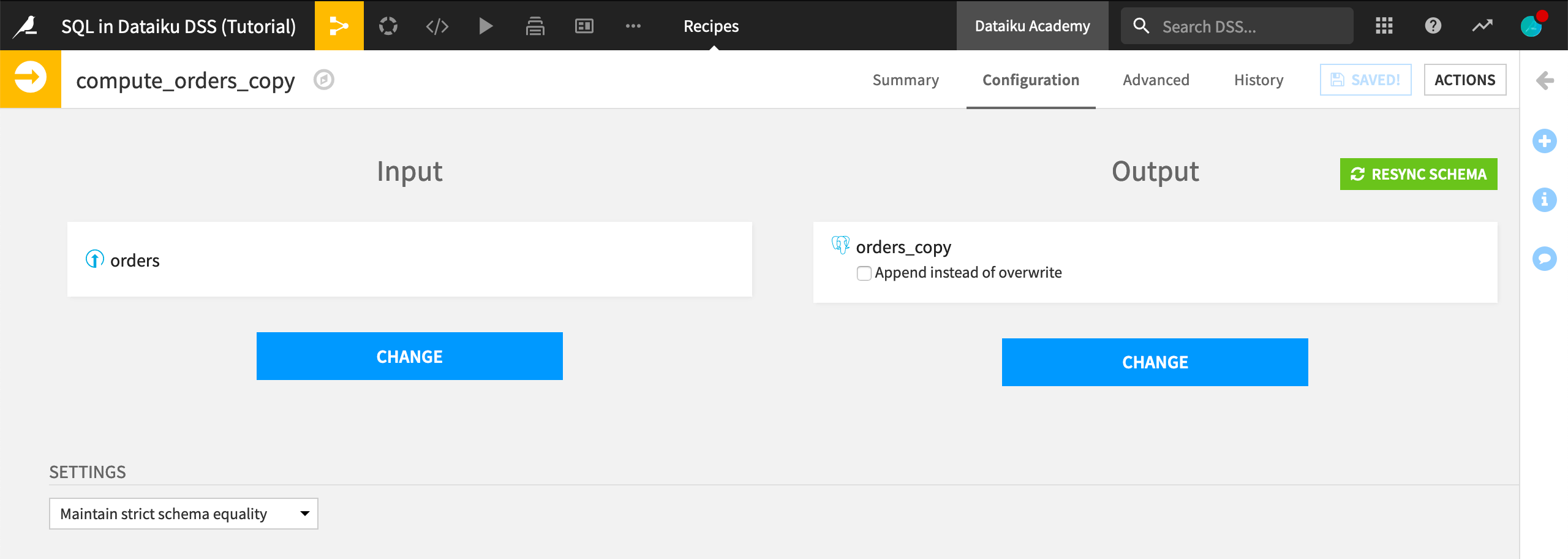

From the orders dataset, select Sync from the Actions sidebar.

Leave the default dataset name of

orders_copy.Store the new dataset into a SQL connection. In the video below, we use the PostgreSQL_tshirt connection.

Create and run the recipe.

To review these steps, please see the video below.