How the Dataiku Architecture Supports MLOps

In this lesson, we’ll describe the Dataiku architecture and how it pertains to MLOps, including:

- Dataiku architecture tools and nodes,
- Batch scoring project lifecycle, and
- Real-time scoring project lifecycle.

Tip

This content is also included in a free Dataiku Academy course on Production Concepts, which is part of the MLOps Practitioner learning path. Register for the course there if you’d like to track and validate your progress alongside concept videos, summaries, hands-on tutorials, and quizzes.

The final mile of putting machine learning models into production can be a significant challenge. One way to meet this challenge is to work within a framework that is already prepared to push models to production. This is where the architecture of Dataiku comes in.

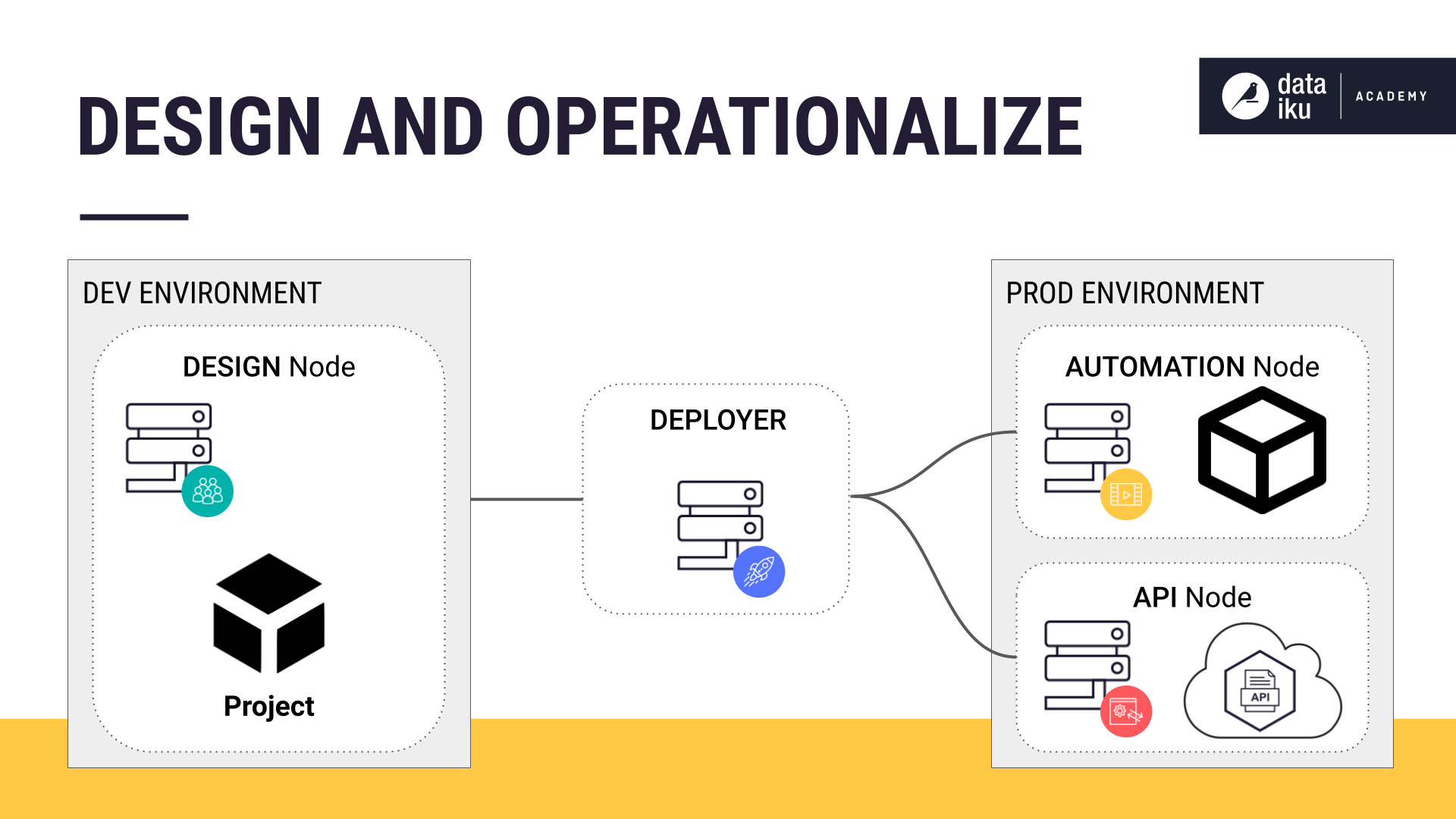

The Dataiku architecture consists of tools and nodes for designing your models and then operationalizing them. These tools and nodes can be thought of as distinct environments, each with its own purpose, that are still part of the same platform.

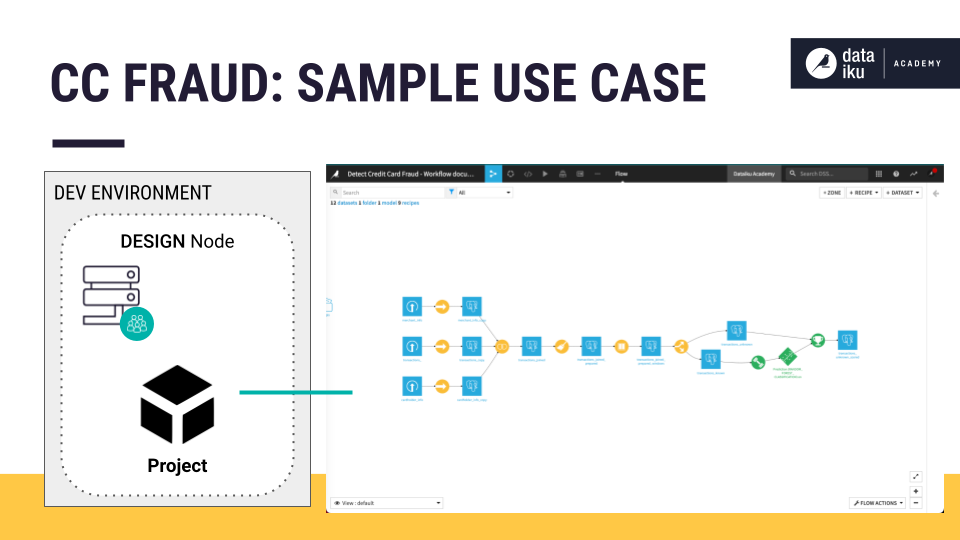

Using an example use case, Credit Card Fraud Analysis, we’ll describe the Dataiku architecture nodes, starting with the Design node, which is where we build our project.

To illustrate, we’ll describe how we use the Deployer to deploy our project to the Automation node for batch processing and then to the API node for real-time scoring.

A Project Bundle is Deployed for the Purpose of Batch Processing

In our Credit Card Fraud example, we aim to batch process and score credit card transactions once per day. Our batch-processing project lifecycle begins at the Design node, the most flexible node.

The Design node is a shared development environment where we can collaboratively connect to, analyze, and prepare our data, creating data visualizations and prototypes. Having this sandbox allows us to fail (even spectacularly) without impacting projects in production.

The Design node is also where we iteratively build our prediction model, set up metrics and checks, and create automation scenarios and dashboards.

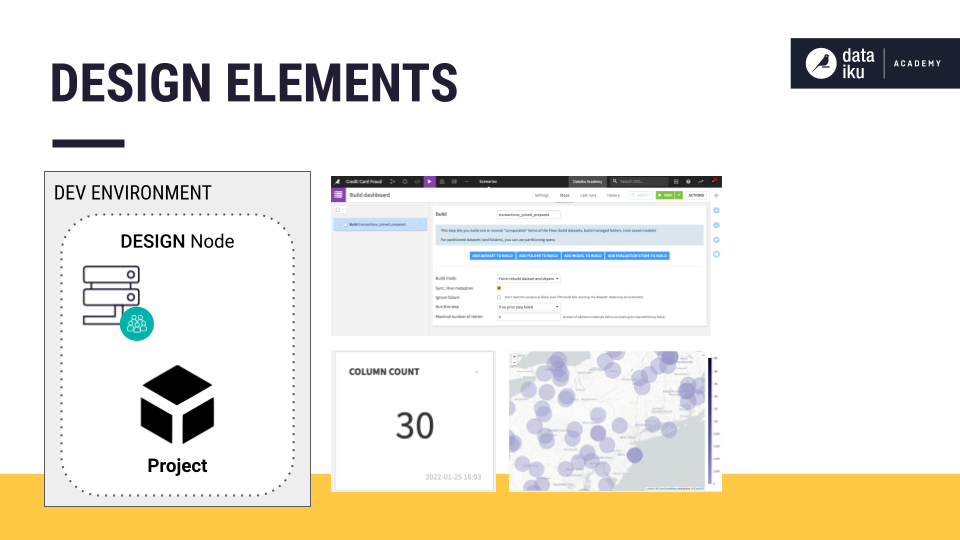

For example, let’s say the business wants a daily dashboard showing where that day’s fraudulent transactions occurred according to the prediction model. We want to ensure the data used to create the dashboard passes quality checks before building the dashboard. For instance, one data quality check might verify the number of unique merchant IDs, and another might confirm that no new columns have appeared in the new, unknown transactions dataset.
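To make the two checks above concrete, here is a minimal sketch in plain Python. It is not how Dataiku implements metrics and checks; the column names, the sample rows, and the `MIN_UNIQUE_MERCHANTS` threshold are all hypothetical values chosen for illustration.

```python
from typing import Dict, List, Sequence

# Hypothetical schema and threshold, chosen only for illustration.
EXPECTED_COLUMNS = ["transaction_id", "merchant_id", "amount", "purchase_date"]
MIN_UNIQUE_MERCHANTS = 2


def check_no_new_columns(rows: Sequence[Dict[str, object]], expected: List[str]) -> bool:
    """Return True when no row carries a column outside the expected schema."""
    allowed = set(expected)
    return all(set(row) <= allowed for row in rows)


def count_unique_merchants(rows: Sequence[Dict[str, object]]) -> int:
    """Count distinct merchant IDs among rows that have a merchant_id value."""
    return len({row["merchant_id"] for row in rows if "merchant_id" in row})


def run_quality_checks(rows: Sequence[Dict[str, object]]) -> Dict[str, bool]:
    """Run both checks and report a pass/fail flag for each one."""
    return {
        "no_new_columns": check_no_new_columns(rows, EXPECTED_COLUMNS),
        "enough_merchants": count_unique_merchants(rows) >= MIN_UNIQUE_MERCHANTS,
    }


def should_build_dashboard(results: Dict[str, bool]) -> bool:
    """The dashboard is rebuilt only when every check passes."""
    return all(results.values())


# Hypothetical sample of the day's transactions.
sample_rows = [
    {"transaction_id": 1, "merchant_id": "M-001", "amount": 25.0, "purchase_date": "2020-01-01"},
    {"transaction_id": 2, "merchant_id": "M-002", "amount": 310.5, "purchase_date": "2020-01-01"},
]

results = run_quality_checks(sample_rows)
print(results, should_build_dashboard(results))
```

Gating the dashboard build on `should_build_dashboard` mirrors the idea in the text: a failed check stops the workflow before stale or malformed data reaches the business.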

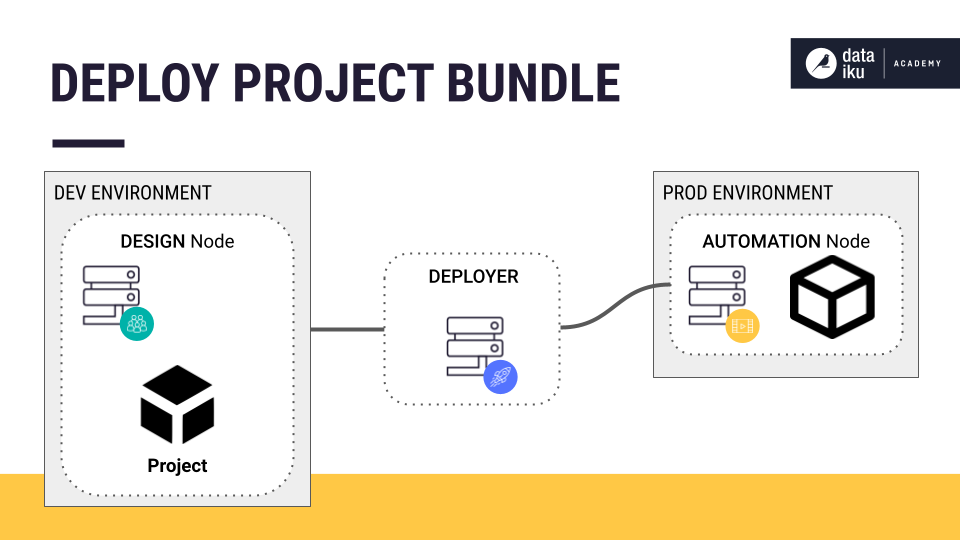

After designing our workflow, we package all of our work as a bundle. You can think of a bundle as a snapshot of the project that contains the data and configurations needed to reconstruct the tasks in the production environment. This snapshot includes the recipes, transformations, and automation scenarios. In other words, a bundle exports the project structure.

Once we create our bundle, we use the Deployer to deploy the project. The Deployer is a tool for deploying projects and API services. It can be set up as a standalone instance of Dataiku or as part of the Design or Automation node. We’ll use a specific Deployer component known as the Project Deployer for our batch processing use case.
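These steps are usually done from the Dataiku interface, but they can also be scripted. The sketch below assumes a client object that behaves like `dataikuapi.DSSClient` from Dataiku’s public Python API package, whose project handle offers `export_bundle` and `publish_bundle`. The function name, project key, and bundle ID are hypothetical, and the calls should be checked against the API reference for your Dataiku version.

```python
def create_and_publish_bundle(client, project_key: str, bundle_id: str) -> str:
    """Snapshot a Design node project as a bundle and publish it to the Project Deployer.

    `client` is assumed to behave like `dataikuapi.DSSClient` connected to the
    Design node. It is passed in so this sketch stays independent of any
    particular instance or credentials.
    """
    project = client.get_project(project_key)

    # Create the bundle (the project snapshot) on the Design node.
    project.export_bundle(bundle_id)

    # Hand the bundle to the Project Deployer, from which it can be
    # deployed to an Automation node.
    project.publish_bundle(bundle_id)

    return bundle_id
```

With the `dataikuapi` package installed, `client` could be built as `dataikuapi.DSSClient(host, api_key)`, where `host` and `api_key` are placeholders for your Design node URL and a personal API key. A call might then look like `create_and_publish_bundle(client, "CREDIT_CARD_FRAUD", "v1")`.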

The Project Deployer deploys the bundle to the Automation node. The Automation node is an isolated environment for operationalizing batch-processed data or machine learning scoring and retraining (redesigning still happens on the Design node). The Automation node lets us orchestrate and execute data workflows in production, giving us tools to monitor performance and version different projects. The stable and isolated nature of the Automation node allows for repeatable, reliable, production-integrated processes.

Once we have deployed our project, we can start monitoring it. For example, the business can use an interactive dashboard to monitor the locations of the previous day’s transactions that the model predicted to be fraudulent.

An API Service is Deployed for the Purpose of Real-Time Scoring

Another type of model deployment supported by the Dataiku architecture is real-time scoring, where we generate predictions in real time. Our goal here is to flag each credit card transaction as fraudulent or not, individually, as the bank receives it.

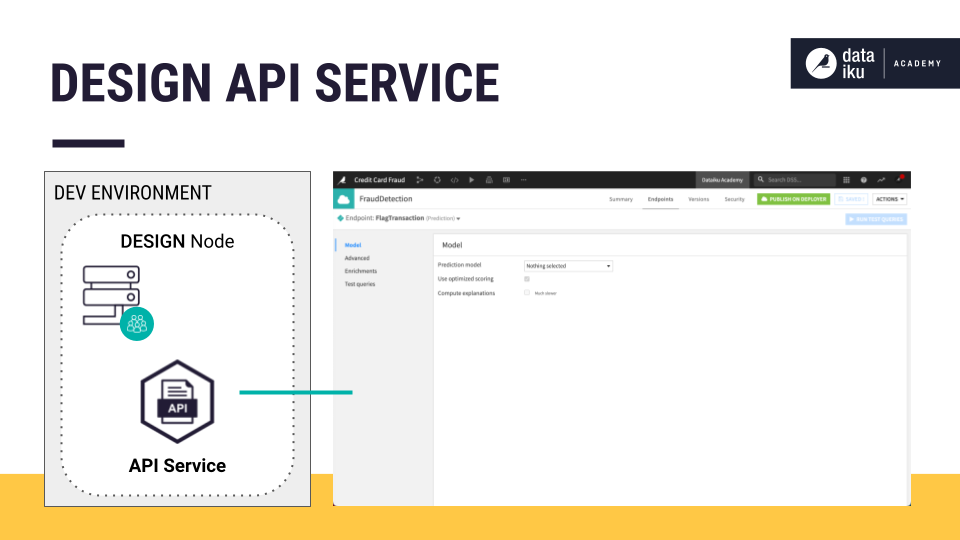

To start, we use the Design node to train our model. Then we create an API service from the model we want to deploy. This lets us use the API Designer to create our API endpoints. For our use case, our API endpoint will be the prediction model.

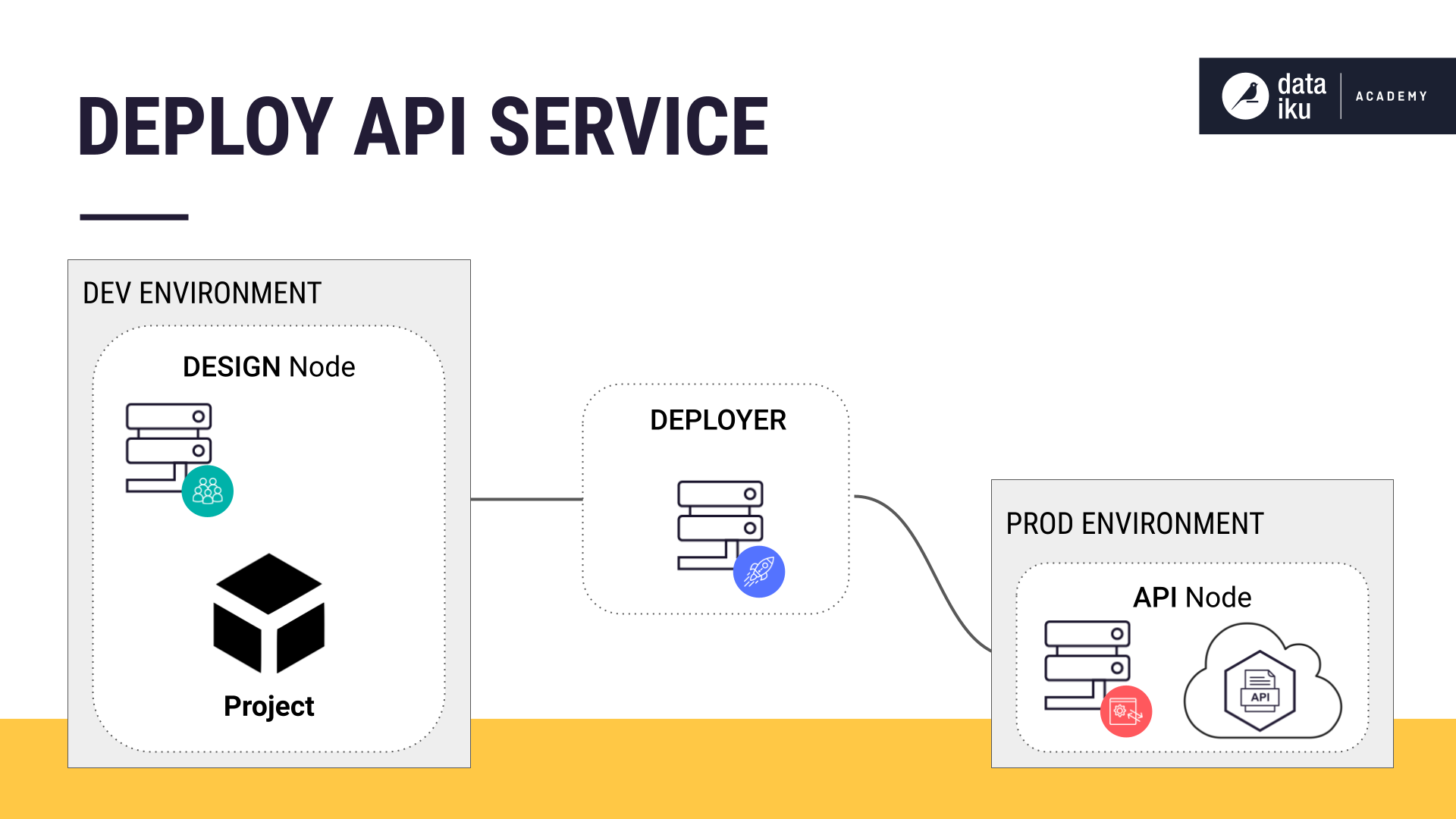

Once we run tests, we push our API package to the Deployer. In the Deployer, we will be able to see that we have both Projects and API Services available for deployment.

To deploy our API Service, we’ll be using another component of the Deployer known as the API Deployer. The API Deployer pushes our API service to the API node. The API node is a horizontally scalable and highly available web server. It operationalizes ML models and answers prediction requests.
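To illustrate what a prediction request to the API node might look like, here is a minimal sketch using only the Python standard library. We assume the API node exposes prediction endpoints at `/public/api/v1/<service_id>/<endpoint_id>/predict` and accepts a JSON body with a `features` object; confirm both against your instance’s documentation. The host, port, service ID, endpoint ID, and feature names are hypothetical.

```python
import json
import urllib.request
from typing import Any, Dict


def build_predict_request(base_url: str, service_id: str, endpoint_id: str,
                          features: Dict[str, Any]) -> urllib.request.Request:
    """Build (but do not send) a POST request that scores a single record."""
    # Assumed URL layout for a prediction endpoint on the API node.
    url = f"{base_url.rstrip('/')}/public/api/v1/{service_id}/{endpoint_id}/predict"
    body = json.dumps({"features": features}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def score_transaction(request: urllib.request.Request, timeout: float = 5.0) -> Dict[str, Any]:
    """Send the request to a running API node and decode the JSON response."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


# Hypothetical service, endpoint, and transaction features.
request = build_predict_request(
    "http://localhost:12000",
    "fraud_service",
    "fraud_model",
    {"merchant_id": "M-001", "amount": 310.5},
)
print(request.full_url)
```

Building the request separately from sending it lets us inspect the URL and payload without a live API node. Calling `score_transaction(request)` only makes sense once the service has been deployed.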

Once we have deployed our API service to the API node, we can monitor the real-time predictions.

Summary

In summary, Dataiku is an end-to-end platform where analysts work within the tool to design and operationalize models.

Simply put, the architecture of Dataiku lets you deploy models to production and then monitor, maintain, and redeploy them.

In addition, Dataiku supplies tools to perform monitoring tasks such as tracking model quality or pipeline health.
