Concept: Explainable AI

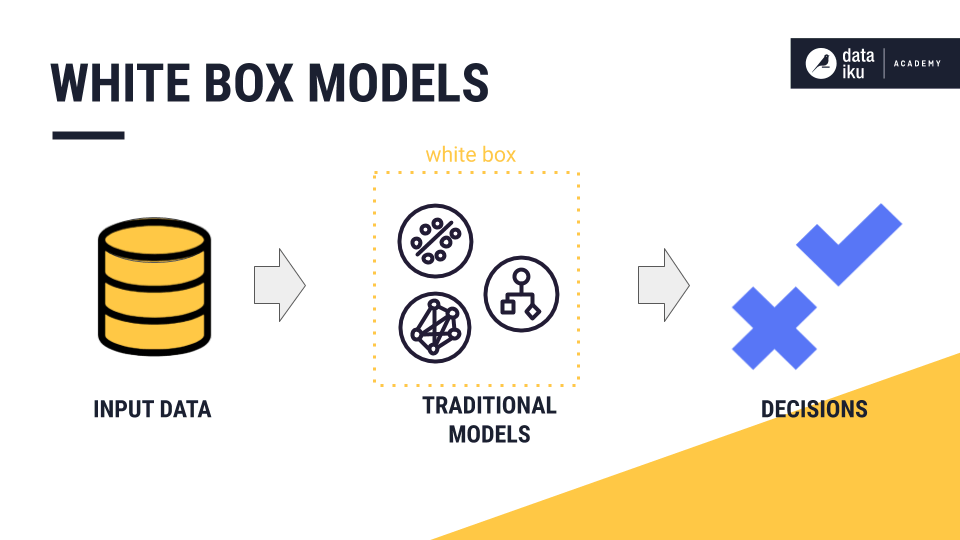

Traditional models, such as regression or tree-based models, are easier to understand and are known as white box models. For these models, the relationship between the training data and the model outcome is explainable.

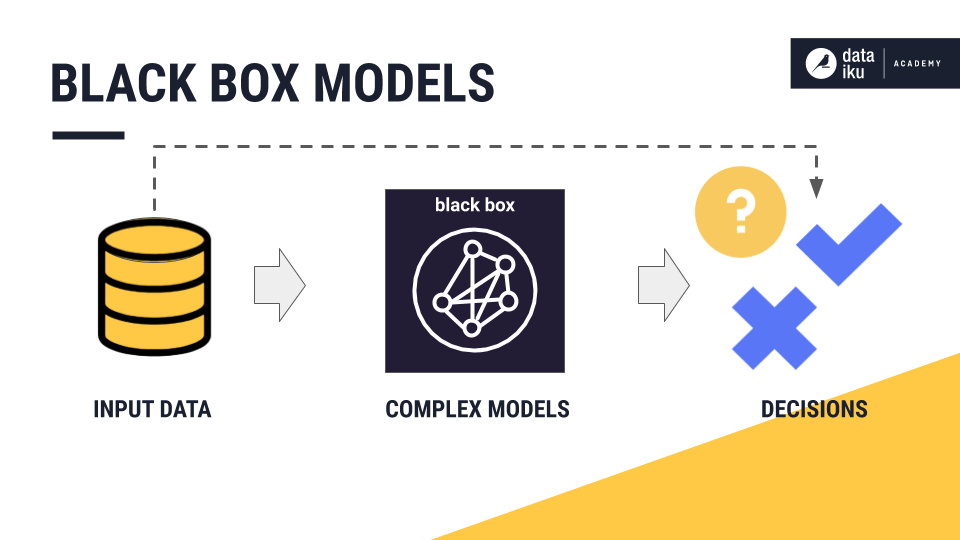

More complex models, such as ensembles and deep learning models, can be more accurate but are more difficult to understand. These are known as black box models. For them, the relationship between the training data and the model outcome is less explainable.

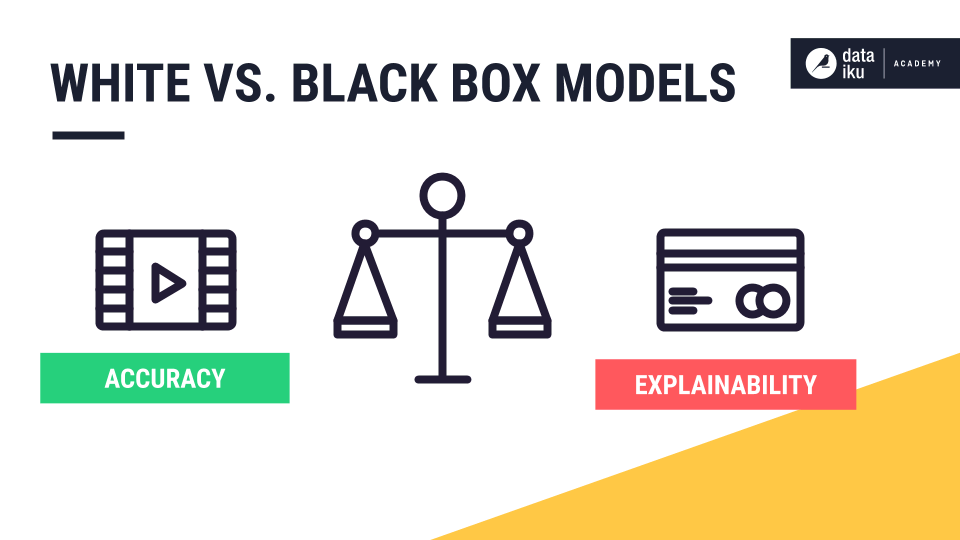

In some situations, we may be comfortable with this tradeoff between accuracy and explainability. For example, we might not care why an algorithm recommends a particular movie if it is a good match, but we do care why someone has been rejected for a credit card application.

In addition to accuracy and explainability, we want models that are free from bias. A quick scan of the news headlines turns up plenty of examples of why we might worry about bias in artificial intelligence. Bias, of course, can originate long before the training and testing stages: the data used for model training can carry its own set of biases.

In this section, we’ll focus on specific tools available in the summary report of a visual prediction model that aim to help us build trustworthy, transparent, and bias-free models. These tools include the following:

- Partial dependence plots
- Subpopulation analysis
- Individual explanations
- Interactive scoring
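To make the first of these tools more concrete, here is a minimal sketch of the idea behind a partial dependence plot: force one feature to a fixed value in every row, average the model's predictions, and repeat across a grid of values. It is a generic illustration, not the implementation used by the summary report. The `toy_model` function, its two features (`age` and `income`), and the sample rows are all hypothetical stand-ins for a trained black box model and its data.

```python
from statistics import mean


def toy_model(row):
    """Hypothetical stand-in for a trained black box model (formula invented)."""
    age, income = row
    return 0.5 * age + 2.0 * income + 0.25 * age * income


def partial_dependence(predict, rows, feature_index, grid):
    """Return (value, average prediction) pairs for one feature.

    For each grid value, the chosen feature is overwritten in every row while
    the other features keep their observed values; predictions are then averaged.
    """
    curve = []
    for value in grid:
        modified = [
            tuple(value if i == feature_index else x for i, x in enumerate(row))
            for row in rows
        ]
        predictions = [predict(r) for r in modified]
        curve.append((value, mean(predictions)))
    return curve


# Hypothetical sample data: (age, income) pairs.
rows = [(20, 1.0), (30, 2.0), (40, 3.0)]

# Partial dependence of the prediction on the first feature (age).
curve = partial_dependence(toy_model, rows, feature_index=0, grid=[20, 30, 40])
for value, average_prediction in curve:
    print(value, average_prediction)
```

Plotting the resulting pairs gives the partial dependence curve for that feature. Because only the averaged predictions are used, the same approach works for any model that exposes a prediction function, however opaque its internals.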

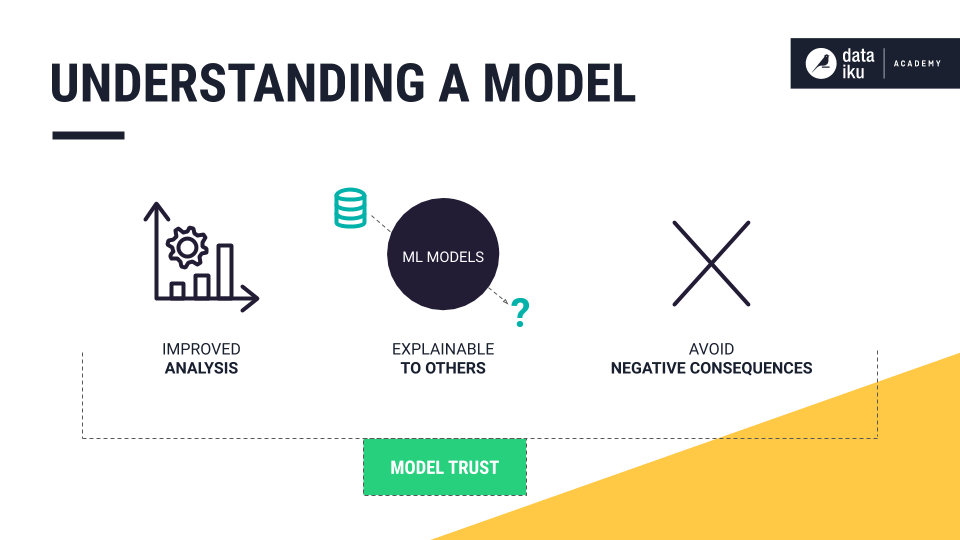

The ability to understand how a model makes choices is important for three reasons:

- It gives us the opportunity to further refine and improve our analysis.
- It makes it easier to explain to non-practitioners how the model uses the data to make decisions.
- It helps practitioners avoid negative or unforeseen consequences of their models.

These three factors of explainable AI give us more confidence in our model development and deployment.