Automation Best Practices

The Production Concepts course introduced many important MLOps concepts. Underpinning all of these concepts are Dataiku features introduced in the Automation course of the Advanced Designer learning path.

Review of Automation Features

Before any Dataiku project can begin its journey into production (either deploying a bundle to an Automation node, or an API service to an API node), a robust set of metrics, checks, scenarios, triggers, and reporters should be established in the project on the Design node.

Infrastructure aside, there is no substitute for a set of well-designed scenarios that support the project’s objectives. Mastering these automation features, and diligently testing that they work as expected, is an essential task while preparing for production.

Consider some of the most common production tasks:

retraining models or refreshing dashboards and reports in a batch manner;

scoring new data in real-time; and

monitoring the quality of incoming data and the performance of models.

Executing these tasks requires being able to:

define the correct set of metrics on objects like datasets, models, and managed folders;

incorporate those key metrics into simple checks;

use those checks in a scenario to pursue a wide variety of objectives, such as data quality monitoring;

define the correct set of triggers to launch scenarios at the desired timing;

and, all the while, alert stakeholders to what is happening using reporters.

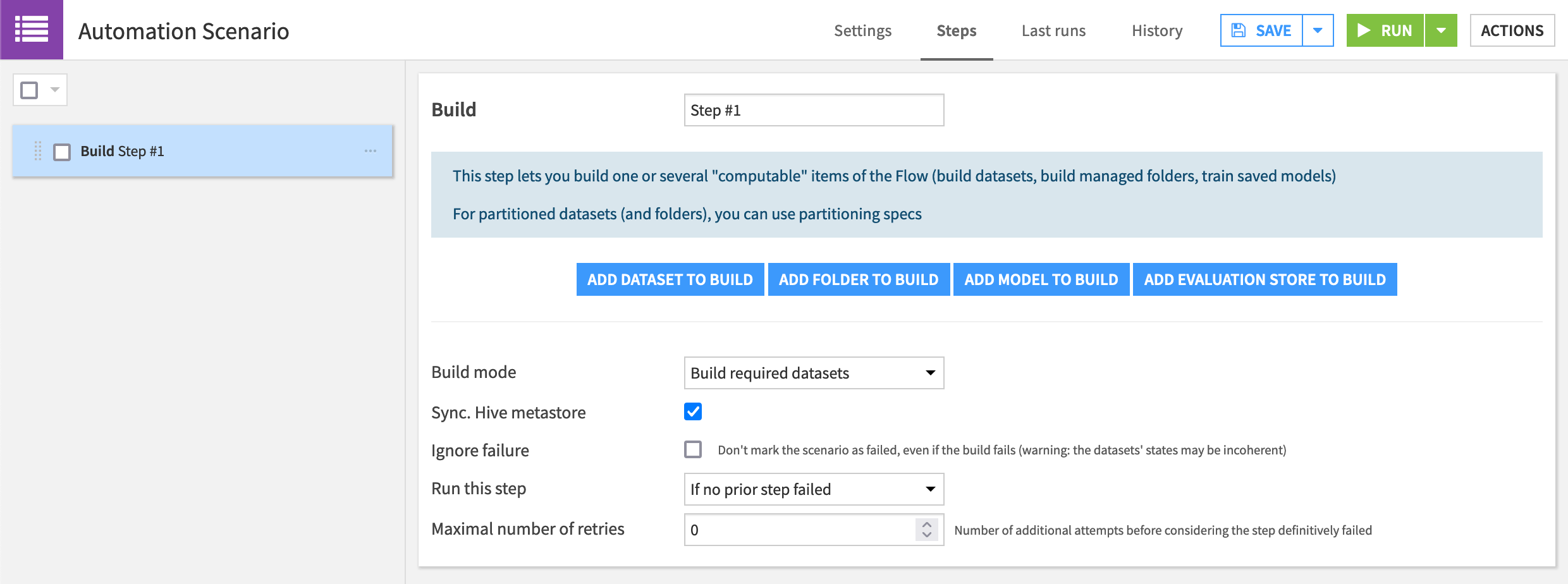
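The chain described above (metrics feed checks, checks gate a scenario, and a reporter announces the outcome) can be illustrated with a minimal sketch in plain Python. This is a conceptual model only, not the Dataiku API: `compute_metrics`, `Check`, `run_scenario`, and the `record_count` and `missing_ratio` metric names are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional

# Conceptual stand-ins only: none of these names are Dataiku classes or API calls.
Row = Dict[str, Optional[float]]


def compute_metrics(rows: List[Row], column: str) -> Dict[str, float]:
    """Compute two simple dataset metrics: row count and share of missing values."""
    total = len(rows)
    missing = sum(1 for row in rows if row.get(column) is None)
    return {
        "record_count": float(total),
        "missing_ratio": missing / total if total else 0.0,
    }


@dataclass
class Check:
    """A simple check: a named condition evaluated against one metric."""
    name: str
    metric: str
    passes: Callable[[float], bool]

    def run(self, metrics: Dict[str, float]) -> str:
        return "OK" if self.passes(metrics[self.metric]) else "ERROR"


def run_scenario(
    rows: List[Row],
    column: str,
    checks: List[Check],
    report: Callable[[str], None],
) -> str:
    """Compute metrics, run every check, then send one message to the reporter."""
    metrics = compute_metrics(rows, column)
    results = {check.name: check.run(metrics) for check in checks}
    outcome = "SUCCESS" if all(r == "OK" for r in results.values()) else "FAILED"
    report(f"Scenario {outcome}: {results}")
    return outcome


# Usage: one of four values is missing, so the 10% missing-value check fails.
rows = [{"amount": 10.0}, {"amount": None}, {"amount": 7.5}, {"amount": 3.0}]
checks = [
    Check("has_rows", "record_count", lambda value: value > 0),
    Check("few_missing", "missing_ratio", lambda value: value <= 0.1),
]
messages: List[str] = []
outcome = run_scenario(rows, "amount", checks, messages.append)
```

Here the reporter is just `messages.append`; in a real project, the same role is played by a reporter configured on the scenario, and the trigger decides when the run happens.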

Together, these features allow for considerable complexity. For example, consider the most common scenario step: Build/Train. You can use this step to build datasets, managed folders, models, and model evaluation stores.

However, you can configure this one step in many different ways that can dramatically alter the step’s behavior. In this step, you can rebuild objects according to different build modes. You can have the step ignore failures or run only based on the success of other steps. You can even have the step retry a specified number of times before ultimately failing.

Scenario Steps

Moreover, scenario steps are not limited to building datasets and training models. As a reminder, there are steps for:

executing code and macros,

creating and destroying clusters,

creating and updating project or API deployments, and

running or stopping other scenarios.

On top of this, all of these elements are highly customizable, for example with Python or SQL code. And of course, scenarios can also be entirely Python-based.

Note

The full catalog of scenario steps is covered in the product documentation.

What’s Next?

With this in mind, before advancing in your MLOps journey, be sure you have mastered the basics of automation within Dataiku!

Note

The product documentation records all of the automation functionality available with scenarios, metrics, and checks.