Hands-On Tutorial: Model Fairness Report

Evaluating the fairness of an ML model is challenging because no single metric suits every situation. The Model Fairness Report plugin provides a dashboard of key fairness metrics so you can compare how models treat members of different groups and identify problem areas to address. Learn how to use the Model Fairness Report with this hands-on exercise.
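To see why no single metric fits every situation, consider a toy example in which two common fairness criteria disagree about the same set of predictions. This is a plain-Python illustration under stated assumptions, not the plugin's code: the data, the group labels, and the helper names (`rate`, `positive_rate`, `true_positive_rate`) are all hypothetical.

```python
# Hypothetical toy data (invented for illustration): actual outcome,
# model prediction (1 = positive), and group membership for eight people.
y_true = [1, 1, 0, 0, 1, 0, 0, 0]
y_pred = [1, 0, 1, 0, 1, 1, 0, 0]
group = ["F", "F", "F", "F", "M", "M", "M", "M"]


def rate(values):
    """Fraction of 1s in a list, or None if the list is empty."""
    return sum(values) / len(values) if values else None


def positive_rate(y_pred, group, g):
    # Demographic parity compares this: share of group g predicted positive.
    return rate([p for p, m in zip(y_pred, group) if m == g])


def true_positive_rate(y_true, y_pred, group, g):
    # Equal opportunity compares this: share of group g's actual positives
    # that the model predicts as positive.
    return rate([p for t, p, m in zip(y_true, y_pred, group) if m == g and t == 1])


for g in ("F", "M"):
    print(g, positive_rate(y_pred, group, g), true_positive_rate(y_true, y_pred, group, g))
# F 0.5 0.5
# M 0.5 1.0
```

Both groups receive positive predictions at the same rate (0.5), so demographic parity holds. Yet the model catches only half of the actual positives in group F versus all of them in group M, so equal opportunity does not hold. Which criterion matters more depends on the use case.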

Prerequisites

You will need:

A Dataiku DSS instance with the Model Fairness Report plugin installed.

A Python 3 code environment on that instance for building your models. The Model Fairness Report plugin requires models built with Python 3.
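If you are unsure which Python version a code environment uses, one quick check is to run a short snippet in a Python notebook that uses that environment. This is generic Python, not a Dataiku-specific API:

```python
import sys

# Raises AssertionError if this interpreter is not Python 3.
assert sys.version_info.major == 3, f"Python 3 required, found {sys.version}"

# Print the interpreter version, e.g. "Python 3.x.y".
print("Python", sys.version.split()[0])
```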

Getting Started

You will need a Dataiku DSS project with a dataset that you can use to create a predictive model. We will use the starter project from the Machine Learning Basics course as an example. There are two ways you can import this project:

From the Dataiku DSS homepage, click +New Project > DSS Tutorials > ML Practitioner > Machine Learning Basics (Tutorial).

Download the zip archive for your version of Dataiku DSS, then from the Dataiku DSS homepage, click +New Project > Import project and choose the zip archive you downloaded.

You should now be on the project’s homepage.

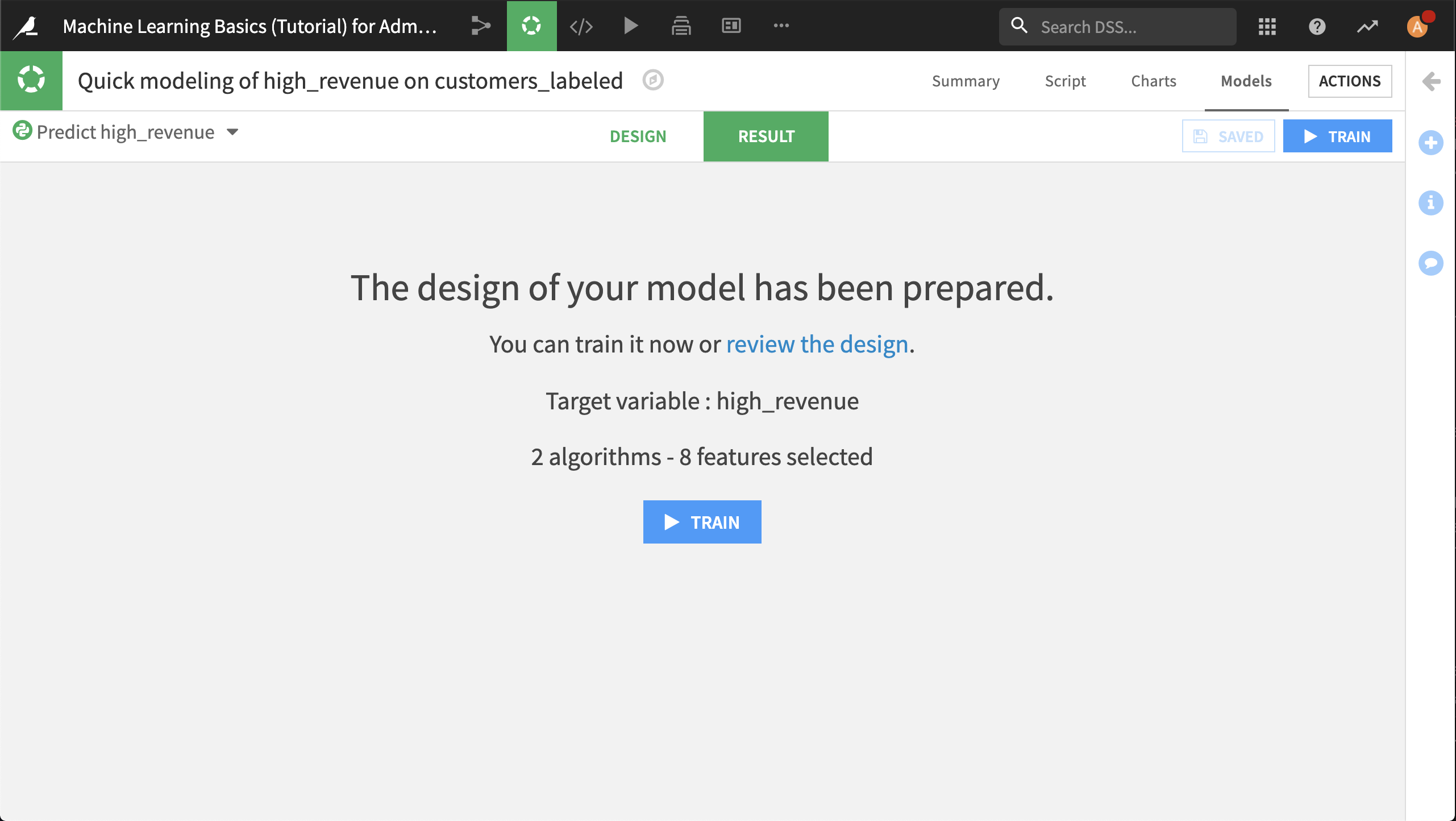

Go to the project’s Flow and select the customers_labeled dataset.

In the right panel, click Lab > AutoML Prediction.

In the dialog that opens, choose to create a prediction model on the column high_revenue.

Click Create to create a new prediction modeling task.

Your quick model is now ready to train.

Creating the Report

On the Runtime environment panel of the Design tab, make sure the selected code environment is a Python 3 environment.

Click Train.

When training is complete, deploy a model to the Flow.

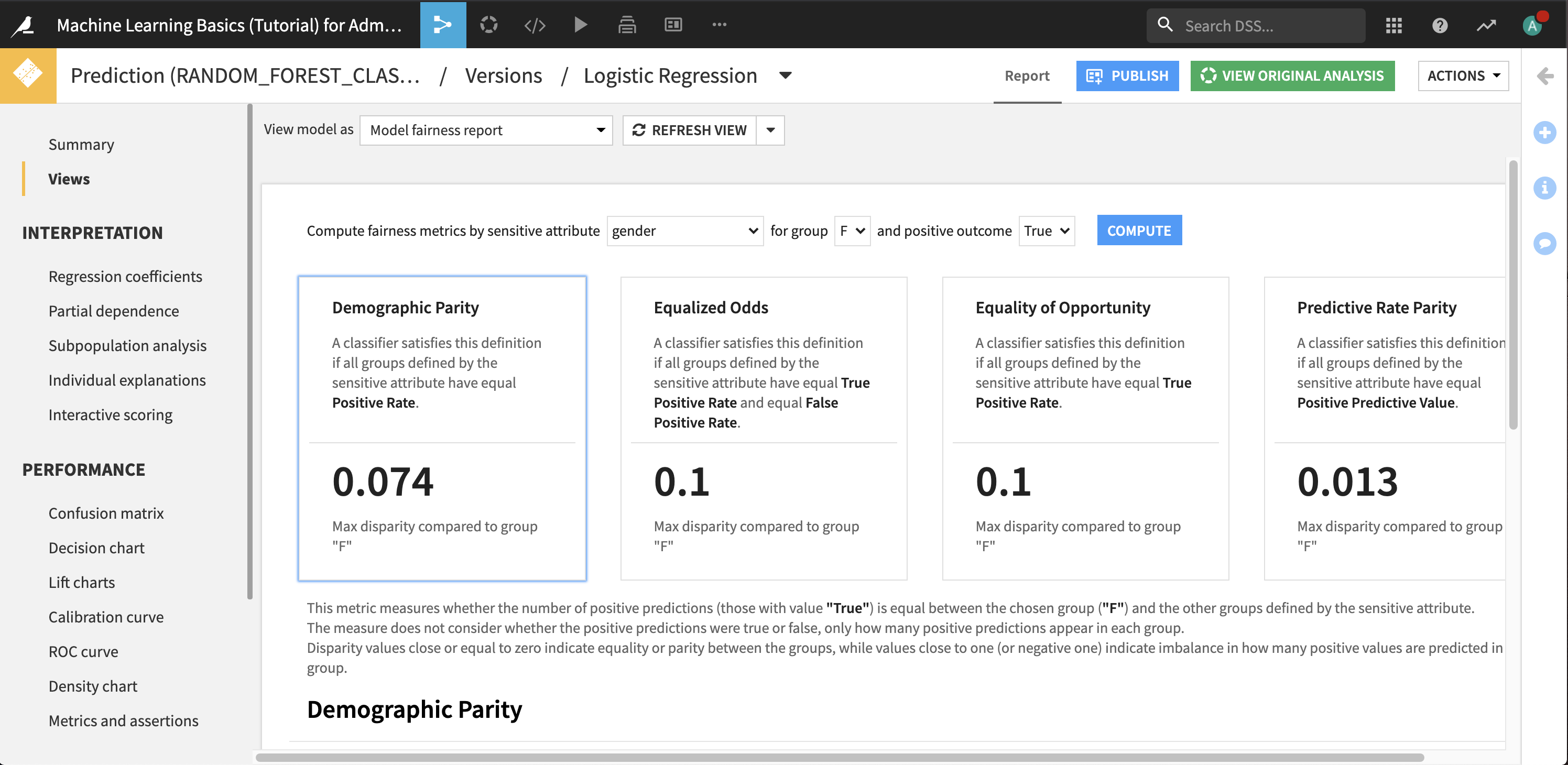

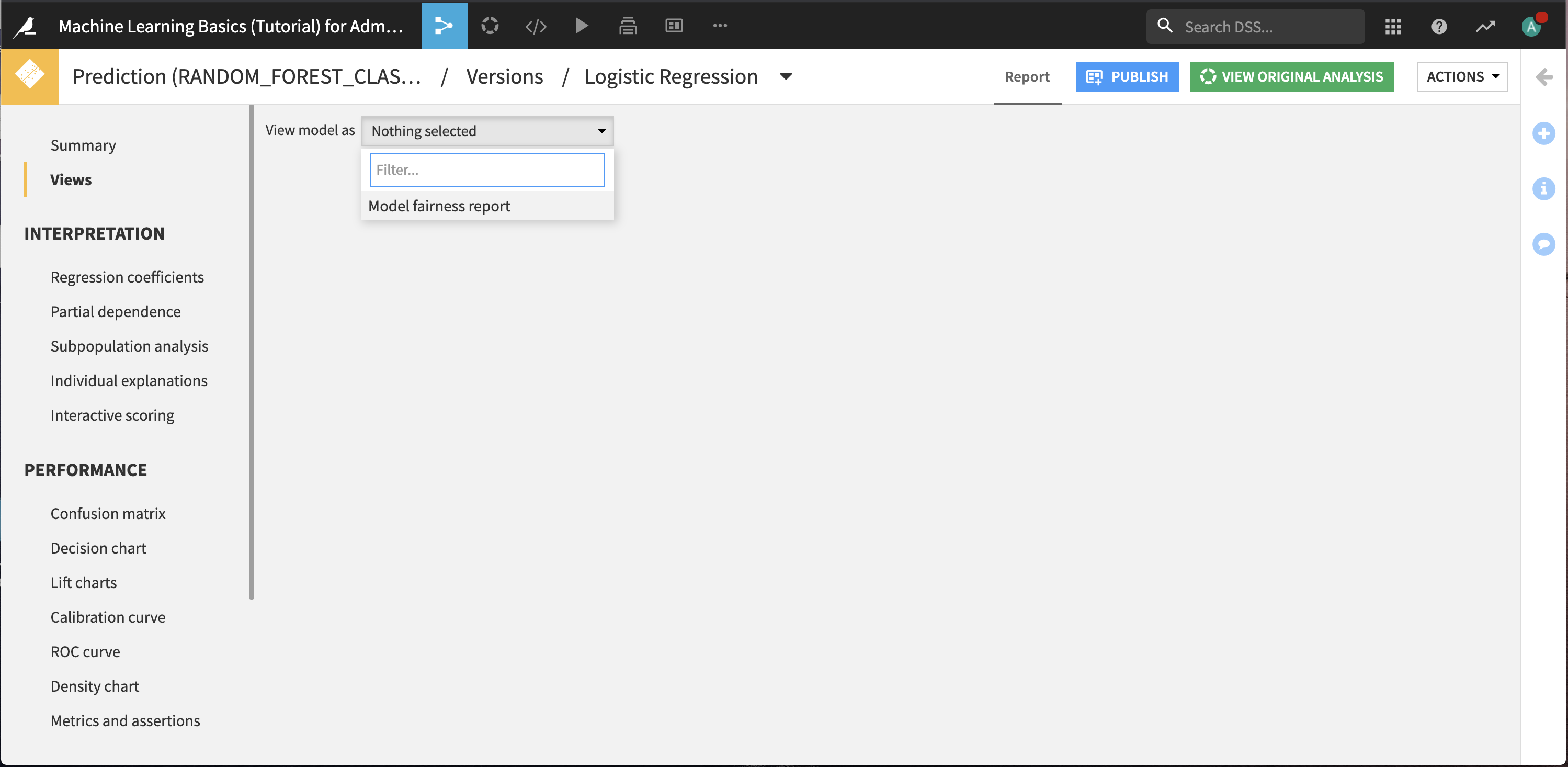

In the Flow, open the deployed model, then go to the Views panel of its active version's page.

Choose the Model fairness report view.

Suppose we are concerned about whether the model fairly identifies women as high-revenue customers. To check this:

Choose to compute metrics by the gender column for group F, with True as the positive outcome.
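The plugin computes its metrics for you. To illustrate what per-group rates like these involve, here is a minimal plain-Python sketch under stated assumptions: it takes actual and predicted values of high_revenue plus a gender column, treats rows with gender F as the group of interest and all other rows as the comparison group, and counts the value passed as `positive` (True here) as the positive outcome. The `GroupRates` container, the `group_rates` function, and the toy rows are hypothetical, and the metric definitions are common textbook ones, not necessarily the exact formulas the plugin uses.

```python
from dataclasses import dataclass


@dataclass
class GroupRates:
    positive_rate: float        # share predicted positive (demographic parity)
    true_positive_rate: float   # share of actual positives predicted positive (equal opportunity)
    false_positive_rate: float  # share of actual negatives predicted positive (with TPR: equalized odds)
    precision: float            # share of predicted positives that are actual positives (predictive rate parity)


def _share(flags):
    # Fraction of True values; NaN when there are no rows to average over.
    return sum(flags) / len(flags) if flags else float("nan")


def group_rates(y_true, y_pred, groups, in_group, positive=True):
    """Compute rates over rows whose group value satisfies in_group."""
    # Each row becomes (is_actual_positive, is_predicted_positive).
    rows = [(t == positive, p == positive)
            for t, p, g in zip(y_true, y_pred, groups) if in_group(g)]
    return GroupRates(
        positive_rate=_share([p for _, p in rows]),
        true_positive_rate=_share([p for t, p in rows if t]),
        false_positive_rate=_share([p for t, p in rows if not t]),
        precision=_share([t for t, p in rows if p]),
    )


# Hypothetical scored rows: actual high_revenue, predicted high_revenue, gender.
y_true = [True, False, True, False, True, False, False, True]
y_pred = [True, False, False, True, True, False, True, True]
gender = ["F", "F", "F", "F", "M", "M", "M", "M"]

women = group_rates(y_true, y_pred, gender, lambda g: g == "F")
others = group_rates(y_true, y_pred, gender, lambda g: g != "F")
print(women)
print(others)
```

If the target column stores its values as strings, pass `positive="True"` instead of the boolean.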

Click Compute.

The report is displayed, with a detailed description of how to interpret each fairness metric. In this case, there does not appear to be a disparity between how the model identifies men and women as high-revenue customers.
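As a rough illustration of what judging a "disparity" can involve, here is a minimal sketch that compares metric values for group F against a reference group by taking differences. The metric values below are hypothetical, not the results of this tutorial, and the 0.1 tolerance is an arbitrary assumption, not a standard threshold or a plugin setting. The `disparities` function name is also hypothetical.

```python
def disparities(group_metrics, reference_metrics, tolerance=0.1):
    """Return {metric: (difference, flagged)} for a group versus a reference.

    difference = group value - reference value, rounded to 4 decimals;
    flagged is True when the absolute difference exceeds tolerance.
    The 0.1 default is an arbitrary illustrative threshold.
    """
    result = {}
    for name, value in group_metrics.items():
        diff = value - reference_metrics[name]
        result[name] = (round(diff, 4), abs(diff) > tolerance)
    return result


# Hypothetical metric values for women (F) and for everyone else.
women = {"positive_rate": 0.42, "true_positive_rate": 0.80, "precision": 0.71}
others = {"positive_rate": 0.45, "true_positive_rate": 0.83, "precision": 0.70}

for name, (diff, flagged) in disparities(women, others).items():
    print(f"{name}: difference {diff:+.2f}{'  <-- check' if flagged else ''}")
```

Differences are one convention; ratios between the two groups' values are another common way to express the same comparison.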