Python in Dataiku DSS¶

Dataiku allows you to seamlessly integrate Python code and visual recipes in a Flow.

In this tutorial, we will show you how to:

Integrate Python as part of your data pipeline through code recipes.

Use Jupyter notebooks to prototype and test code.

Transfer a Dataiku dataset into a pandas dataframe and back, using the

dataikuPython package.

We will work with the fictional retailer Haiku T-Shirt’s data.

Prerequisites¶

This tutorial assumes that you are familiar with the Dataiku DSS Basics courses.

Create Your Project¶

The first step is to create a new Dataiku DSS Project.

From the homepage, click +New Project > DSS Tutorials > Developer > Code Notebooks (Tutorial).

From the project homepage, click Go to Flow.

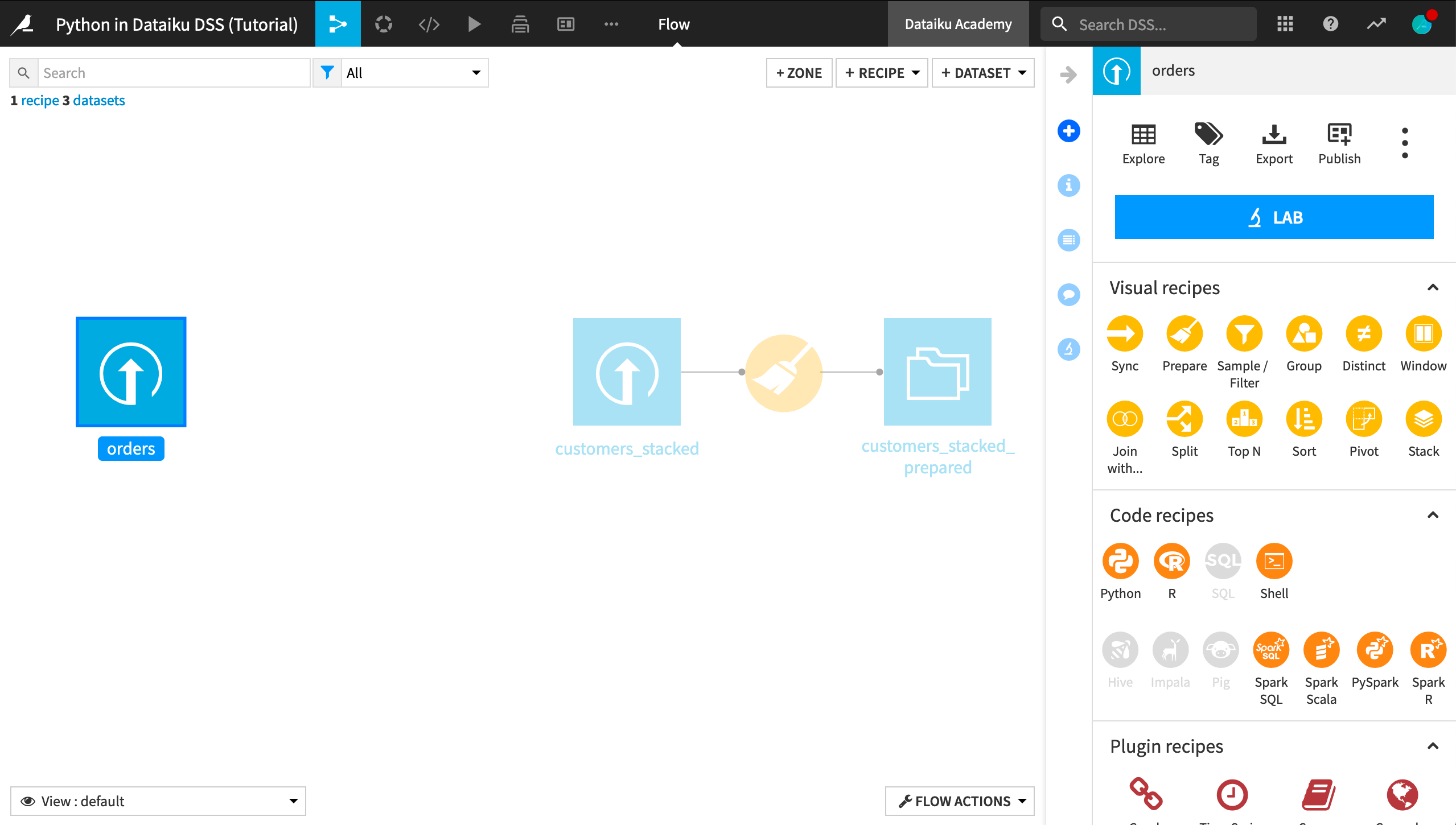

In the Flow, you see the Haiku T-Shirt orders and customers_stacked data uploaded into Dataiku DSS. Further, the customers_stacked data has been prepared with a visual Prepare recipe.

Your First Python Recipe¶

Our current goal is to group past orders by customer, aggregating their past interactions. In the Basics courses, we accomplished this with a visual Group recipe, but it can also be easily accomplished with Python code.

With the orders dataset selected, choose Actions > Code Recipes > Python.

Add a new output dataset named

orders_by_customer.Click Create Recipe.

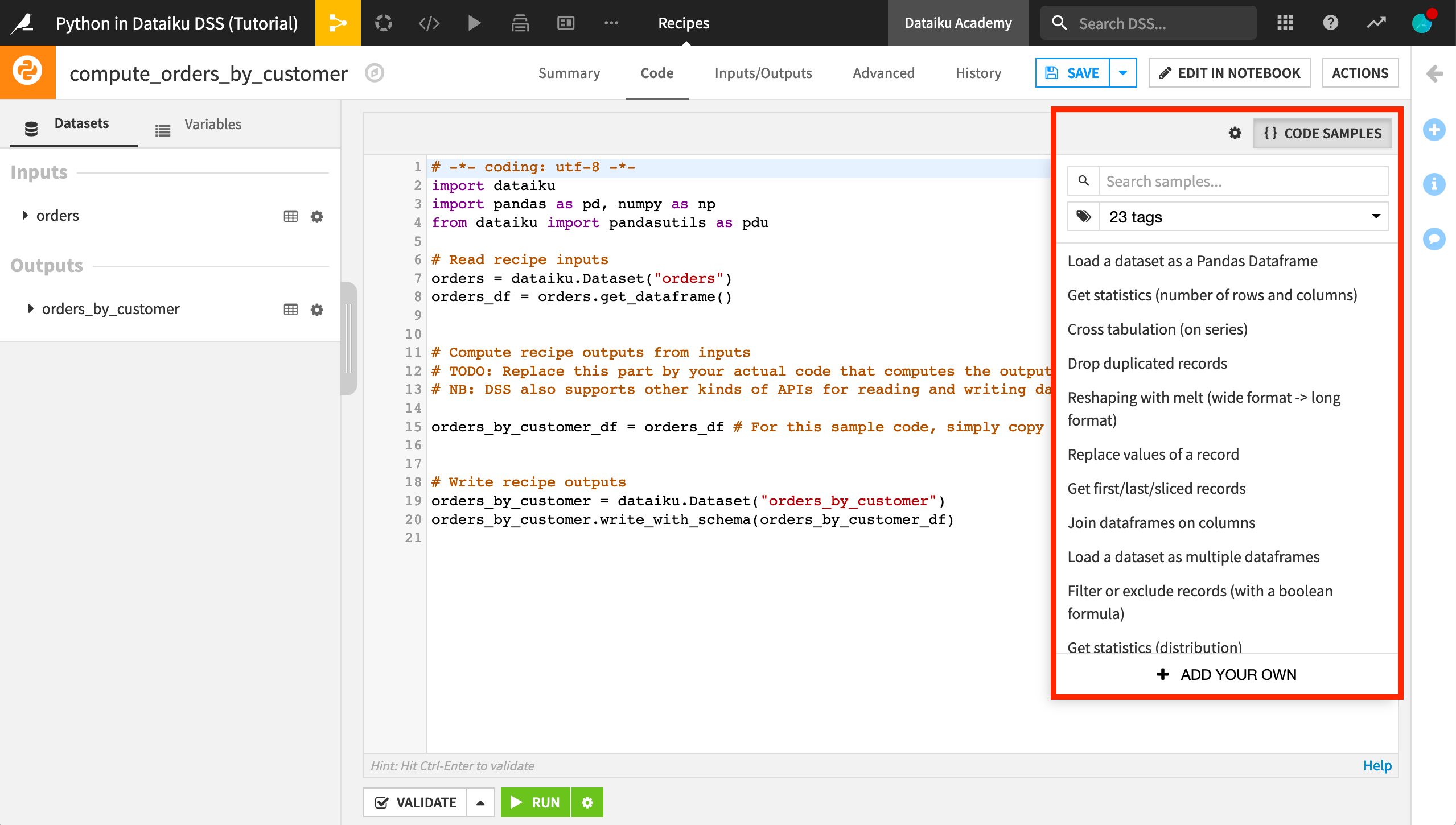

The recipe editor is now populated with the following code, which:

reads the orders dataset into a pandas dataframe named orders_df,

passes it unchanged to a new dataframe named orders_by_customer_df, and

writes that new dataframe out to the orders_by_customer dataset.

# -*- coding: utf-8 -*-

import dataiku

import pandas as pd, numpy as np

from dataiku import pandasutils as pdu

# Read recipe inputs

orders = dataiku.Dataset("orders")

orders_df = orders.get_dataframe()

# Compute recipe outputs from inputs

# TODO: Replace this part by your actual code that computes the output, as a Pandas dataframe

# NB: DSS also supports other kinds of APIs for reading and writing data. Please see doc.

orders_by_customer_df = orders_df # For this sample code, simply copy input to output

# Write recipe outputs

orders_by_customer = dataiku.Dataset("orders_by_customer")

orders_by_customer.write_with_schema(orders_by_customer_df)

As the commented TODO says, we’ll need to provide the code that aggregates the orders by customer. Dataiku DSS provides a number of code samples to help get us started.

In this case, replace the line that defines

orders_by_customer_dfwith the following code.

orders_by_customer_df = orders_df.assign(total=orders_df.tshirt_price*orders_df.tshirt_quantity

).groupby(by="customer_id"

).agg({"pages_visited":"mean",

"total":"sum"})

This creates a dataframe with rows grouped by customer_id. For each customer, we’ve computed the average number of pages on the Haiku T-shirt website visited by the customer during orders, and the sum total of the value of orders made by the customer, where the value of each order is the price of each t-shirt times the number of t-shirts purchased.

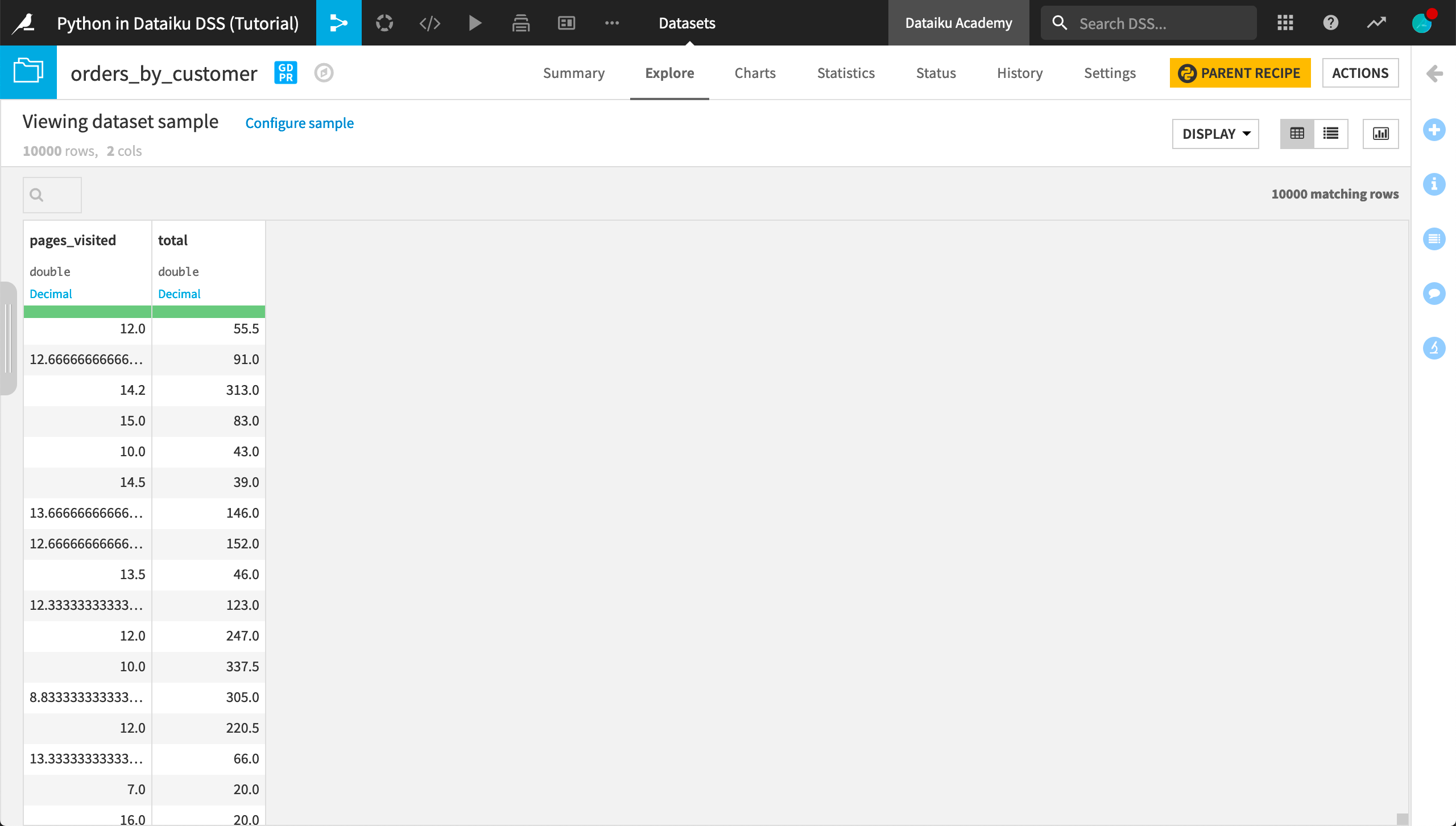

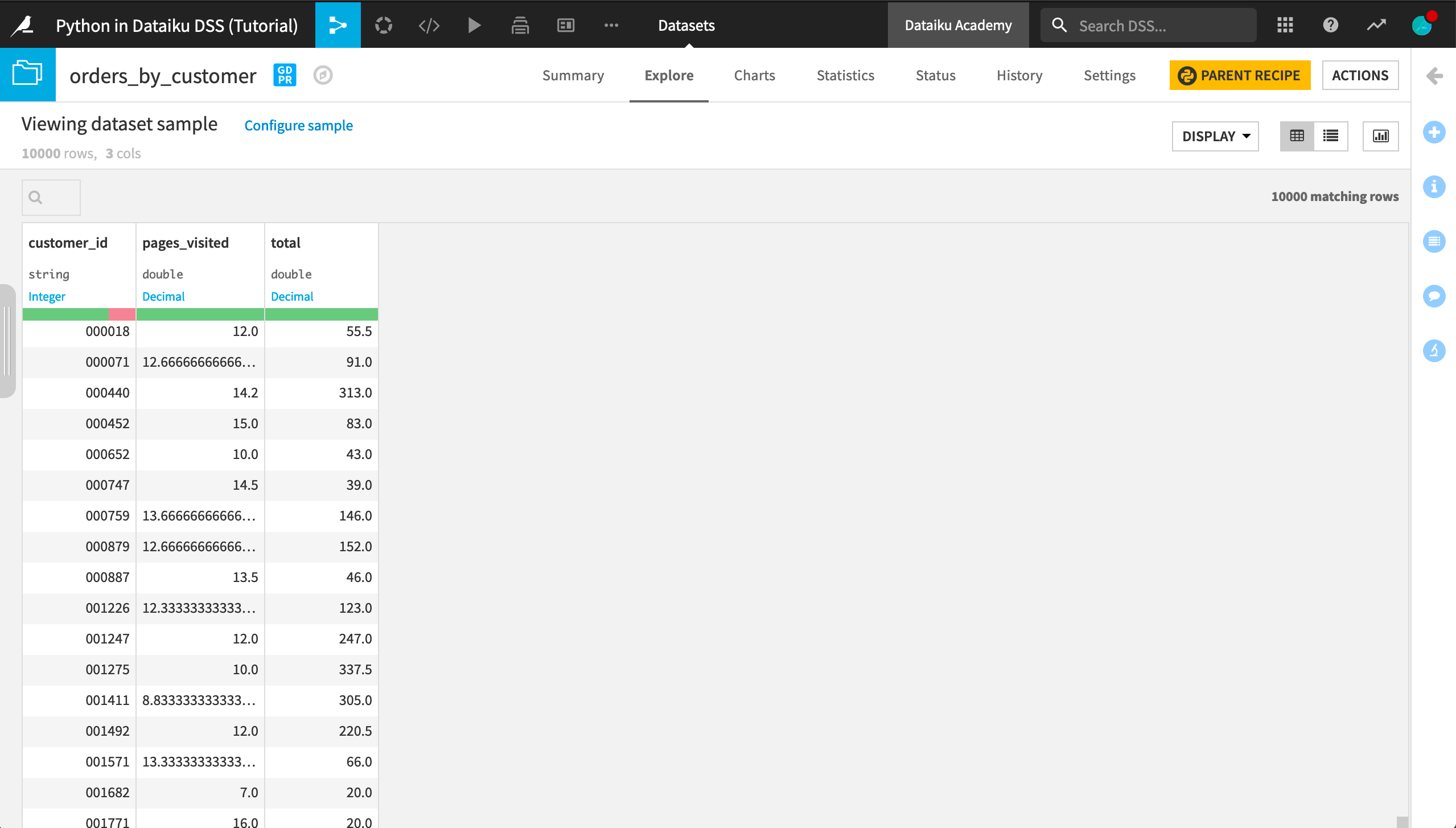

Now run the recipe, and when it completes, explore the output dataset.

Interestingly, the output dataset does not contain the customer_id column, but we’ll need it later in order to join the customers and grouped orders data.

To diagnose the issue, in the orders_by_customer dataset click Parent Recipe to reopen the Python recipe.

Then click Edit in Notebook.

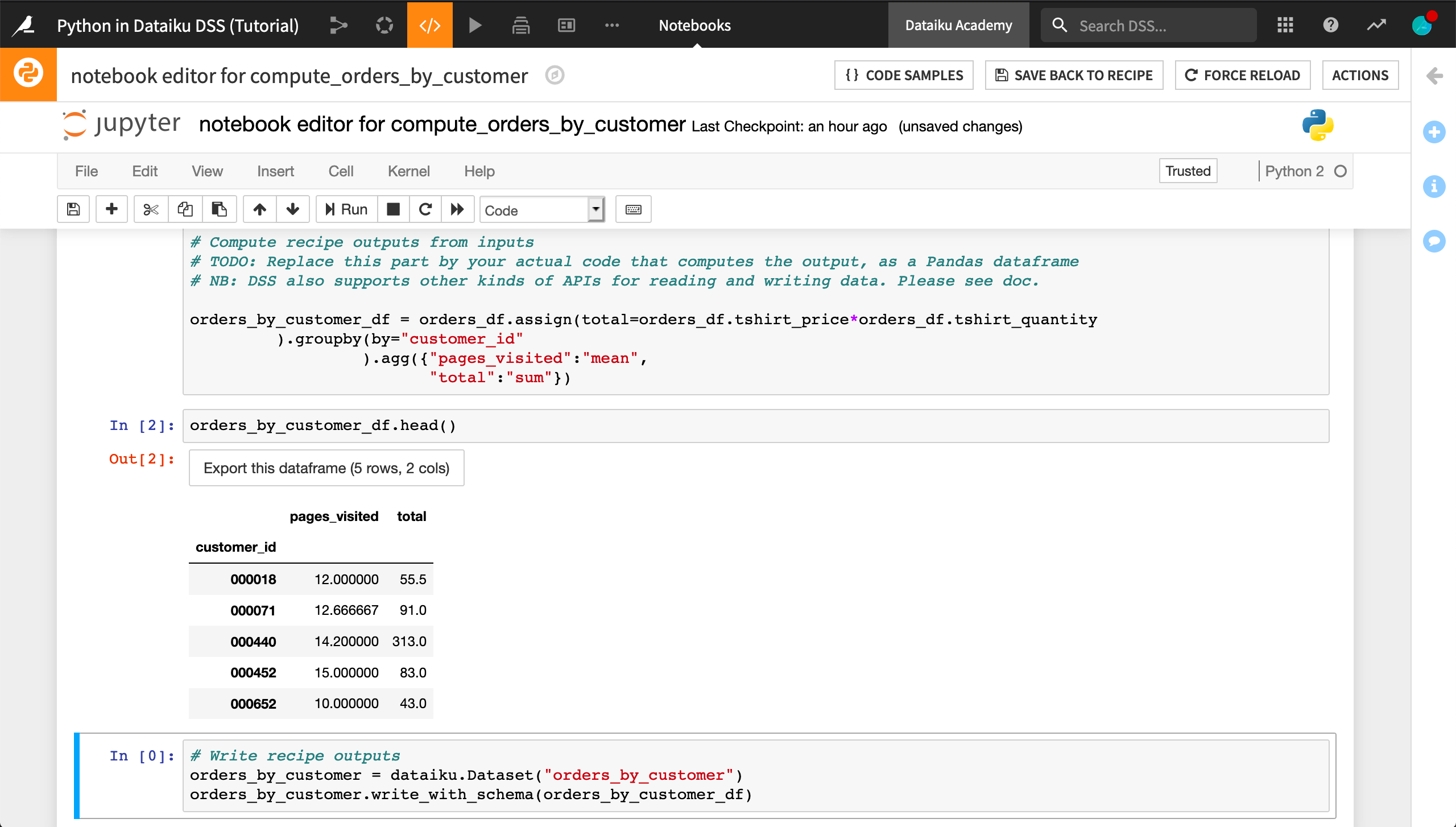

This opens a Jupyter notebook with the recipe code, where we can interactively test it. The recipe code begins in a single cell.

Split the cell so that the code to write recipe outputs is in a separate cell.

Add a cell between the two existing cells and put the following code in it.

orders_by_customer_df.head()

Run the first two cells.

The output shows that the orders_by_customer dataframe has the customer_id information; however, the dataframe has a hierarchical index.

In order to flatten the index, add

.reset_index()to the code that defines the dataframe so that it looks like the following.

orders_by_customer_df = orders_df.assign(total=orders_df.tshirt_price*orders_df.tshirt_quantity

).groupby(by="customer_id"

).agg({"pages_visited":"mean",

"total":"sum"}).reset_index()

Re-run the first two cells to see how the dataframe has changed.

Click Save back to recipe and run the recipe again.

Now the output dataset contains a customer_id column.

Explore with a Python Notebook¶

Previously, we started with a Python recipe because we had a specific goal of transforming the orders dataset. Alternatively, we can explore a dataset using a notebook.

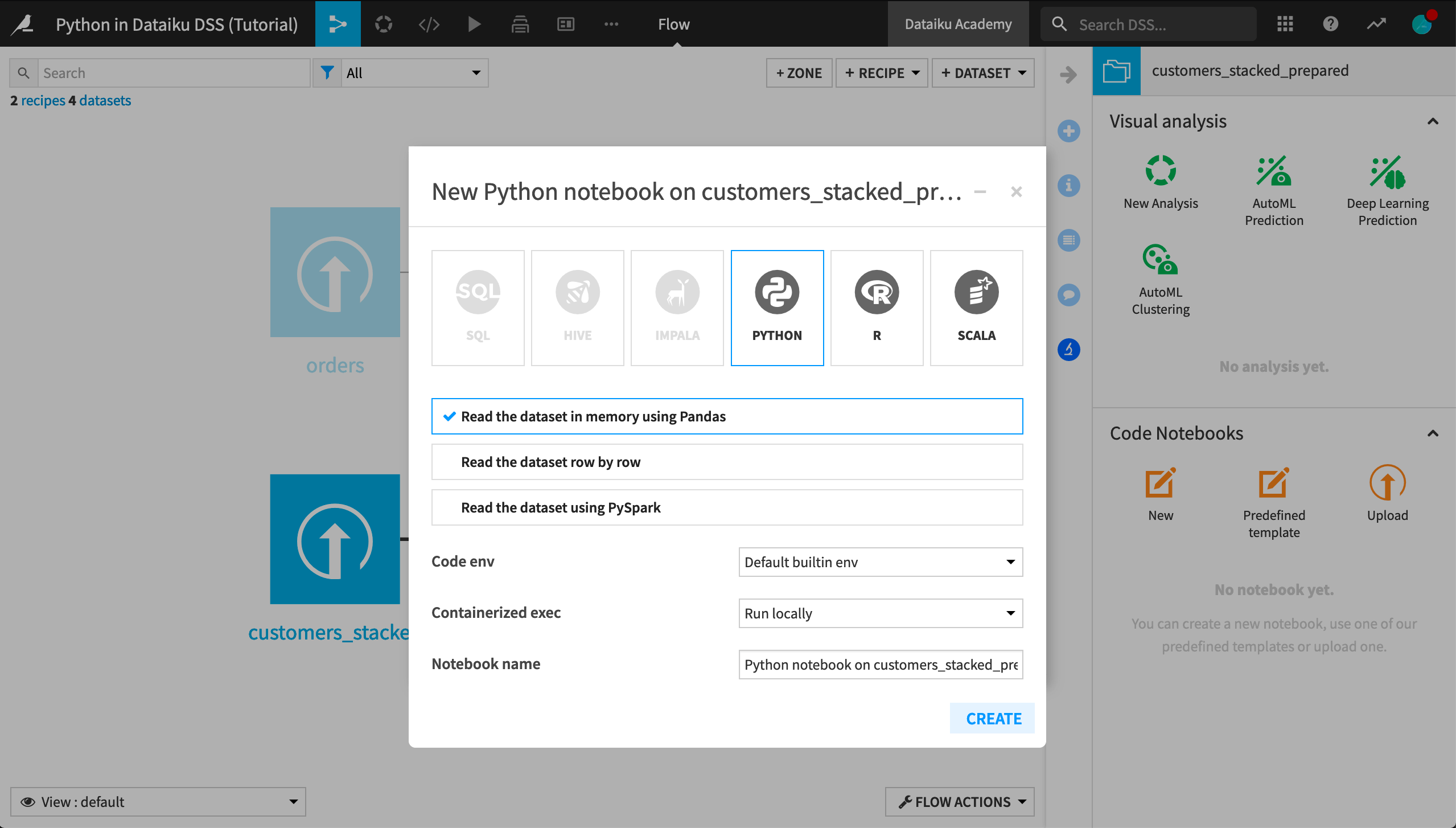

In the Flow, select the customers_stacked_prepared dataset.

From the Actions tab, click Lab > New Code Notebook > Python.

Read the dataset in memory using Pandas.

Click Create.

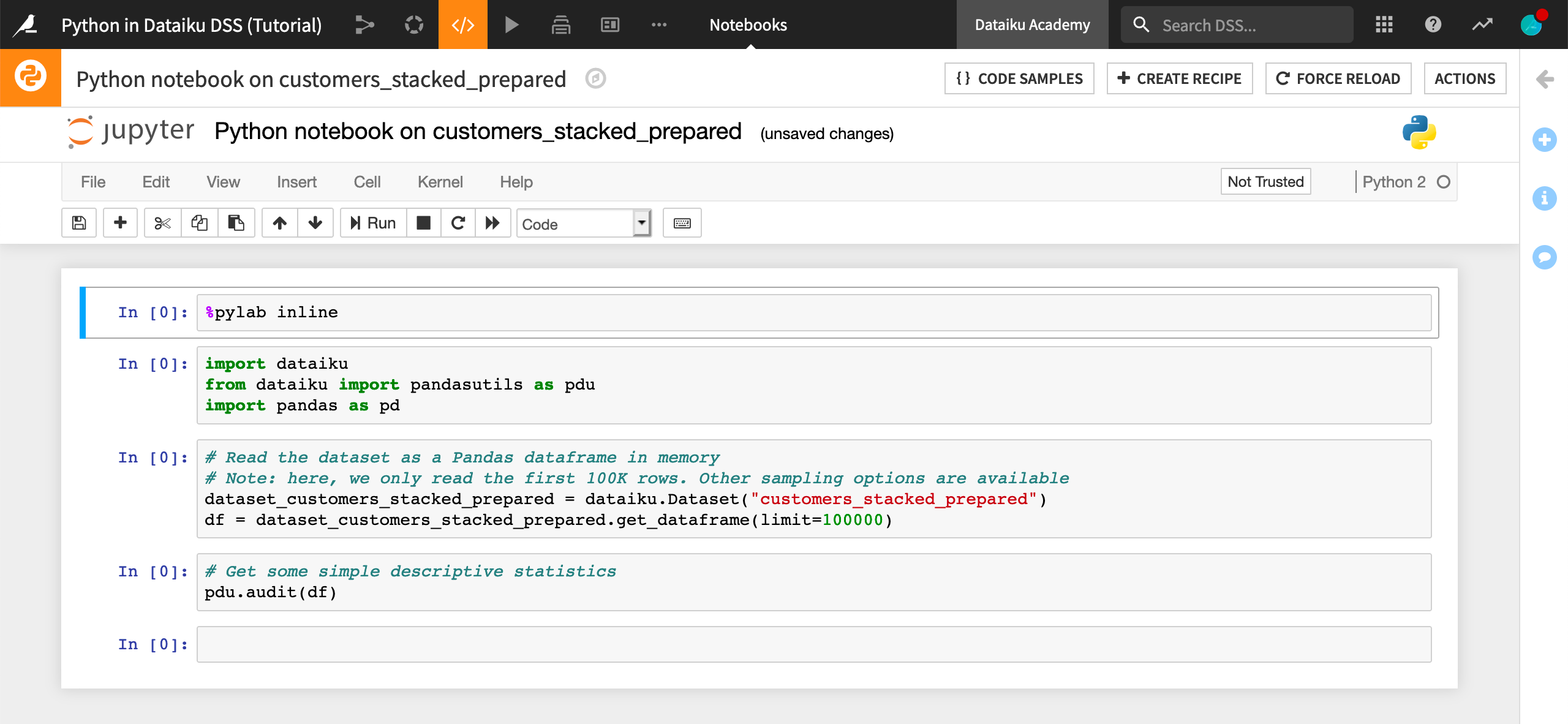

The notebook is automatically populated with four cells.

The first two cells import various useful packages. The first cell uses the built-in magics to import the numpy and matplotlib packages.

%pylab inline

import dataiku

from dataiku import pandasutils as pdu

import pandas as pd

The third cell reads the customers_stacked_prepared dataset into a pandas dataframe named df.

# Read the dataset as a Pandas dataframe in memory

# Note: here, we only read the first 100K rows. Other sampling options are available

dataset_customers_stacked_prepared = dataiku.Dataset("customers_stacked_prepared")

df = dataset_customers_stacked_prepared.get_dataframe(limit=100000)

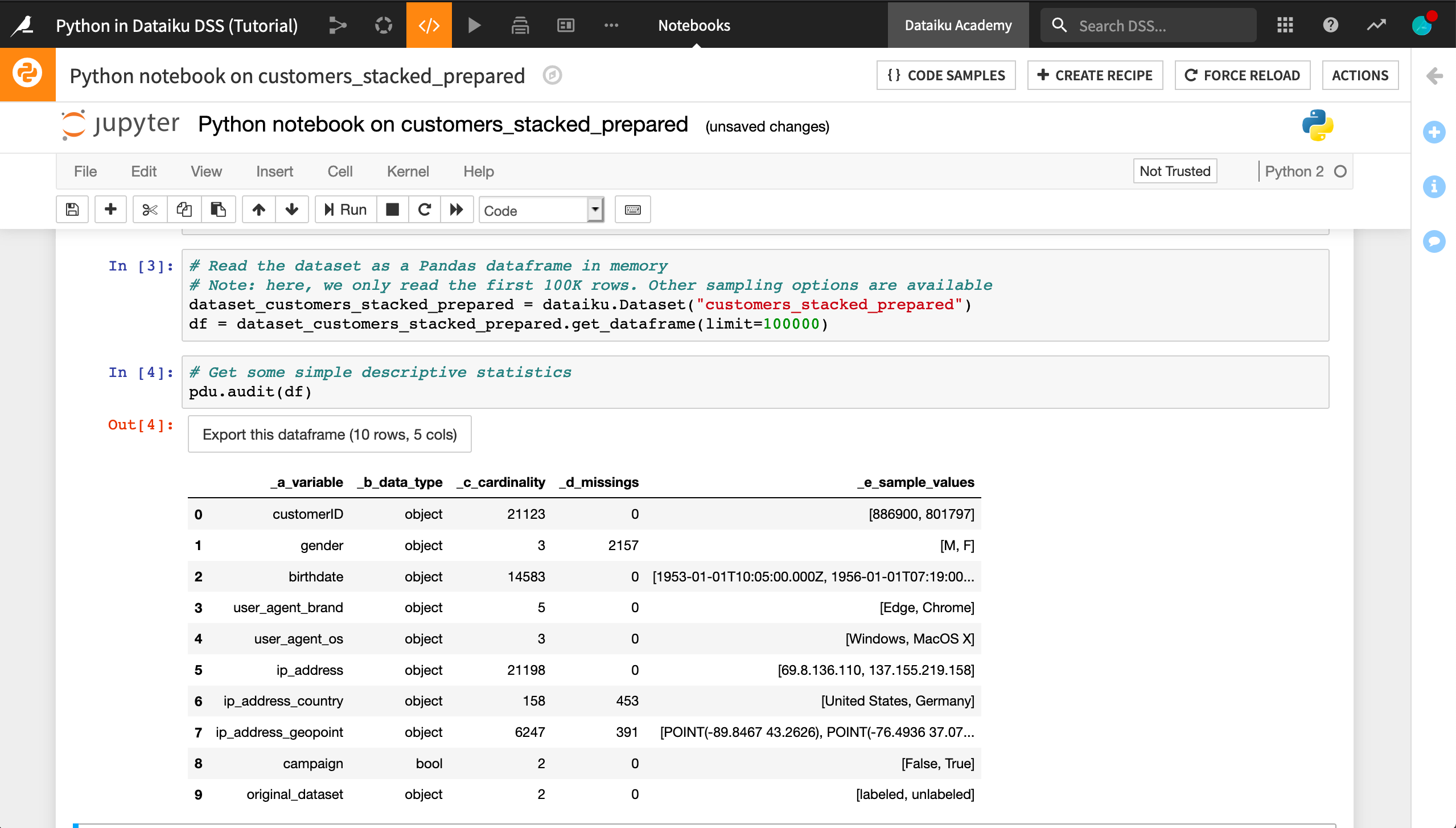

The fourth cell audits the columns in the dataframe to provide information on the type of data in the column and the number of unique and missing values.

# Get some simple descriptive statistics

pdu.audit(df)

Run each of the cells in order to produce the output.

Note

The notebook also now has the dataframe df ready in memory.

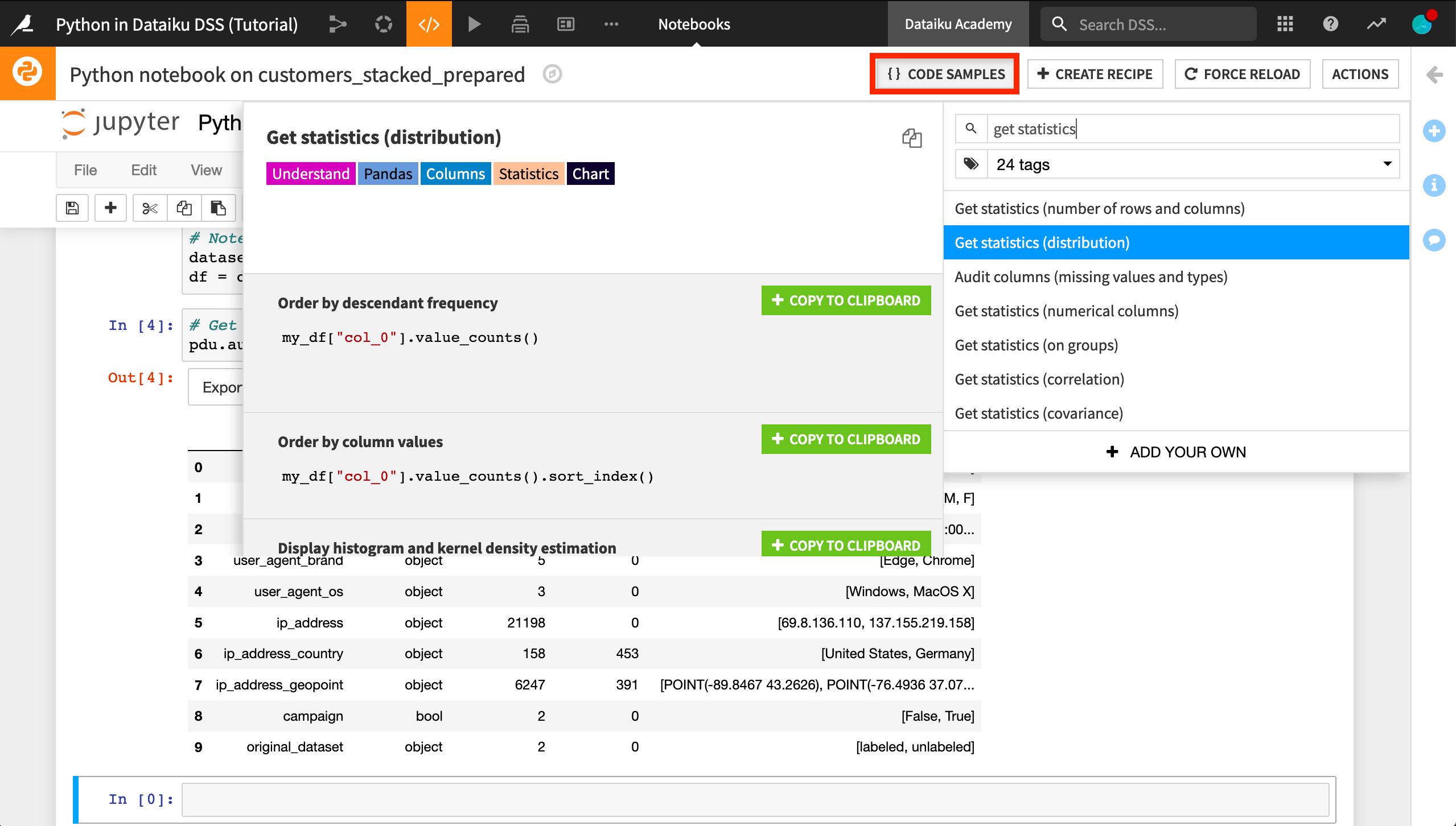

As in the Python recipe, Dataiku DSS provides code samples to help us get started.

Open up the list of code samples and search for

Get statistics (distribution).

Copy the first code sample

my_df["col_0"].value_counts()and paste it into a new cell in the notebook.Edit the code to be relevant to our data and then run it:

df["campaign"].value_counts()

The cell returns the number of customers who are part of the marketing campaign and the number who are not. Now we’d like to visualize the effect of campaign on the total amount a customer has spent.

Since that information is in the orders_by_customer dataset, read that dataset into a new dataframe:

dataset_orders = dataiku.Dataset("orders_by_customer")

df_orders = dataset_orders.get_dataframe(limit=100000)

Then join it with the df dataframe.

Search the code samples for

Join dataframes on columns.Copy the code for On columns with different names to a new notebook cell, and modify it to apply to our data.

df_joined = df.merge(df_orders,left_on="customerID",right_on="customer_id")

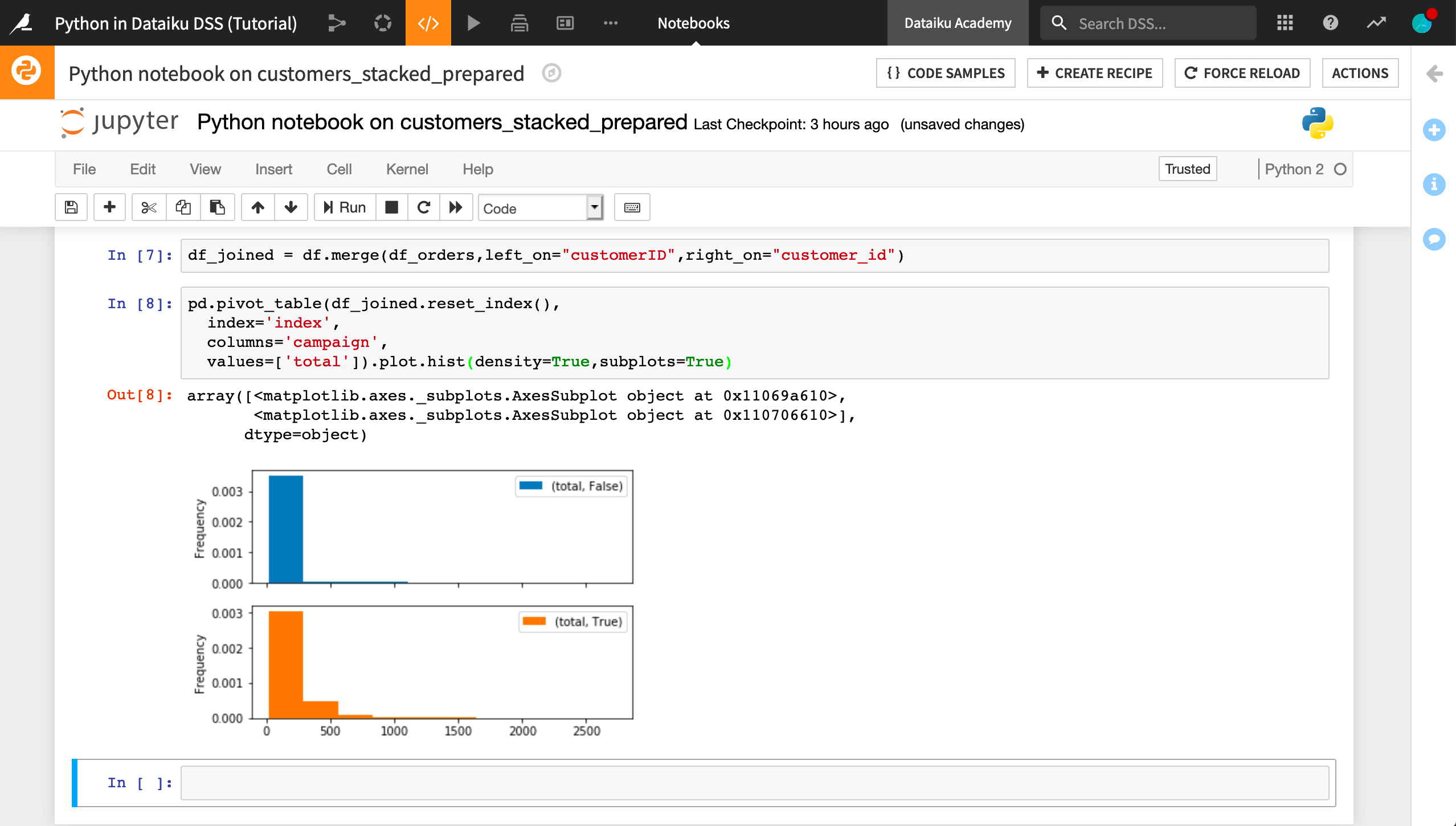

Finally, the following code produces a paneled histogram with the bar heights normalized so that it’s easier to compare across values of campaign.

pd.pivot_table(df_joined.reset_index(),

index='index',

columns='campaign',

values=['total']).plot.hist(density=True,subplots=True)

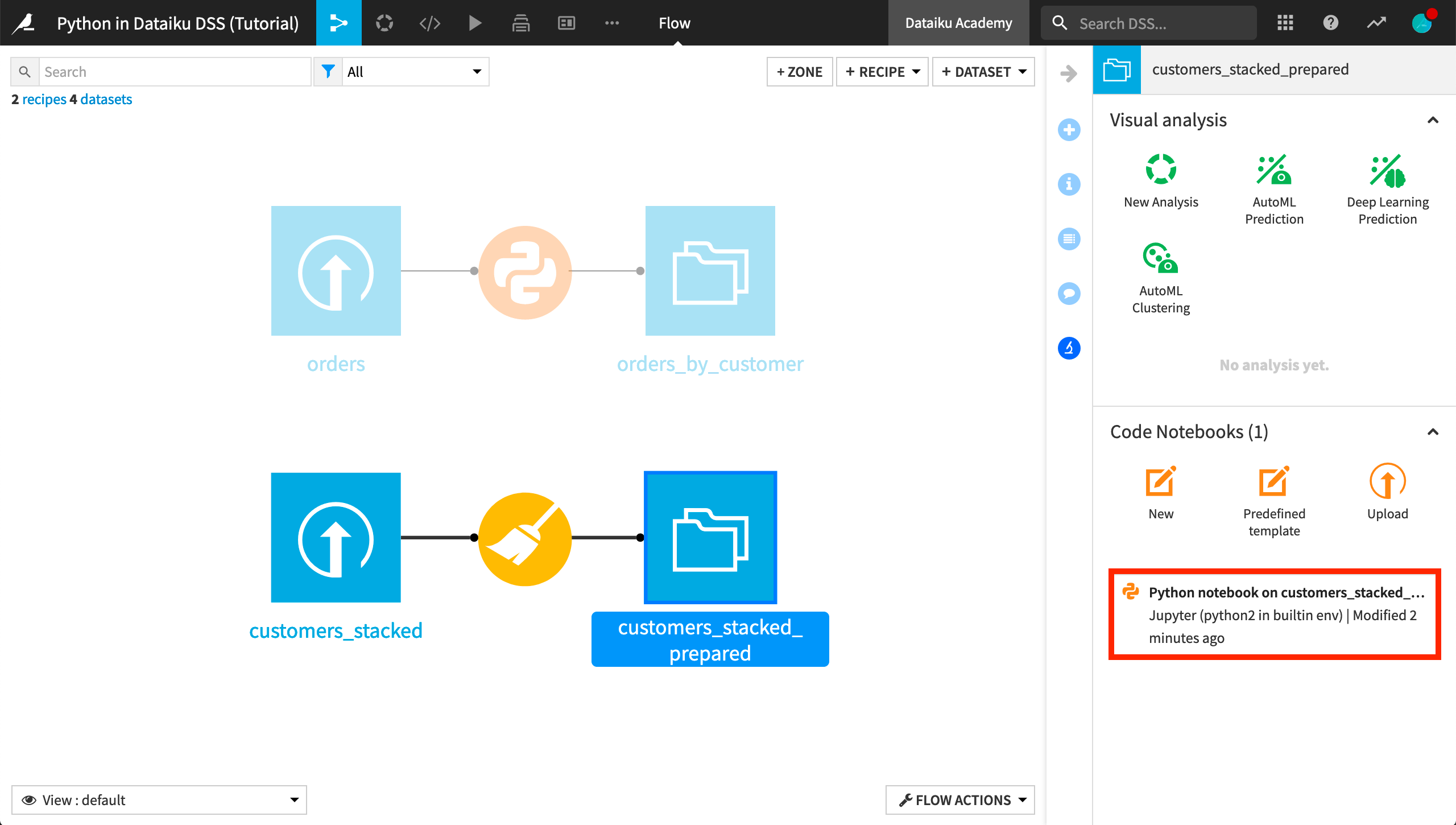

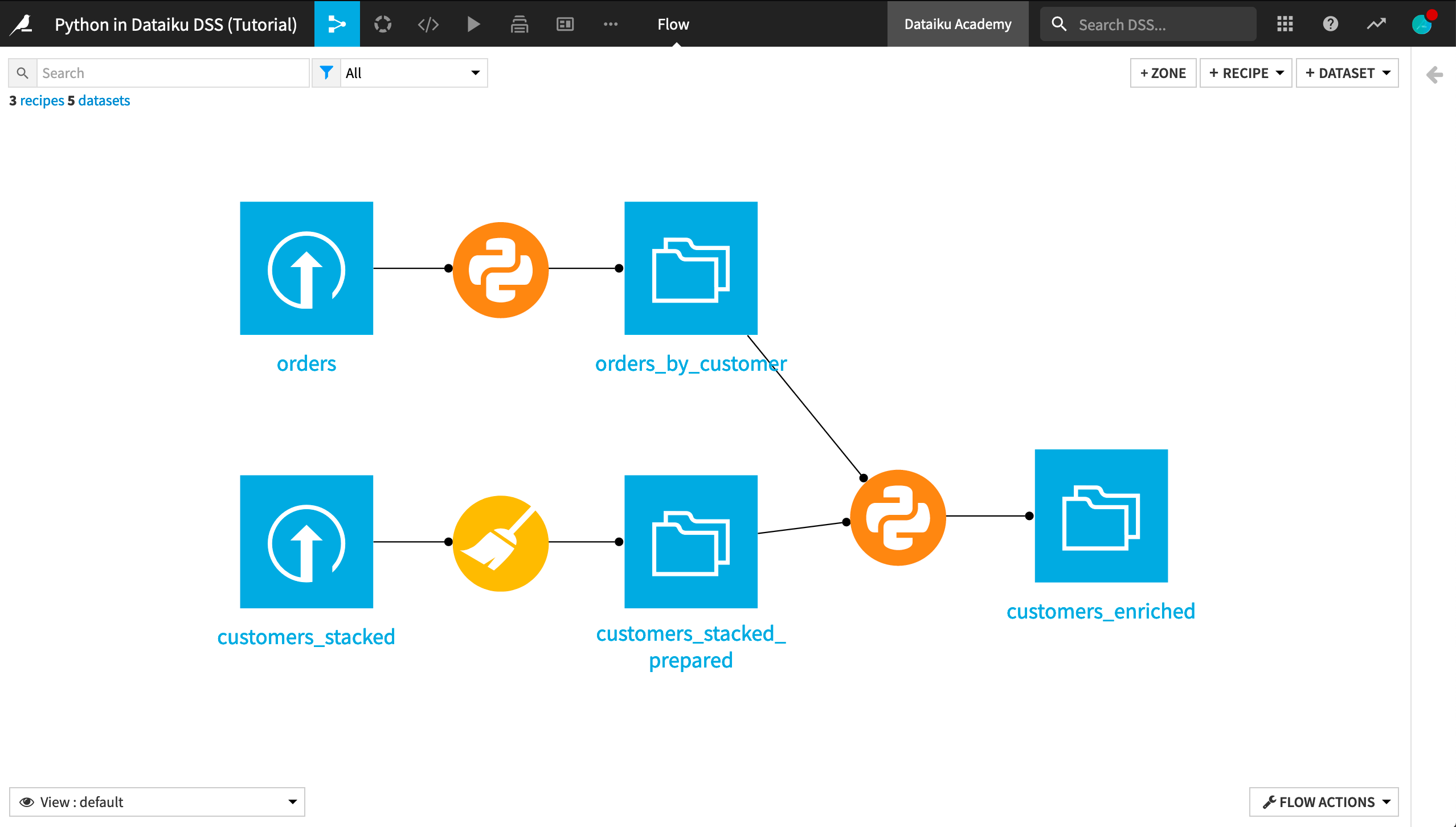

Recall that the notebook is a lab environment, intended for experimentation. Therefore, the join we performed between the dataframes isn’t reflected in the current Flow as part of our data pipeline until we create a recipe.

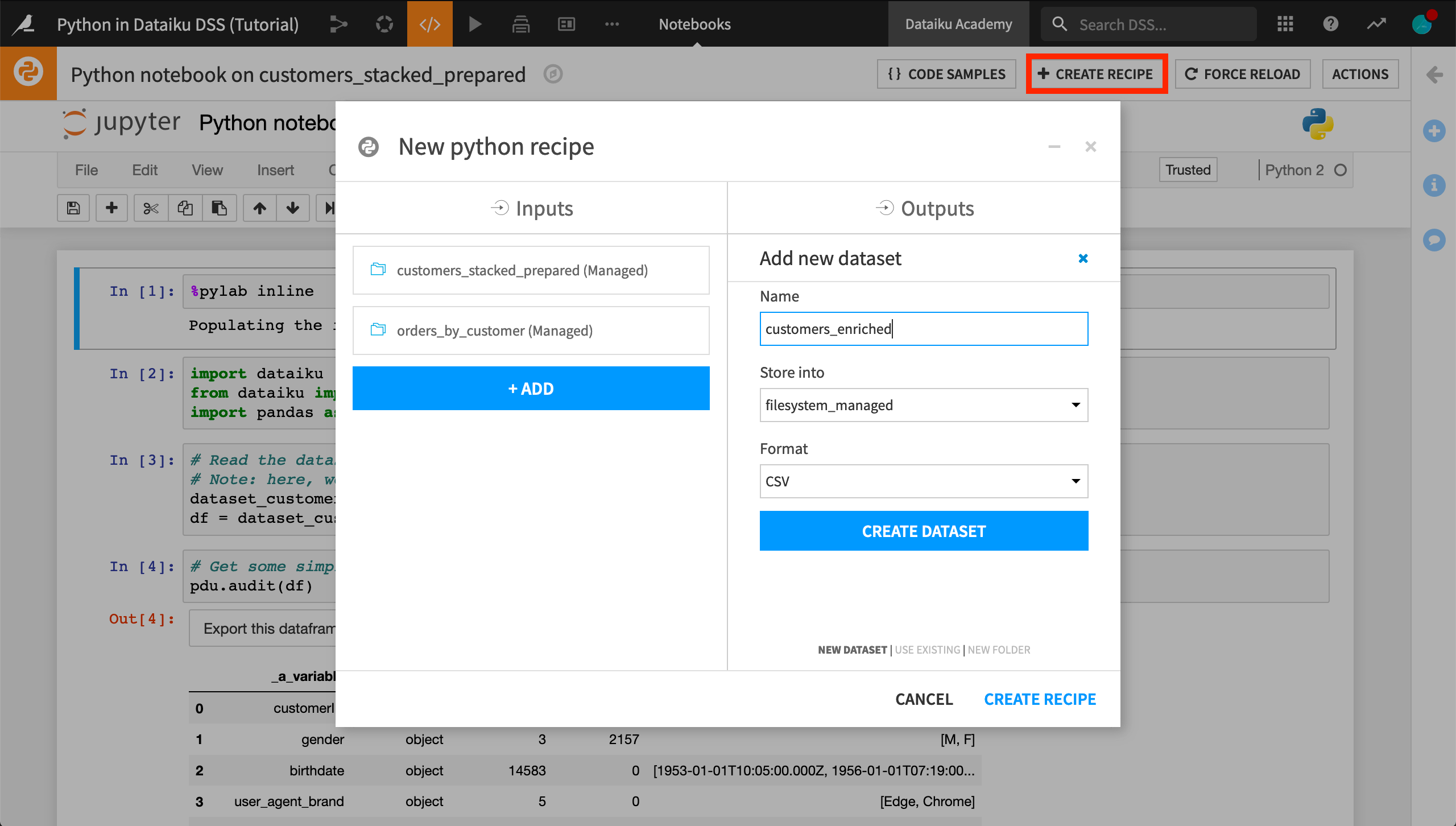

From within the notebook, click Create Recipe > Python recipe.

It has automatically included the customers_stacked_prepared dataset as an input, but now we’ll want to add one input and name the output.

Add orders_by_customer as an input dataset.

Create a new output dataset called

customers_enriched.

Note

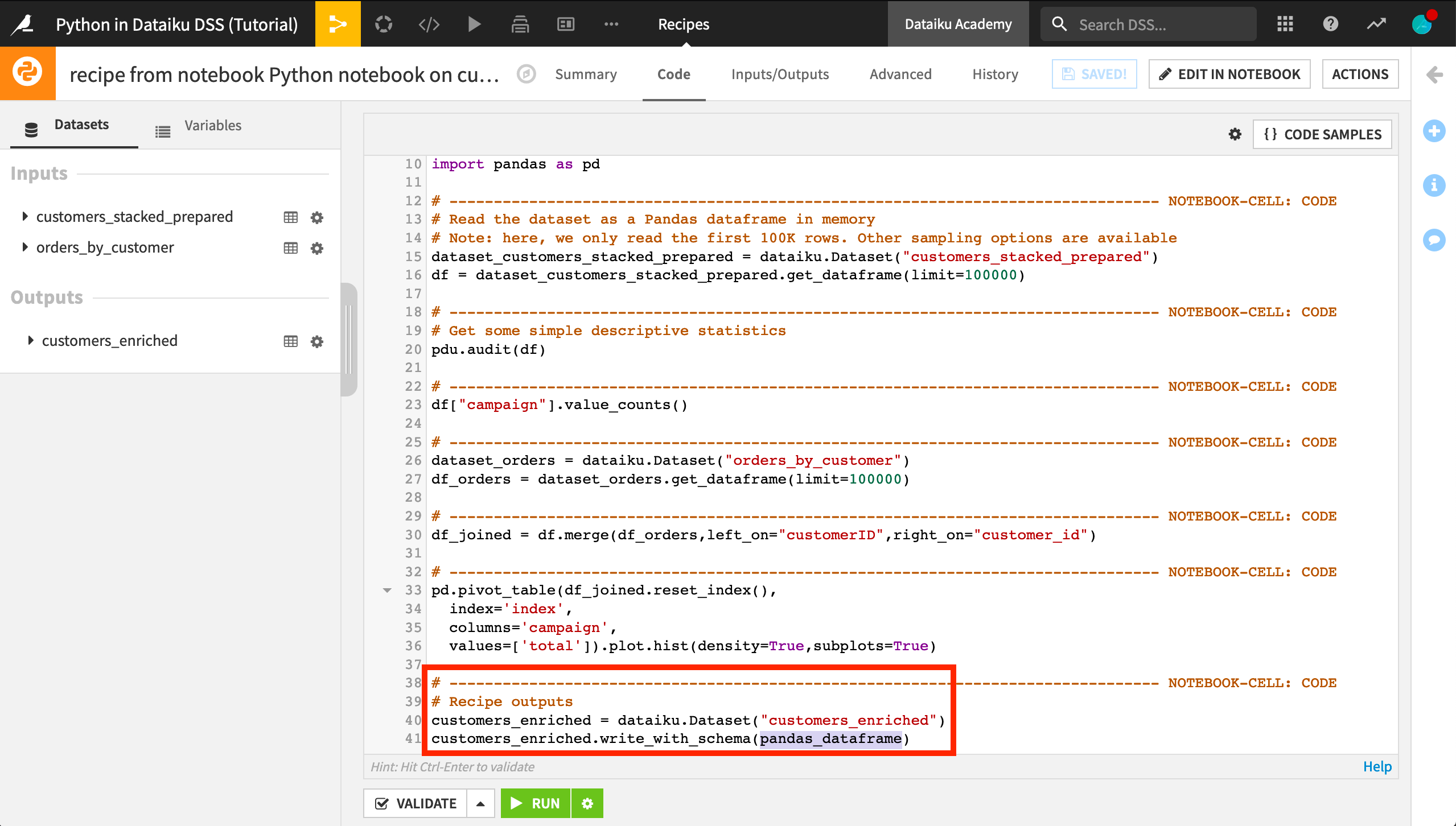

In the resulting recipe, note that Dataiku adds a number of commented lines, each of which shows the beginning of a notebook cell. This way, if we need to edit the recipe in a notebook again, our existing cells are maintained.

Dataiku has added lines for the recipe output to the customers_enriched dataset. However, it cannot know which dataframe we want to output. Accordingly:

In the last line, change

pandas_dataframetodf_joined.

Run the recipe and see how the Flow is affected.

What’s next¶

Congratulations! You’ve taken the first steps on Python integration in Dataiku DSS. As you progress, you’ll find that:

Python is everywhere. You can use Python code to create:

Standard webapps with custom Python backends

Scripts that use the Python API to remotely control Dataiku

… and more!

Python is extensible. You can create:

Code environments to manage package dependencies and versions for your projects

Custom Python libraries: reuse code all over the place. Should connect in to the Git-based dev workflow