Crawl budget prediction for enhanced SEO with the OnCrawl plugin

We’re pleased to share that Dataiku has published an OnCrawl plugin.

At OnCrawl, we are convinced that data science, like technical SEO, is essential to strategic decision-making in forward-looking companies today. The complexity of today’s markets, the sheer volume of available data that affects SEO, the growing opacity of search engine ranking algorithms, and the ability to easily manipulate and analyze data now make the difference between SEO as a marketing tool and SEO as an executive-level strategy.

The search market is getting more and more competitive. It is therefore important to optimize your crawl budget (the resources Google allocates to crawling your website) in order to focus on the right SEO projects and increase conversions. In this article, we explain how to easily deploy a method to predict your crawl budget.

What is OnCrawl?

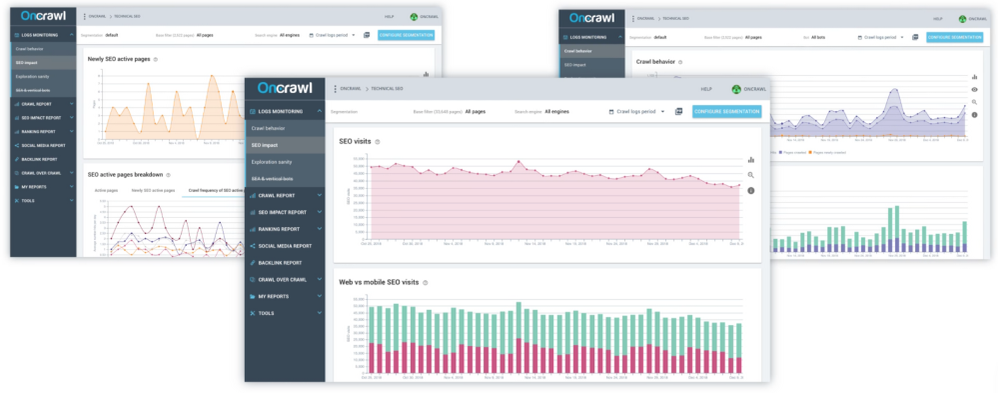

OnCrawl is an award-winning technical SEO platform that helps you open Google’s black box to make smarter decisions. The solution helps more than 1,000 companies improve their traffic, rankings and revenue by supporting their search engine optimization plans with reliable data and actionable dashboards. The platform offers:

A semantic SEO crawler in SaaS mode that visits and analyzes all the pages of your site to provide you with enhanced information on content, indexing, internal linking, performance, architecture, images, etc.

A log analyzer that helps you understand how Google behaves on each page of a site.

A Data Platform that lets you cross-analyze third-party data (logs, backlinks, analytics, CSV files, rankings) with crawl data to understand their impact on site traffic.

How to install the OnCrawl plugin

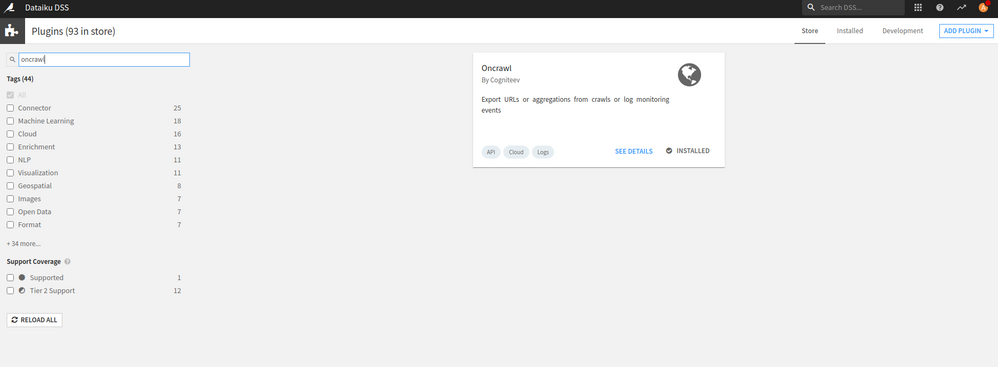

Some features of Dataiku DSS are delivered as separate plugins. A DSS plugin is a zip file.

Inside DSS, click the gear icon at the top right, then go to Administration → Plugins → Store.

In the search box, type “OnCrawl” and click “Install”.

The plugin is now ready to use.

How to use the OnCrawl plugin

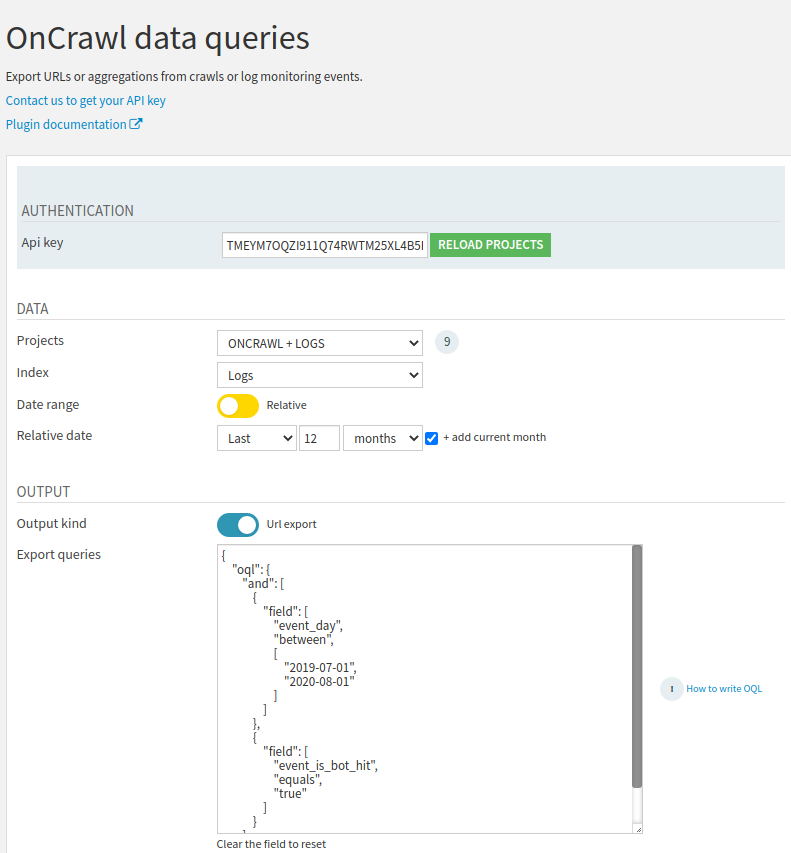

In your DSS flow, create an “Oncrawl – Data queries” recipe.

Set an API access token

You need a subscription that includes the OnCrawl API option. If you don’t have API access yet, please reach out to our team using the blue Intercom chat button or by sending an email to sales@oncrawl.com.

From your account settings page, scroll down to the “API Access Tokens” section. Click on “View API Access Tokens” to see the list of tokens you have generated or click on “Add API Access Token” if you want to create a new one.
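The recipe sends the token for you, but it can help to check that a token is accepted before configuring the recipe. The sketch below only builds the request and does not send it. It assumes the OnCrawl API v2 base URL `https://app.oncrawl.com/api/v2`, a `/projects` endpoint and bearer-token authentication, all of which you should confirm in the OnCrawl API documentation; `build_projects_request` is a hypothetical helper name.

```python
import urllib.request

# Assumption: OnCrawl API v2 base URL and bearer-token auth; verify in the API docs.
ONCRAWL_API_BASE = "https://app.oncrawl.com/api/v2"


def build_projects_request(token: str) -> urllib.request.Request:
    """Build (but do not send) a GET request listing the projects visible to a token."""
    if not token:
        raise ValueError("an OnCrawl API access token is required")
    return urllib.request.Request(
        f"{ONCRAWL_API_BASE}/projects",
        headers={"Authorization": f"Bearer {token}"},
        method="GET",
    )


if __name__ == "__main__":
    req = build_projects_request("YOUR_API_TOKEN")
    print(req.get_method(), req.full_url)
    # To actually send it: urllib.request.urlopen(req)
```

Passing the returned object to `urllib.request.urlopen` performs the call; a token that is rejected surfaces as an `urllib.error.HTTPError` instead of a project list.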

After that, you can edit the default configuration:

Choose the project source: all projects or a specific one

Choose the data type: pages, links or logs

Define the crawl or log timeframe

If you selected pages or links:

Choose a crawl configuration: an empty crawl configuration list means that no crawl is available for the selected project or timeframe. In that case, adjust these parameters.

Choose the crawl source: all crawls, a specific one, or the most recent one within the selected date range

- Last step: choose the output type, either aggregations or URL export

Aggregations: edit the JSON object to define your own aggregations by writing one or more OQL (OnCrawl Query Language) queries in the array of aggregate queries. The OQL syntax for aggregations is described here.

URL export: edit the JSON object by writing your own OQL query to filter the output URL list. The OQL syntax for URL export is described here.
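When you maintain many queries, the JSON objects described above can be generated instead of edited by hand. This is a minimal sketch under stated assumptions: the OQL leaf form `{"field": [name, operator, value]}`, the `and` combinator, and the top-level keys `aggs`, `oql` and `fields` are assumed, and the field names (`status_code`, `depth`, `url`) and operators (`equals`, `lt`) are illustrative. Compare the output with the plugin's default JSON and the OQL documentation before pasting it into the recipe.

```python
import json
from typing import Any, Dict, List


def oql_field(name: str, operator: str, value: Any) -> Dict[str, Any]:
    # Assumed OQL leaf form: {"field": [name, operator, value]}.
    return {"field": [name, operator, value]}


def oql_and(*conditions: Dict[str, Any]) -> Dict[str, Any]:
    # Assumed OQL combinator: {"and": [condition, ...]}.
    return {"and": list(conditions)}


def aggregation_payload(queries: List[Dict[str, Any]]) -> str:
    """Wrap each OQL query as one aggregate in the array of aggregate queries."""
    return json.dumps({"aggs": [{"oql": q} for q in queries]}, indent=2)


def url_export_payload(query: Dict[str, Any], fields: List[str]) -> str:
    """Combine an OQL filter with the columns to export for each matching URL."""
    return json.dumps({"oql": query, "fields": fields}, indent=2)


if __name__ == "__main__":
    ok_pages = oql_field("status_code", "equals", 200)
    shallow_ok = oql_and(ok_pages, oql_field("depth", "lt", 4))
    print(aggregation_payload([ok_pages, shallow_ok]))
    print(url_export_payload(shallow_ok, ["url", "status_code", "depth"]))
```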

Using machine learning and data science to predict crawl budget

Method

Every day, Google sets the amount of resources it dedicates to crawling your website; this is what is called the “crawl budget”. The mission of any SEO team is to steer Google’s crawl budget toward the pages that matter. This article explains a method to predict your crawl budget, and we share the full project as a zip file. Several use cases can be addressed:

Monitor your crawl budget by category or subcategory to detect SEO issues

Detect the best new products for the next few weeks (it could be worth highlighting these products)

Monitor your crawl budget based on different Google bots to focus on the right technologies
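For the first use case, crawl budget per category boils down to counting bot hits per day and per category. This minimal sketch assumes log rows already reduced to `(day, url)` pairs and uses a hypothetical rule, the first URL path segment is the category; adapt `url_category` to your own URL structure.

```python
from collections import Counter
from typing import Iterable, Tuple
from urllib.parse import urlparse


def url_category(url: str) -> str:
    """Hypothetical rule: first path segment is the category ('/shoes/x' -> 'shoes')."""
    segments = [s for s in urlparse(url).path.split("/") if s]
    return segments[0] if segments else "(root)"


def crawl_budget_by_category(hits: Iterable[Tuple[str, str]]) -> Counter:
    """Count bot hits per (day, category); each hit is a (day, url) pair."""
    return Counter((day, url_category(url)) for day, url in hits)


if __name__ == "__main__":
    sample = [
        ("2021-03-01", "https://example.com/shoes/a"),
        ("2021-03-01", "https://example.com/bags/c"),
        ("2021-03-02", "https://example.com/"),
    ]
    for (day, category), n in sorted(crawl_budget_by_category(sample).items()):
        print(day, category, n)
```

A sudden drop for one category, while the others stay flat, is the kind of signal that points to an SEO issue in that part of the site.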

Step 1: Get your logs with the OnCrawl plugin installed earlier

Step 2: Install the Forecast Plugin

This plugin provides 3 visual recipes to train and deploy R forecasting models on yearly to hourly time series.

It covers the full cycle of data cleaning, model training, evaluation and prediction.

Clean time series: resample, aggregate and clean time series from missing values and outliers

Train models and evaluate errors on historical data: train forecast models and evaluate them on historical data using temporal split or cross-validation

Forecast future values and get historical residuals: use previously trained models to predict future values and/or get historical residuals
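The Forecast plugin trains R models, and its cleaning and evaluation options are set in the recipe UI. To illustrate the same cycle outside DSS, here is a Python stand-in, not the plugin's implementation: missing days are filled with zero hits, the last `horizon` days are held out (temporal split), and a seasonal naive baseline that repeats the last week is scored with mean absolute error. Outlier handling is omitted, and the weekly season and the synthetic data are assumptions.

```python
from datetime import date, timedelta
from typing import Dict, List, Tuple


def resample_daily(counts: Dict[date, int], start: date, end: date) -> List[float]:
    """One value per day from start to end inclusive; days with no hits become 0.

    Assumption: a day without log lines means no crawl, so 0 is used
    rather than interpolation.
    """
    n_days = (end - start).days + 1
    return [float(counts.get(start + timedelta(days=i), 0)) for i in range(n_days)]


def temporal_split(series: List[float], horizon: int) -> Tuple[List[float], List[float]]:
    """Hold out the last `horizon` points for evaluation, keeping time order."""
    if not 0 < horizon < len(series):
        raise ValueError("horizon must be between 1 and len(series) - 1")
    return series[:-horizon], series[-horizon:]


def seasonal_naive(train: List[float], horizon: int, season: int = 7) -> List[float]:
    """Predict each future day with the value observed one season earlier."""
    if len(train) < season:
        raise ValueError("need at least one full season of history")
    last_season = train[-season:]
    return [last_season[i % season] for i in range(horizon)]


def mean_absolute_error(actual: List[float], predicted: List[float]) -> float:
    """Average absolute gap between observed and forecast daily hits."""
    if len(actual) != len(predicted) or not actual:
        raise ValueError("series must be non-empty and of equal length")
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


if __name__ == "__main__":
    start = date(2021, 1, 1)
    # Synthetic daily hits with a weekly pattern; day 3 is missing on purpose.
    counts = {start + timedelta(days=i): 100 + (i % 7) * 10 for i in range(28) if i != 3}
    series = resample_daily(counts, start, start + timedelta(days=27))
    train, test = temporal_split(series, horizon=7)
    forecast = seasonal_naive(train, horizon=7)
    print("MAE:", mean_absolute_error(test, forecast))
```

A model trained by the plugin is generally only worth deploying if it beats a simple baseline like this one on the same held-out days.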

Step 3: Test the data flow

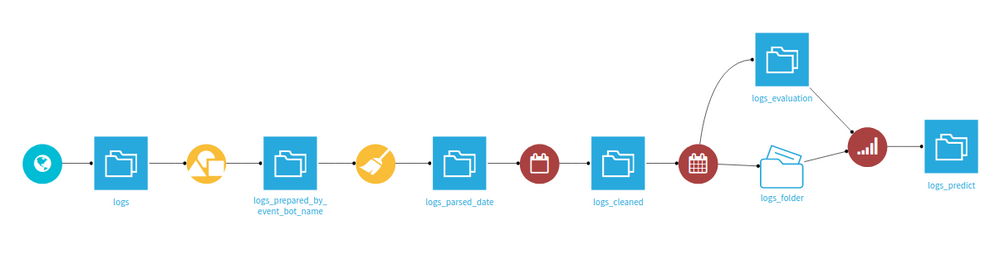

With the two Dataiku DSS plugins, the workflow is simple:

The first plugin gets your logs from the OnCrawl platform

The first recipe groups data by day and by bot name

The second recipe parses the date for the next plugin

The second plugin resamples your time series, trains different models and uses previously trained models to predict future values
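The two recipes between the plugins (group by day and bot, then parse the date) can be approximated in a few lines of Python. This sketch assumes each log row has an ISO 8601 `timestamp` without a trailing `Z` (which `datetime.fromisoformat` only accepts from Python 3.11) and a `bot` column; these column names are hypothetical, not the OnCrawl plugin's actual output schema.

```python
from collections import Counter
from datetime import datetime
from typing import Dict, Iterable, List, Tuple


def daily_hits_by_bot(log_rows: Iterable[Dict[str, str]]) -> List[Tuple[str, str, int]]:
    """Count hits per (day, bot) and return rows sorted by day, then bot name.

    Hypothetical input schema: 'timestamp' (ISO 8601) and 'bot' columns.
    """
    counts: Counter = Counter()
    for row in log_rows:
        # Parse the timestamp and keep the calendar day in its own offset
        # (no time zone conversion), formatted as YYYY-MM-DD for the next step.
        day = datetime.fromisoformat(row["timestamp"]).date().isoformat()
        counts[(day, row["bot"])] += 1
    return sorted((day, bot, hits) for (day, bot), hits in counts.items())


if __name__ == "__main__":
    sample = [
        {"timestamp": "2021-03-01T08:15:00", "bot": "google_smartphone"},
        {"timestamp": "2021-03-01T10:00:00", "bot": "google_web_search"},
        {"timestamp": "2021-03-02T00:05:00", "bot": "google_smartphone"},
    ]
    for day, bot, hits in daily_hits_by_bot(sample):
        print(day, bot, hits)
```

Each bot then yields its own daily series, which is what the forecast step needs to model crawl budget per Google bot.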

You can download the project zip file here

Results

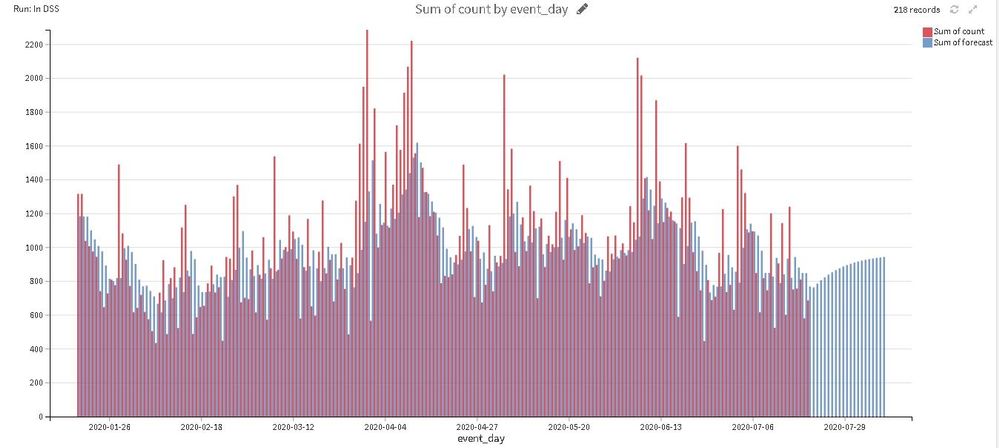

You can display the results directly in Dataiku DSS to compare the forecast with historical data.

Two important notes:

Make sure the number of new pages you publish stays roughly constant over the period, because crawl budget naturally increases as new pages are added. Under that condition, a forecast increase in crawl budget is not simply an effect of new pages, and you can rely on your SEO forecast.

If you want to predict over a very long period, you need more historical data.

Going further with crawl budget optimization

If you wish to enhance your crawl budget, please read this blog post to take advantage of advanced OnCrawl features to improve your efficiency during daily SEO monitoring.

You can now extend your data workflow to monitor your crawl budget by category or subcategory in order to detect SEO issues or the best new products for the next few weeks. It might also be worth monitoring your crawl budget per Google bot (google_image, google_smartphone, google_web_search…).

If you don’t have a Dataiku DSS licence, you can test this project with the free edition.

You can try OnCrawl with the 14-day free trial and request API access by reaching out using the blue Intercom chat button.

About the author

Vincent Terrasi has been Product Director at OnCrawl since 2019, after working as Data Marketing Manager at OVH. He is also the co-founder of dataseolabs.com, where he offers training in Data Science and SEO.

Vincent has a very varied background with 7 years of entrepreneurship for his own sites, then 3 years at M6Web and 3 years at OVH as Data Marketing Manager. He’s a pioneer in Data Science and Machine Learning for SEO in France.