Using Jupyter Notebooks in DSS

Jupyter notebooks are a favorite tool of many data scientists. They provide users with an ideal environment for interactively analyzing datasets directly from a web browser, combining code, graphical output, and rich content in a single place.

Given their usefulness for doing data science, Jupyter notebooks are natively embedded in Dataiku DSS, and tightly integrated with other components.

Creating a Jupyter Notebook

Depending on your objectives, you can create a Jupyter notebook in Dataiku DSS in a number of different ways.

Create a Blank Notebook

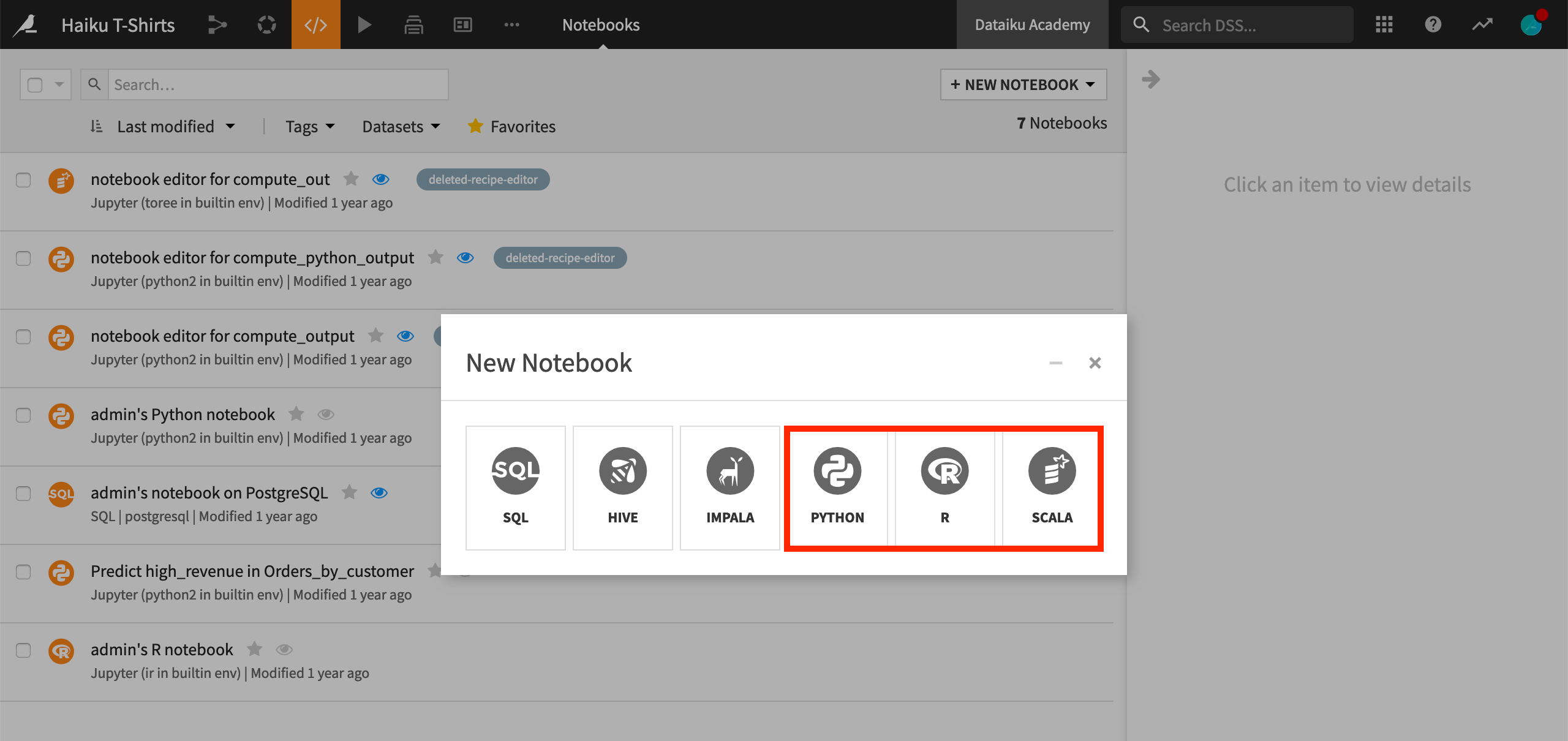

To create a blank notebook, navigate to the Notebooks section from the Code menu of the top navigation bar (shortcut G+N). Click + New Notebook > Write your own.

You will then have the choice of creating a code notebook for a variety of languages.

At this point, you can start a Jupyter notebook from a Python, R, or Scala kernel in the code environment of your choice. You will also be asked to choose a starter template. For example, will you be reading a dataset into memory or using Spark?

Create a Notebook from a Dataset

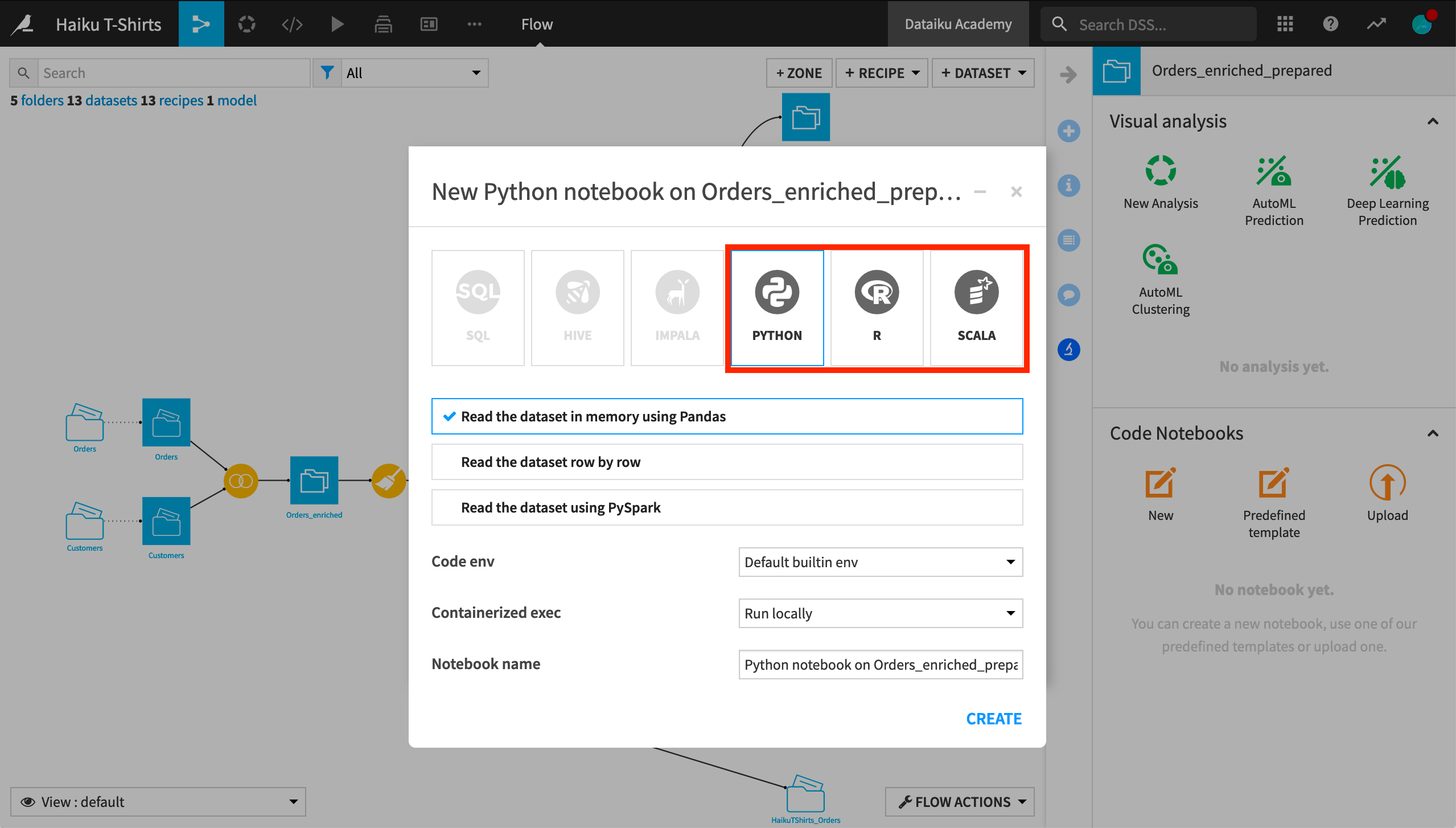

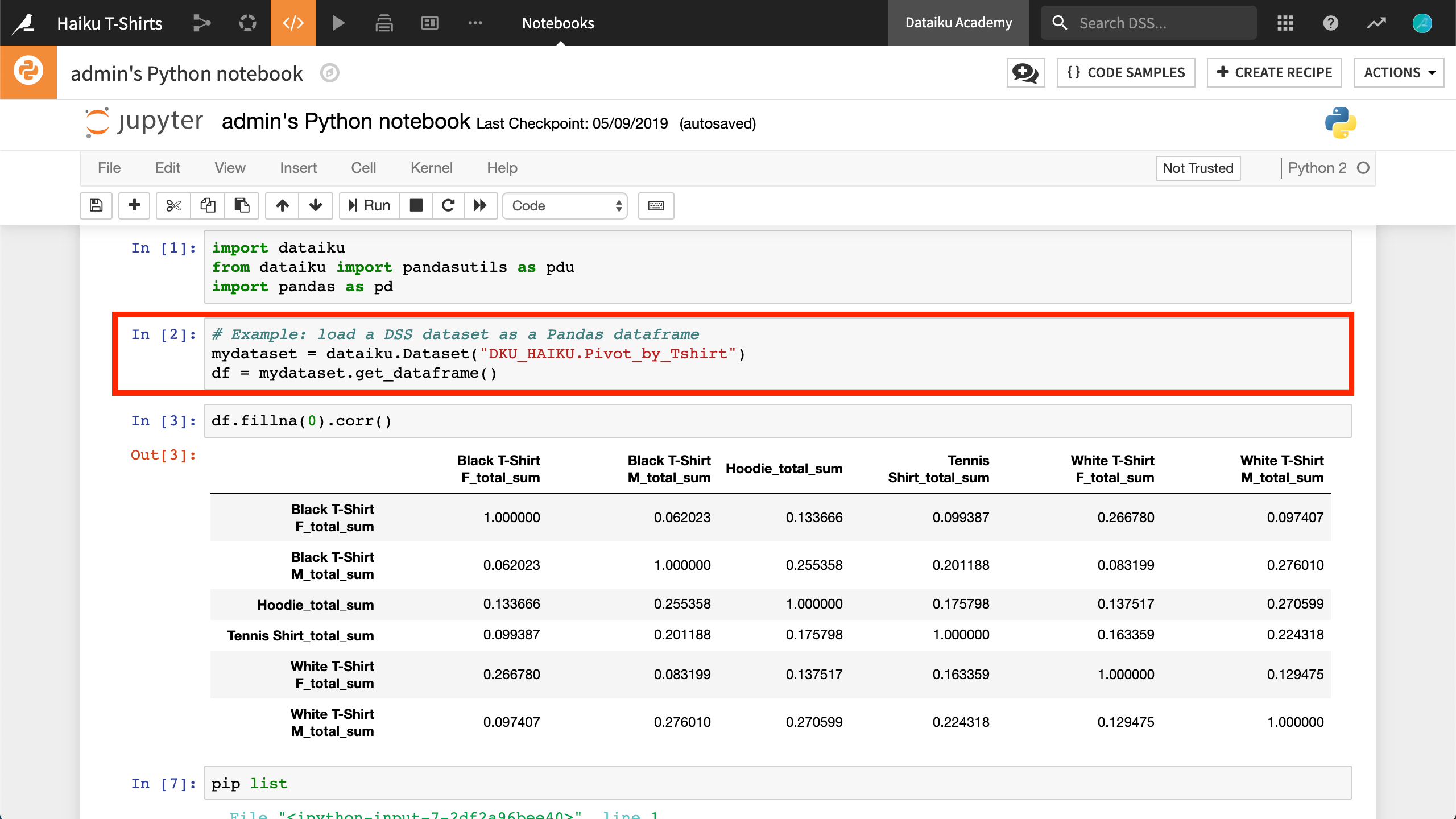

A second option for creating a notebook simplifies reading in the dataset of interest using the Dataiku API.

From the Flow, select a dataset and enter the Lab from the Actions menu of the right sidebar or directly from the Lab menu.

Create a new code notebook.

The starter code of a notebook created this way already reads the chosen dataset into a df variable, whether that is a pandas, R, or Scala dataframe.
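As a rough illustration of what such starter code does for a Python kernel, the sketch below reads a dataset into a pandas DataFrame through the `dataiku` package. The helper `read_dataset` and the dataset name `my_dataset` are hypothetical, and the generated starter code in your instance may differ. The `dataiku` package is normally only importable inside DSS, so the import is guarded.

```python
# Minimal sketch of notebook starter code for a Python kernel.
# Assumption: "my_dataset" is a placeholder for a dataset in your project.
try:
    import dataiku  # available inside a DSS notebook or recipe
    IN_DSS = True
except ImportError:
    dataiku = None
    IN_DSS = False


def read_dataset(name, limit=None):
    """Read a DSS dataset into a pandas DataFrame.

    `limit` optionally caps the number of rows loaded, which helps
    when exploring a large dataset interactively.
    """
    if not IN_DSS:
        raise RuntimeError("the dataiku package is not available outside DSS")
    return dataiku.Dataset(name).get_dataframe(limit=limit)


# Inside a DSS notebook you would then call, for example:
#   df = read_dataset("my_dataset", limit=1000)
#   df.head()
```

Capping the row count with `limit` is one way to keep interactive exploration fast; drop the argument to load the full dataset.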

Create a Notebook from a Recipe

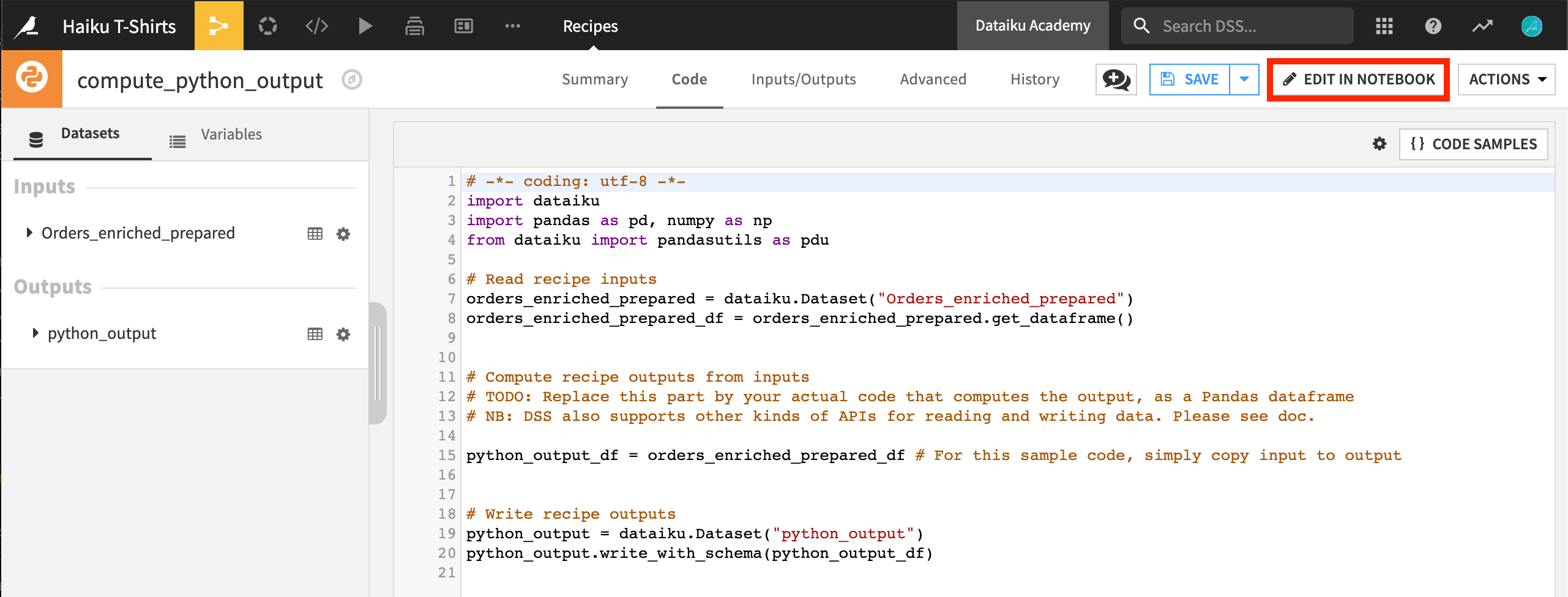

One last option is similar to the Lab route.

From the Flow, select a dataset and create a Python, R, or Scala code recipe.

You can then select the Edit in Notebook option.

This will take you into a Jupyter notebook where you can interactively workshop the recipe before saving it back into the Flow.
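The kind of Python recipe code you might workshop this way can be sketched as follows: read the input dataset, transform it, and write the result to the output dataset. The names `run_recipe` and `drop_incomplete_rows` and the dataset names are hypothetical, and the transformation is only a stand-in for your own logic. As before, the `dataiku` import is guarded because the package is normally only available inside DSS.

```python
# Minimal sketch of a Python recipe body that can be iterated on in a notebook.
# Assumption: "input_dataset" and "output_dataset" are placeholder names.
try:
    import dataiku  # available inside a DSS notebook or recipe
    IN_DSS = True
except ImportError:
    dataiku = None
    IN_DSS = False


def drop_incomplete_rows(df):
    """Example transformation: drop rows that contain missing values."""
    return df.dropna()


def run_recipe(input_name, output_name, transform):
    """Read the input dataset, apply `transform`, and write the output dataset."""
    if not IN_DSS:
        raise RuntimeError("the dataiku package is not available outside DSS")
    df = dataiku.Dataset(input_name).get_dataframe()
    out_df = transform(df)
    # write_with_schema sets the output schema from the DataFrame, then writes the rows.
    dataiku.Dataset(output_name).write_with_schema(out_df)
    return out_df


# Inside DSS you would then call, for example:
#   run_recipe("input_dataset", "output_dataset", drop_incomplete_rows)
```

Keeping the transformation in its own function makes it easy to test on a small sample in the notebook before saving the code back to the recipe.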

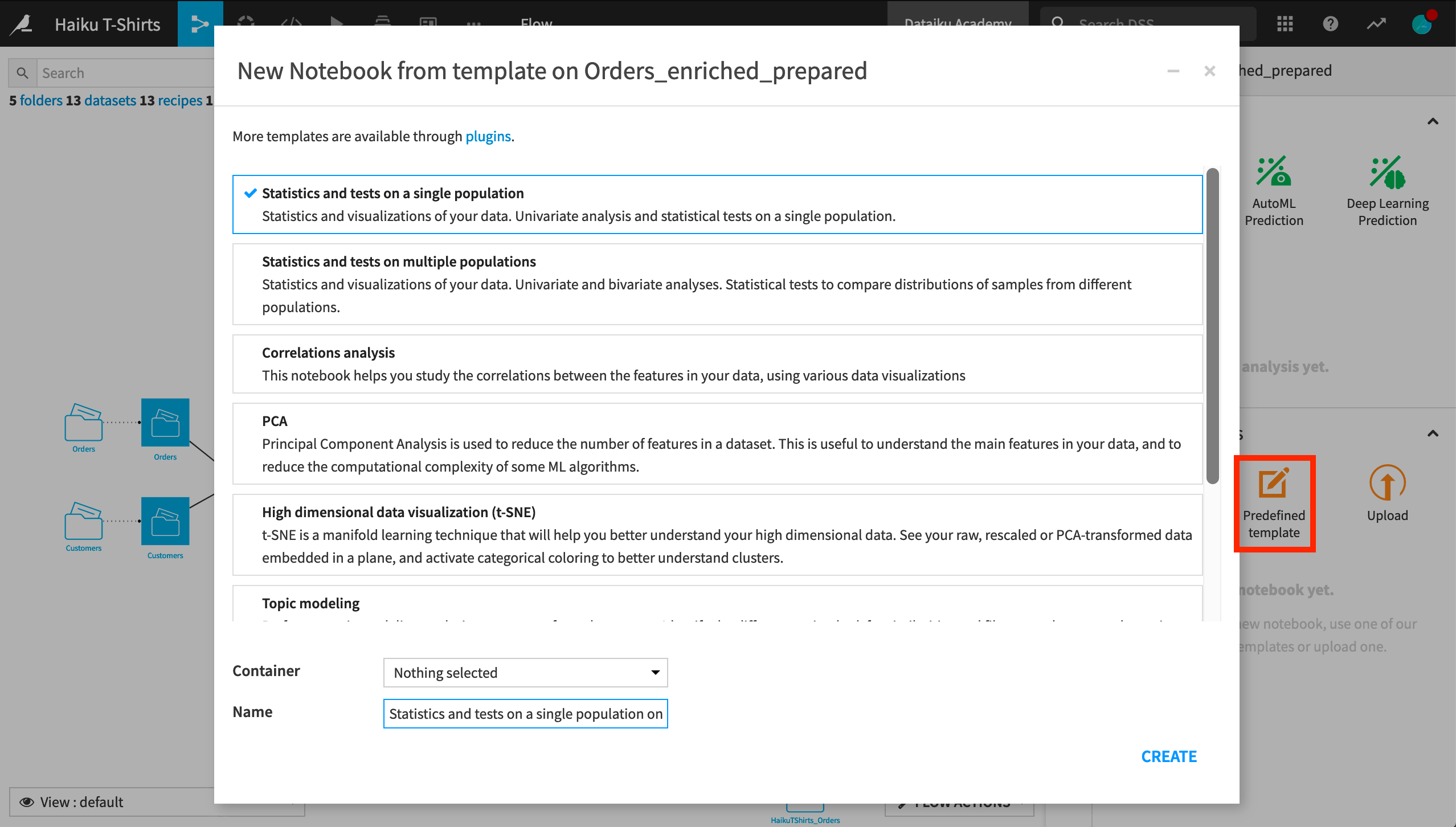

Pre-defined Notebook Templates

Another useful feature of Jupyter notebooks in Dataiku DSS is the set of pre-defined code notebooks that kickstart common kinds of statistical analyses, such as dimensionality reduction, time series, or topic modeling. You can run these notebooks as given, or modify them to go deeper into an analysis.

Create one by entering the Lab and choosing a Pre-defined template instead of writing your own notebook.

You can also create your own notebook templates through the plugin system.

For more information about pre-defined notebooks, please see the product documentation.

Sharing Output from Jupyter Notebooks

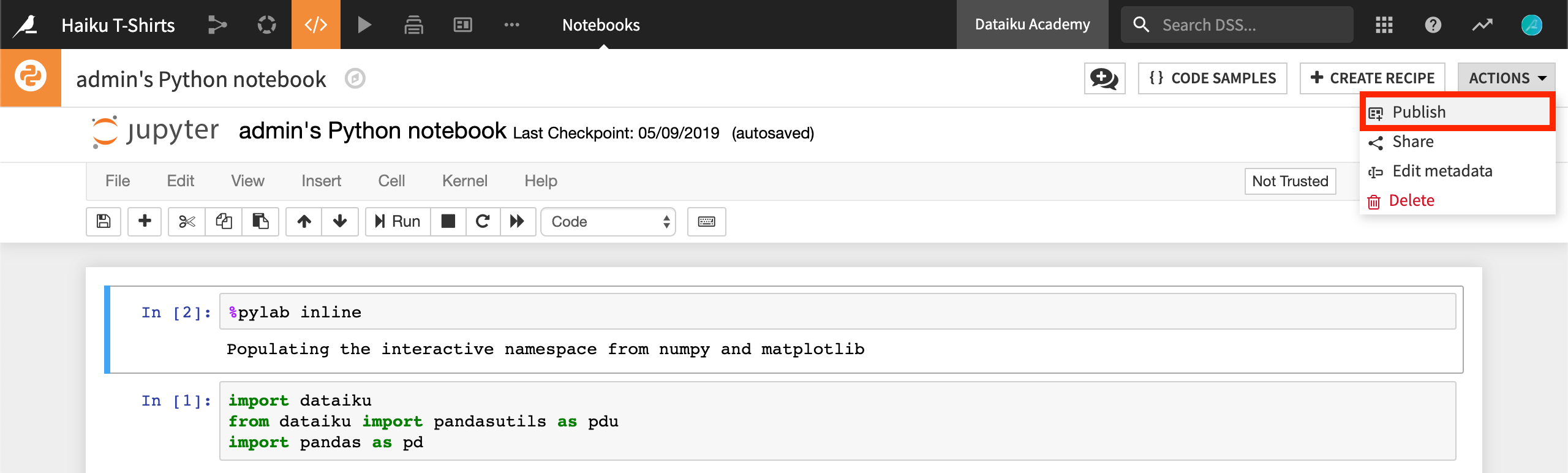

For a collaborative platform like Dataiku DSS, the ability to share work and analyses is essential. Dataiku DSS allows you to save static exports of Jupyter notebooks in HTML format, which can be shared on dashboards.

To share a notebook on a dashboard, click Publish from the Actions menu of the notebook and indicate the dashboard and slide where it should appear.

Note

This also adds the notebook to the list of saved insights.

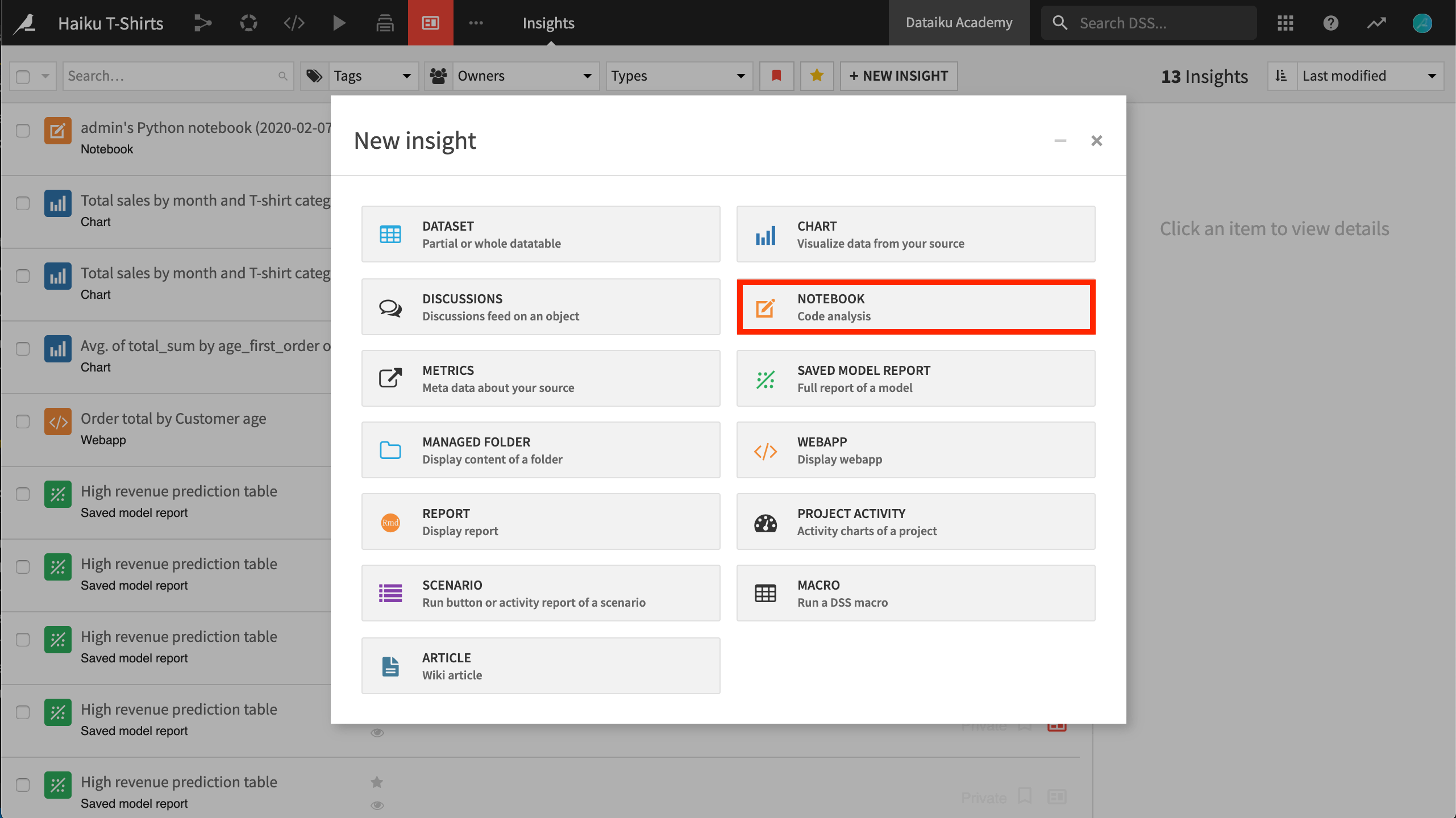

Alternatively, you can first create the insight before publishing it on a dashboard.

Navigate to the Insights menu (G+I) and click +New Insight. Choose Notebook from the available options.

To learn more about sharing Jupyter notebooks as insights, please see the product documentation.

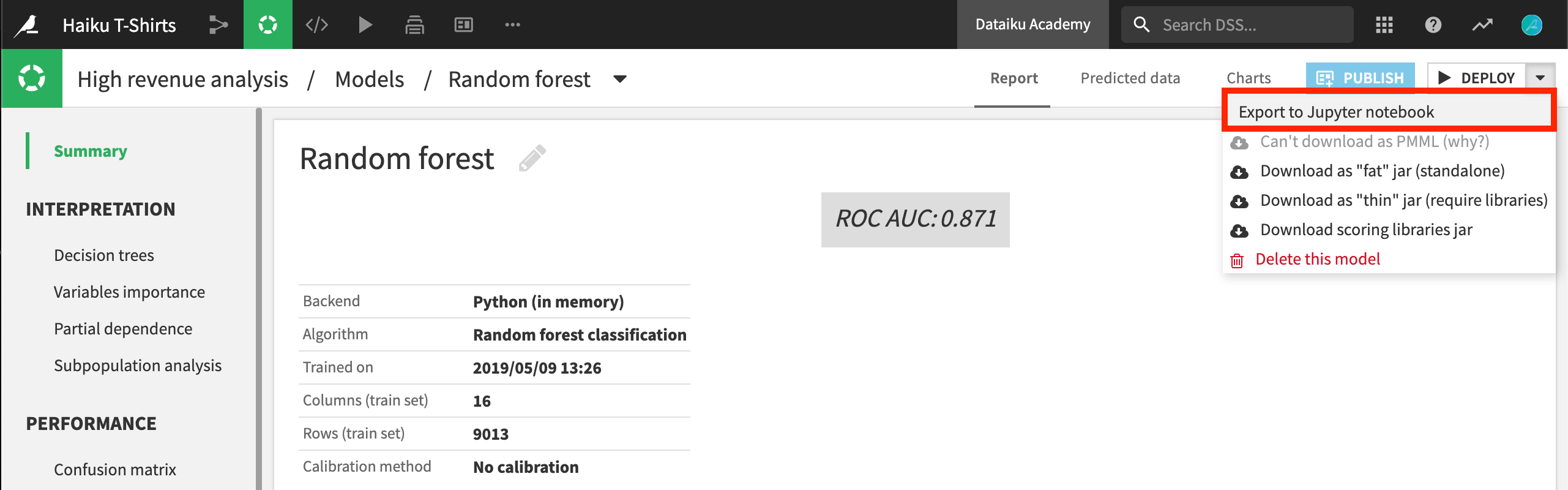

Generating a Notebook from a Model

Finally, another interesting feature is the ability to create a Jupyter notebook directly from a trained machine learning model.

For explanatory purposes, you can export a similar version of a model trained using the in-memory Python engine to a Jupyter notebook. You can access this feature from the caret menu next to the Deploy button.

For more information, please consult the product documentation.

What’s Next?

Jupyter notebooks are first-class citizens in Dataiku DSS. They are in the toolbox of most data scientists, and they make a great environment for interactively analyzing your datasets using Python, R, or Scala.

To learn more about notebooks in Dataiku DSS, including SQL notebooks, please see the product documentation on code notebooks.