Hands-On: Object Detection

In this hands-on lesson, we’ll apply an object detection task to our Image Classification project. We’ll make use of two elements in the Flow that we haven’t used yet. Objects to detect is a folder that contains images, and Object_labels is a dataset that contains labeled images with bounding-box coordinates.

Both image classification and object detection can be performed with a deep learning model based on a neural network. The difference lies in what the network returns: in image classification, it returns the probability that the image belongs to a class; in object detection, it returns the position of each detected object in the image, the class of each object, and the associated probability.
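To make the difference concrete, here is a minimal Python sketch of what each kind of model returns. The class names `Classification` and `Detection`, the field names, and all values are hypothetical and chosen only for illustration; they are not the plugin’s internal types.

```python
from dataclasses import dataclass


@dataclass
class Classification:
    """One image-level prediction: a class and its probability."""
    class_name: str
    probability: float


@dataclass
class Detection:
    """One detected object: a box position, a class, and a probability."""
    x1: int
    y1: int
    x2: int
    y2: int
    class_name: str
    probability: float


# Image classification: a single answer for the whole image.
classified = Classification(class_name="lion", probability=0.93)

# Object detection: zero or more answers per image, each with a position.
detected = [
    Detection(x1=40, y1=60, x2=310, y2=280, class_name="lion", probability=0.88),
    Detection(x1=350, y1=90, x2=600, y2=300, class_name="tiger", probability=0.71),
]

for d in detected:
    print(f"{d.class_name} ({d.probability:.2f}) at ({d.x1}, {d.y1})-({d.x2}, {d.y2})")
```

A classifier answers “what is in this image?”, while a detector answers “what is in this image, and where?”, which is why its output is a list of boxes rather than a single label.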

To accomplish an object detection task, we’ll use the Object detection in images plugin that we installed in the lesson, Hands-On: Install the Deep Learning Plugins. Our objective is to download a deep learning object detection model and re-train it. There is no coding required.

Explore the Plugin’s Components

Before performing object detection tasks, let’s explore the components of the Object detection in images plugin.

From the Apps menu, go to Plugins, then choose the Installed tab to view installed plugins. Click the Object detection in images plugin to view its components.

The plugin includes the following recipes and macros:

Recipe: Fine-tune detection model

Recipe: Detect objects in images

Recipe: Display bounding boxes

Recipe: Detect objects in video

Macro: Object detection endpoint

Macro: Download pre-trained detection model

We will use these recipes and macros to accomplish our object detection task.

Add a Pre-Trained Detection Model to the Flow

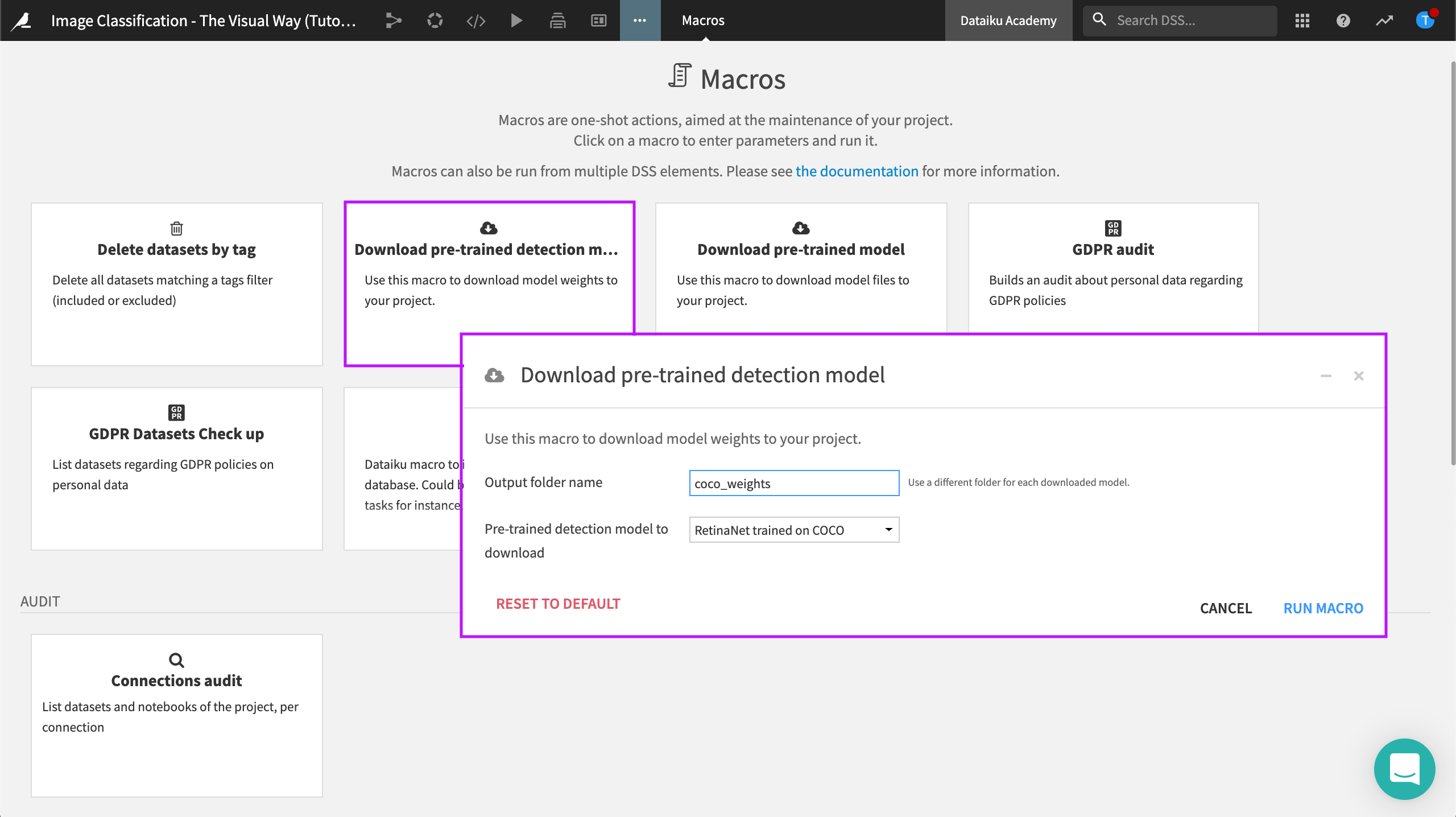

Navigate to the project home, then to Macros in the top navigation bar. Click Download pre-trained detection model.

In the Download pre-trained model dialog, type coco_weights as the output folder name. Click Run Macro.

Note

COCO is a large-scale object detection, segmentation, and captioning dataset.

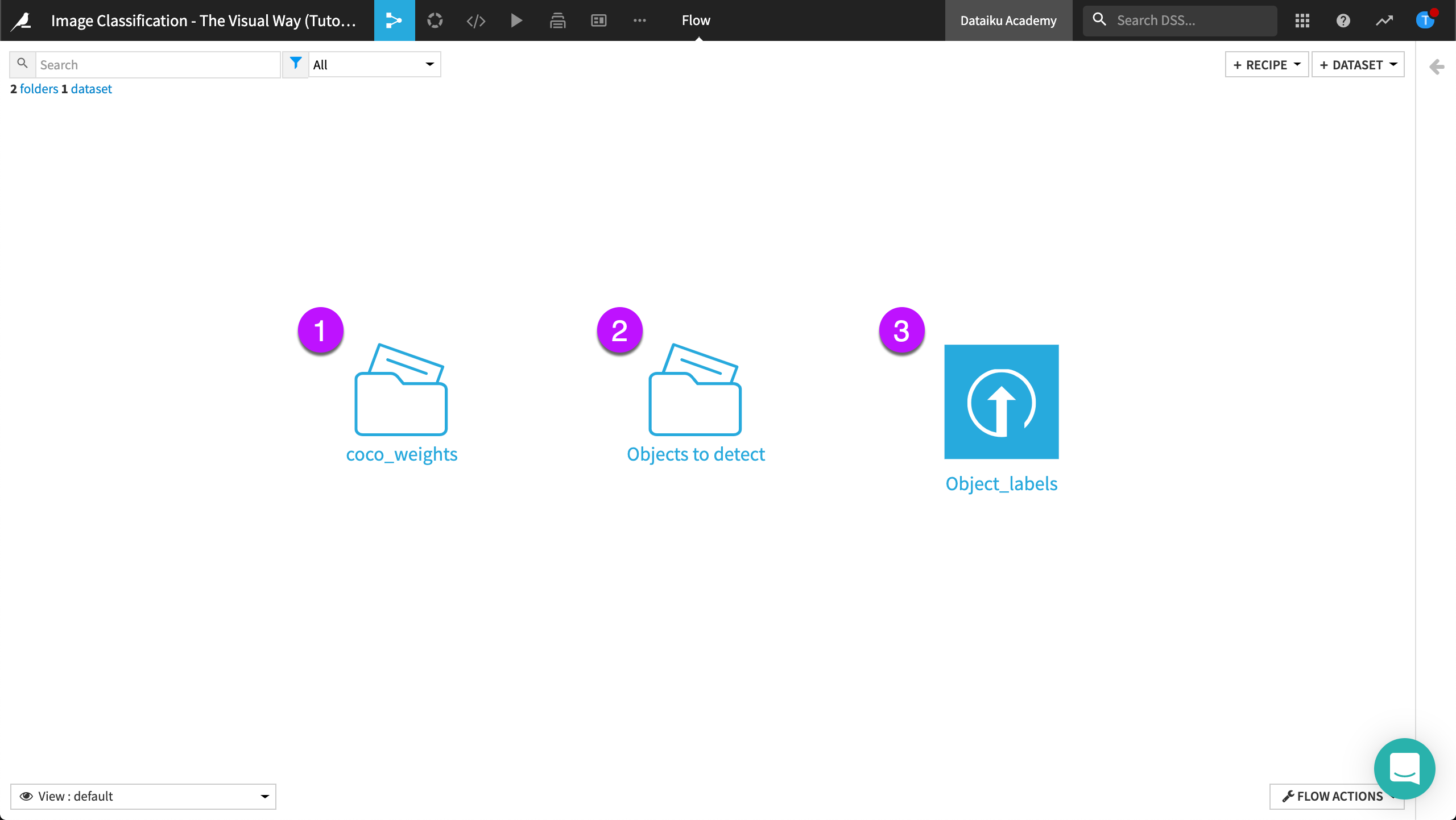

When the process completes, click Close, then return to the Flow to see that the folder has been added and the pre-trained model is ready for use.

Predict the Missing Labels

We’ll use the plugin recipe Retrain object detection model to learn from the labeled images. This will allow us to predict the missing labels. This recipe requires three inputs:

The pre-trained model,

A folder containing images, or objects, we want to detect, and

A dataset containing bounding box labels.

From the + Recipe dropdown, select Object detection in images, then select the Retrain object detection model recipe.

In the create recipe dialog, select Objects to detect as the images folder, and Object_labels as the dataset of labeled bounding box coordinates. Then select coco_weights as the initial weights to use. Create a new output folder called images_weights. Click Create Folder, then click Create.

In the Retrain object detection model dialog, set the Dataset With Labels settings as follows:

Image filename column: path

Label column: class_name

x1 column: x1

y1 column: y1

x2 column: x2

y2 column: y2
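The column mapping above implies that each row of the labels dataset describes one bounding box. As a rough sketch, the following Python checks a row for the six columns and for sensible coordinates. It assumes that (x1, y1) is the top-left corner and (x2, y2) the bottom-right corner, which is a common convention but is not stated in this lesson. The function name `check_label_row` and the sample file names are hypothetical.

```python
REQUIRED_COLUMNS = ("path", "class_name", "x1", "y1", "x2", "y2")


def check_label_row(row):
    """Return a list of problems found in one bounding-box label row."""
    problems = [f"missing column: {c}" for c in REQUIRED_COLUMNS if c not in row]
    if problems:
        return problems
    # Assumption: (x1, y1) is the top-left corner, (x2, y2) the bottom-right.
    if not row["x1"] < row["x2"]:
        problems.append("x1 must be smaller than x2")
    if not row["y1"] < row["y2"]:
        problems.append("y1 must be smaller than y2")
    if min(row["x1"], row["y1"]) < 0:
        problems.append("coordinates must not be negative")
    return problems


# Hypothetical sample rows; the second one has its x coordinates swapped.
rows = [
    {"path": "lion_01.jpg", "class_name": "lion", "x1": 12, "y1": 30, "x2": 200, "y2": 180},
    {"path": "tiger_07.jpg", "class_name": "tiger", "x1": 150, "y1": 40, "x2": 90, "y2": 210},
]

for row in rows:
    print(row["path"], check_label_row(row) or "ok")
```

A check like this can catch swapped or missing coordinates before a long training run starts.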

Set the Configuration settings as follows:

Minimum/maximum file size > 800 x 1737

Set the Training settings as follows:

Number of epochs > 8

Select Reduce LR on plateau

Save the recipe, then click Run.
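For readers curious about the Reduce LR on plateau option, the general idea is to lower the learning rate when the loss stops improving. The sketch below illustrates that idea in plain Python. It is not the plugin’s implementation, and the `factor` and `patience` parameters and the loss values are assumptions made for the example.

```python
def reduce_lr_on_plateau(losses, lr, factor=0.5, patience=2):
    """Return the learning rate used at each epoch.

    After `patience` consecutive epochs without a new best loss,
    the learning rate is multiplied by `factor`.
    """
    best = float("inf")
    stale = 0  # epochs since the last improvement
    history = []
    for loss in losses:
        history.append(lr)
        if loss < best:
            best = loss
            stale = 0
        else:
            stale += 1
            if stale >= patience:
                lr *= factor
                stale = 0
    return history


# Hypothetical per-epoch losses for an eight-epoch run.
losses = [2.0, 1.5, 1.4, 1.45, 1.42, 1.41, 1.30, 1.31]
history = reduce_lr_on_plateau(losses, lr=0.01)

for epoch, (loss, rate) in enumerate(zip(losses, history), start=1):
    print(f"epoch {epoch}: loss={loss:.2f} lr={rate}")
```

In this made-up run, the loss fails to beat its best value in epochs 4 and 5, so the learning rate is halved from epoch 6 onward.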

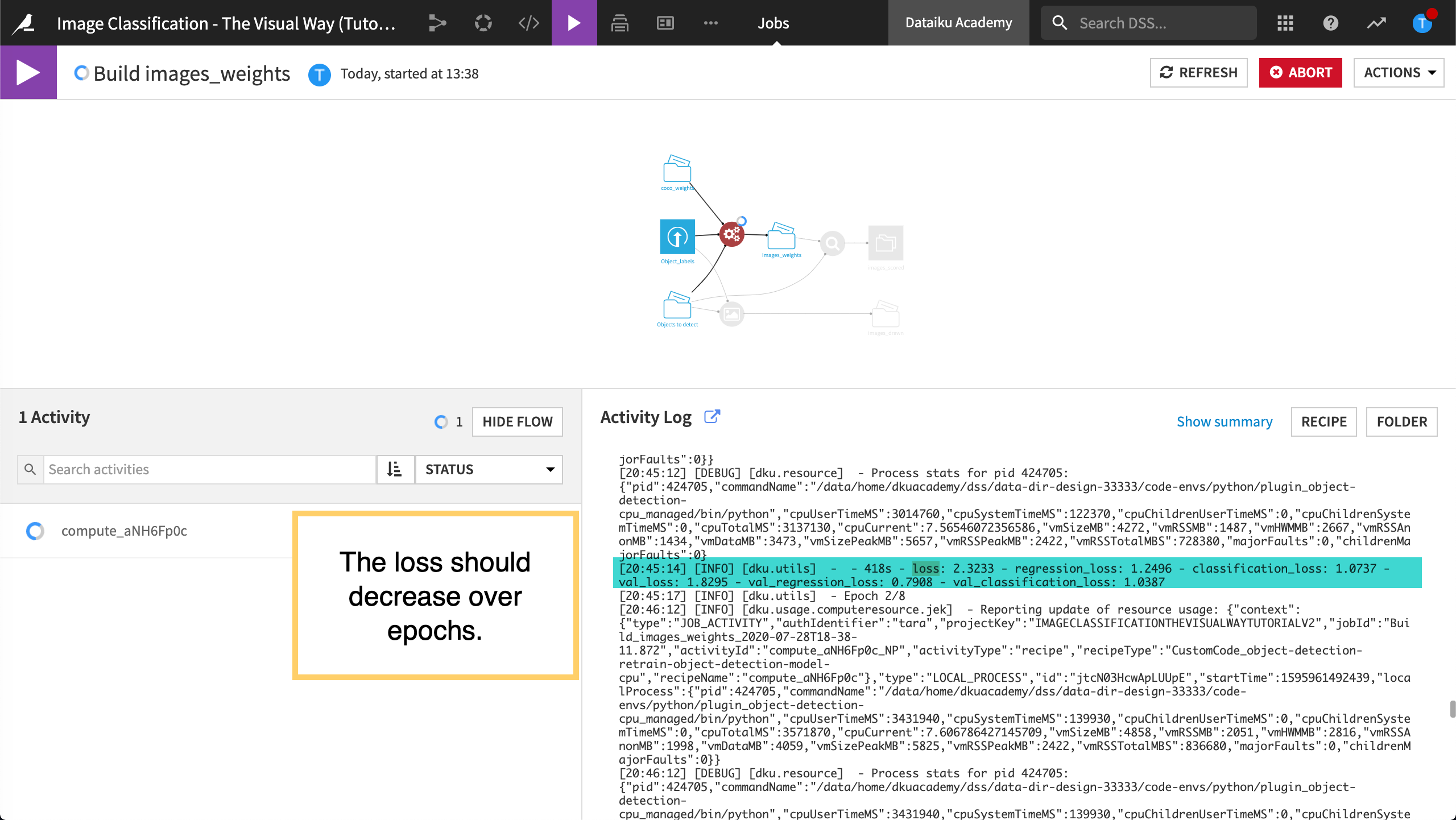

Since this computation is time-consuming, let’s view the loss evolution over epochs while the recipe is running.

View the Loss Evolution Over Epochs

While our Retrain object detection model recipe is running, let’s monitor the job.

Note

Number of epochs is the number of times that the model will run through the entire training dataset. For example, eight epochs means the model will run through the training dataset, Object_labels, eight times.

In the Jobs menu, click to view the most recent job in the left panel.

Then click to view the Activity log, to monitor the loss for each epoch.

The loss is a metric that measures the error of the model. Tracking the loss over epochs shows how the model’s performance evolves during training. By searching the log for “epoch”, we can see that the loss is decreasing for the first three epochs. If the loss starts to increase, we might want to stop training earlier by setting a lower number of epochs.
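The rule of thumb above can be sketched in a few lines of Python: given the loss read from the log for each epoch, find the first epoch at which it rises. The function name `first_increase` and the loss values are hypothetical. The log format is not assumed here, so the losses are entered by hand.

```python
def first_increase(losses):
    """Return the 1-based epoch at which the loss first rises, or None."""
    for index in range(1, len(losses)):
        if losses[index] > losses[index - 1]:
            return index + 1  # convert the 0-based index to an epoch number
    return None


# Hypothetical losses copied by hand from the Activity log, one per epoch.
losses = [2.10, 1.60, 1.30, 1.40, 1.45]

epoch = first_increase(losses)
if epoch is None:
    print("The loss decreased at every epoch.")
else:
    print(f"The loss first increased at epoch {epoch}; "
          f"consider training for {epoch - 1} epochs.")
```

A single small increase can be noise, so in practice it is worth looking at the overall trend before lowering the number of epochs.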

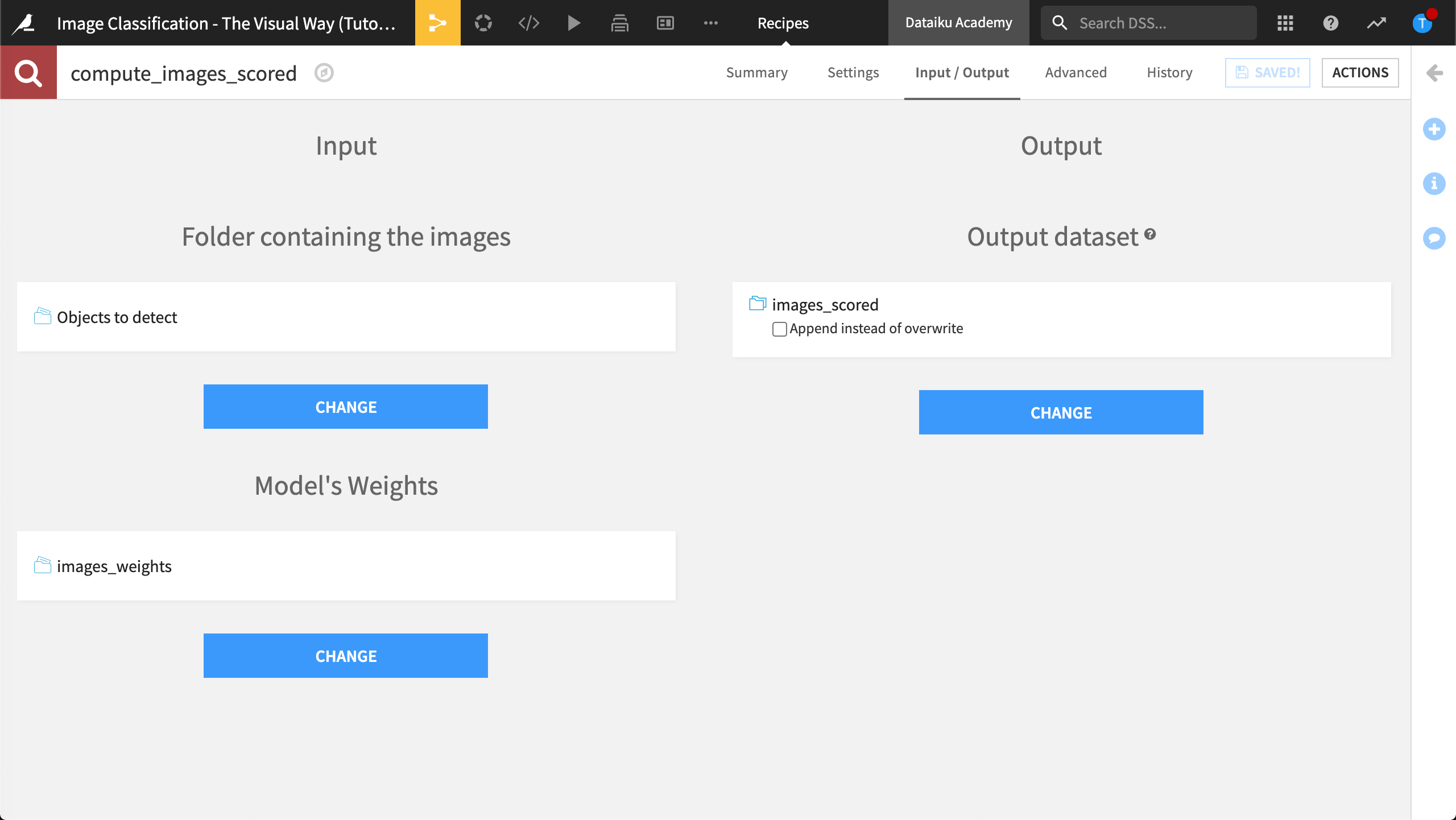

Score Images

Let’s create a dataset containing the locations of all found objects in our Objects to detect folder.

Return to the Flow.

From the + Recipe dropdown, select Object detection in images.

Select the Detect objects in images recipe.

In the create recipe dialog, select Objects to detect as the images folder, and images_weights as the model’s weights. Create a new output dataset called images_scored. Click Create Dataset, then click Create.

Keep the configuration settings, then click Run.

Explore Prediction Labels

In the Flow, select the images_scored dataset.

Analyze the class_name column to view the count of lion images vs. tiger images.

Analyze the confidence column to view the minimum value (minimum confidence).
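The same two checks can be expressed outside the interface. The sketch below uses a handful of hypothetical rows standing in for the images_scored dataset. The column names class_name and confidence come from this lesson, while the path column, the file names, and all values are assumptions.

```python
from collections import Counter

# Hypothetical rows standing in for the images_scored dataset.
rows = [
    {"path": "img_01.jpg", "class_name": "lion", "confidence": 0.91},
    {"path": "img_02.jpg", "class_name": "tiger", "confidence": 0.64},
    {"path": "img_03.jpg", "class_name": "lion", "confidence": 0.78},
    {"path": "img_03.jpg", "class_name": "tiger", "confidence": 0.55},
]

# Count detections per class, as the Analyze window does for class_name.
class_counts = Counter(row["class_name"] for row in rows)

# Find the least confident detection, as Analyze does for confidence.
min_confidence = min(row["confidence"] for row in rows)

print(dict(class_counts))
print(f"minimum confidence: {min_confidence}")
```

Because one image can contain several objects, the counts here are counts of detections, which may differ from the number of images.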

Note

In this tutorial, a small number of images is used to help reduce processing time. However, a real-world dataset is likely to contain a much larger number of images. This would likely increase the minimum score but would also increase processing time.

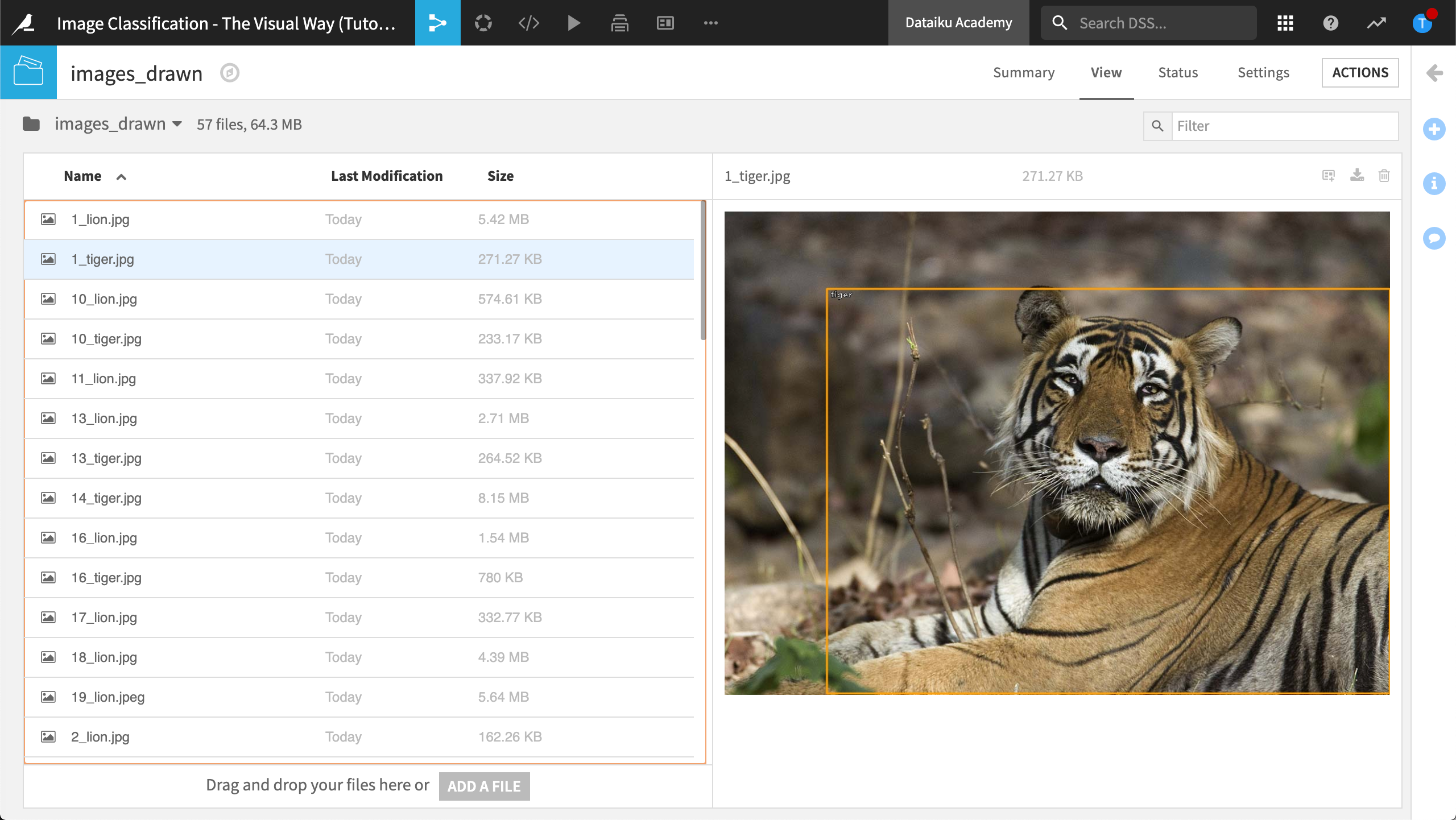

Draw Bounding Boxes

Let’s draw bounding boxes around the detected objects in our images to help visualize how our model is doing.

Return to the Flow.

From the + Recipe dropdown, select Object detection in images.

Select the Draw bounding boxes recipe.

In the create recipe dialog, select Objects to detect as the images folder, and Object_labels as the associated bounding boxes. Create a new output folder called images_drawn. Click Create Folder then click Create.

In the Draw bounding boxes dialog, ensure the Draw label checkbox is selected, then click Run.

Explore Images With Bounding Boxes

Let’s explore the images with the newly drawn bounding boxes.

In the Flow, select the images_drawn folder.

Select an image to view the bounding box.

What’s Next

You have completed the image classification hands-on lessons and learned how to extend the project from classifying whole images to detecting objects within images. If you have registered for the course, you can test your knowledge by completing the course checkpoint. You can even try image classification on your own datasets!