Concept: Schema Propagation & Consistency Checks

Every dataset in Dataiku DSS has a schema that describes its structure: the list of its columns, each with a name and a storage type. While we design a Flow, the schemas of our datasets often change.
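As a rough illustration, the Python sketch below models a schema as an ordered list of columns, each with a name and a storage type. The `Column` and `Schema` classes, the column names, and the storage type strings are hypothetical choices for this example; they are not part of the Dataiku DSS API.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Column:
    """One column: a name plus a storage type (hypothetical model)."""
    name: str
    type: str  # storage type, e.g. "string", "bigint", "double"


@dataclass
class Schema:
    """A dataset schema: an ordered list of columns."""
    columns: List[Column]

    def names(self) -> List[str]:
        return [c.name for c in self.columns]

    def to_dict(self) -> Dict[str, List[Dict[str, str]]]:
        # Plain-dict form, convenient for printing or JSON serialization.
        return {"columns": [{"name": c.name, "type": c.type} for c in self.columns]}


# Hypothetical schema for a transactions dataset.
transactions = Schema([
    Column("transaction_id", "string"),
    Column("purchase_amount", "double"),
    Column("card_id", "string"),
])
print(transactions.names())  # ['transaction_id', 'purchase_amount', 'card_id']
```

Keeping the columns in a list rather than a dict preserves their order, which in this model is part of the dataset's structure.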

Changes that can affect the schemas of output or downstream datasets in our Flow include the following:

Changes in a recipe, such as adding and removing columns, renaming columns, or changing the storage type of a column

Changes to the columns of source datasets
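The kinds of change listed above can be detected by comparing a schema before and after an edit. The following sketch, a hypothetical helper rather than Dataiku DSS code, represents each schema as an ordered list of `(name, storage type)` pairs and reports added, removed, and retyped columns. Because it identifies columns only by name, it reports a rename as one removed column plus one added column.

```python
from typing import Dict, List, Tuple

Schema = List[Tuple[str, str]]  # ordered (column name, storage type) pairs


def diff_schemas(old: Schema, new: Schema) -> Dict[str, list]:
    """Classify the differences between two schemas (hypothetical helper)."""
    old_types = dict(old)
    new_types = dict(new)
    return {
        "added": [n for n, _ in new if n not in old_types],
        "removed": [n for n, _ in old if n not in new_types],
        "type_changed": [
            (n, old_types[n], new_types[n])
            for n, _ in new
            if n in old_types and old_types[n] != new_types[n]
        ],
    }


before = [("card_id", "string"), ("amount", "string"), ("city", "string")]
after = [("card_id", "string"), ("amount", "double"),
         ("merchant_city", "string"), ("is_fraud", "boolean")]
print(diff_schemas(before, after))
# {'added': ['merchant_city', 'is_fraud'], 'removed': ['city'],
#  'type_changed': [('amount', 'string', 'double')]}
```

Here the rename of `city` to `merchant_city` appears as a removal and an addition, which is why a rename can break downstream recipes that still refer to the old name.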

Schema changes are to be expected while designing a Flow. Once a Flow is in production, however, we need to be acutely aware of any schema change and of the impact it may have on downstream datasets.

Every schema change eventually needs to be propagated downstream in the Flow. To build a Flow with consistent dataset schemas, there are tools we can leverage.

Tip

This content is also included in a free Dataiku Academy course on Flow Views & Actions, which is part of the Advanced Designer learning path. Register for the course there if you’d like to track and validate your progress alongside concept videos, summaries, hands-on tutorials, and quizzes.

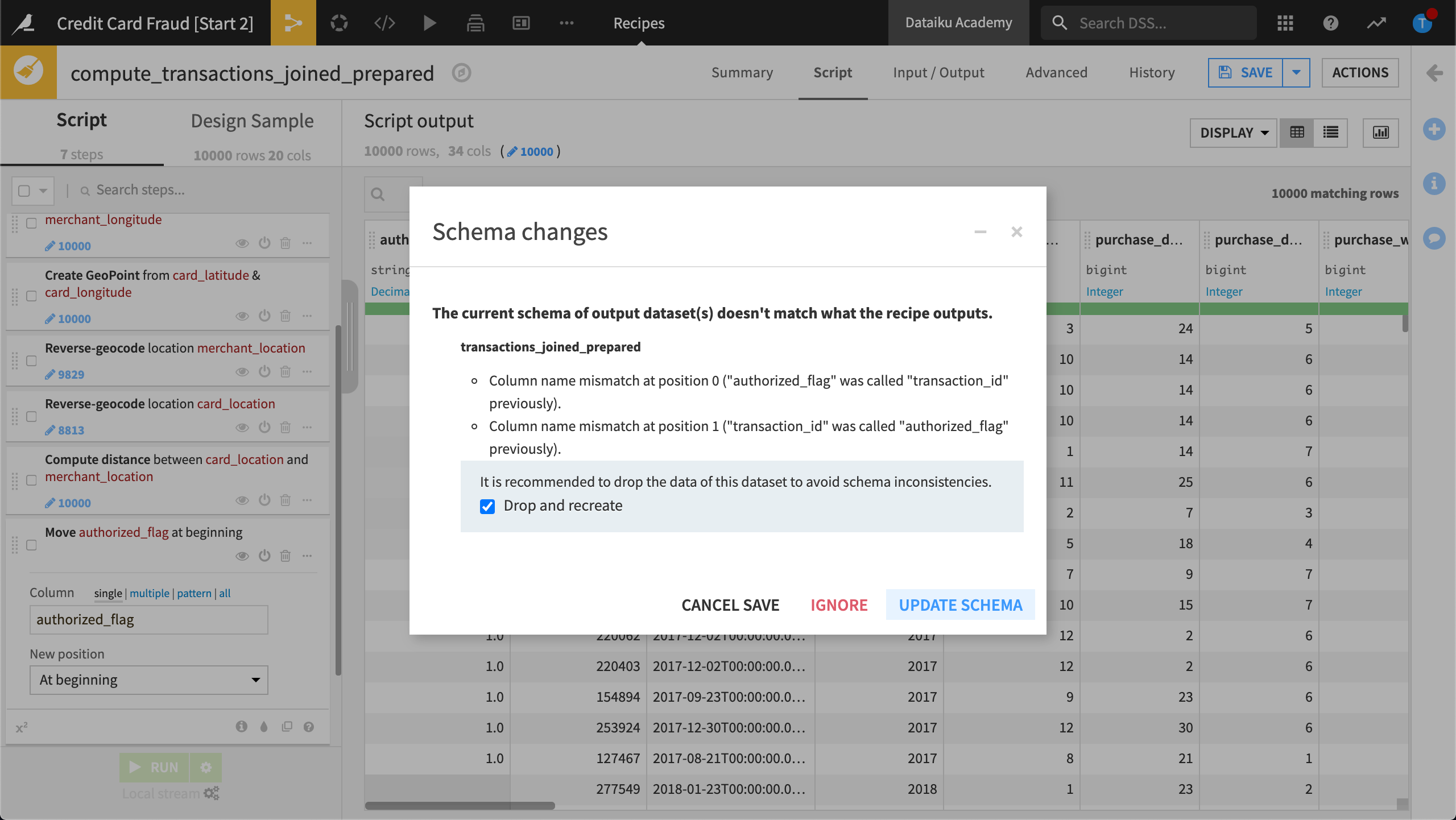

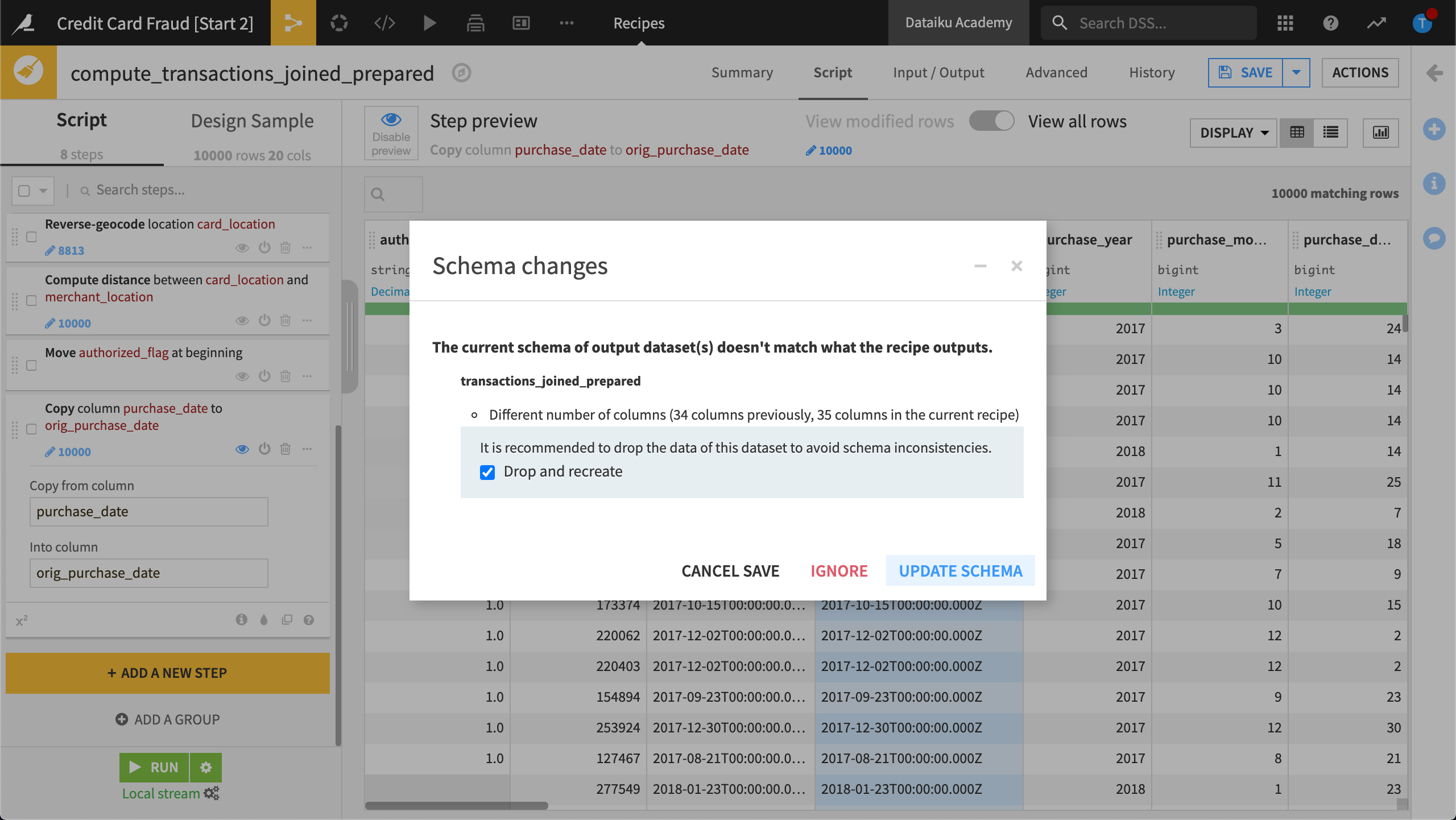

Propagate Schema Changes from Inside a Recipe

One way to propagate schema changes is directly from a recipe’s editor screen. Whenever we save or run a recipe, Dataiku DSS performs a schema check.

If our change modifies the output dataset’s schema, we will see a warning about the schema change, with an option to update the output dataset’s schema.
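Conceptually, the save-time check compares the schema the recipe would produce with the schema currently stored on the output dataset. The sketch below is a simplified model under that assumption: `check_on_save` and its `accept_update` flag are hypothetical names, with `accept_update` standing in for the user's answer to the warning.

```python
from typing import List, Tuple

Schema = List[Tuple[str, str]]  # ordered (column name, storage type) pairs


def check_on_save(recipe_output: Schema, dataset_schema: Schema,
                  accept_update: bool) -> Schema:
    """Simplified save-time schema check (hypothetical, not DSS internals).

    Returns the schema the output dataset ends up with.
    """
    if recipe_output == dataset_schema:
        return dataset_schema  # schemas already agree: nothing to report
    print("Warning: the output dataset's schema will change")
    print(f"  current: {dataset_schema}")
    print(f"  new:     {recipe_output}")
    # Only overwrite the stored schema if the user accepts the update.
    return recipe_output if accept_update else dataset_schema


current = [("card_id", "string"), ("amount", "double")]
computed = current + [("amount_usd", "double")]
updated = check_on_save(computed, current, accept_update=True)
print(updated == computed)  # True
```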

Propagate Schema Changes from Outside of a Recipe

Dataiku DSS does not perform the schema check automatically when we build a dataset from outside the recipe editor, for example:

from the dataset’s “Actions” menu

from the “Flow Actions” menu

by running a scheduled job in a scenario

by calling an API that rebuilds the dataset from an external system

However, there are tools available to help us.

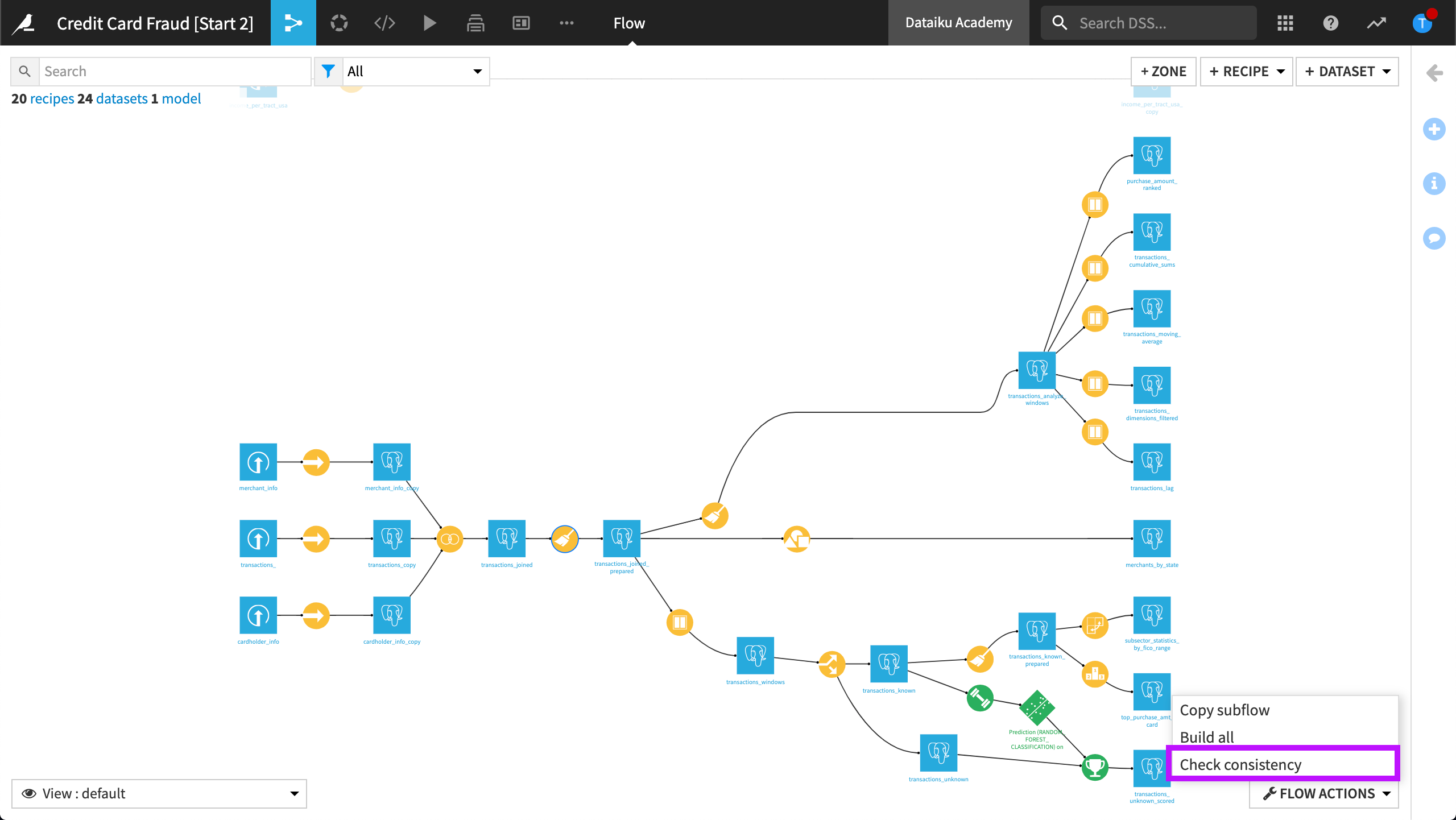

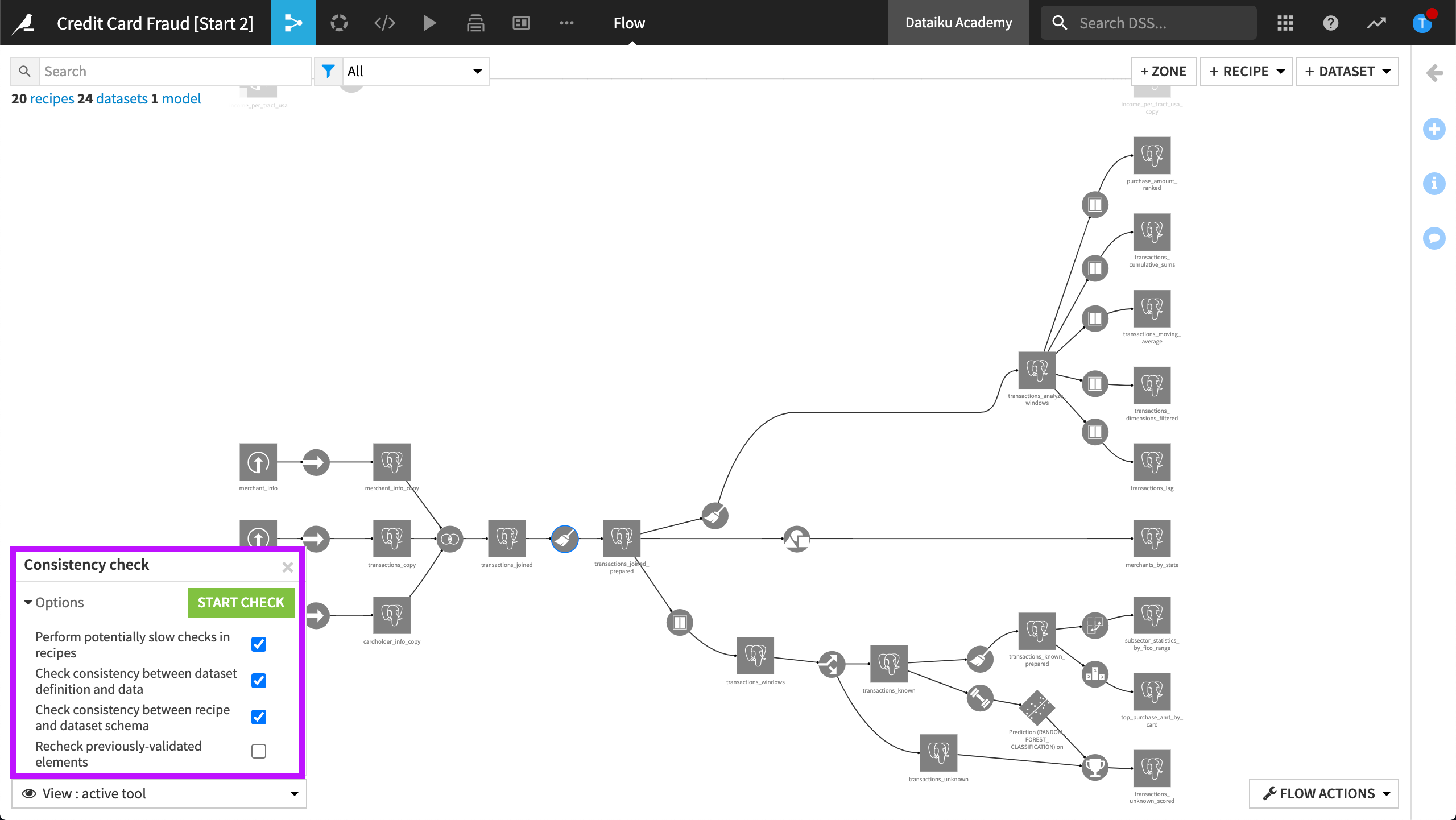

Schema Consistency Check Tool

One tool available to us is the Schema Consistency Check tool.

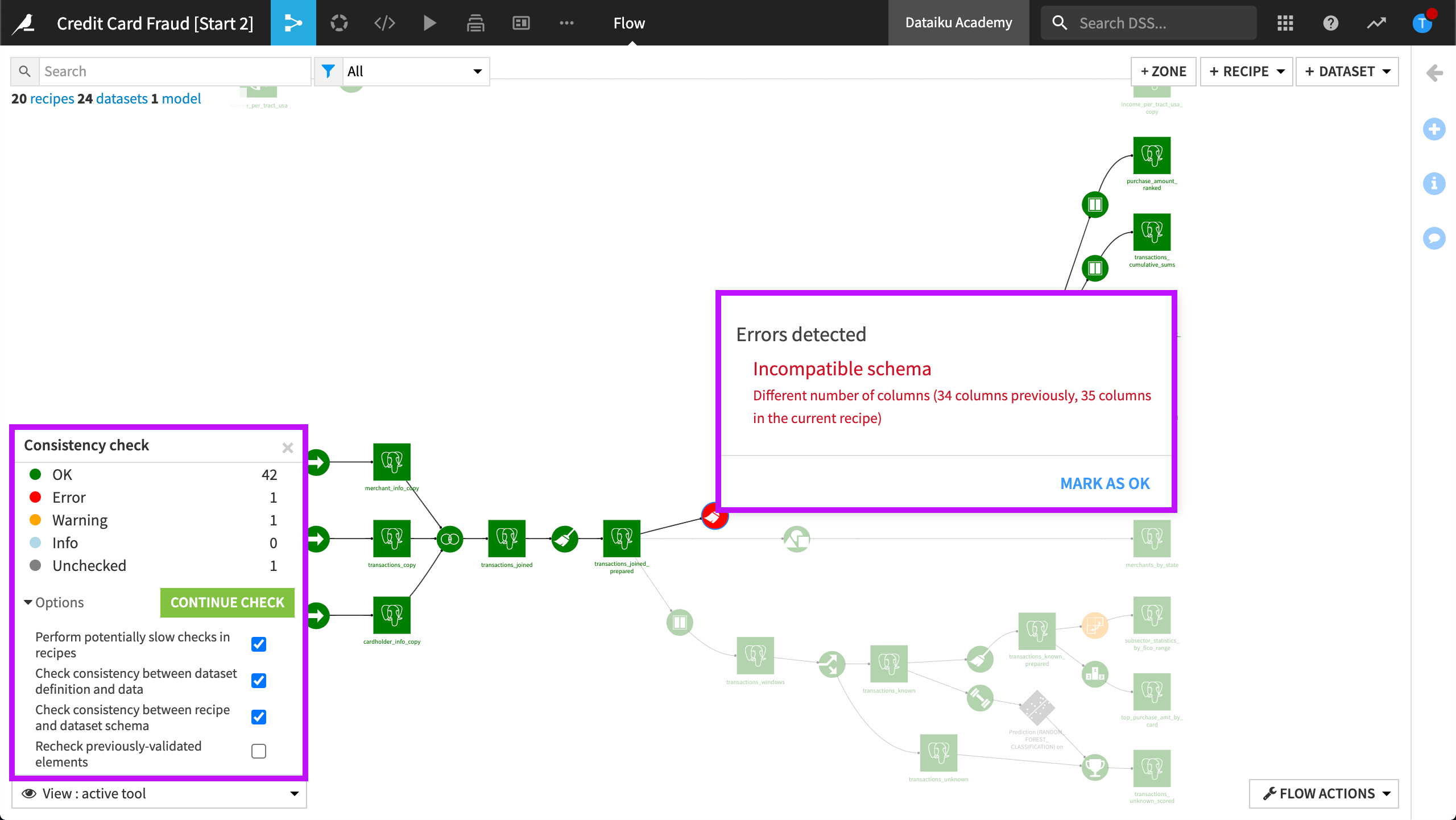

In this example, we add a column to the compute_transactions_joined_prepared Prepare recipe and accept the schema update prompted from within the recipe.

Then, we run the schema consistency check tool.

Dataiku DSS displays four options:

Perform potentially slow checks in recipes. This option performs computationally intensive checks.

Check consistency between dataset definition and data. This option checks whether the schema of our dataset has been changed without the underlying data being updated. It is useful if we manually change the schema of a dataset from its Settings tab.

Check consistency between recipe and dataset schema. This option performs a consistency check between the schema of the recipe’s output preview and the schema of the output dataset.

Recheck previously-validated elements. This option re-runs all the checks, even on previously validated elements.

In our example, the tool has detected an error in a downstream recipe, the compute_transactions_analyze_windows Prepare recipe. Clicking the recipe shows the error details: Dataiku DSS has detected a change in the number of columns.
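The options above and the column-count error can be mimicked with a small model. In this sketch, which is hypothetical and omits the slow recipe checks, each dataset carries the schema declared in its settings and the schema actually found in its data, and each recipe carries the schema of its output preview. The `validated` set plays the role of previously validated elements, which are skipped unless `recheck` is true. The dataset name `transactions_analyze_windows` and its columns are invented for the example.

```python
from dataclasses import dataclass
from typing import Dict, List, Set, Tuple

Schema = List[Tuple[str, str]]  # ordered (column name, storage type) pairs


@dataclass
class Dataset:
    declared: Schema  # schema stored in the dataset's settings
    data: Schema      # schema of the data actually stored


@dataclass
class Recipe:
    output_preview: Schema  # schema the recipe would produce
    output: str             # name of its output dataset


def consistency_check(datasets: Dict[str, Dataset], recipes: Dict[str, Recipe],
                      validated: Set[str], check_data: bool = True,
                      check_recipes: bool = True, recheck: bool = False) -> List[str]:
    """Return problems found; elements that pass are added to `validated`."""
    problems: List[str] = []
    if check_data:  # dataset definition vs. data
        for name, ds in datasets.items():
            key = f"dataset:{name}"
            if key in validated and not recheck:
                continue  # previously validated: skip unless rechecking
            if ds.declared != ds.data:
                problems.append(f"dataset {name}: definition does not match data")
            else:
                validated.add(key)
    if check_recipes:  # recipe output preview vs. output dataset schema
        for name, rc in recipes.items():
            key = f"recipe:{name}"
            if key in validated and not recheck:
                continue
            declared = datasets[rc.output].declared
            if rc.output_preview != declared:
                problems.append(
                    f"recipe {name}: produces {len(rc.output_preview)} columns, "
                    f"output dataset declares {len(declared)}"
                )
            else:
                validated.add(key)
    return problems


cols = [("card_id", "string"), ("amount", "double")]
datasets = {"transactions_analyze_windows": Dataset(declared=cols, data=cols)}
recipes = {
    "compute_transactions_analyze_windows": Recipe(
        output_preview=cols + [("amount_avg", "double")],
        output="transactions_analyze_windows",
    )
}
validated: Set[str] = set()
for problem in consistency_check(datasets, recipes, validated):
    print(problem)
```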

Note

Because schema changes are not automatically detected when a scenario rebuilds datasets, scenarios offer a “Consistency check” step.
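The reason for this step can be sketched as a scenario whose build only runs if an explicit check passes. This is a hypothetical model, not the scenario engine: `run_scenario`, its callables, and the rule that a failed check stops the run are all assumptions of the example.

```python
from typing import Callable, List


def run_scenario(check: Callable[[], List[str]], build: Callable[[], None]) -> str:
    """Two-step scenario: a consistency check gates the build (hypothetical)."""
    problems = check()  # step 1: explicit consistency check
    if problems:
        for problem in problems:
            print("Consistency check failed:", problem)
        return "FAILED"  # in this sketch, a failed check stops the run
    build()  # step 2: rebuild datasets; no schema check happens here
    return "SUCCESS"


built: List[str] = []
status = run_scenario(
    check=lambda: ["recipe compute_transactions_analyze_windows: column count changed"],
    build=lambda: built.append("transactions_analyze_windows"),
)
print(status, built)  # FAILED []
```

Without the first step, the build would run silently against an out-of-date schema.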

Schema Propagation Tool

It would be tedious to open up each recipe and manually update the schema of downstream datasets, especially when working with large Flows. This is where the schema propagation tool comes in handy!

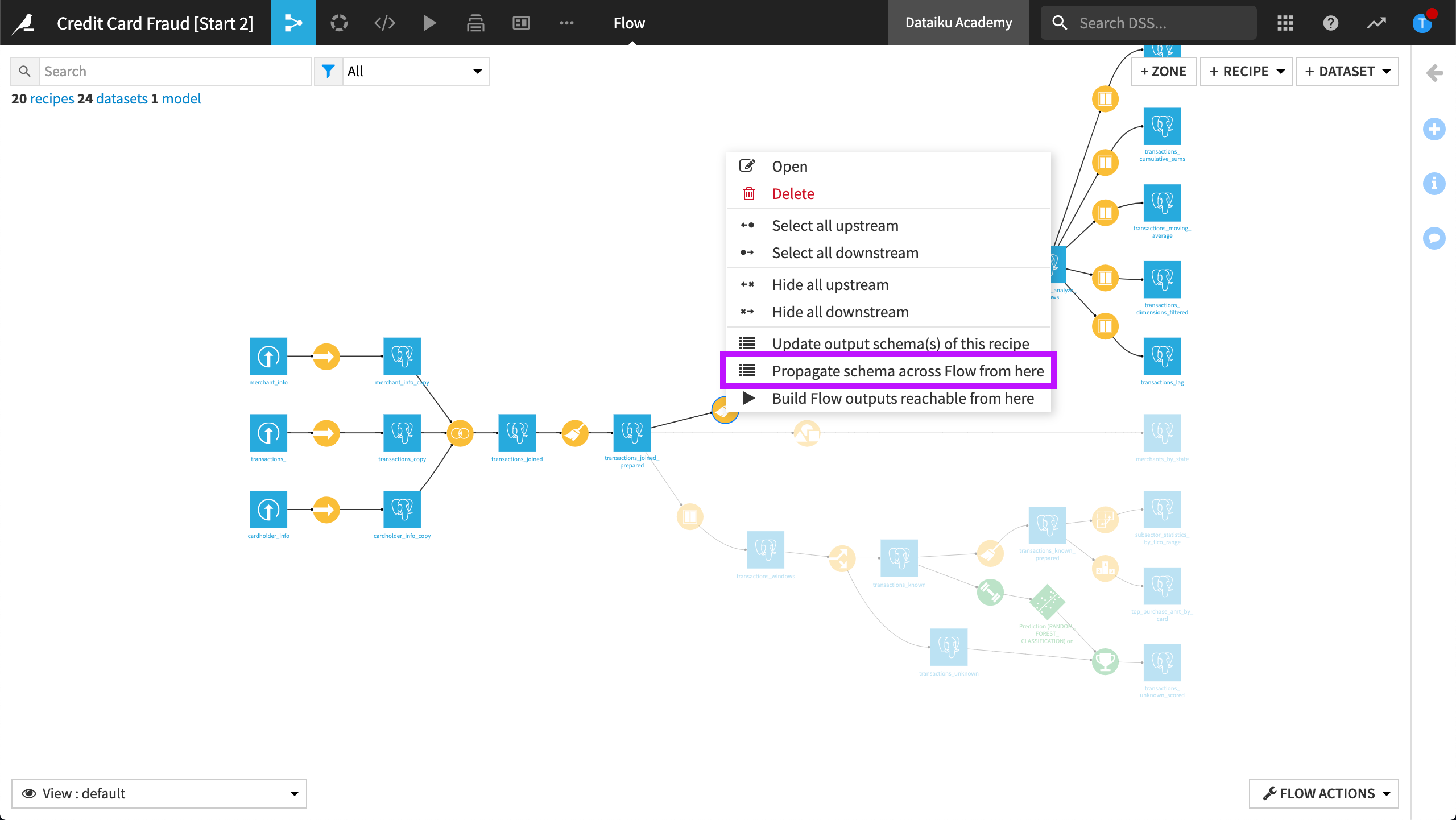

To open the schema propagation tool, we right-click the recipe at the point in the Flow where the schema needs to be updated, and then select Propagate schema across Flow from here.

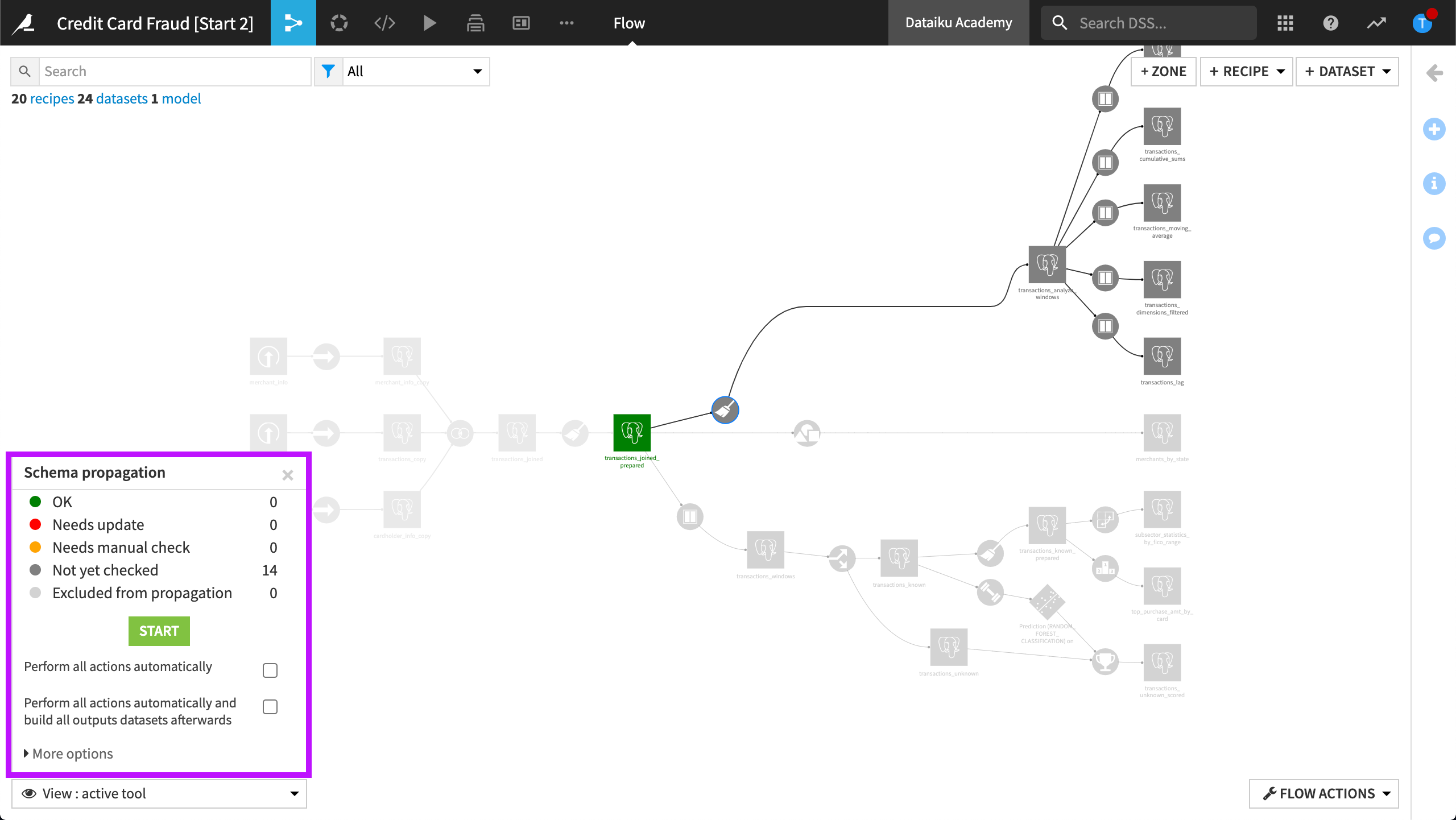

The tool detects the recipes that need updates.

Accepting the changes updates the schemas; we then need to rebuild the affected datasets.
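At its core, propagation is a walk downstream from the selected recipe: recompute each recipe's output schema from its (possibly updated) input schema, accept the change, and remember which datasets will need a rebuild. The sketch below assumes, for simplicity, that every recipe has exactly one input and one output and that each recipe's schema logic can be expressed as a Python function; the recipe and dataset names are hypothetical.

```python
from collections import deque
from typing import Callable, Dict, List, Set, Tuple

Schema = List[Tuple[str, str]]  # ordered (column name, storage type) pairs
# Hypothetical recipe: (input dataset, output dataset, schema transform).
Recipe = Tuple[str, str, Callable[[Schema], Schema]]


def propagate(start: str, recipes: Dict[str, Recipe],
              schemas: Dict[str, Schema]) -> List[str]:
    """Walk downstream from recipe `start`, accepting every schema update.

    Mutates `schemas` and returns the datasets whose schema changed,
    i.e. the datasets that must be rebuilt afterwards.
    """
    consumers: Dict[str, List[str]] = {}  # dataset -> recipes reading it
    for name, (inp, _, _) in recipes.items():
        consumers.setdefault(inp, []).append(name)

    changed: List[str] = []
    queue = deque([start])
    seen: Set[str] = set()
    while queue:
        name = queue.popleft()
        if name in seen:
            continue
        seen.add(name)
        inp, out, transform = recipes[name]
        new_schema = transform(schemas[inp])
        if new_schema != schemas.get(out):
            schemas[out] = new_schema  # "accept" the update
            changed.append(out)
        queue.extend(consumers.get(out, []))  # continue downstream
    return changed


recipes: Dict[str, Recipe] = {
    "compute_prepared": ("joined", "prepared", lambda s: s + [("amount_usd", "double")]),
    "compute_windows": ("prepared", "windows", lambda s: s + [("amount_avg", "double")]),
}
schemas = {
    "joined": [("card_id", "string")],
    "prepared": [("card_id", "string")],
    "windows": [("card_id", "string"), ("amount_avg", "double")],
}
print(propagate("compute_prepared", recipes, schemas))  # ['prepared', 'windows']
```

Running `propagate` a second time returns an empty list, because every schema is already consistent with its upstream recipe.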

Limitations

The schema propagation tool cannot verify the output schema for certain recipes without running them first.

Examples include code recipes, which update their output schemas at runtime, and the Pivot recipe, whose output schema depends on the values in its input dataset.
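To see why the Pivot recipe is a problem, consider a simplified pivot that creates one count column per distinct value of a column. The `pivot_output_columns` helper and its `<value>_count` naming are illustrative assumptions, not the Pivot recipe's exact behavior, but they show that the output columns change when the input data changes, even though no recipe setting changed.

```python
from typing import Dict, List


def pivot_output_columns(rows: List[Dict[str, str]], key: str, pivot_col: str) -> List[str]:
    """Output column names of a simple count pivot (illustrative only).

    The columns come from the *values* of `pivot_col`, so they cannot be
    known without reading the input data.
    """
    values = sorted({row[pivot_col] for row in rows})
    return [key] + [f"{v}_count" for v in values]


rows_jan = [{"card_id": "a", "category": "food"}, {"card_id": "b", "category": "travel"}]
rows_feb = rows_jan + [{"card_id": "a", "category": "games"}]
print(pivot_output_columns(rows_jan, "card_id", "category"))
# ['card_id', 'food_count', 'travel_count']
print(pivot_output_columns(rows_feb, "card_id", "category"))
# ['card_id', 'food_count', 'games_count', 'travel_count']
```

A single new category in the input adds a column to the output, which a tool reading only the recipe's settings cannot anticipate.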

Automating the Process

In addition to using the schema propagation tool to manually propagate schemas, we can also automate the process, either by:

Performing all actions automatically, so that schema propagation is done with minimal user intervention, or

Performing all actions automatically and building all output datasets afterwards. This option is similar to the previous one, with the additional benefit that it performs a standard recursive “Build All” operation to rebuild all datasets.
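The difference between the two modes can be sketched as follows. This is a hypothetical model: `automate` takes the list of datasets whose schemas the propagation updated and, when `build_all` is true, appends a build for every non-source dataset in dependency order using Python's standard `graphlib.TopologicalSorter` (Python 3.9+).

```python
from graphlib import TopologicalSorter  # Python 3.9+
from typing import Dict, List, Set


def automate(deps: Dict[str, Set[str]], changed: List[str], build_all: bool) -> List[str]:
    """Hypothetical model of the two automation modes.

    `deps` maps each dataset to the datasets it is built from, and
    `changed` lists the datasets whose schemas propagation updated.
    """
    actions = [f"update schema: {name}" for name in changed]
    if build_all:
        # Build each dataset after its inputs, like a recursive "Build All".
        for name in TopologicalSorter(deps).static_order():
            if deps.get(name):  # source datasets have no inputs and are not rebuilt
                actions.append(f"build: {name}")
    return actions


deps = {"prepared": {"joined"}, "windows": {"prepared"}}
print(automate(deps, ["prepared", "windows"], build_all=False))
print(automate(deps, ["prepared", "windows"], build_all=True))
```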

Tool Options

Additional options when propagating schema changes include the following:

Rebuild input datasets of recipes whose output schema may depend on input data. If we have no such recipes, it may be faster to unselect this option.

Rebuild output datasets of recipes whose output schema is computed at runtime. This option will ensure the rebuild of all code recipes and Pivot recipes that are set to “recompute schema on each run” in the Output step of the recipe.

When we launch the tool, all datasets that need to be rebuilt will be automatically rebuilt, as long as none of the recipes are broken.
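The two options amount to selecting extra datasets to rebuild based on how each recipe computes its output schema. The `RecipeInfo` class, its flags, and the recipe and dataset names below are hypothetical, chosen only to illustrate that selection.

```python
from dataclasses import dataclass
from typing import List, Set


@dataclass
class RecipeInfo:
    name: str
    inputs: List[str]
    outputs: List[str]
    schema_depends_on_data: bool = False      # e.g. a Pivot recipe
    schema_computed_at_runtime: bool = False  # e.g. a code recipe


def datasets_to_rebuild(recipes: List[RecipeInfo], rebuild_inputs: bool = True,
                        rebuild_runtime_outputs: bool = True) -> Set[str]:
    """Select the datasets each option would add to the rebuild (hypothetical)."""
    to_build: Set[str] = set()
    for r in recipes:
        if rebuild_inputs and r.schema_depends_on_data:
            # The output schema can only be computed from fresh input data.
            to_build.update(r.inputs)
        if rebuild_runtime_outputs and r.schema_computed_at_runtime:
            # Running the recipe is the only way to obtain its output schema.
            to_build.update(r.outputs)
    return to_build


recipes = [
    RecipeInfo("compute_scores", ["prepared"], ["scores"], schema_computed_at_runtime=True),
    # A Pivot recipe set to recompute its schema on each run.
    RecipeInfo("compute_pivoted", ["scores"], ["pivoted"],
               schema_depends_on_data=True, schema_computed_at_runtime=True),
]
print(sorted(datasets_to_rebuild(recipes)))  # ['pivoted', 'scores']
```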

Learn More

To learn more about Flow Views & Actions, including through hands-on exercises, please register for the free Academy course on this subject found in the Advanced Designer learning path.

For more information about schema propagation and rebuilding datasets in Dataiku DSS, please visit the product documentation.