Best Practices to Improve Your Productivity¶

In this series of guides we would like to pass on some experience we gained over several projects. Here we will share some productivity tips to facilitate your work.

Flow management¶

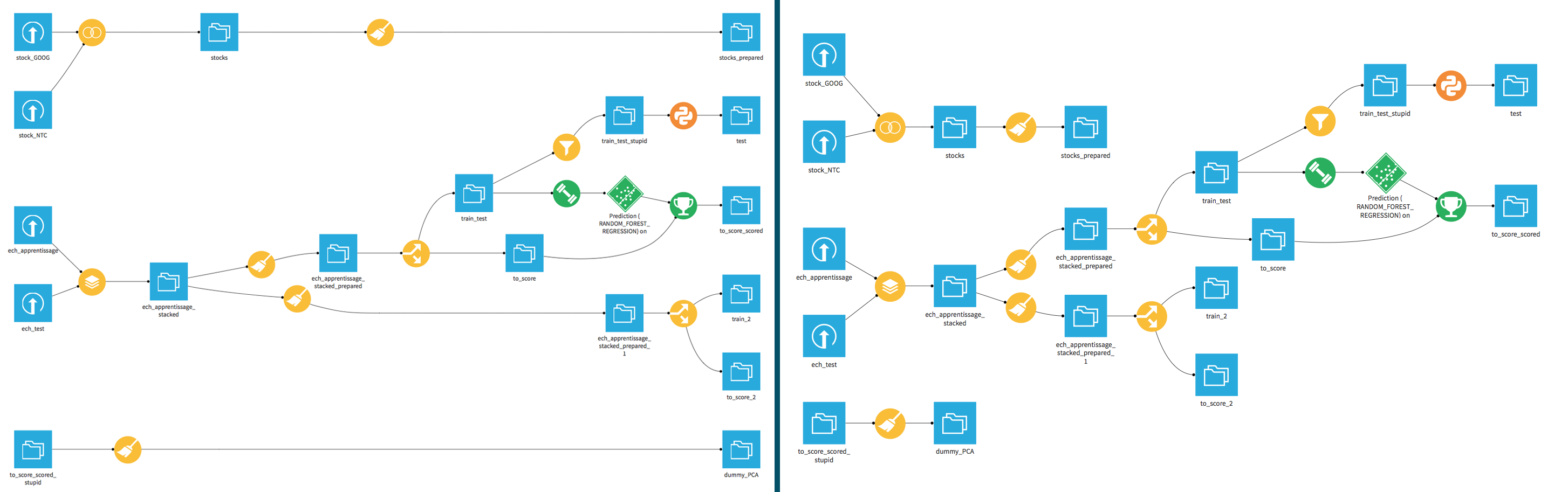

Anchoring¶

When your flow is composed of multiple short branches, it can be a pain to see them stretch all the way to the right of the screen. Of course the ideal shape for a flow is a pipe, with clear inputs and outputs, and no branches dangling. However, not all projects lead to such clean flows, and sometimes you are required to fork your work at multiple steps. In order to better visualize your flow in that case, navigate to the project homepage, click on the settings menu, under the config tab uncheck the Anchor Flow graph option.

Dataset and models exposition¶

If major parts of your flow are independent and relatively complex, you can use multiple projects to split your work. For example you could have a flow for data collection, then a flow for machine learning and analysis, and finally a flow for visualization, all in their own separate project.

In order to use the datasets of a project in another project, go to project settings → config → exposed elements and expose datasets (or models) to another project. Exposed datasets become a source element in the other project.

This is a way to split a big undertaking into a few projects and allowing each user to work in her own project.

Some users also create a “datamart” project, containing mostly datasets meant to be exposed to other projects.

Beware that the dependencies between projects can quickly become a maze, get the global picture before exposing datasets.

Refactoring¶

Refactoring will be reworked in future versions to make things easier. While most refactor operations are possible today, some require multiple steps when one would rather use a single click operation.

To keep the flow simple and clean:

Refactor from time to time.

“Temporary” copies of a recipe tend to be not so temporary… It’s OK in a hurry, but make sure you have time after the deadline to come back. If not in a hurry, it’s often better to work a bit harder to rework the flow instead of copy-pasting a recipe.

Talk! When creating a new branch in the flow, explain what it is needed for, and especially when it can be deleted.

Conversely, clean your own stuff. Factorize with what other people did in the project, to avoid reinventing the wheel.

Know what your colleagues did, ask if you may modify their work so that it suits your need too.

Doing exploratory work¶

When in need of a new code recipe (Python, R, SQL…) we recommend you to:

Create a new notebook with a meaningful name.

Interactively develop the code needed. Notebooks allow to execute code at each step, you’ll always know if you’re on the right track!

When the code is satisfying, convert it to a recipe:

for SQL, click “convert to recipe” in the notebook

for Python, click “export as .py”, copy the code, create a new Python recipe, paste the code (this might be simplified in future versions)

Rebuilding the flow¶

Rebuilding one element¶

There a a few options when building a dataset:

Build only this dataset: only one recipe is ran.

Recursive build: DSS computes which recipes need to be ran based on your choice:

Smart reconstruction: DSS walks the flow upstream (i.e. considers all datasets on the left of the current one) and for each dataset and recipe checks if it has been modified more recently than the dataset you clicked on. If it finds something, DSS rebuilds all impacted datasets down to the one you clicked on. This is the recommended default.

Forced recursive rebuild: DSS rebuilds all upstream datasets of the one you clicked on. This can be used for instance at the end of the day, to have all datasets rebuilt during the night, and start the next day with a double-checked and up to date flow.

“Missing” data only: Like “Smart reconstruction”, but DSS considers a dataset needs to be (re)built only if it’s empty. Recommended use is during development: “I don’t need the most up to date data, I just need some example data to design my recipe. Then tonight I’ll launch a forced recursive rebuild.”

Note that dependencies are always “pull” based: DSS answers the question “to build the dataset the user asked, I first need to build those datasets”.

“Propagate schema changes”¶

Sometimes it’s necessary to change the schema of a dataset in the beginning or middle of the flow: there is a new column of data available, there is a new idea for feature engineering, etc. One then wants to add/remove/rename the impacted column(s) in all downstream datasets. The feature “Propagate schema changes” has been designed for that.

Right click a dataset → tools → “Start schema check from here”, then “start” in the lower left corner. You get a new view of the flow. Now, for each recipe that needs update (in red):

Open it (preferably in a new tab)

Force a save of the recipe: hit Ctrl+s, (or modify anything, click Save, revert the change, save again). For most recipe types, saving it triggers a schema check, which will detect the need for update and offer to fix the output schema. DSS will not silently update the output schema, as it could break other recipes relying on this schema. But most of the time, the desired action is to accept and click “update schema”.

You probably need to run the recipe again

Some recipe types cannot be automatically checked, for instance Python recipes. Indeed, Python code can do pretty much anything, so it’s impossible to know the output schema of a python recipe without running it, and we don’t want to run the whole recipe just to check the schema (for theorists: we mean mathematically impossible, this is the halting problem ;-)

“Build from here”¶

There is a special feature to compute dependencies downstream, i.e. to recompute the datasets impacted by a change: right click a dataset → “Build from here”. When you do so:

DSS starts from the clicked dataset (let’s call it foo), walks the flow downstream following all branches, down to the “output” datasets.

For each of those output datasets, it builds it with the selected option: smart rebuild / build only missing datasets / force rebuild all datasets.

(This means that the dataset foo has no special meaning in dependencies computation: foo and the upstream datasets may or may not be rebuilt, depending on wether or not they are out of date with respect to the output datasets.)