Concept: Model Evaluation

This lesson contains the same information as the video, Concept: Model Evaluation.

In this lesson, we will discover some common tools and metrics that we can use to evaluate our models. The metrics described in this lesson mainly apply to classification models.

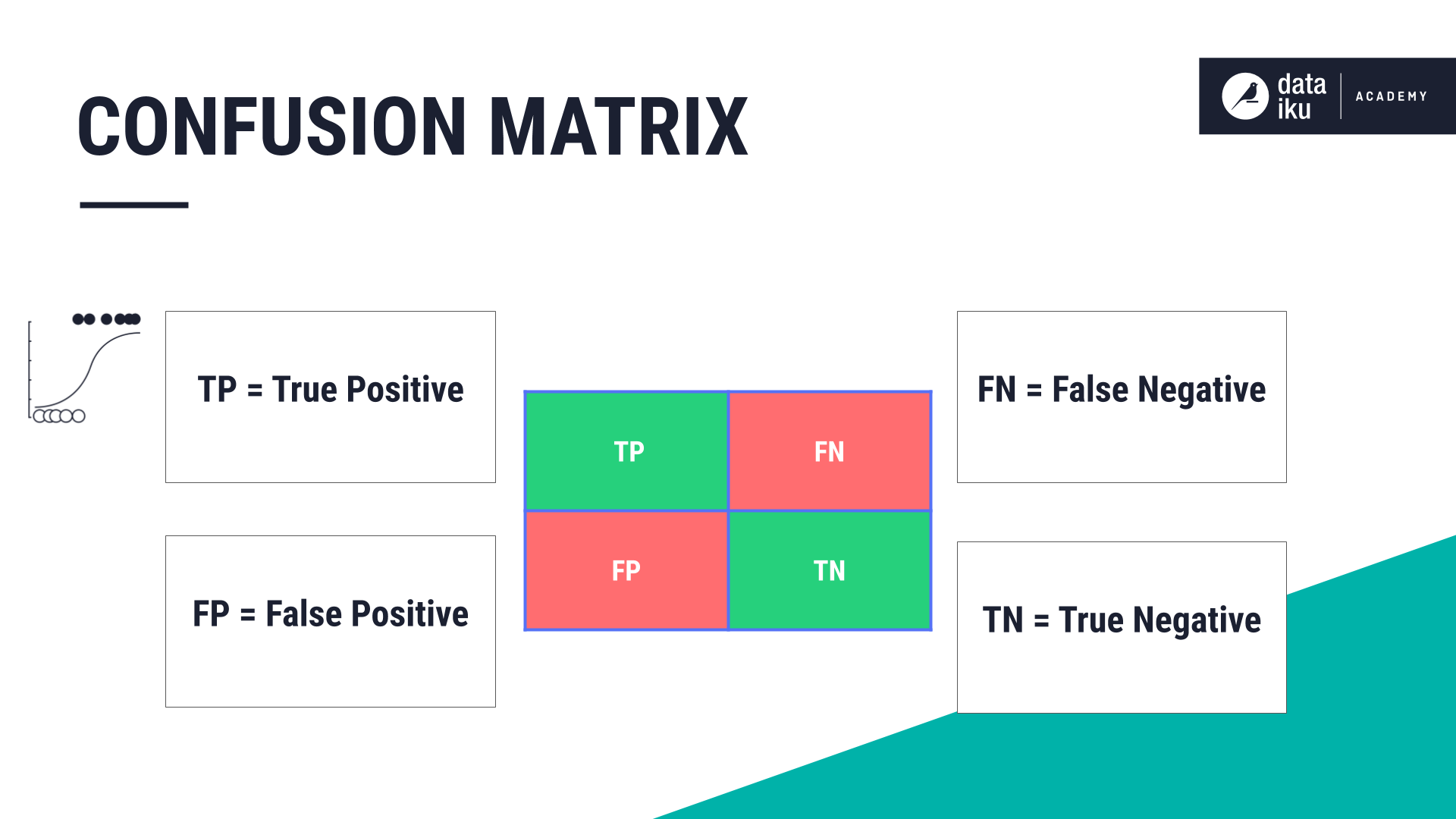

Confusion Matrix

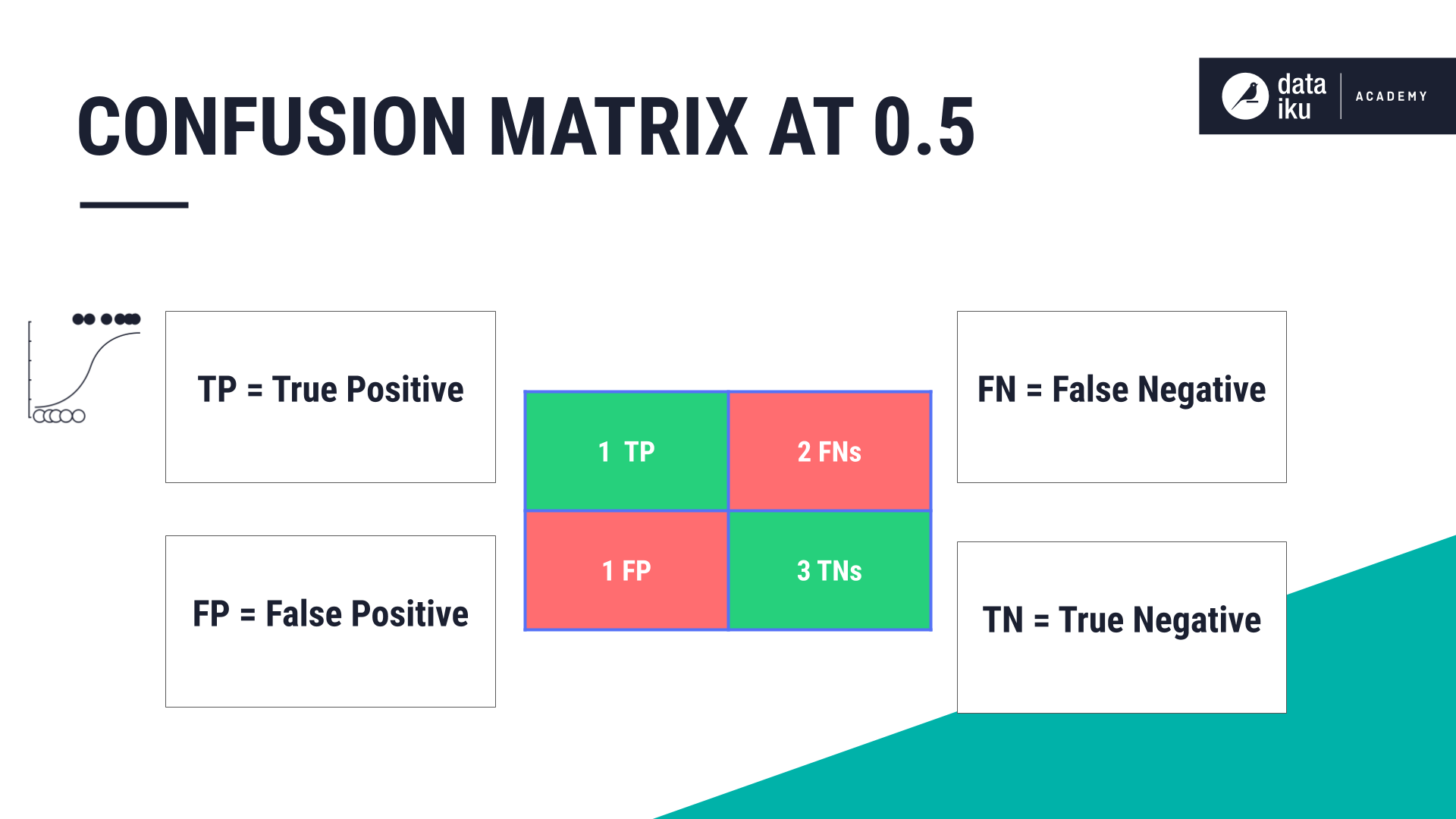

One tool used to evaluate and compare models is a simple table known as a confusion matrix. The number of columns and rows in the table depends on the number of possible outcomes. For binary classification, there are only two possible outcomes, so there are only two columns and two rows.

The four labels that make up a confusion matrix are TP (true positive), FN (false negative), FP (false positive), and TN (true negative).

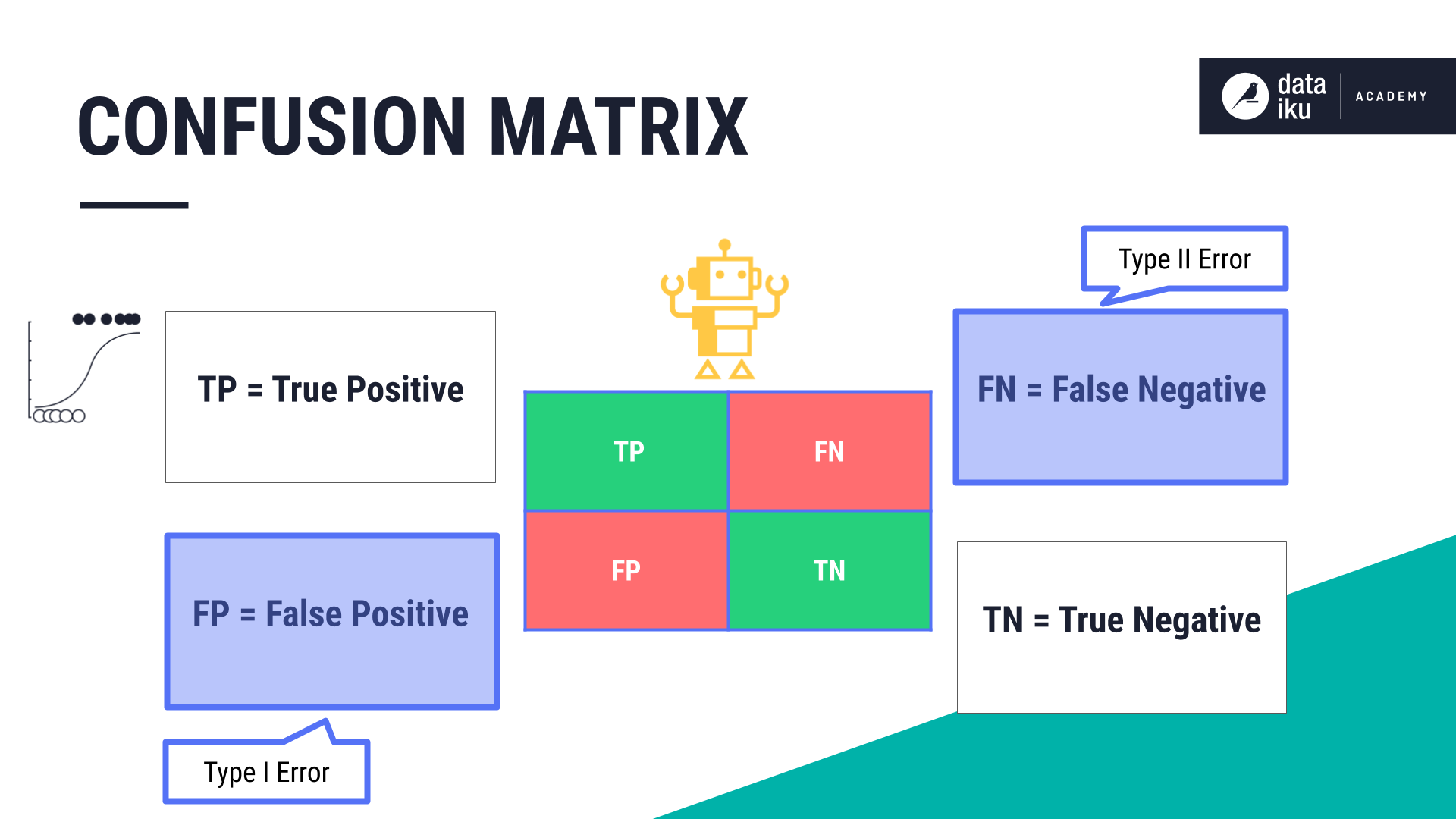

Type I and Type II Errors

There will always be errors in prediction models. When a model incorrectly predicts an outcome as true when it should have predicted false, it is labeled as FP, or false positive. This is known as a type I error. When a model incorrectly predicts an outcome as false when it should have predicted true, it is labeled as FN, or false negative. This is known as a type II error.

Depending on our use case, we have to decide if we are more willing to accept higher numbers of type I or type II errors. For example, if we were classifying patient test results as indicative of cancer or not, we would be more concerned with a high number of false negatives. In other words, we would want to minimize the number of predictions where the model falsely predicts that a patient’s test result is not indicative of cancer.

Similarly, if we were classifying a person as either guilty or not guilty of a crime, we would be more concerned with high numbers of false positives. We would want to reduce the number of predictions where the model falsely predicts that a person is guilty.

Building a Confusion Matrix

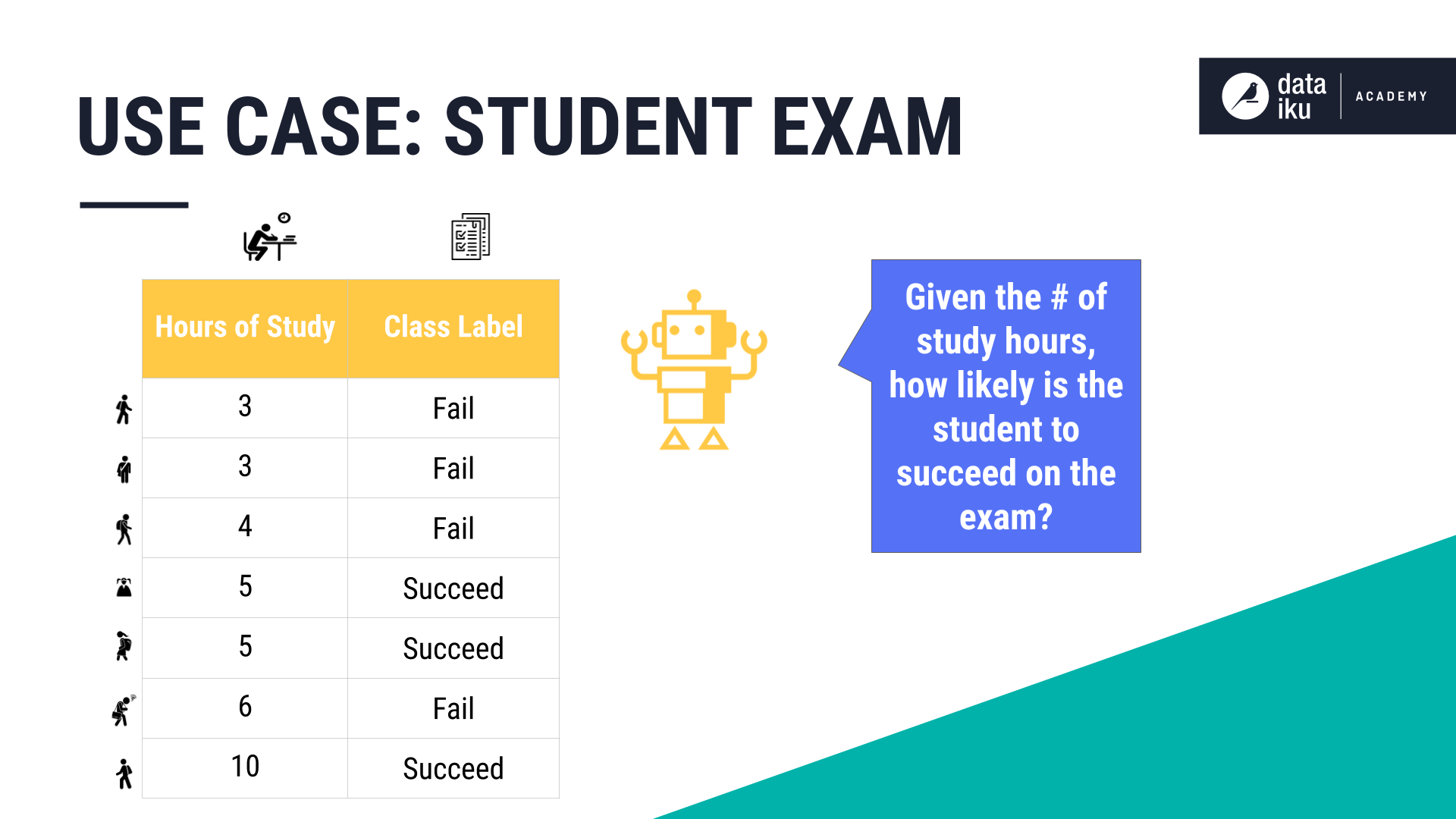

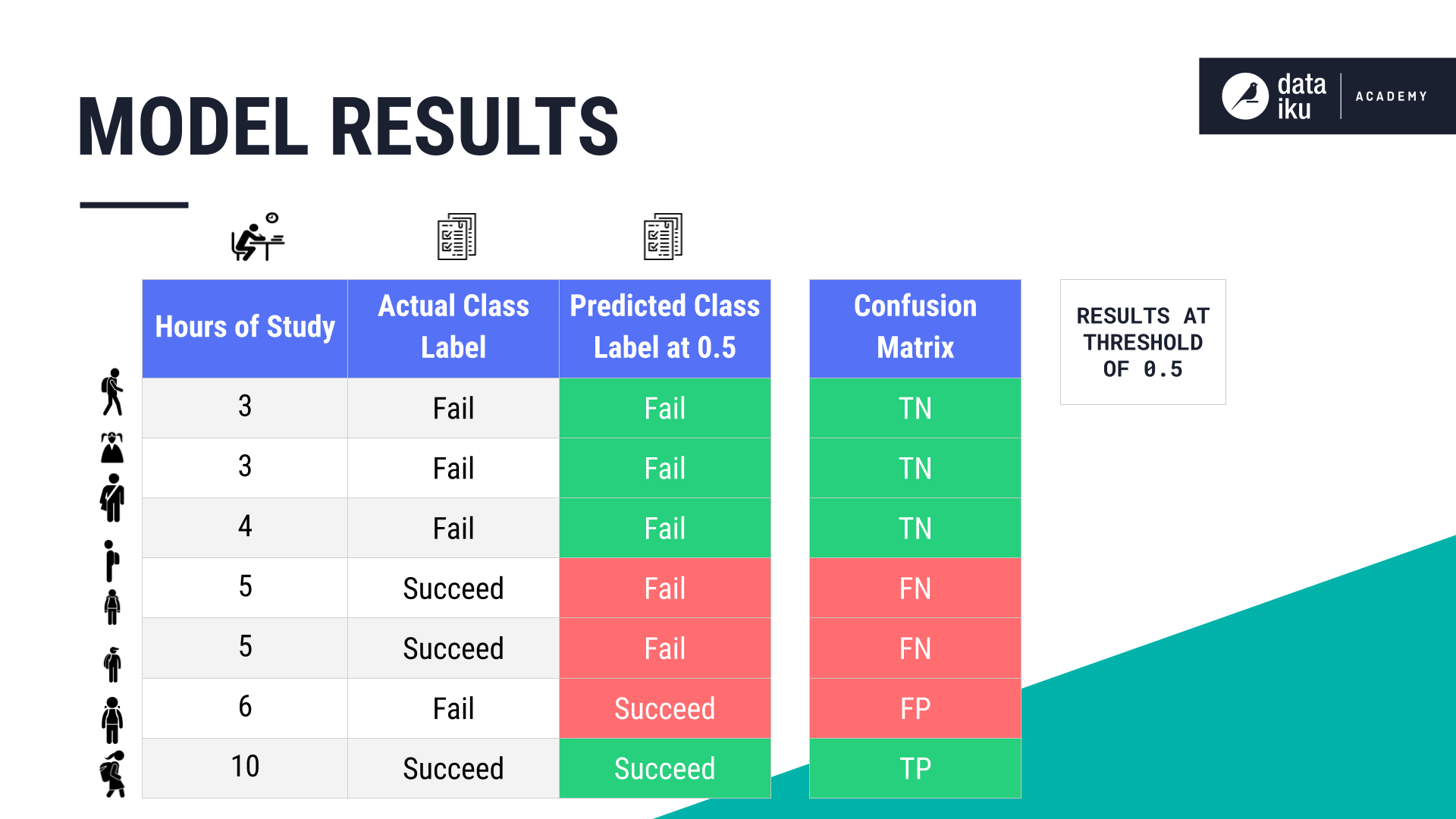

Let’s build a confusion matrix using our Student Exam Outcome use case where the input variable, or feature, is the hours of study and the outcome we are trying to predict is Succeed or Fail.

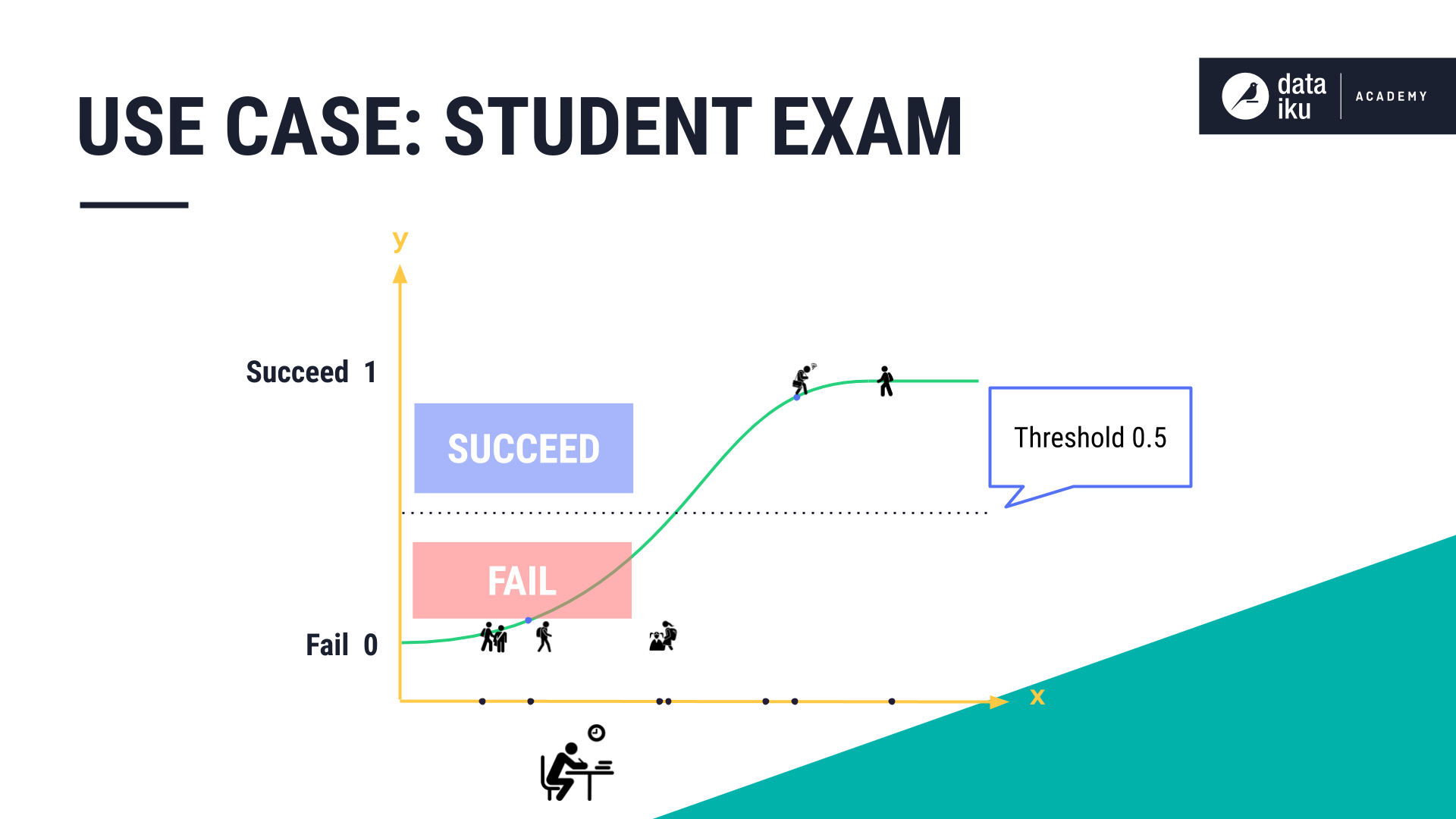

In this binary classification example, we have two classes, or outcomes: Succeed and Fail. Because we know the actual outcome of each observation fed into the model, we can check whether each prediction is correct. Our logistic regression model estimates a probability for each observation, and the threshold is the cutoff point between what gets classified as Succeed and what gets classified as Fail. We start with an initial probability threshold of “0.5”, about halfway between Fail and Succeed.

To create our confusion matrix, we refer to our results that show the actual class label, along with the predicted class label. We then assign each result one of the four labels (TP, TN, FP, and FN).

With this information, we can fill in the confusion matrix table. The model made four correct predictions: one TP and three TNs. The model also made three incorrect predictions: one FP and two FNs.
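The labeling step can be sketched in a few lines of Python. This is a minimal sketch, assuming Succeed is treated as the positive class; the function `confusion_counts` and the two label lists are hypothetical, invented only so that the counts match the example above (one TP, three TNs, one FP, two FNs).

```python
def confusion_counts(actual, predicted, positive="Succeed"):
    """Assign each (actual, predicted) pair one of TP, TN, FP, FN and count them."""
    counts = {"TP": 0, "TN": 0, "FP": 0, "FN": 0}
    for a, p in zip(actual, predicted):
        if p == positive:
            # Predicted positive: correct if actually positive, otherwise a type I error
            counts["TP" if a == positive else "FP"] += 1
        else:
            # Predicted negative: wrong if actually positive (type II error)
            counts["FN" if a == positive else "TN"] += 1
    return counts

# Hypothetical labels, made up to reproduce the lesson's counts at the 0.5 threshold
actual = ["Succeed", "Succeed", "Succeed", "Fail", "Fail", "Fail", "Fail"]
predicted = ["Succeed", "Fail", "Fail", "Succeed", "Fail", "Fail", "Fail"]
print(confusion_counts(actual, predicted))
# {'TP': 1, 'TN': 3, 'FP': 1, 'FN': 2}
```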

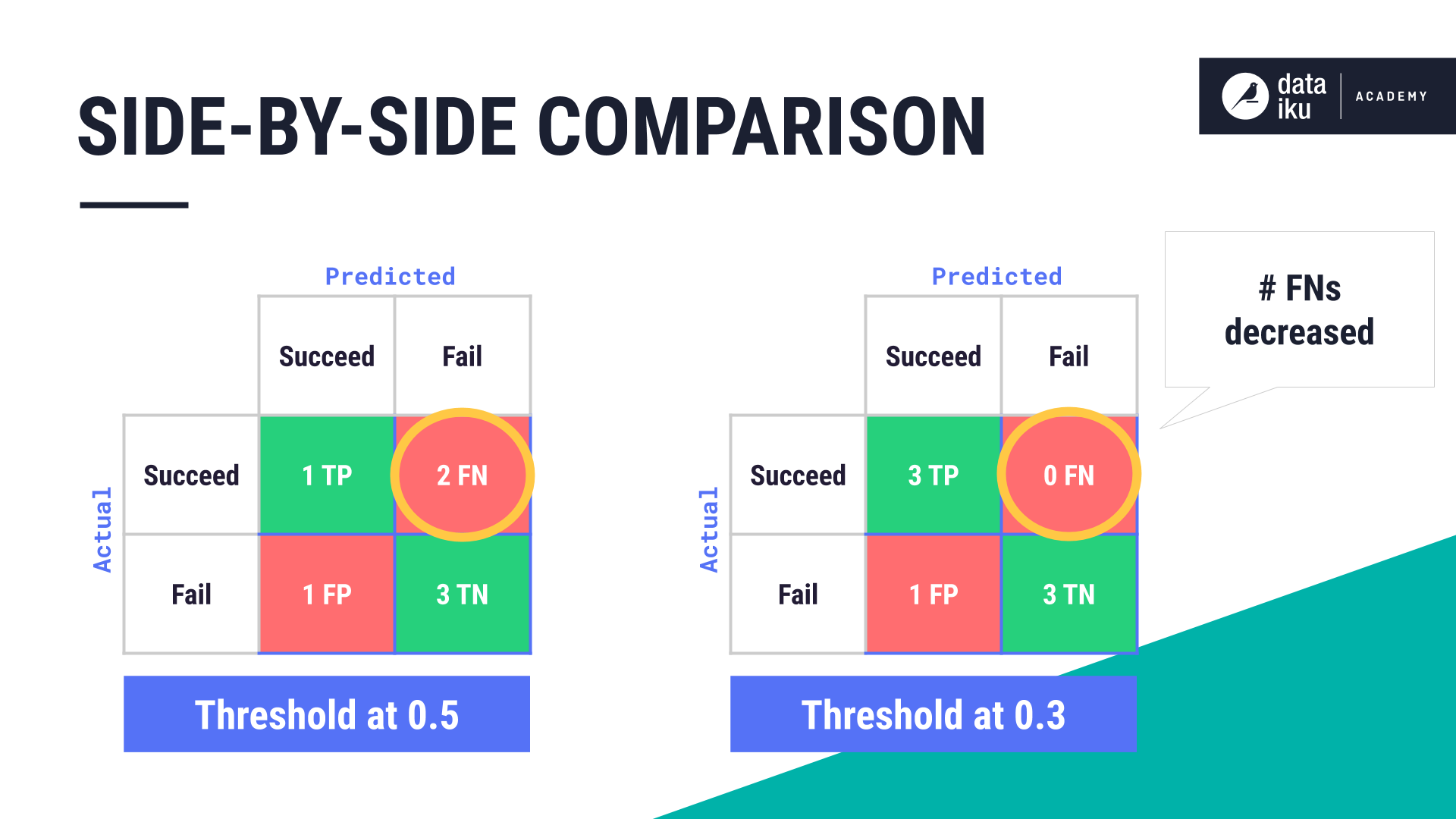

Testing a Different Threshold

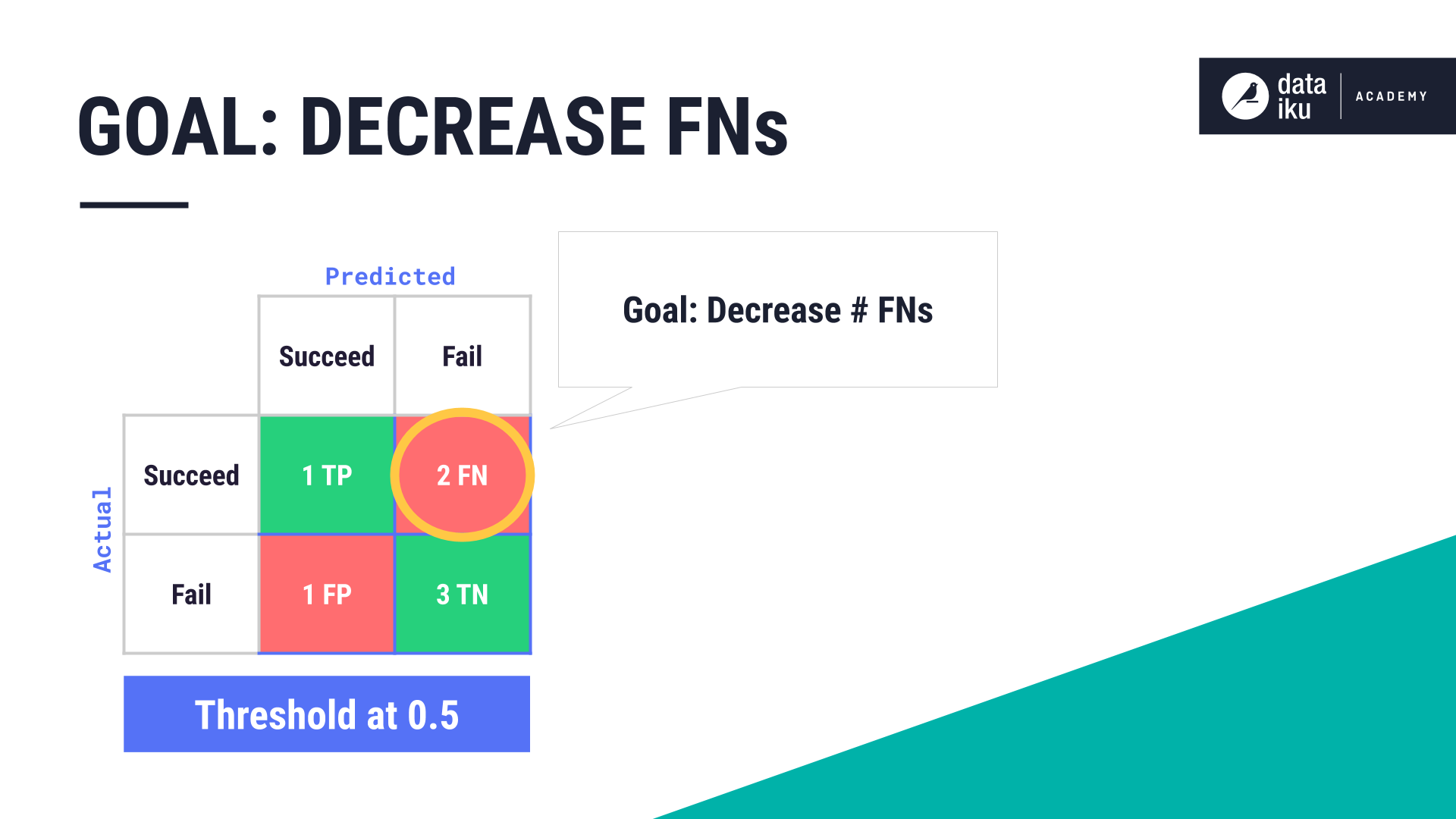

Let’s say our goal is to minimize the number of false negatives, or the number of Fail predictions that have an actual outcome of Succeed. That is, we’ve decided that we are more willing to accept too many false positives (predicting that a student who actually Failed would Succeed) than too many false negatives. To minimize the number of false negatives, we can try a different threshold.

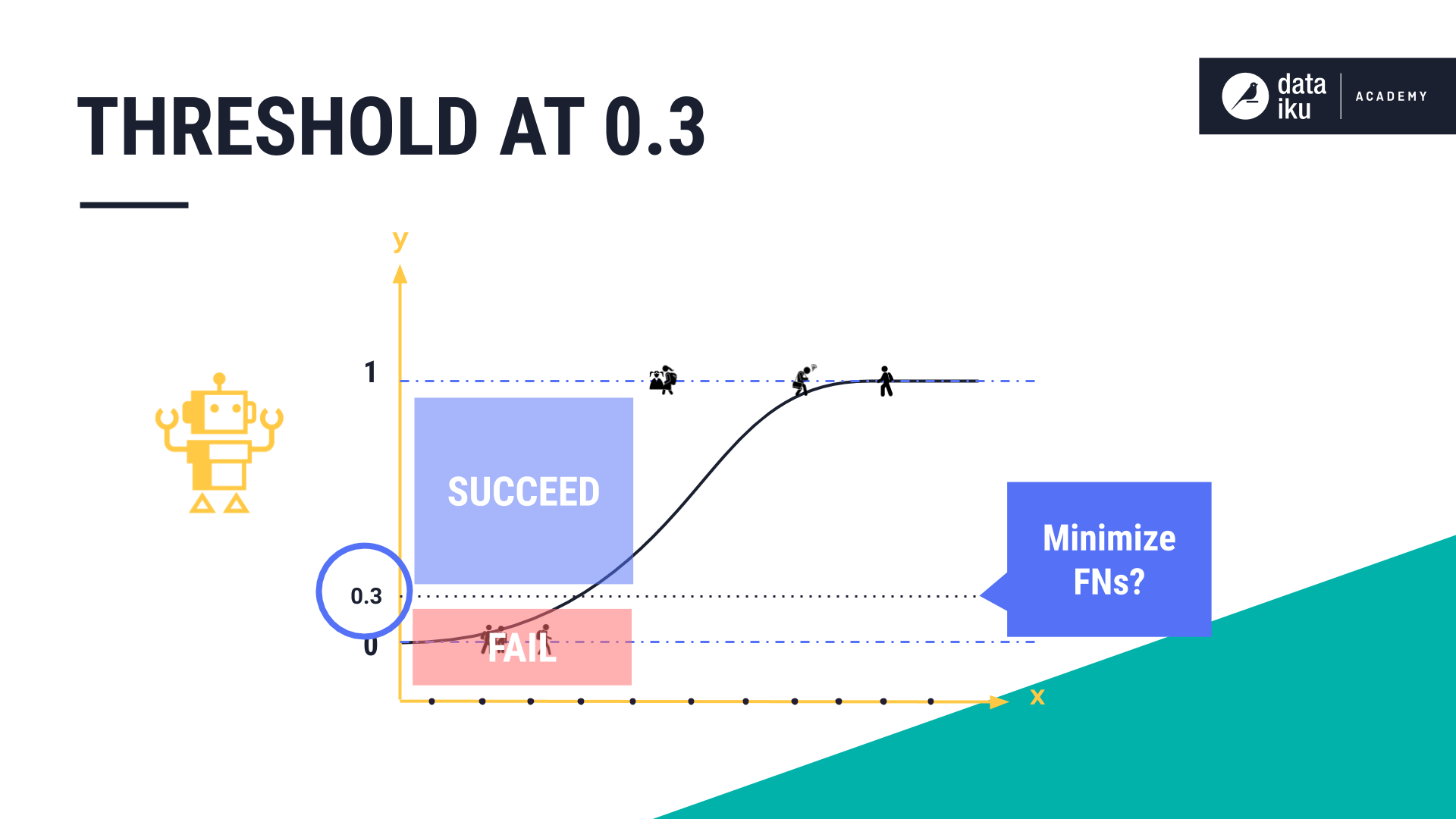

For our exam outcome use case, we’ll attempt to decrease the number of false negatives by trying a different threshold, this time “0.3”, and applying the model again.

At a threshold of “0.3”, the model has made some correct and some incorrect predictions. But the number of false negatives has decreased from two to zero.
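Here is a hedged Python sketch of what changing the threshold does. The probabilities are invented for illustration, chosen so that the false negative count drops from two to zero as described above. Predicting Succeed when the probability is greater than or equal to the threshold is an assumed tie rule, and `classify` and `count_false_negatives` are hypothetical helper names.

```python
def classify(probabilities, threshold):
    """Predict "Succeed" when the probability meets the threshold (assumed >= rule)."""
    return ["Succeed" if p >= threshold else "Fail" for p in probabilities]

def count_false_negatives(actual, predicted, positive="Succeed"):
    """Count actual positives that were predicted as the negative class."""
    return sum(1 for a, p in zip(actual, predicted) if a == positive and p != positive)

# Hypothetical model outputs: estimated probability of "Succeed" for each student
probabilities = [0.80, 0.40, 0.35, 0.60, 0.20, 0.10, 0.25]
actual = ["Succeed", "Succeed", "Succeed", "Fail", "Fail", "Fail", "Fail"]

for threshold in (0.5, 0.3):
    predicted = classify(probabilities, threshold)
    print(threshold, count_false_negatives(actual, predicted))
# 0.5 2
# 0.3 0
```

Lowering the threshold moves the two borderline Succeed students (0.40 and 0.35) above the cutoff, which is why the false negatives disappear.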

In our use case, using logistic regression, the better threshold appears to be “0.3”, based on the observations we have tested so far. Creating a confusion matrix for every possible threshold would produce too much information to compare. Thankfully, there are metrics to speed up the process.

Metrics

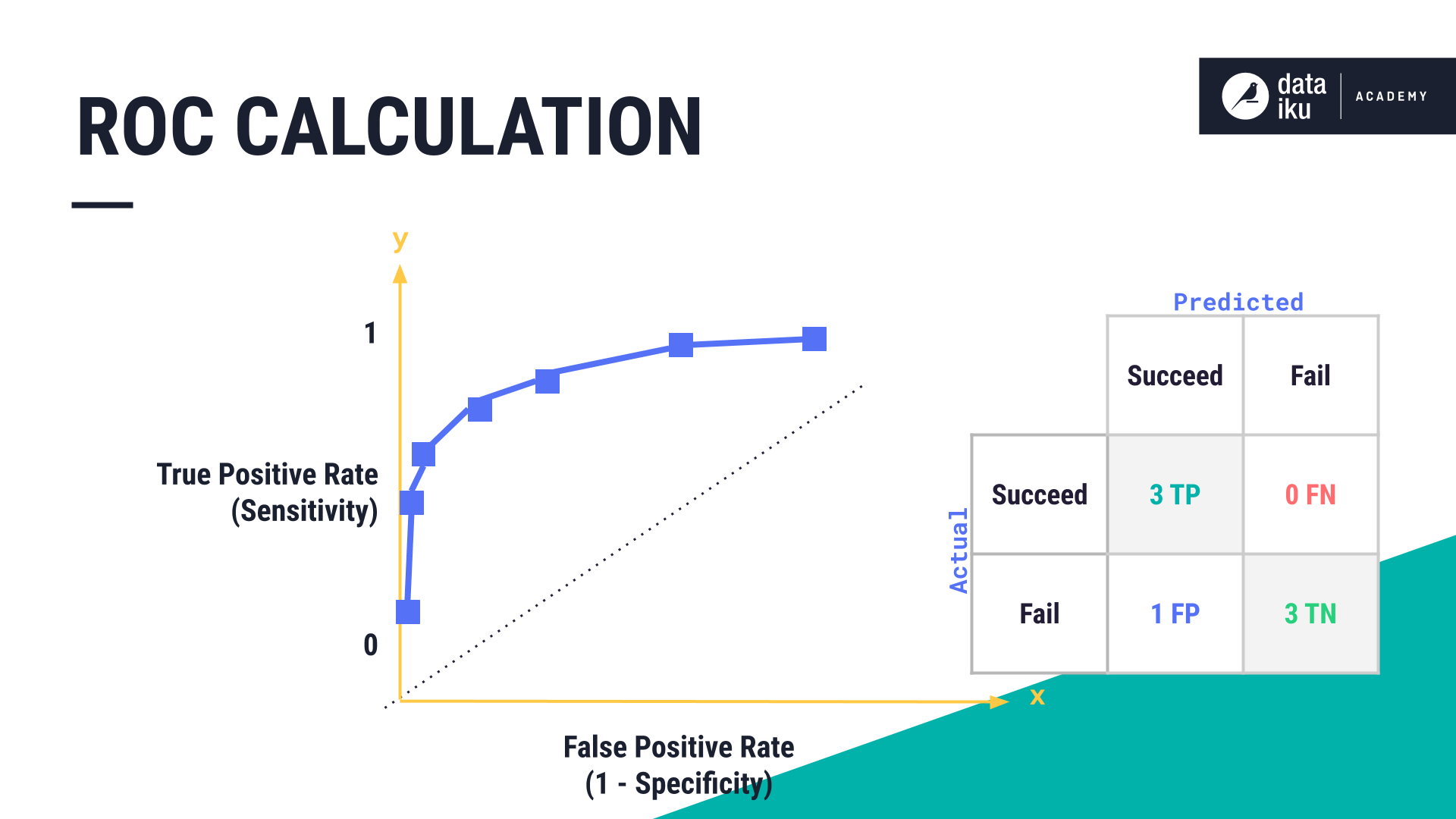

Common metrics used to evaluate classification models are based on the true positive rate (TPR) and the false positive rate (FPR). The TPR tells us what proportion of “Succeed” observations were correctly classified as “Succeed”. It is shown on the Y axis and is also known as sensitivity. The FPR tells us what proportion of “Fail” observations were incorrectly classified as “Succeed”, and it is shown on the X axis. The FPR is not the same as specificity: specificity is the true negative rate, so FPR = 1 - specificity.

ROC Curve

Using the TPR and FPR, we can create a graph known as a receiver operating characteristic curve, or ROC curve.

The true positive rate is found by dividing the number of true positives in the confusion matrix by the number of true positives plus the number of false negatives: TPR = TP / (TP + FN).

The false positive rate is found by dividing the number of false positives by the number of false positives plus the number of true negatives: FPR = FP / (FP + TN).
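The two formulas translate directly into code. This minimal Python sketch (the function names are hypothetical) applies them to the counts from the 0.5-threshold confusion matrix in this lesson and also checks that the FPR equals 1 minus specificity.

```python
def true_positive_rate(tp, fn):
    """TPR, also called sensitivity or recall: TP / (TP + FN)."""
    return tp / (tp + fn)

def false_positive_rate(fp, tn):
    """FPR: FP / (FP + TN)."""
    return fp / (fp + tn)

def specificity(tn, fp):
    """True negative rate: TN / (TN + FP). The FPR equals 1 - specificity."""
    return tn / (tn + fp)

# Counts from the lesson's 0.5-threshold confusion matrix
tp, fn, fp, tn = 1, 2, 1, 3
print(round(true_positive_rate(tp, fn), 3))  # 0.333
print(false_positive_rate(fp, tn))           # 0.25
print(1 - specificity(tn, fp))               # 0.25
```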

Plotting the TPR against the FPR at each threshold creates the ROC curve.
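To make the idea concrete, here is a hedged Python sketch that computes one (FPR, TPR) point per threshold. The data are hypothetical (invented probabilities for seven students), the `>=` tie rule is an assumption, and `roc_points` is an illustrative name rather than a library function. Plotting these points, in order, traces the ROC curve.

```python
def roc_points(actual, probabilities, thresholds, positive="Succeed"):
    """Return one (FPR, TPR) point per threshold.

    An observation is predicted positive when its probability is >= the
    threshold (an assumed tie rule).
    """
    points = []
    for threshold in thresholds:
        tp = fp = tn = fn = 0
        for label, prob in zip(actual, probabilities):
            predicted_positive = prob >= threshold
            if label == positive:
                if predicted_positive:
                    tp += 1
                else:
                    fn += 1
            elif predicted_positive:
                fp += 1
            else:
                tn += 1
        points.append((fp / (fp + tn), tp / (tp + fn)))
    return points

# Hypothetical data, invented for illustration
actual = ["Succeed", "Succeed", "Succeed", "Fail", "Fail", "Fail", "Fail"]
probabilities = [0.80, 0.40, 0.35, 0.60, 0.20, 0.10, 0.25]

points = roc_points(actual, probabilities, thresholds=[1.0, 0.5, 0.3, 0.0])
print([(round(fpr, 3), round(tpr, 3)) for fpr, tpr in points])
# [(0.0, 0.0), (0.25, 0.333), (0.25, 1.0), (1.0, 1.0)]
```

A threshold above every probability yields the point (0, 0), and a threshold of 0 yields (1, 1), so every ROC curve runs between those two corners.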

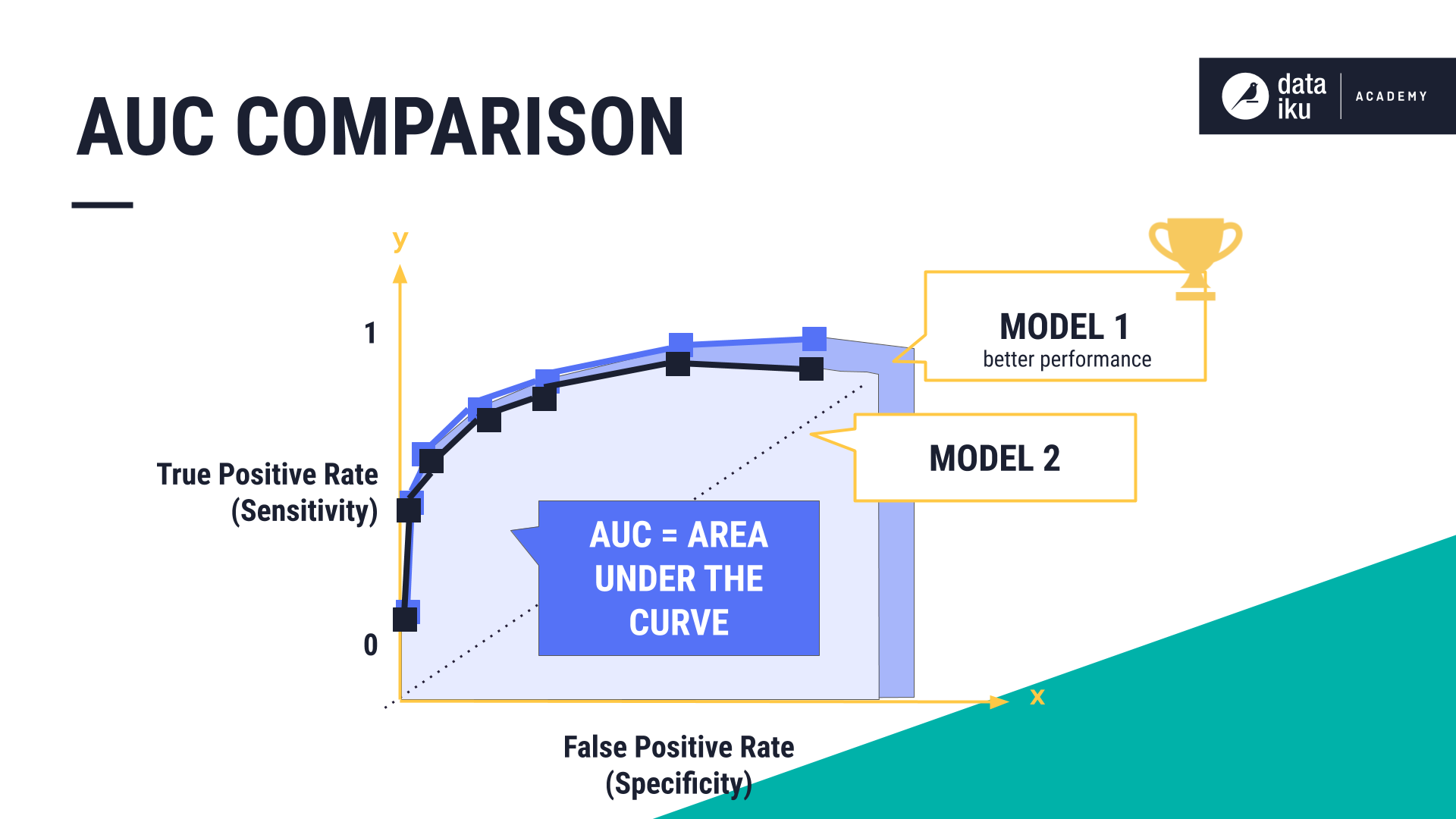

AUC Score

To convert our ROC curve into a single numerical metric, we can look at the area under the curve, known as the AUC. The AUC is always between 0 and 1: the closer it is to 1, the better the model performs, and an AUC of 0.5 corresponds to a model that does no better than random guessing. The ROC curve and the AUC score are often used together and are a common way to measure model performance.
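One common way to compute the area from a set of ROC points is the trapezoidal rule. The Python sketch below is a minimal illustration under that assumption (`auc_trapezoid` is a hypothetical name); it checks the two reference cases: a perfect classifier and the diagonal line of random guessing.

```python
def auc_trapezoid(points):
    """Approximate the area under an ROC curve from (FPR, TPR) points.

    Points are sorted by FPR (ties by TPR), then the area of each trapezoid
    between consecutive points is summed.
    """
    pts = sorted(points)
    area = 0.0
    for (x0, y0), (x1, y1) in zip(pts, pts[1:]):
        area += (x1 - x0) * (y0 + y1) / 2
    return area

print(auc_trapezoid([(0.0, 0.0), (0.0, 1.0), (1.0, 1.0)]))  # 1.0 (perfect classifier)
print(auc_trapezoid([(0.0, 0.0), (1.0, 1.0)]))              # 0.5 (random guessing)
```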

We can compare models side-by-side by comparing their ROC/AUC graphs. If one model has more area under its curve, and thus a higher AUC score, that model is generally performing better.

Other Key Metrics

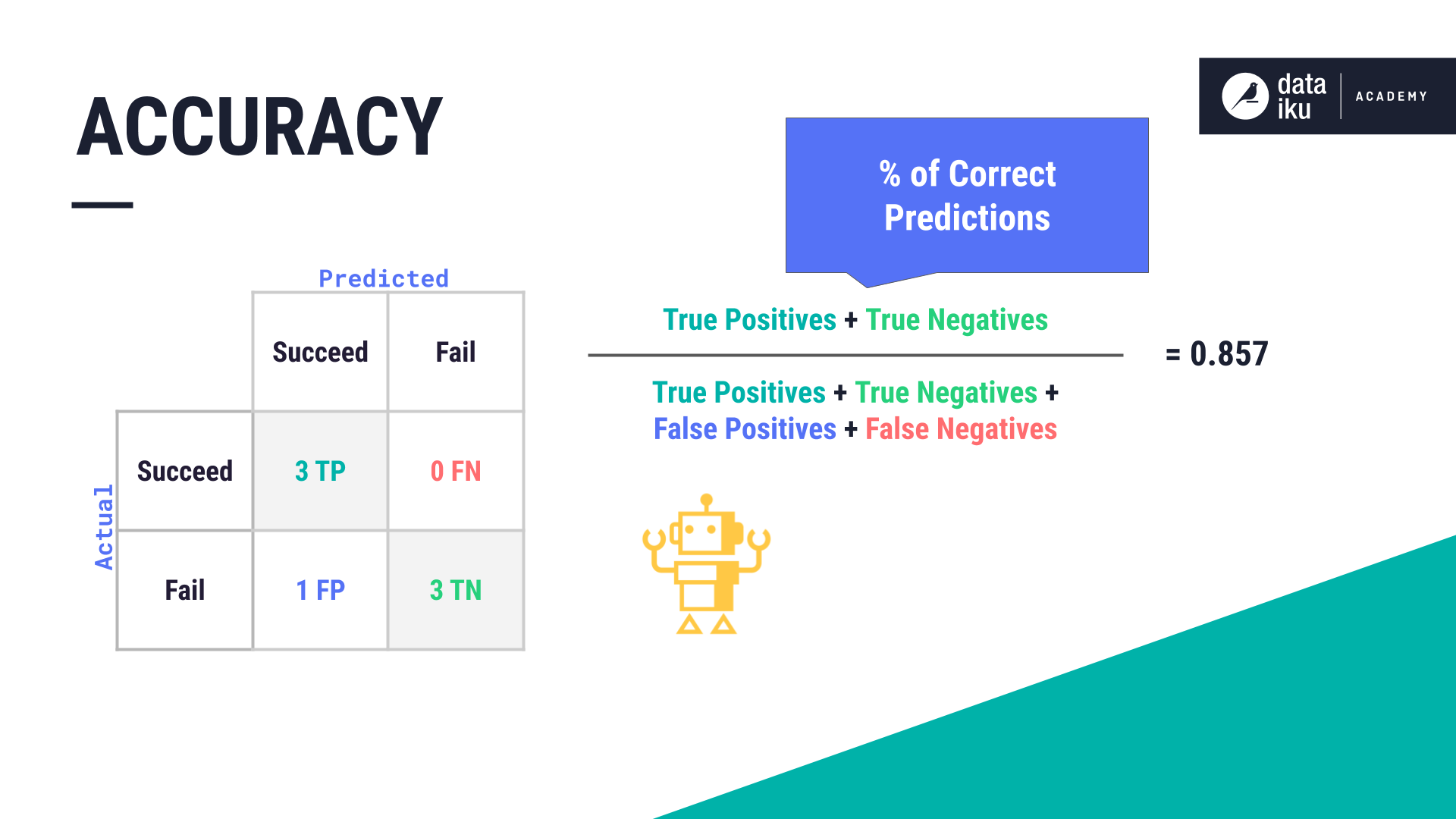

Other metrics used to evaluate classification models include Accuracy, Precision and Recall.

Accuracy

Accuracy is a simple metric for evaluating classification models. It is the proportion (often stated as a percentage) of observations that the model predicted correctly. We calculate accuracy by adding the number of true positives and true negatives and dividing that sum by the total population, that is, all counts in the confusion matrix. In our case, accuracy is “0.857”, or 6 correct predictions out of 7 observations.
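As a minimal Python sketch (the function name `accuracy` is hypothetical), the first call below applies the formula to the lesson's 0.5-threshold counts, which give 4 of 7 correct. The 0.857 quoted above corresponds to 6 correct out of 7; the second call uses one hypothetical split of counts that produces it.

```python
def accuracy(tp, tn, fp, fn):
    """Accuracy = (TP + TN) / (TP + TN + FP + FN)."""
    return (tp + tn) / (tp + tn + fp + fn)

# Lesson's 0.5-threshold counts: 4 correct out of 7
print(round(accuracy(tp=1, tn=3, fp=1, fn=2), 3))  # 0.571
# A hypothetical split with 6 correct out of 7
print(round(accuracy(tp=3, tn=3, fp=1, fn=0), 3))  # 0.857
```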

Accuracy seems simple, but it should be used with caution when the classes to predict are not balanced. For example, if 90% of our test observations were Succeed, a model that always predicts Succeed would achieve a 90% accuracy score, yet it would never correctly classify a Fail.
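The imbalance pitfall can be demonstrated in a few lines. This Python sketch uses a hypothetical test set of 90 Succeed and 10 Fail observations and a trivial "model" that always predicts Succeed.

```python
# Hypothetical, imbalanced test set: 90 Succeed and 10 Fail observations
actual = ["Succeed"] * 90 + ["Fail"] * 10
predicted = ["Succeed"] * len(actual)  # a "model" that always predicts Succeed

accuracy = sum(a == p for a, p in zip(actual, predicted)) / len(actual)
fails_caught = sum(a == p == "Fail" for a, p in zip(actual, predicted))
print(accuracy)      # 0.9
print(fails_caught)  # 0
```

The high accuracy hides the fact that not a single Fail was identified.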

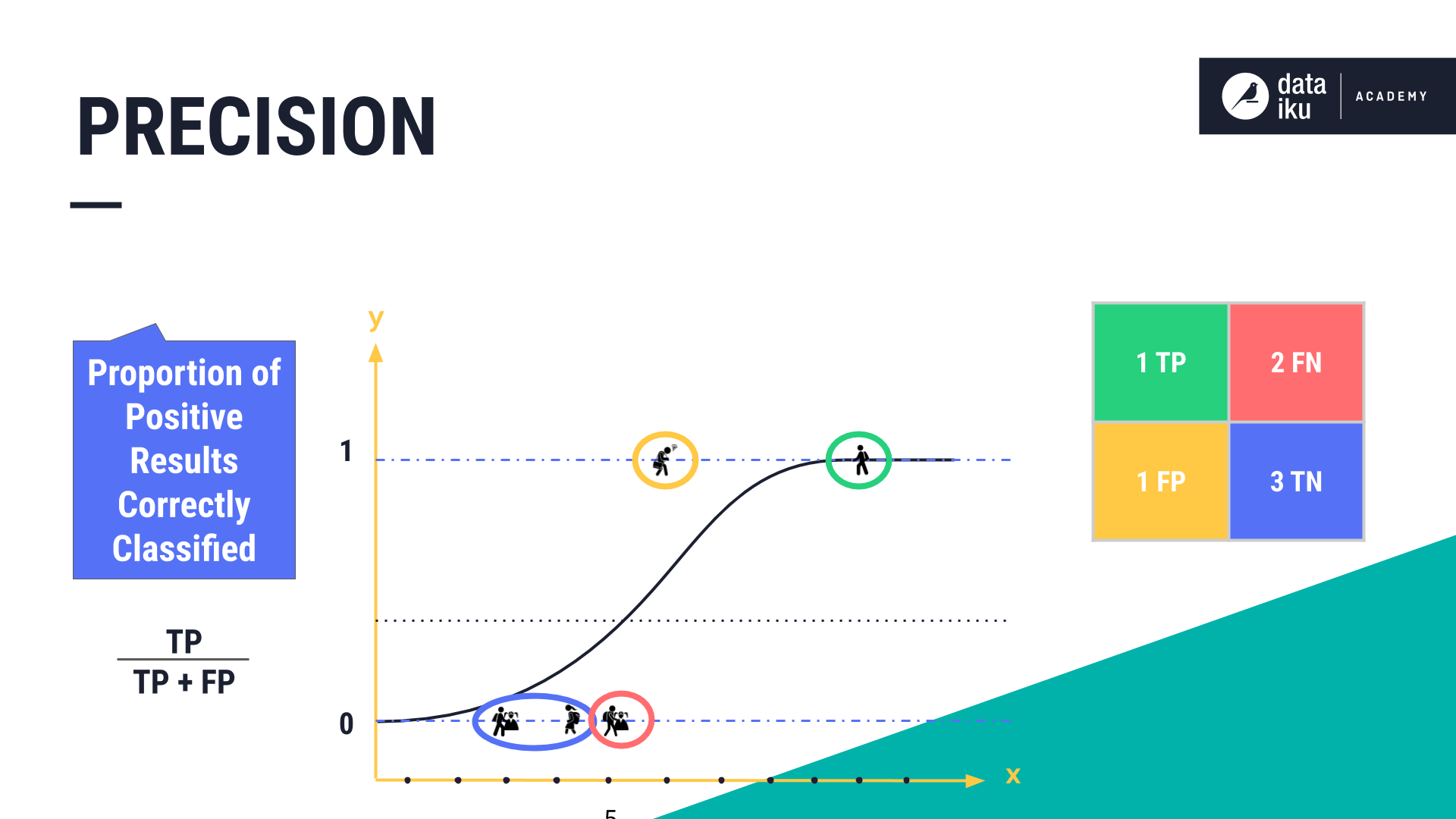

Precision

Precision is the proportion of positive predictions that were correct, calculated as TP / (TP + FP). In our use case, it is the proportion of Succeed predictions for students who actually Succeeded.

Precision might be preferred over the FPR. In our case, the FPR tells us what proportion of Fail observations were incorrectly classified as Succeed, so its denominator includes the true negatives (the true Fails). If we had many more Fail (or “not Succeed”) test observations than Succeed observations, the large number of true negatives would keep the FPR low even if the model produced many false positives. In that case, we might want to use precision rather than the FPR. Because precision ignores the number of true negatives, a large number of correctly classified Fails does not change it.
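A short Python sketch illustrates this contrast. Starting from the lesson's 0.5-threshold counts (TP = 1, FP = 1, TN = 3), it adds a hypothetical 297 extra true Fails. The FPR shrinks sharply while precision stays the same. The function names are illustrative.

```python
def precision(tp, fp):
    """Precision = TP / (TP + FP); true negatives do not appear."""
    return tp / (tp + fp)

def false_positive_rate(fp, tn):
    """FPR = FP / (FP + TN); true negatives are in the denominator."""
    return fp / (fp + tn)

tp, fp = 1, 1  # from the lesson's 0.5-threshold confusion matrix
for tn in (3, 300):  # 300 is a hypothetical, heavily imbalanced case
    print(tn, precision(tp, fp), round(false_positive_rate(fp, tn), 4))
# 3 0.5 0.25
# 300 0.5 0.0033
```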

Machine learning practitioners often use Precision with another metric, Recall.

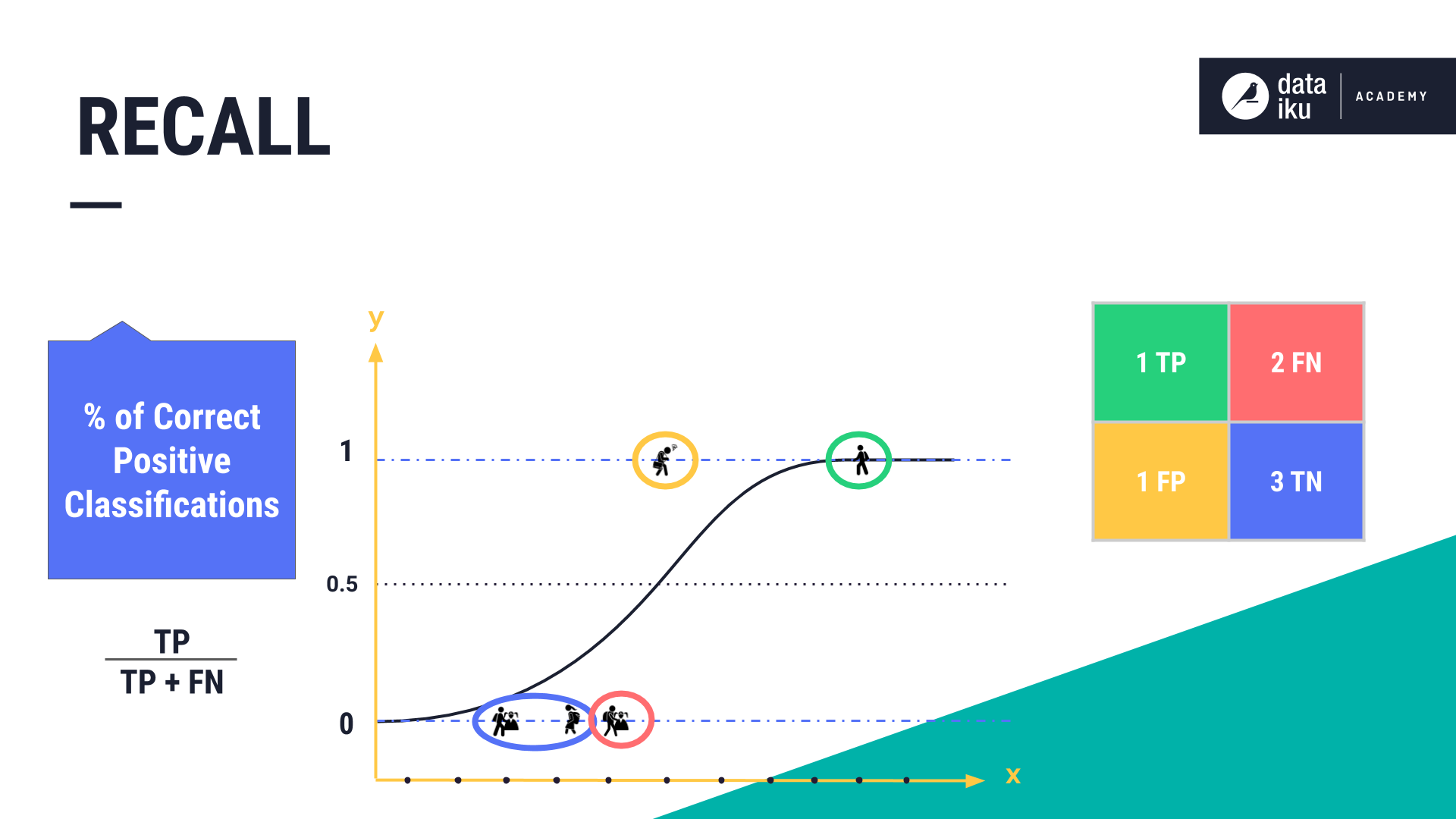

Recall

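Recall is the proportion of actually positive cases that the model correctly classified as positive (true positives), calculated as TP / (TP + FN). In our case, this is the proportion of students who actually Succeeded that the model classified as Succeed. Recall is the same quantity as the TPR, or sensitivity.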
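In Python, recall is a one-line function (the name `recall` is illustrative). At the 0.5 threshold the lesson's counts are TP = 1 and FN = 2. At the 0.3 threshold the false negatives drop to zero, so all three students who actually Succeeded become true positives.

```python
def recall(tp, fn):
    """Recall = TP / (TP + FN), the same quantity as the true positive rate."""
    return tp / (tp + fn)

print(round(recall(tp=1, fn=2), 3))  # 0.333 at the 0.5 threshold
print(recall(tp=3, fn=0))            # 1.0 at the 0.3 threshold
```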

Depending on our use case, we might want to keep a close eye on the number of false negatives, because every false negative lowers recall. In our use case, a large number of false “Fails” (students predicted to Fail who actually Succeeded) would show up as low recall, indicating that the model is misclassifying students who succeed.

Metrics to Evaluate Regression Models

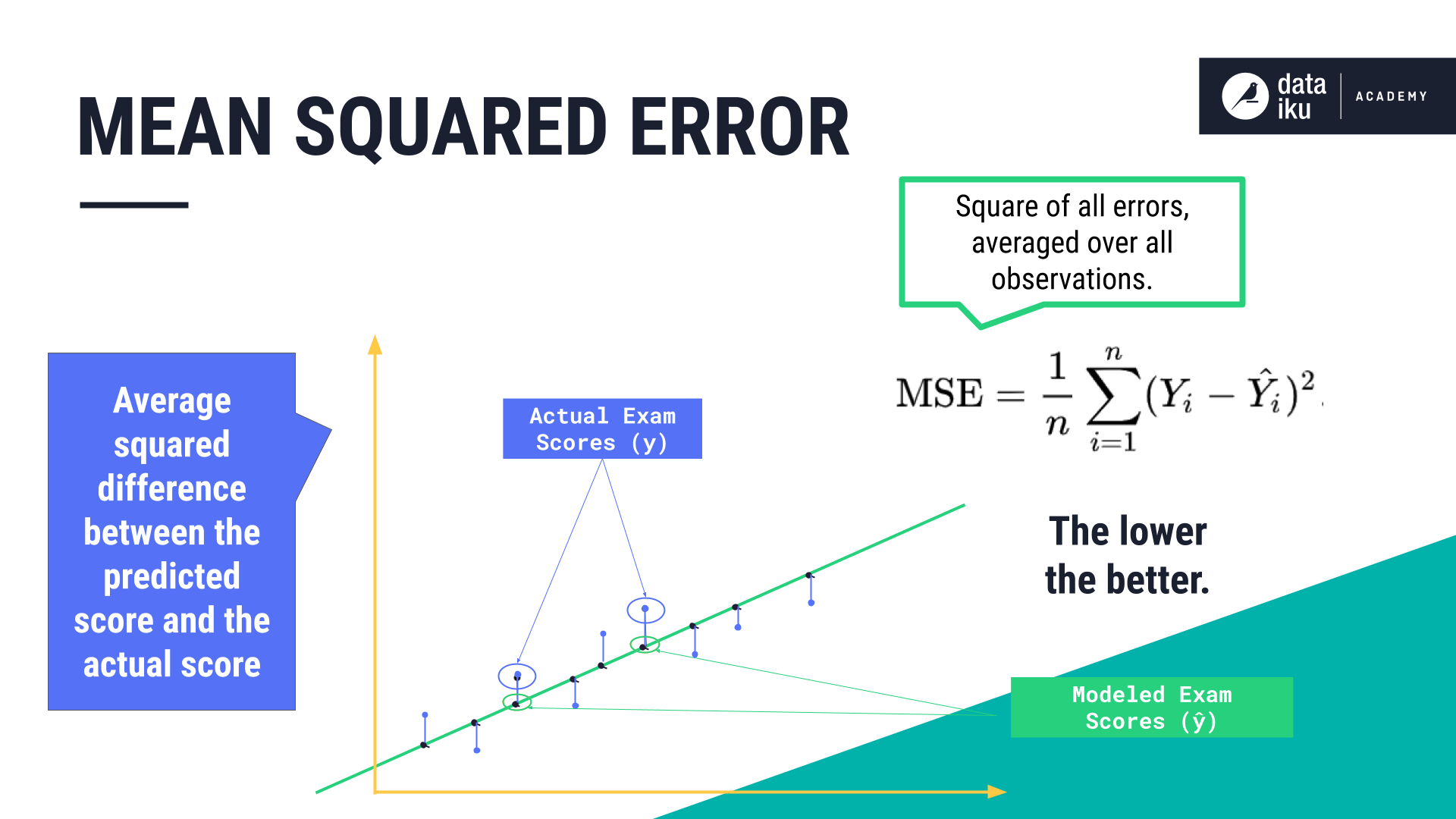

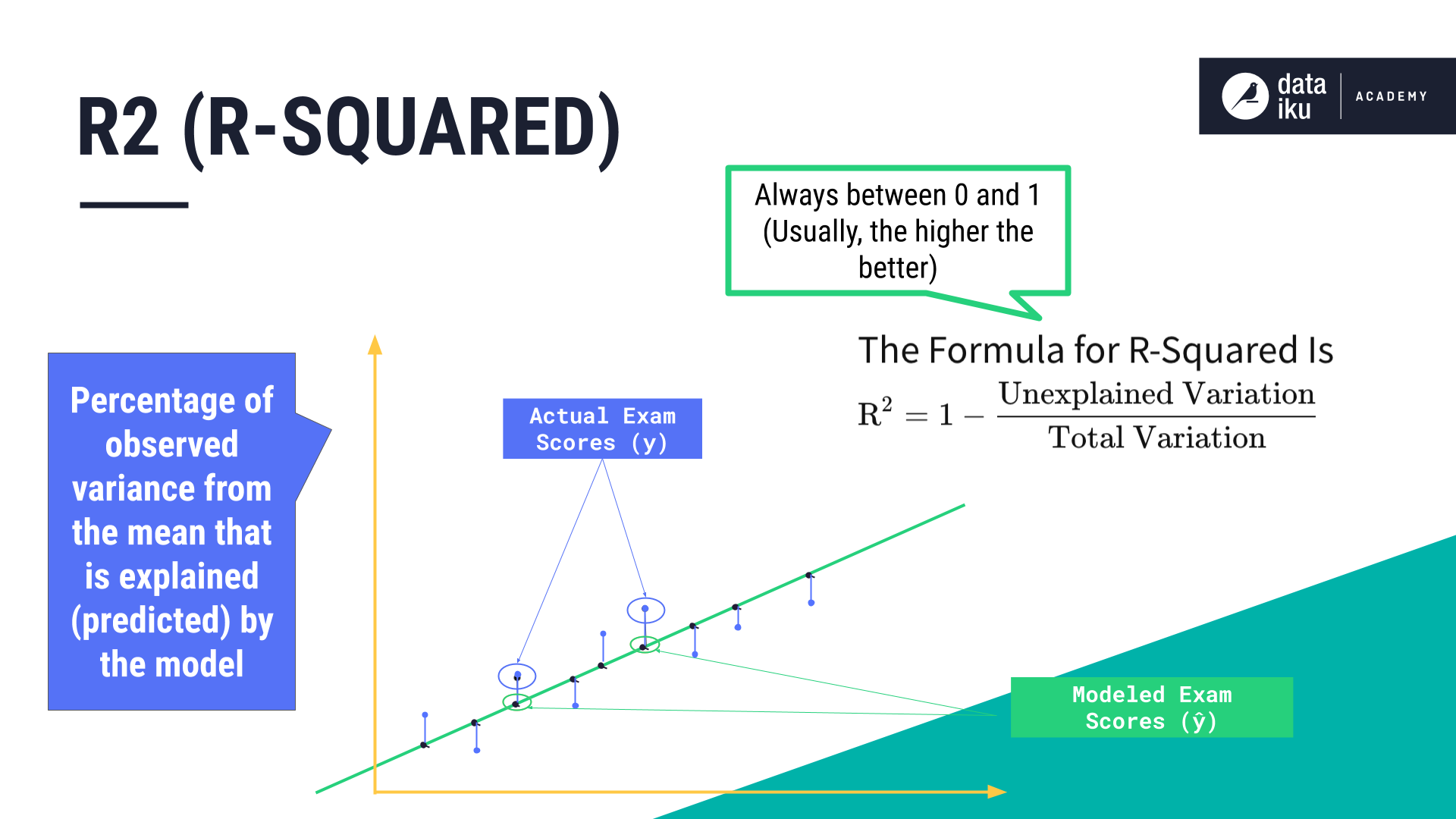

Let’s look at a few key metrics used to evaluate regression models: mean squared error and R² (or R-squared).

Mean squared error (MSE) measures the average squared difference between the predicted values and the actual values. It is calculated by squaring each error (the difference between a predicted value and the actual value) and averaging those squares over the number of observations. The lower the MSE, the more accurate our predictions.
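Here is a minimal Python sketch of the MSE calculation. The exam scores are hypothetical, and `mean_squared_error` is an illustrative function name.

```python
def mean_squared_error(actual, predicted):
    """Average of the squared differences between actual and predicted values."""
    if not actual or len(actual) != len(predicted):
        raise ValueError("actual and predicted must be non-empty and the same length")
    return sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual)

# Hypothetical actual and predicted exam scores
actual = [70, 85, 60, 90]
predicted = [72, 80, 65, 88]
print(mean_squared_error(actual, predicted))  # 14.5
```

The errors are -2, 5, -5, and 2. Their squares, 4, 25, 25, and 4, sum to 58, and 58 / 4 = 14.5.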

R² (R-squared) is the proportion of the variance in the target variable that is explained by the features in our model. A high R², close to 1, indicates that the model fits the data well and that its predictions are close to the actual values.
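This minimal Python sketch uses the standard definition R² = 1 - SS_res / SS_tot. SS_res is the sum of squared errors, and SS_tot is the sum of squared deviations of the actual values from their mean. The exam scores are the same hypothetical values used in the MSE sketch, and `r_squared` is an illustrative name.

```python
def r_squared(actual, predicted):
    """R^2 = 1 - SS_res / SS_tot (fraction of variance explained by the model)."""
    mean_actual = sum(actual) / len(actual)
    ss_res = sum((a - p) ** 2 for a, p in zip(actual, predicted))  # unexplained variation
    ss_tot = sum((a - mean_actual) ** 2 for a in actual)           # total variation
    return 1 - ss_res / ss_tot

# Hypothetical actual and predicted exam scores
actual = [70, 85, 60, 90]
predicted = [72, 80, 65, 88]
print(round(r_squared(actual, predicted), 3))  # 0.898
```

Perfect predictions give an R² of exactly 1. Predictions worse than always guessing the mean give a negative R².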

Summary

There are several metrics for evaluating machine learning models, depending on whether you are working with a regression model or a classification model. In this lesson, we mainly learned about tools for evaluating classification models. For example, we learned that a confusion matrix is a handy tool when trying to determine the threshold for a classification problem. We also learned that the TPR and FPR are used to create an ROC curve, which is then used to calculate the AUC.

What’s next

You can visit the other sections available in the course, Intro to Machine Learning, and then move on to Machine Learning Basics.