Concept: Preparing a Dataset for Machine Learning

Before building a model, it is good practice to carefully build and understand your training dataset in order to prevent issues with your model down the line. Preparation steps can also improve the performance of your model.

Check for Data Quality Issues

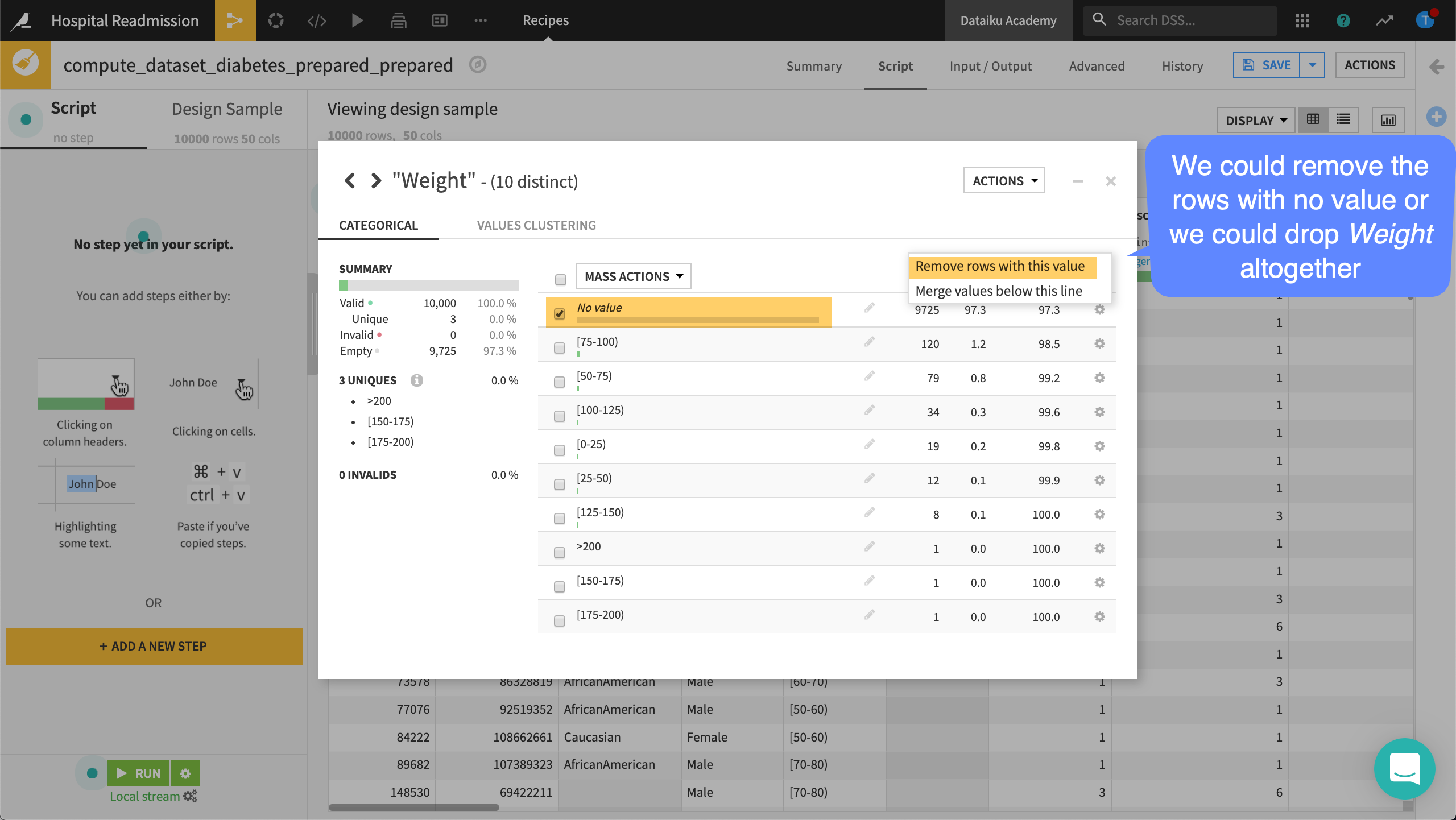

Dataiku DSS lets you easily build and explore your dataset to address common pitfalls before training your model. One common issue is data quality. In the dataset explore view, you’ll be able to check for missing values or inconsistent data.

For example, you may want to drop a variable that has too many missing or invalid values. Such variables are unlikely to add much predictive power to your model. You can drop them in a Prepare recipe before training the model, or in the Script tab in the Lab.
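To make the idea concrete, here is a minimal sketch in plain Python of what "too many missing values" can mean in practice. It is not DSS code: the sample rows, the column names, the helper functions, and the 50% threshold are all assumptions chosen for illustration.

```python
# Hypothetical sample rows; None marks a missing value.
rows = [
    {"age": 34, "income": 52000, "fax_number": None},
    {"age": 41, "income": None, "fax_number": None},
    {"age": 29, "income": 61000, "fax_number": None},
    {"age": 55, "income": 48000, "fax_number": "555-0100"},
]


def missing_ratio(rows, column):
    """Return the fraction of rows where `column` is missing (None)."""
    missing = sum(1 for row in rows if row.get(column) is None)
    return missing / len(rows)


def drop_sparse_columns(rows, max_missing=0.5):
    """Return copies of the rows without columns whose missing ratio exceeds max_missing."""
    columns = list(rows[0].keys())
    keep = [c for c in columns if missing_ratio(rows, c) <= max_missing]
    return [{c: row.get(c) for c in keep} for row in rows]


# "fax_number" is missing in 3 of 4 rows, so it is dropped at a 50% threshold.
cleaned = drop_sparse_columns(rows, max_missing=0.5)
```

The right threshold depends on the dataset; a column with many missing values can still be worth keeping if the fact that a value is missing is itself informative.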

You’ll then want to select features and avoid those that will negatively impact the performance of the model.

Perform Feature Selection

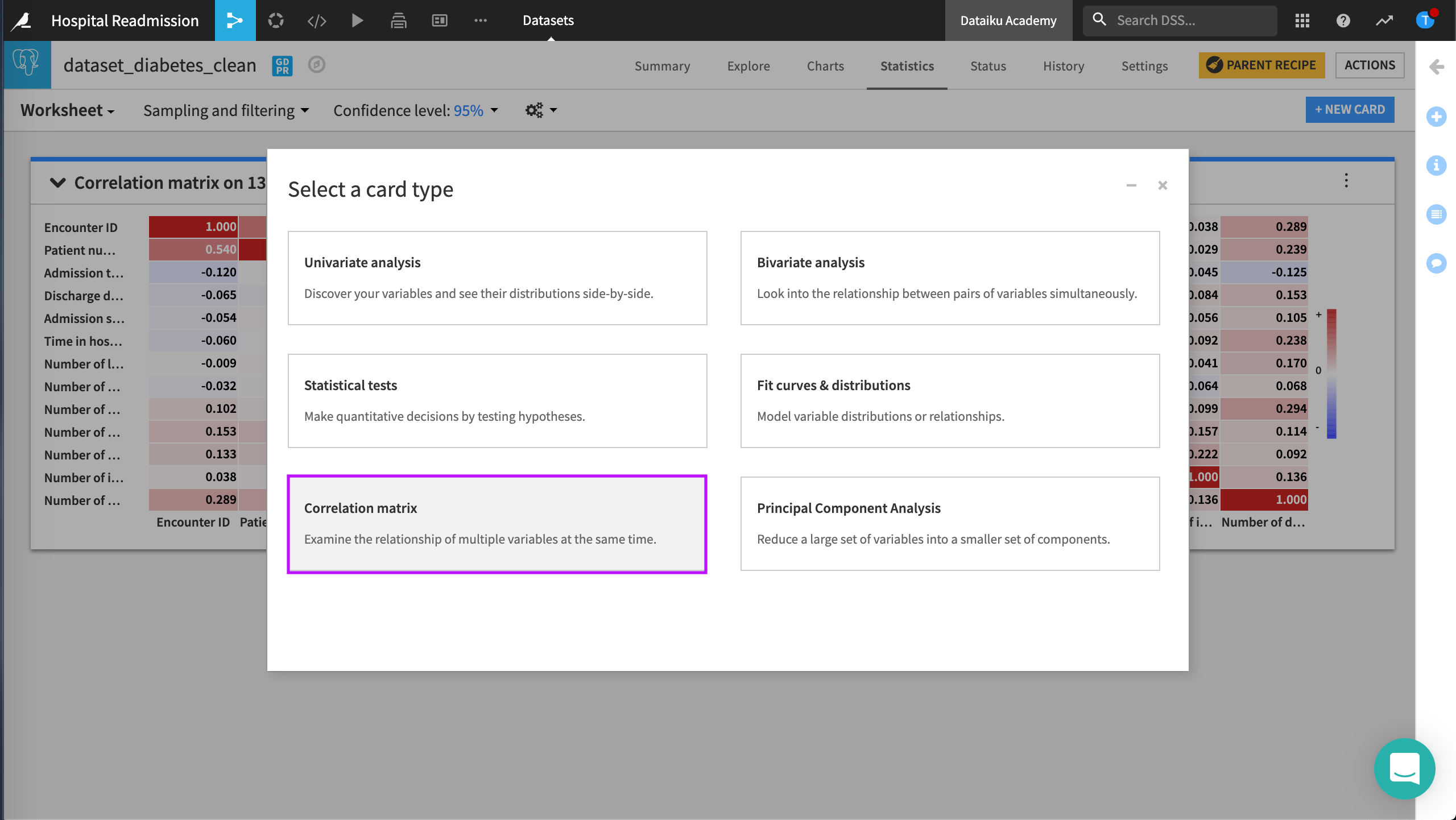

After cleaning the dataset, you’ll want to select the features you’ll use to train your model. Some features can negatively impact the performance of the model, so you’ll want to identify and remove them. In DSS, you can perform feature selection both manually and automatically.

One way to perform feature selection manually is to use the Statistics tab in the dataset. This will allow you to perform statistical tests and analyses that will help you select features. For example, using a correlation matrix, you can identify highly correlated feature pairs and drop redundant features. Redundant features can harm the performance of some models.
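The correlation check described above can be sketched in plain Python as follows. This is an illustration outside DSS, not what the Statistics tab runs internally: the feature names, the sample values, and the 0.9 threshold are assumptions, and the sketch uses the Pearson correlation coefficient.

```python
import math
from itertools import combinations


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length numeric lists.

    Constant columns (zero variance) are not handled in this sketch.
    """
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


def highly_correlated_pairs(features, threshold=0.9):
    """Return (name_a, name_b, r) for every feature pair with |r| >= threshold."""
    pairs = []
    for a, b in combinations(features, 2):
        r = pearson(features[a], features[b])
        if abs(r) >= threshold:
            pairs.append((a, b, r))
    return pairs


# Hypothetical features: the same height in two units, plus an unrelated column.
features = {
    "height_cm": [150.0, 160.0, 170.0, 180.0, 190.0],
    "height_in": [59.1, 63.0, 66.9, 70.9, 74.8],
    "unrelated": [3.0, 9.0, 1.0, 7.0, 5.0],
}

pairs = highly_correlated_pairs(features, threshold=0.9)
```

Here only the two height columns are flagged, so one of them is a candidate for removal. Which member of a pair to drop is a judgment call that the correlation alone does not settle.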

Perform Feature Engineering

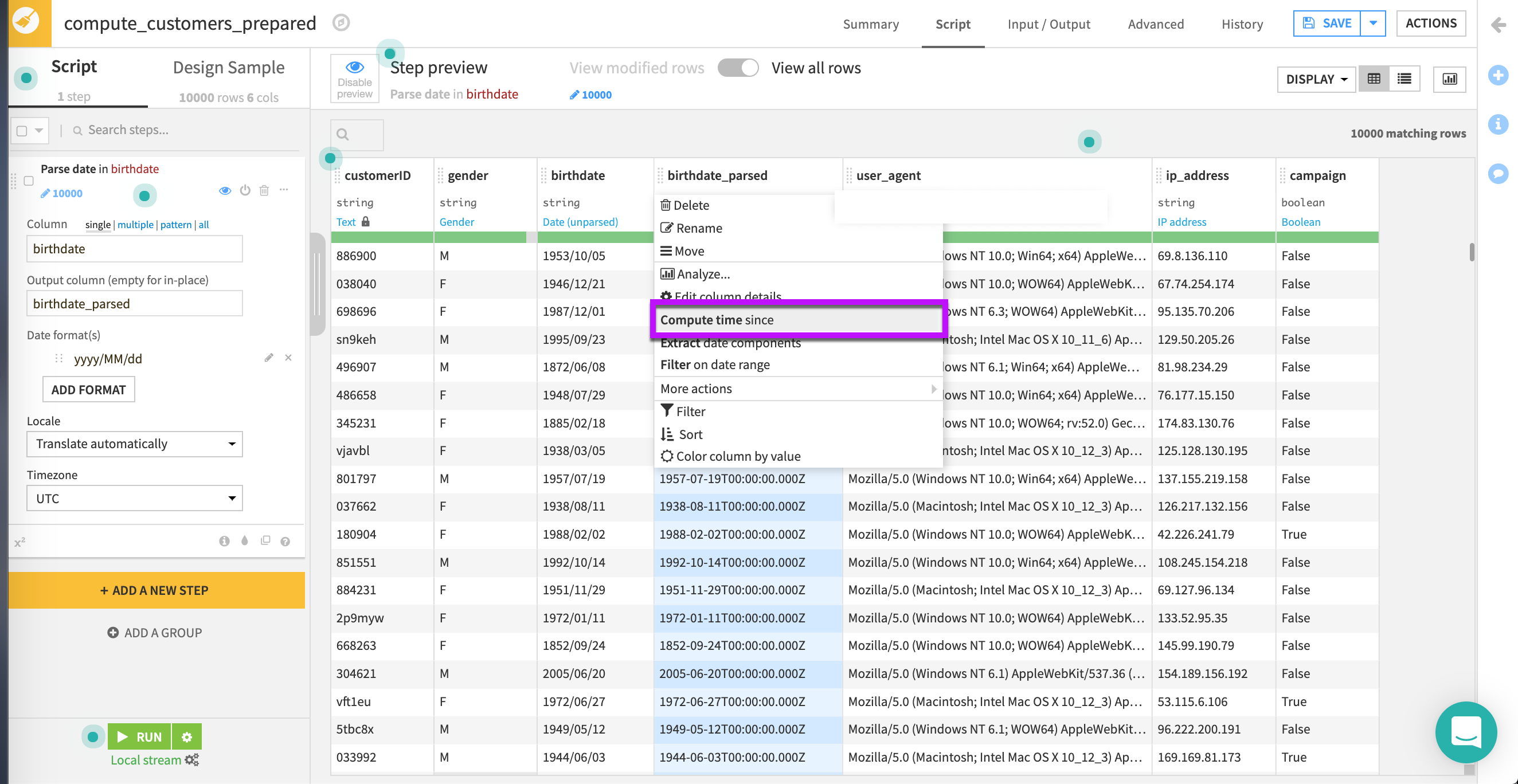

Another strategy you can use to improve the performance of your model is feature engineering. Feature engineering relates to building new features from your existing dataset or transforming existing features into more meaningful representations.

One example is raw dates. Most machine learning algorithms cannot make good use of raw dates as they are. A good strategy is to convert dates into numerical features, which preserves their ordinal relationship.

For example, in the Prepare recipe or in the Script tab in the Lab, you can transform the birth date column into an age column.
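As a rough sketch of what this transformation computes, the plain Python below derives an age in whole years from a birth date. It is an illustration outside DSS; the function name, the sample dates, and the choice of a fixed reference date are assumptions.

```python
from datetime import date


def age_in_years(birth_date, reference_date):
    """Whole years elapsed between birth_date and reference_date."""
    years = reference_date.year - birth_date.year
    # Subtract one year if the birthday has not yet occurred in the reference year.
    if (reference_date.month, reference_date.day) < (birth_date.month, birth_date.day):
        years -= 1
    return years


# A fixed, hypothetical reference date keeps the feature reproducible across runs.
reference = date(2024, 6, 1)
birth_dates = [date(1990, 5, 31), date(1990, 6, 2), date(2000, 2, 29)]

ages = [age_in_years(b, reference) for b in birth_dates]
# ages is [34, 33, 24]
```

Computing age relative to a fixed date, such as the date of the event being predicted, rather than "today" means the same row always yields the same feature value.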

This simple transformation can noticeably improve the performance of your model.

One last pitfall to check for is data leakage.

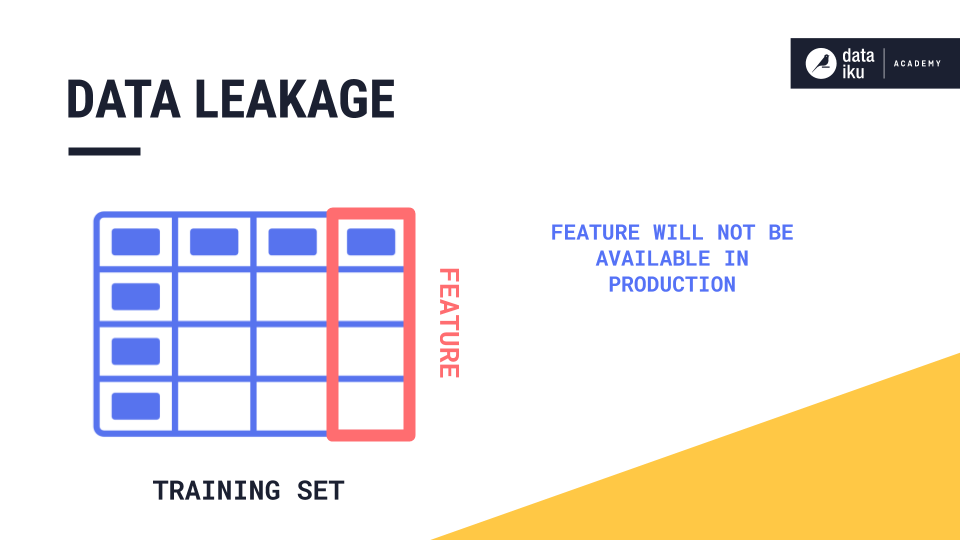

Look for Data Leakage

Data leakage happens when you train your model using features that won’t be available at prediction time, once the model is deployed in production. Leakage produces overly optimistic performance on your validation set and poor performance on real data.

When building your training set, it is essential to check that all the features you are using will be available at prediction time and that none of your features contain the information you are trying to predict.

For example, let’s say you are trying to predict sales for a given day, and you’ve created a feature from the sales amounts over the three days leading up to that day. If that window includes the target day itself, the feature contains your target. To avoid this, make sure the feature only looks at the three days before the target day.
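The difference between a leaky and a safe version of this feature comes down to where the window ends. The plain Python sketch below contrasts the two; the function names and the sales figures are hypothetical, and the code is an illustration outside DSS.

```python
def prior_days_sum(sales, window=3):
    """For each day i, sum sales over days i-window .. i-1 (target day excluded).

    Returns None for days with fewer than `window` prior days.
    """
    features = []
    for i in range(len(sales)):
        if i < window:
            features.append(None)
        else:
            features.append(sum(sales[i - window:i]))
    return features


def leaky_window_sum(sales, window=3):
    """Same window length, but ending ON day i, so it includes the target itself."""
    features = []
    for i in range(len(sales)):
        if i < window - 1:
            features.append(None)
        else:
            features.append(sum(sales[i - window + 1:i + 1]))
    return features


# Hypothetical daily sales; the value for each day is also the prediction target.
daily_sales = [10, 20, 30, 40, 50]

safe = prior_days_sum(daily_sales)     # [None, None, None, 60, 90]
leaky = leaky_window_sum(daily_sales)  # [None, None, 60, 90, 120]
```

For the last day, the safe feature is 20 + 30 + 40 = 90, built only from earlier days. The leaky feature is 30 + 40 + 50 = 120, which already includes the 50 the model is supposed to predict.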