Concept: Model Summary Overview

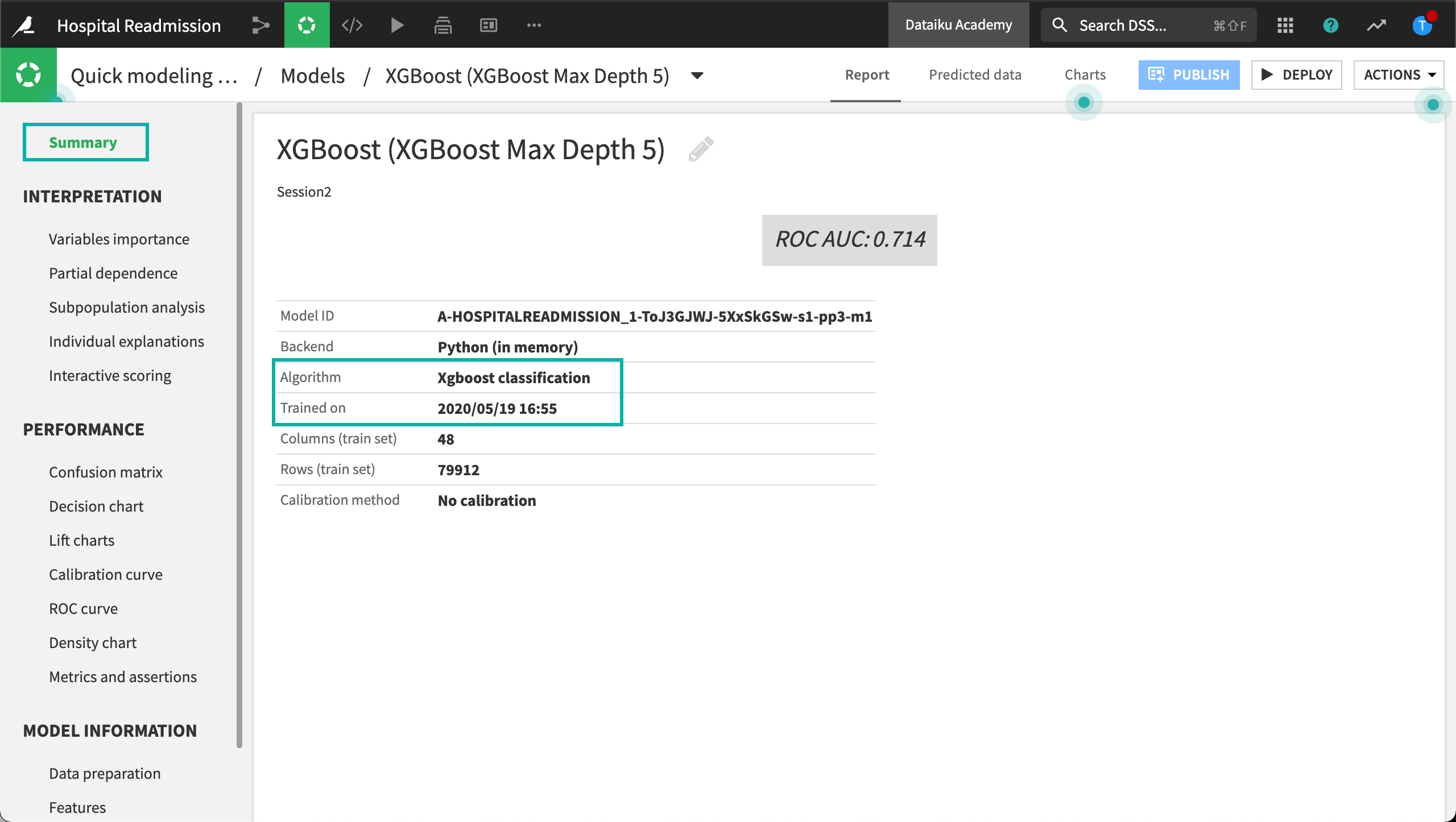

Let’s take a closer look at the results of the XGBoost model from the “Create the Model” concept video. Clicking on the model name from the Result tab takes us to the Report page for the XGBoost model.

The Summary panel of the Report page displays general information about the model, such as the algorithm and training date. The Report page also contains sections on model interpretation, model performance, and detailed model information.

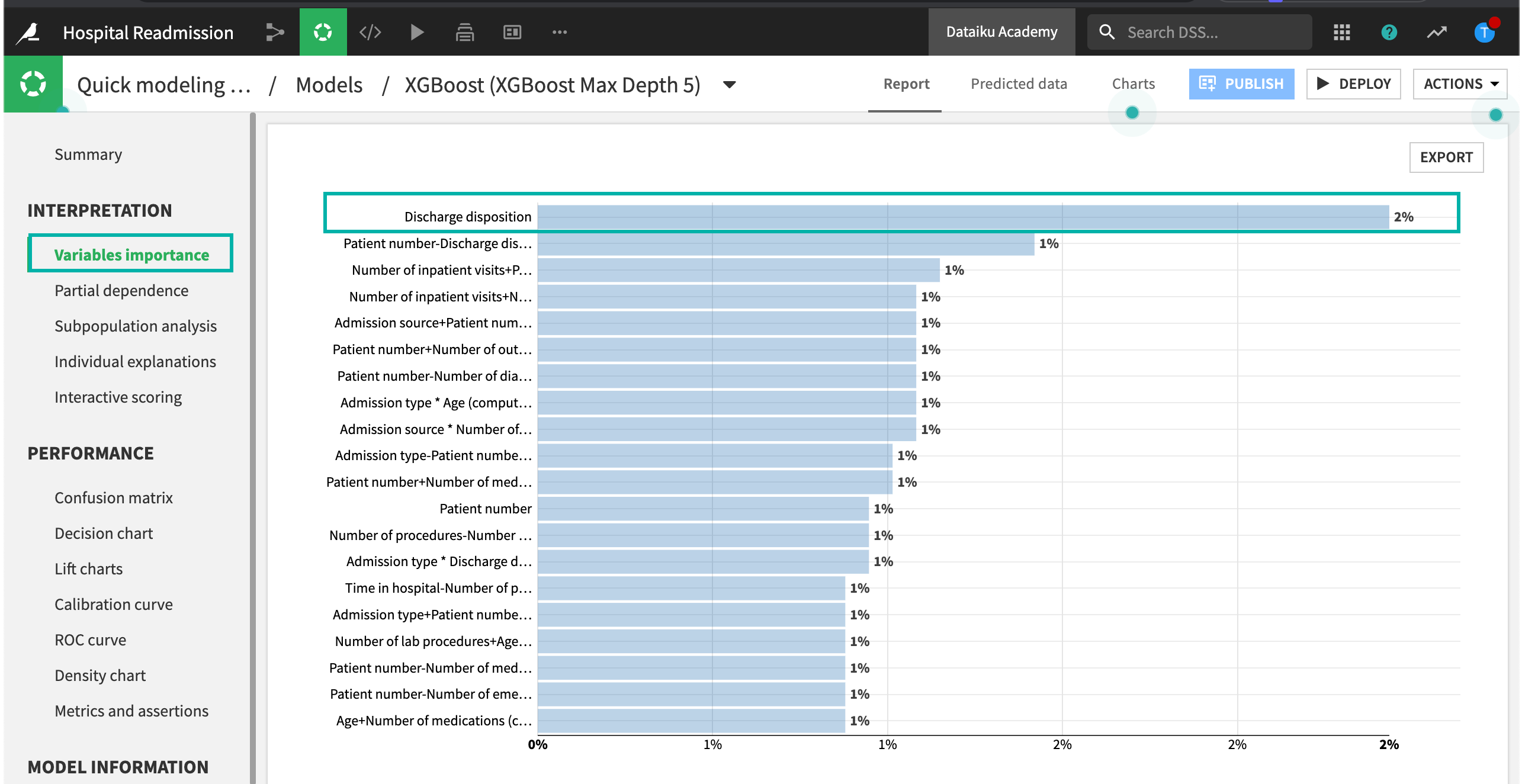

Model Interpretation

In the Interpretation section, we can see that the Variables importance tab displays the global feature importance of our model. The Patient Number, Discharge Disposition, and Age features have the strongest relationship with hospital readmission rates.

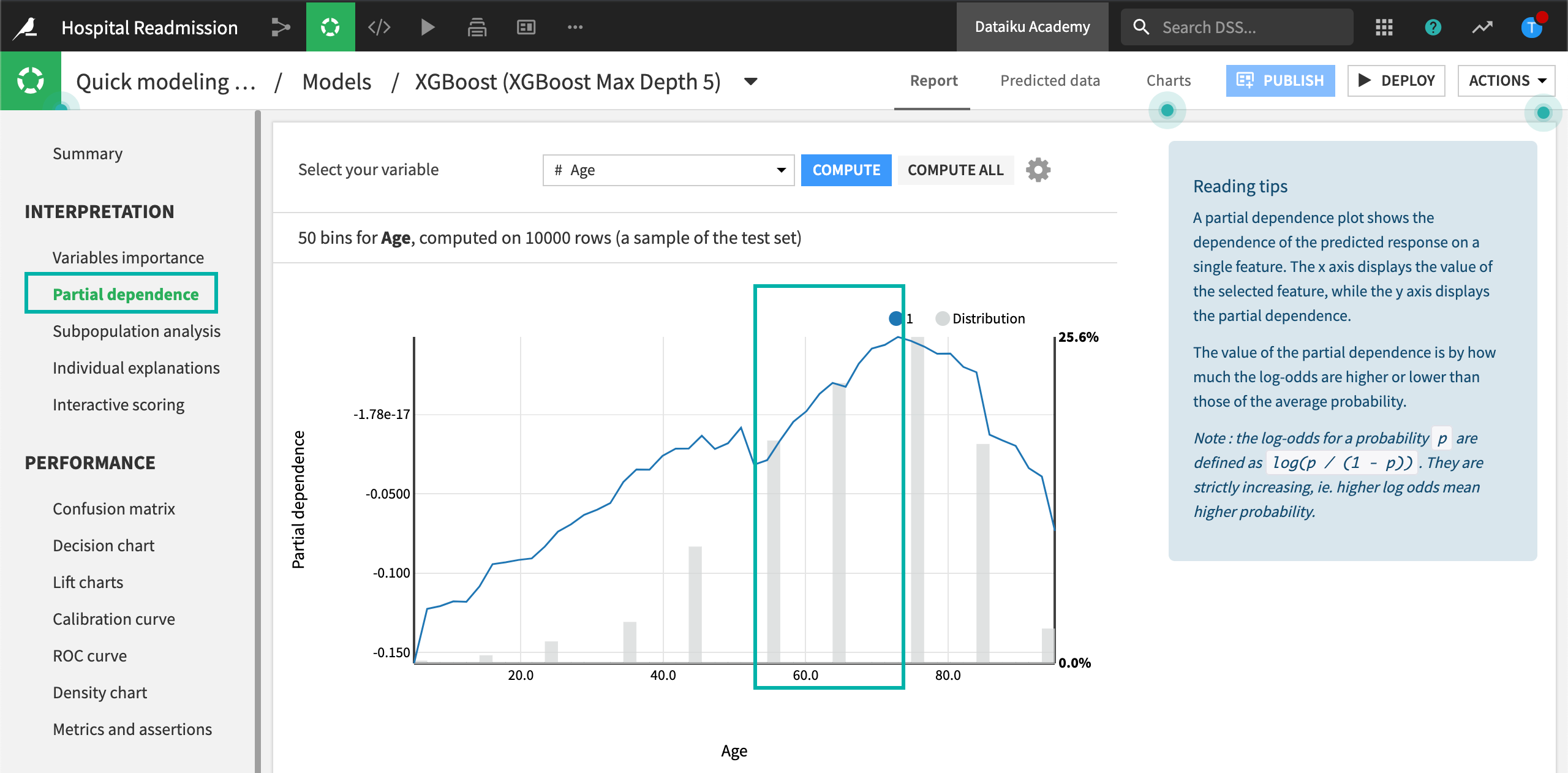

Partial dependence plots help us understand the effect an individual feature has on model predictions. For example, computing the Partial dependence plot of the Age feature reveals that the likelihood of hospital readmission increases with age for patients between 60 and 80.
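To make the idea concrete, here is a minimal sketch of how a partial dependence curve can be computed by hand. It is not how DSS implements the plot. The `toy_predict` function, the `prior_visits` feature, and the sample rows are hypothetical stand-ins chosen only for illustration.

```python
from statistics import mean

def partial_dependence(predict, rows, feature, grid):
    """Average prediction when `feature` is forced to each value in `grid`."""
    curve = []
    for value in grid:
        # Overwrite the feature for every row; all other features stay as observed.
        modified = [{**row, feature: value} for row in rows]
        curve.append((value, mean(predict(r) for r in modified)))
    return curve

def toy_predict(row):
    # Hypothetical stand-in for a trained model (NOT the XGBoost model above).
    risk = 0.2 + 0.1 * row["prior_visits"]
    if row["age"] >= 60:
        risk += 0.005 * (min(row["age"], 80) - 60)
    return min(risk, 1.0)

# Made-up sample rows, for illustration only.
rows = [
    {"age": 45, "prior_visits": 0},
    {"age": 67, "prior_visits": 2},
    {"age": 82, "prior_visits": 1},
]

pd_curve = partial_dependence(toy_predict, rows, "age", [50, 60, 70, 80])
for age, avg_prediction in pd_curve:
    print(age, round(avg_prediction, 3))
```

Each point on the curve answers the question "what would the average prediction be if every patient had this age?", which is why the plot isolates the effect of a single feature.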

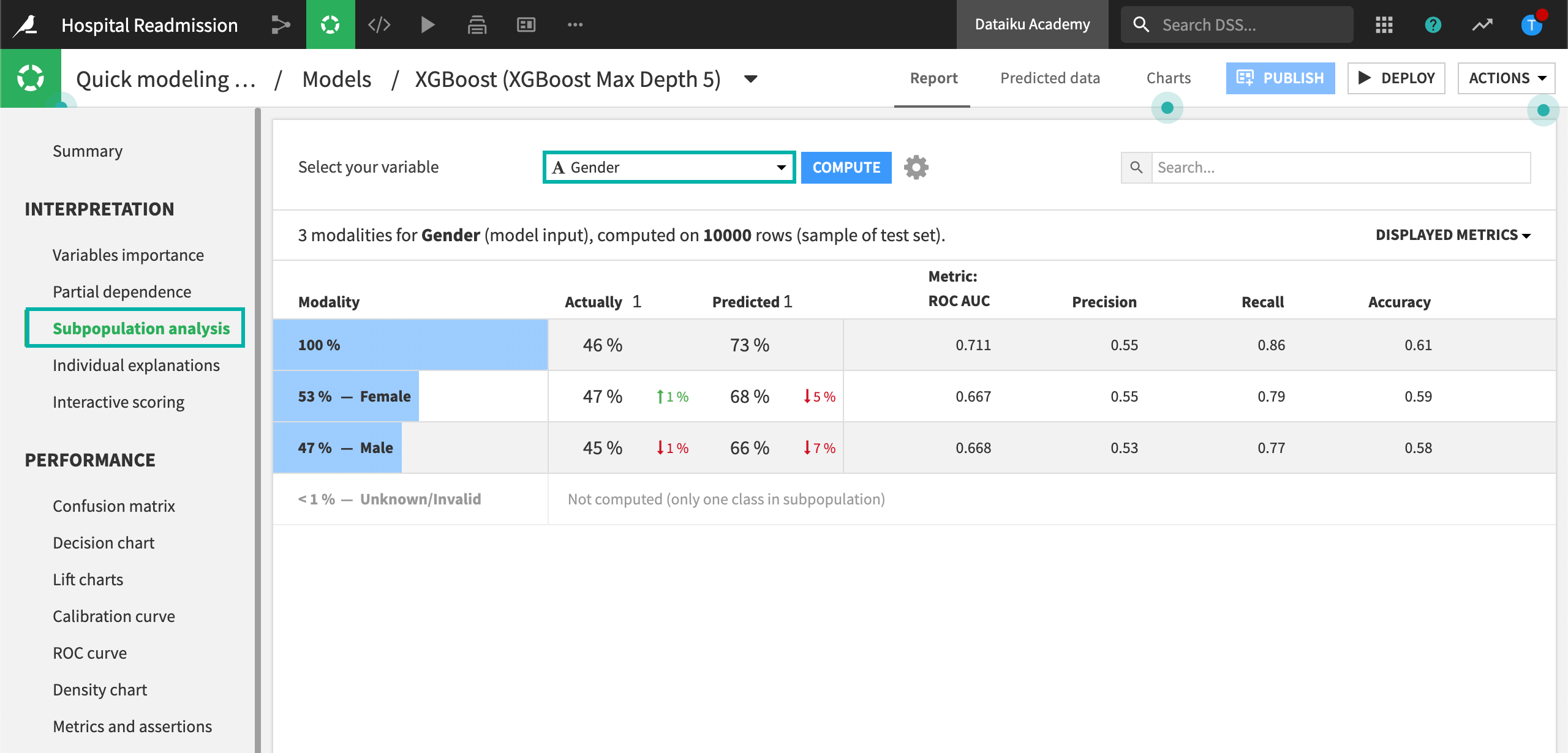

Subpopulation analysis allows us to assess the behavior of the model across different subgroups. For example, we can analyze the model performance based on Gender. The results show similar model behavior across genders, with a slight decrease in performance for male patients.
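As a rough sketch of what a subpopulation analysis does, the snippet below computes one metric (accuracy) separately for each subgroup. The records are made up for illustration and do not come from the hospital readmission dataset. DSS reports more metrics than this.

```python
from collections import defaultdict

def accuracy_by_group(records, group_key):
    """Return the share of correct predictions within each subgroup."""
    hits = defaultdict(int)
    totals = defaultdict(int)
    for rec in records:
        group = rec[group_key]
        totals[group] += 1
        hits[group] += int(rec["actual"] == rec["predicted"])
    return {group: hits[group] / totals[group] for group in totals}

# Made-up records, for illustration only.
records = [
    {"gender": "Female", "actual": 1, "predicted": 1},
    {"gender": "Female", "actual": 0, "predicted": 0},
    {"gender": "Male", "actual": 1, "predicted": 0},
    {"gender": "Male", "actual": 0, "predicted": 0},
]

per_group = accuracy_by_group(records, "gender")
print(per_group)
```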

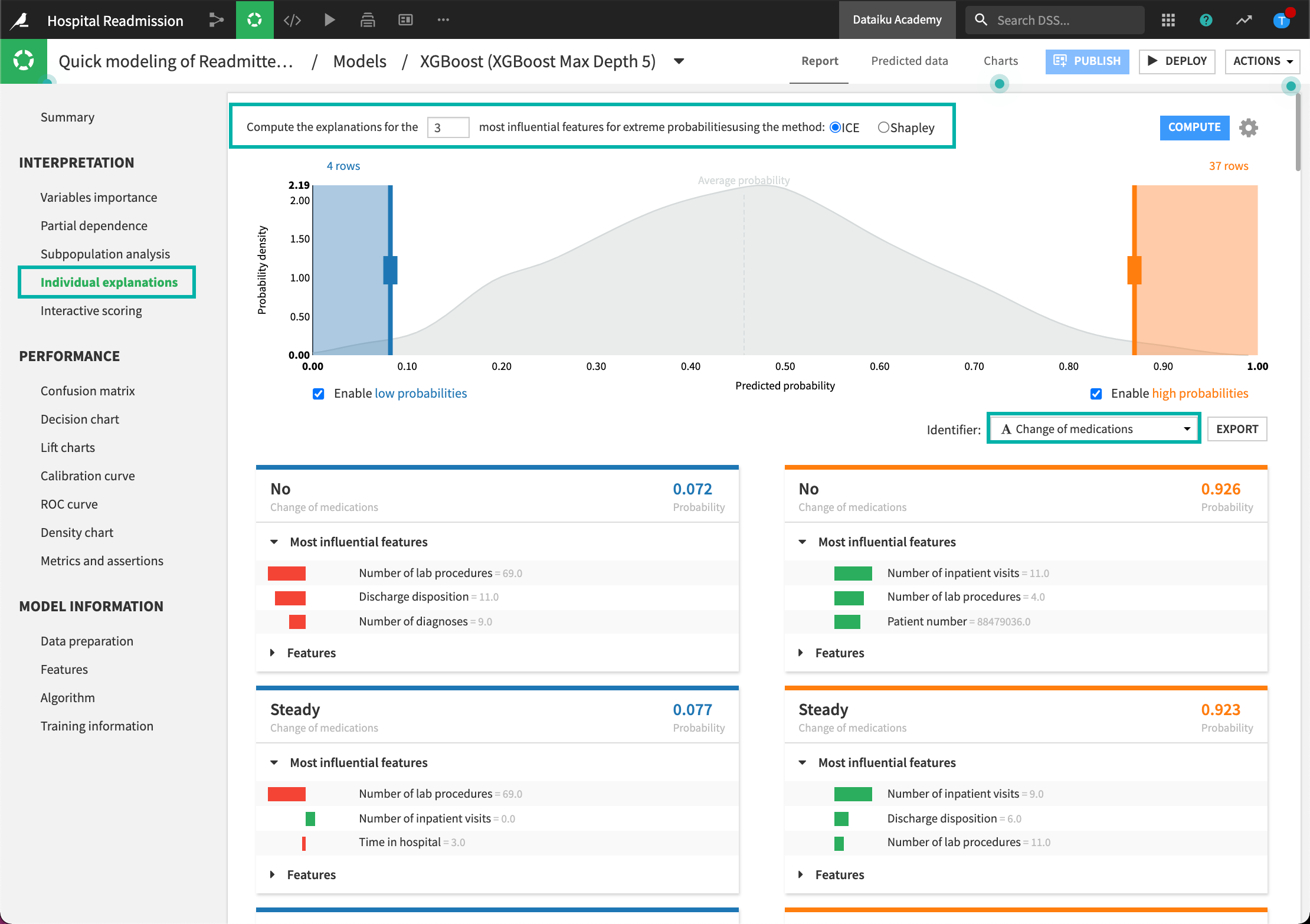

While global variable importance can be a useful metric in determining overall model behavior, it does not provide insights into individual model predictions. DSS allows users to generate individual prediction explanations. For example, we can see that the Discharge Disposition feature heavily influenced our model’s prediction for this individual patient.

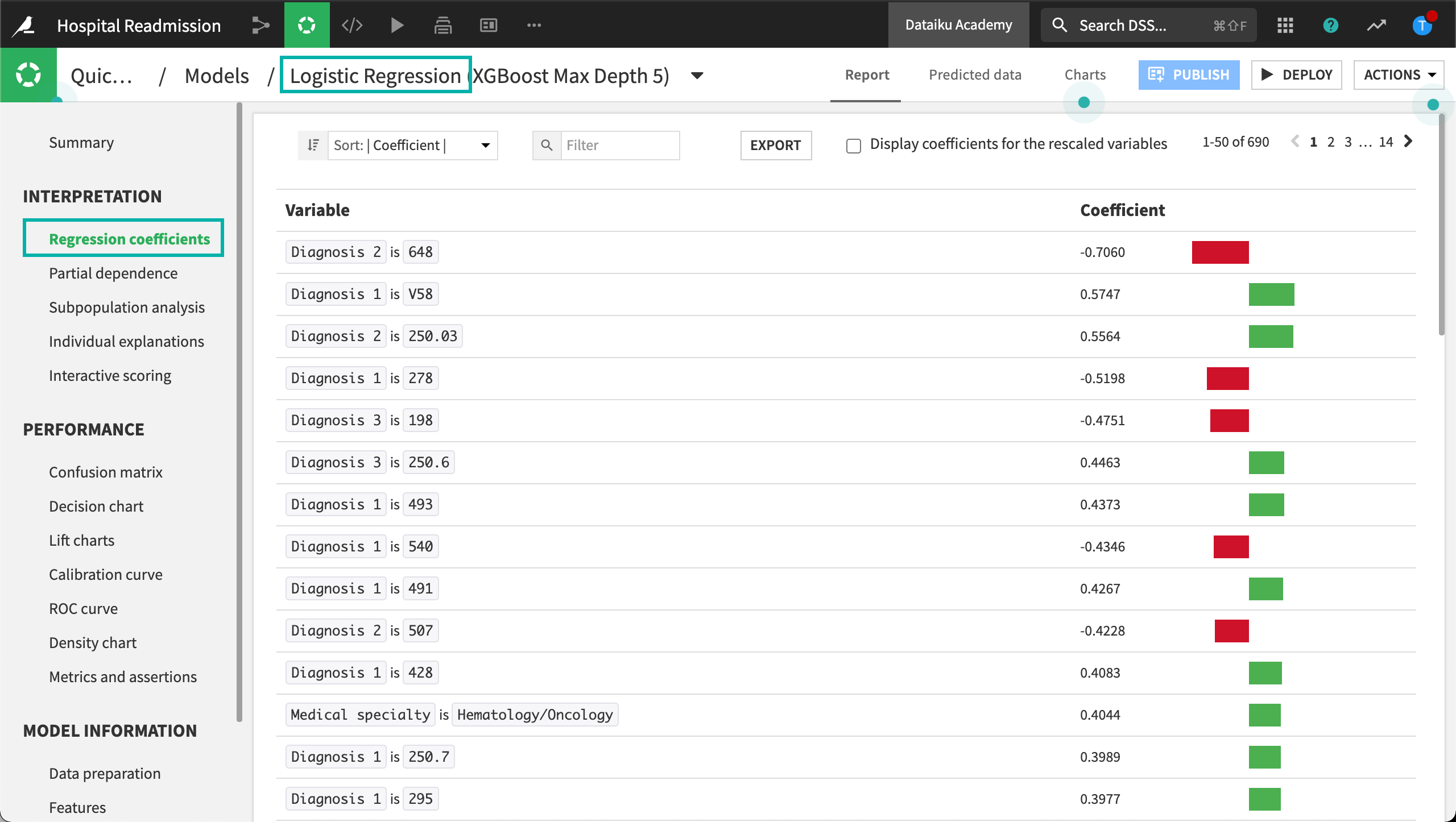

Some of the panels in the Interpretation section are algorithm-dependent. For example, a linear model, such as Logistic Regression, displays the model’s coefficients rather than the variable importance shown for an XGBoost model.

Model Performance

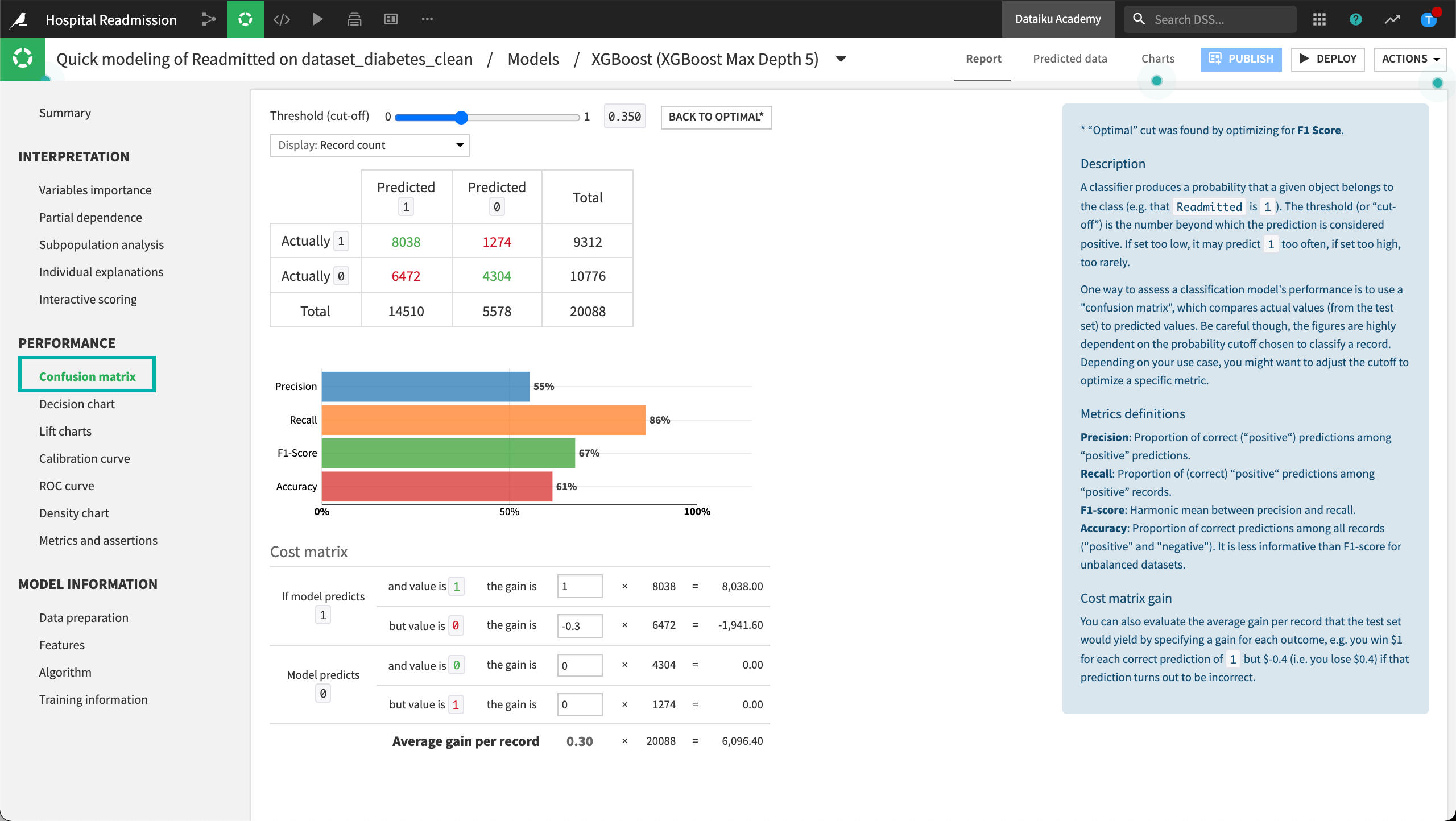

The Confusion matrix compares the actual values of the target variable with the predicted values. In addition, DSS displays some associated metrics, such as “precision”, “recall”, and the “F1-score”. For example, the Confusion matrix shows that our model has a 44% false-positive rate, and a recall of 84%.

By default, DSS displays the Confusion matrix and associated metrics at the optimal threshold (or cut-off). However, manual changes to the cut-off value are reflected in both the Confusion matrix and associated metrics in real time.
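The relationship between the cut-off, the Confusion matrix, and the associated metrics can be sketched in a few lines. The labels and probabilities below are made up. Treating a probability equal to the cut-off as a positive prediction is an assumption of this sketch, not a statement about DSS.

```python
def confusion_counts(actuals, probabilities, cutoff):
    """Count true/false positives and negatives at a given cut-off."""
    tp = fp = tn = fn = 0
    for actual, prob in zip(actuals, probabilities):
        predicted = 1 if prob >= cutoff else 0  # assumption: ties count as positive
        if predicted == 1 and actual == 1:
            tp += 1
        elif predicted == 1 and actual == 0:
            fp += 1
        elif predicted == 0 and actual == 0:
            tn += 1
        else:
            fn += 1
    return tp, fp, tn, fn

def metrics(tp, fp, tn, fn):
    """Derive precision, recall, F1, and false-positive rate from the counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    fpr = fp / (fp + tn) if fp + tn else 0.0
    return {"precision": precision, "recall": recall, "f1": f1, "fpr": fpr}

# Made-up labels and predicted probabilities, for illustration only.
actuals = [1, 1, 1, 0, 0, 0, 0, 1]
probabilities = [0.9, 0.6, 0.35, 0.7, 0.4, 0.2, 0.1, 0.8]

at_half = metrics(*confusion_counts(actuals, probabilities, 0.5))
at_lower = metrics(*confusion_counts(actuals, probabilities, 0.3))
print(at_half)
print(at_lower)
```

Lowering the cut-off from 0.5 to 0.3 in this toy data raises recall but also raises the false-positive rate, which is the trade-off the cut-off slider exposes.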

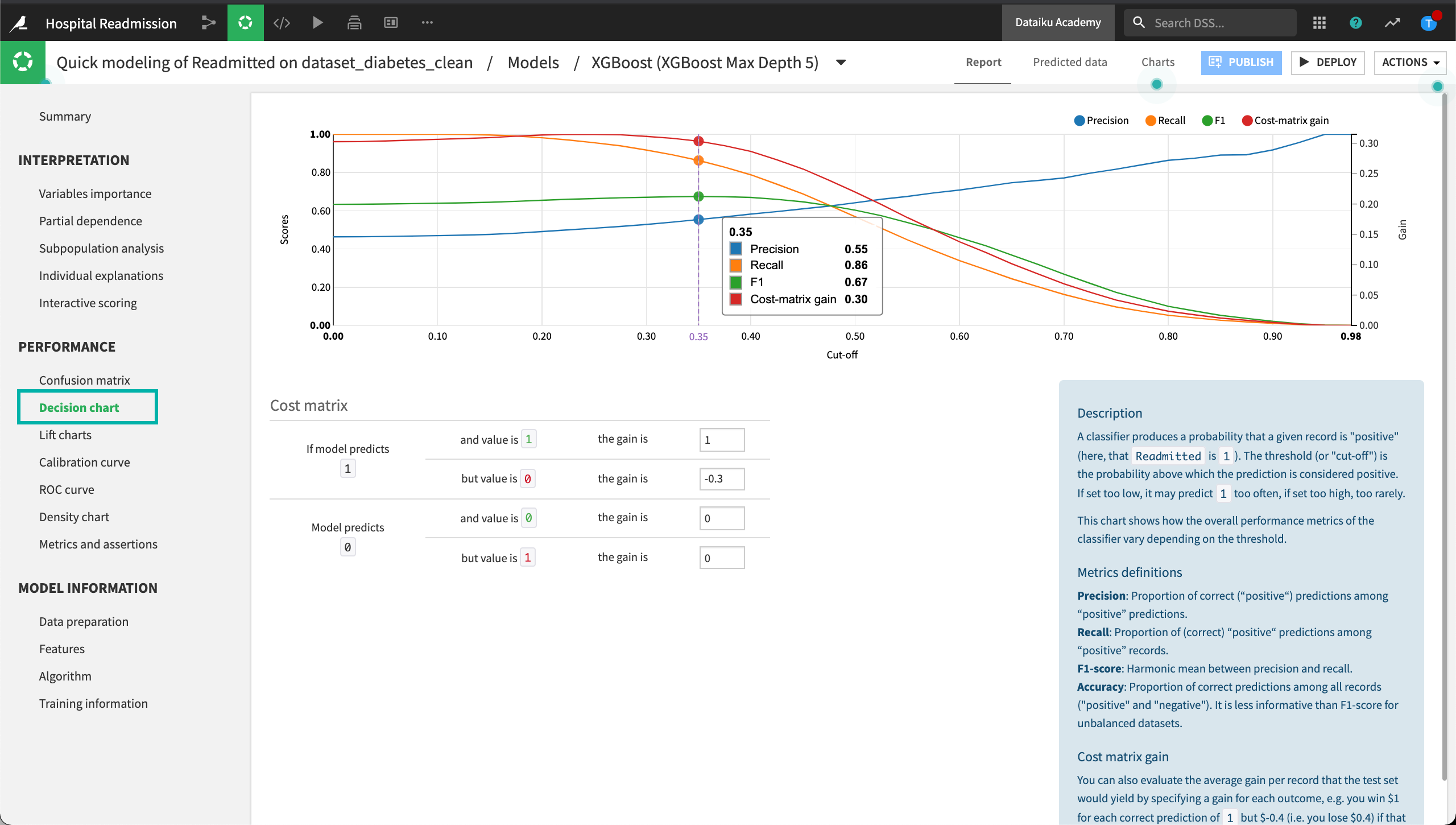

The Decision chart tab displays a graphical representation of the model’s performance metrics for all possible cut-off values.

The Decision chart also shows the location of the optimal cut-off (based on the F1 score), which is 0.38 for our XGBoost model.
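One simple way to locate an F1-optimal cut-off is to evaluate the F1 score over a grid of candidate values and keep the best one. The sketch below does this on made-up data. The grid of 101 evenly spaced candidates is an assumption of this example, and DSS may search differently.

```python
def f1_at(actuals, probabilities, cutoff):
    """F1 score when probabilities at or above `cutoff` are predicted positive."""
    pairs = list(zip(actuals, probabilities))
    tp = sum(1 for a, p in pairs if p >= cutoff and a == 1)
    fp = sum(1 for a, p in pairs if p >= cutoff and a == 0)
    fn = sum(1 for a, p in pairs if p < cutoff and a == 1)
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0

def best_cutoff(actuals, probabilities, steps=100):
    """Return the first candidate cut-off in [0, 1] with the highest F1 score."""
    candidates = [i / steps for i in range(steps + 1)]
    return max(candidates, key=lambda c: f1_at(actuals, probabilities, c))

# Made-up labels and predicted probabilities, for illustration only.
actuals = [1, 1, 1, 0, 0, 0, 0, 1]
probabilities = [0.9, 0.6, 0.35, 0.7, 0.4, 0.2, 0.1, 0.8]

cutoff = best_cutoff(actuals, probabilities)
best_f1 = f1_at(actuals, probabilities, cutoff)
print(cutoff, round(best_f1, 3))
```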

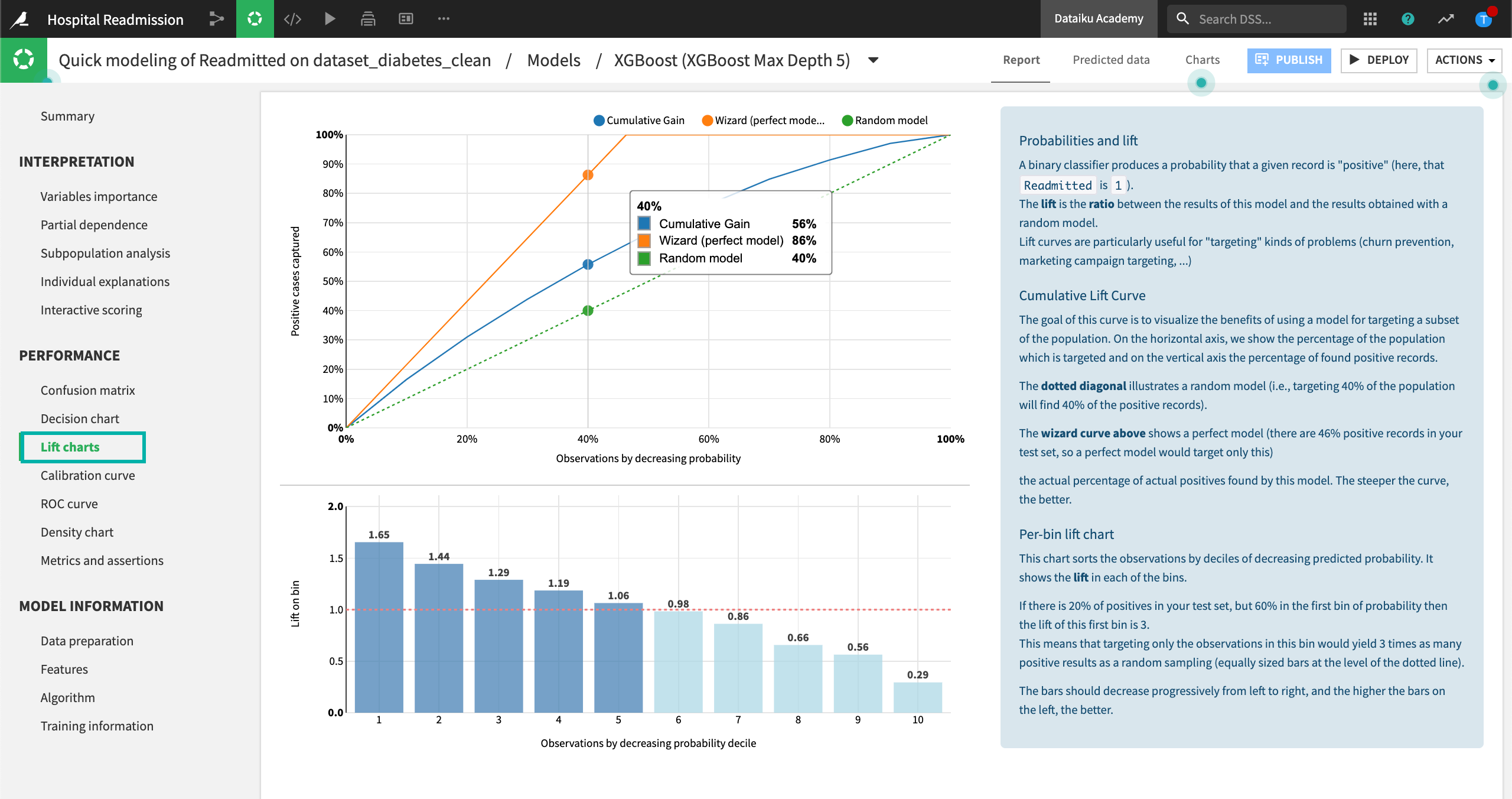

The Lift charts and ROC curve are visual aids that can be used to assess the performance of a machine learning model. The steeper the curves are at the beginning of the graphs, the better the model.
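For intuition, an ROC curve can be traced by ranking the rows from highest to lowest predicted probability and stepping up for each actual positive and right for each actual negative. The sketch below uses made-up data and assumes no two rows share the same probability.

```python
def roc_points(actuals, probabilities):
    """Return (false-positive rate, true-positive rate) points of the ROC curve."""
    positives = sum(actuals)
    negatives = len(actuals) - positives
    # Rank rows from most to least likely positive (assumes no tied probabilities).
    ranked = sorted(zip(probabilities, actuals), reverse=True)
    points = [(0.0, 0.0)]
    tp = fp = 0
    for _, actual in ranked:
        if actual == 1:
            tp += 1
        else:
            fp += 1
        points.append((fp / negatives, tp / positives))
    return points

def auc(points):
    """Area under the curve using the trapezoidal rule."""
    return sum((x1 - x0) * (y0 + y1) / 2
               for (x0, y0), (x1, y1) in zip(points, points[1:]))

# Made-up labels and predicted probabilities, for illustration only.
actuals = [1, 1, 1, 0, 0, 0, 0, 1]
probabilities = [0.9, 0.6, 0.35, 0.7, 0.4, 0.2, 0.1, 0.8]

points = roc_points(actuals, probabilities)
area = auc(points)
print(points)
print(area)
```

A curve that climbs steeply at the start means the highest-scored rows are mostly true positives, which is why a steep initial slope indicates a better model.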

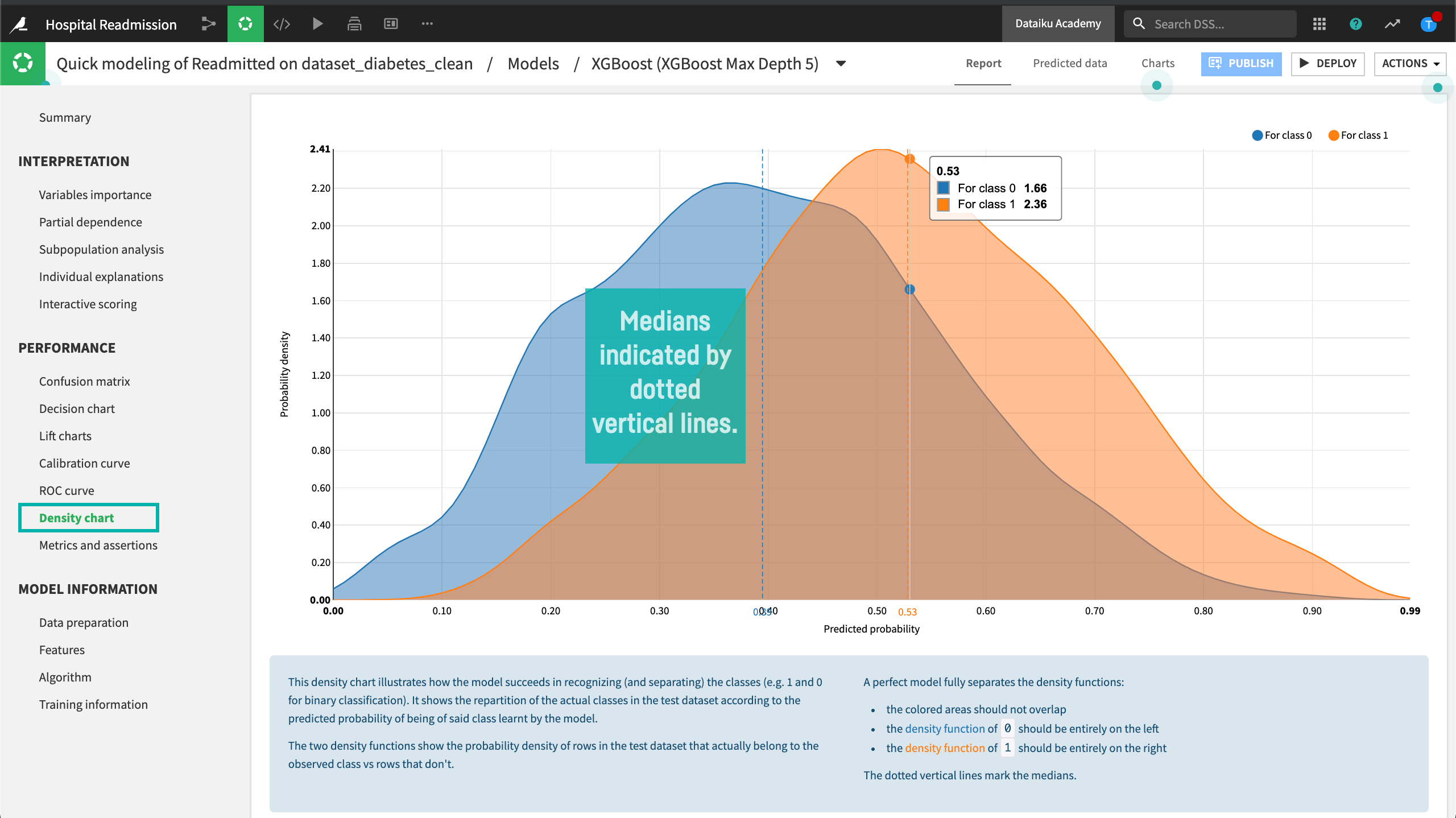

The Density chart illustrates how well the model succeeds in recognizing and separating the classes. While in a perfect model the probability densities for the different classes would not overlap, this is almost never the case for models trained on real data. For our XGBoost model, we are able to observe two distinct distributions, with their medians separated by a 12% predicted probability.

Model Information

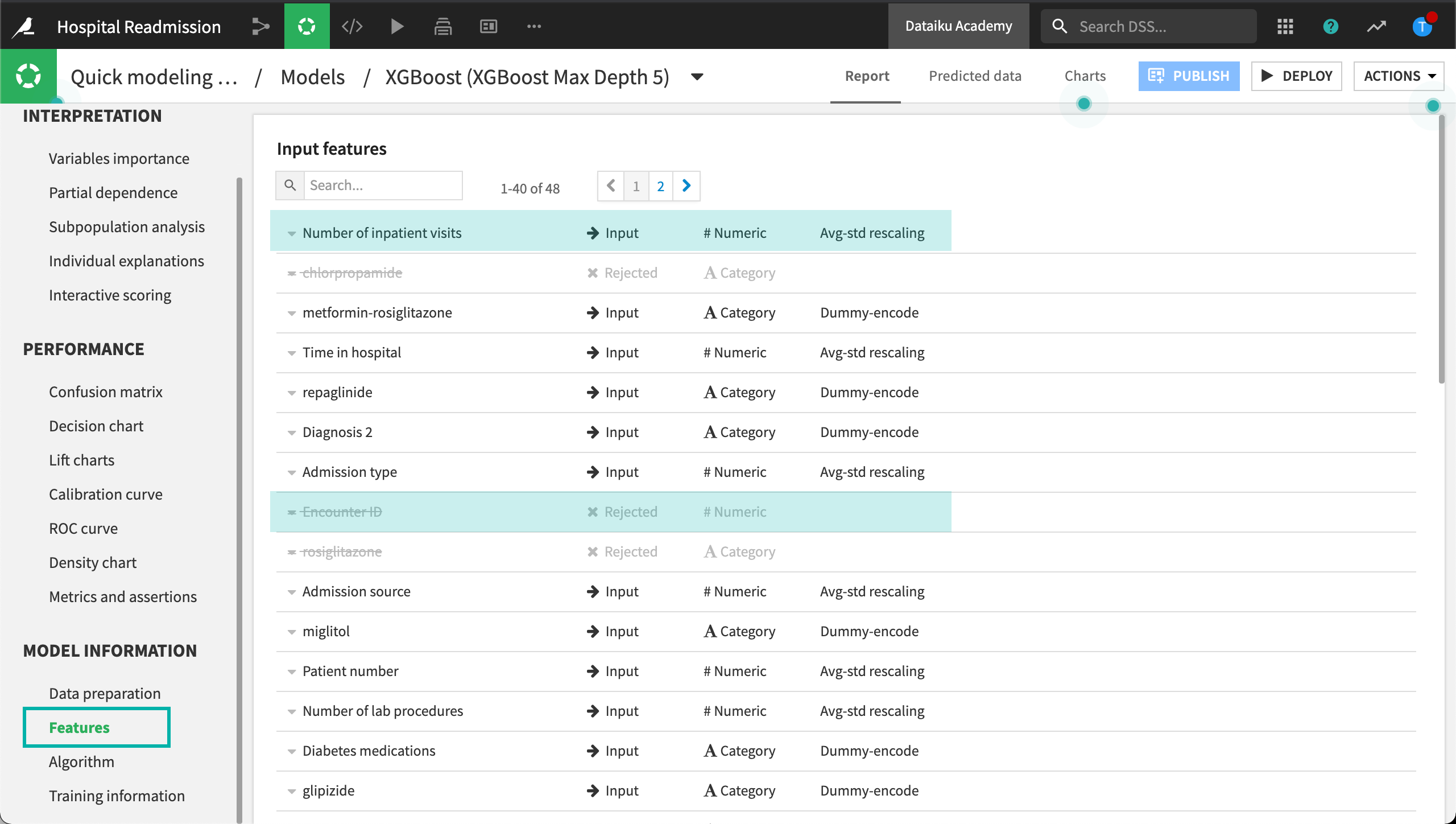

Finally, let’s explore the Model Information section. The Features tab includes information on feature handling, as well as a list of all preprocessed features. For our XGBoost model, we can see that the Insulin feature was rejected, while the Number of Emergency Visits was processed as a numeric feature and standardized.

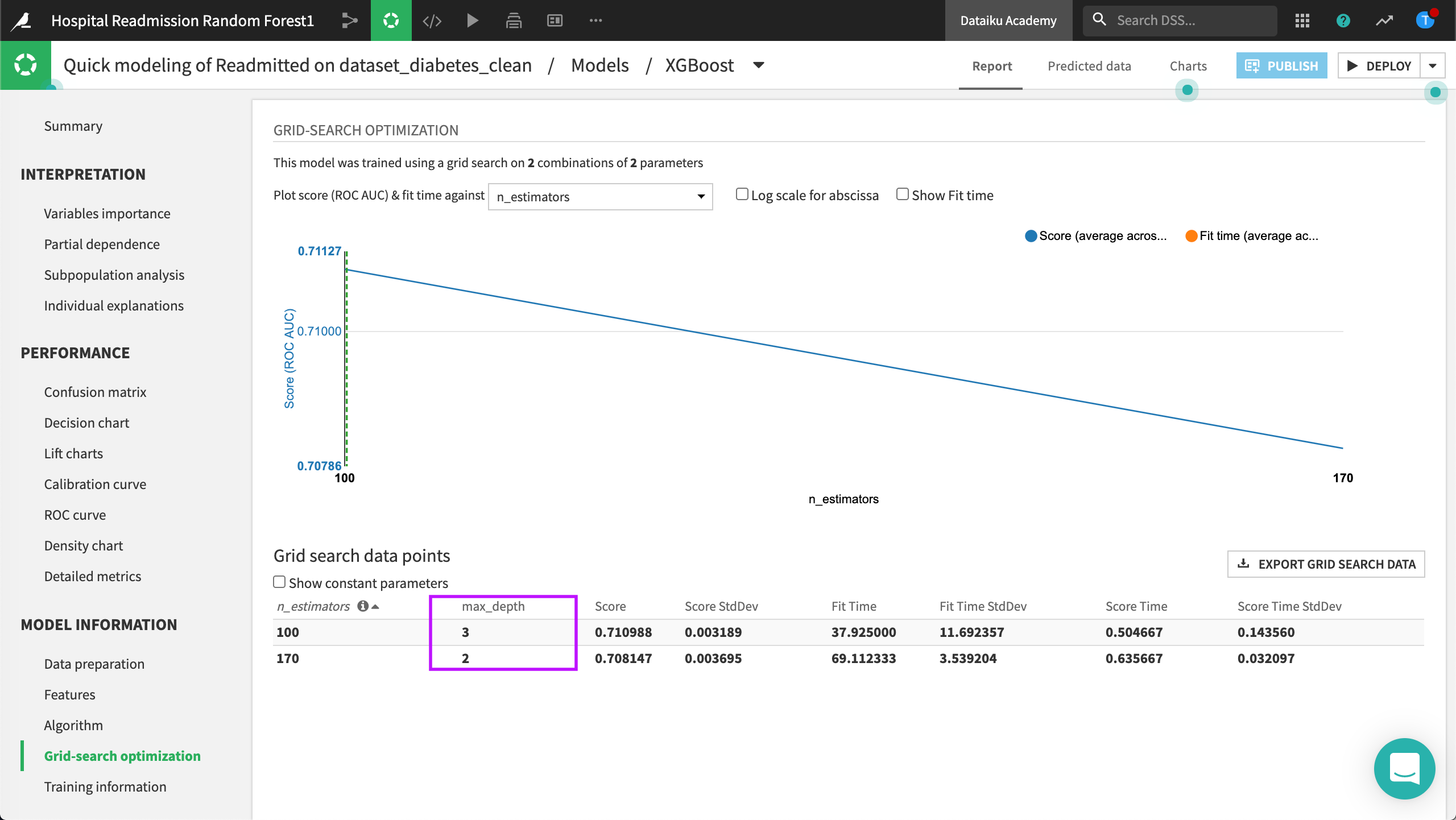

The Grid-search optimization panel shows a history of all models trained during the hyperparameter grid-search. In our case, we can see that DSS trained two XGBoost models, varying the “max_depth” hyperparameter from 2 to 3.

The Algorithm panel contains information on the optimum model resulting from the hyperparameter grid search. For our XGBoost model, we can see that DSS selected the XGBoost model with a “Max tree depth” of 3.
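Conceptually, a hyperparameter grid search trains one model per combination of candidate values, records each score, and keeps the best combination. The sketch below shows that loop. The `toy_score` function and its values are made up for illustration. In practice the score would come from training and validating a real model.

```python
from itertools import product

def grid_search(param_grid, score):
    """Score every parameter combination; return the best one and the full history."""
    names = list(param_grid)
    history = []
    for values in product(*(param_grid[name] for name in names)):
        params = dict(zip(names, values))
        history.append((params, score(params)))
    best_params, _ = max(history, key=lambda item: item[1])
    return best_params, history

def toy_score(params):
    # Hypothetical stand-in: these scores are invented, not results from DSS.
    return {2: 0.70, 3: 0.72}[params["max_depth"]]

best, history = grid_search({"max_depth": [2, 3]}, toy_score)
print(best)
print(history)
```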