Automate the Heavy Lifting

In this section, we’ll automate the metrics and checks we created earlier.

Rebuilding a data pipeline by hand becomes monotonous when its datasets are continuously refreshed. Dataiku’s automation features let you automate these manual tasks, including intelligent data pipeline rebuilding, model retraining, reporting, and deployment.

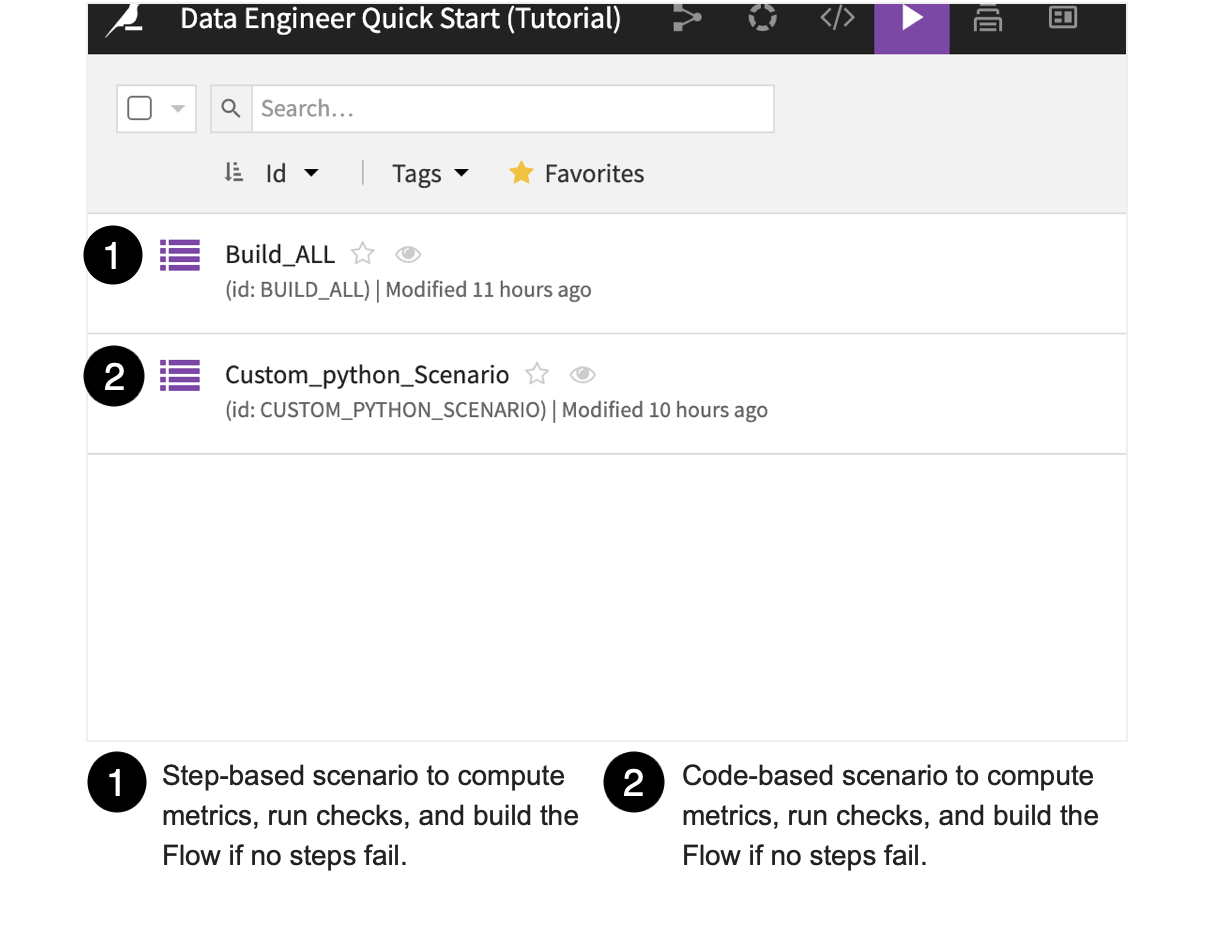

Let’s look at ways to use scenarios so that the workflow is built and the checks are performed automatically. In Dataiku DSS, we can use both step-based (point-and-click) and code-based scenarios. We’ll use both types for comparison.

Since our upstream datasets, flight_data_input and flight_input_prepared, will change whenever we have fresh data, we want to be able to automatically monitor their data quality and only build the model evaluation dataset, flight_data_evaluated, if all dataset metrics meet the specific conditions we created.

To do this, we’ll set up an automation scenario to monitor checks of the metrics that have already been created for these datasets.
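The gating idea described above (build the final dataset only when every check passes) can be sketched in plain Python. This is a minimal illustration, not Dataiku's implementation: the `RangeCheck` class, the metric names `record_count` and `missing_ratio`, and the thresholds are all hypothetical stand-ins for the checks created earlier in the project.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class RangeCheck:
    """Hypothetical numeric-range check on a single metric value."""
    metric: str
    minimum: Optional[float] = None
    maximum: Optional[float] = None

    def evaluate(self, metrics: dict) -> str:
        value = metrics.get(self.metric)
        if value is None:
            return "EMPTY"  # the metric was never computed
        if self.minimum is not None and value < self.minimum:
            return "ERROR"
        if self.maximum is not None and value > self.maximum:
            return "ERROR"
        return "OK"


def all_checks_pass(checks, metrics) -> bool:
    """Return True only if every check evaluates to OK."""
    return all(check.evaluate(metrics) == "OK" for check in checks)


# Hypothetical checks and metric values, for illustration only.
checks = [
    RangeCheck("record_count", minimum=1),
    RangeCheck("missing_ratio", maximum=0.05),
]
fresh_metrics = {"record_count": 12000, "missing_ratio": 0.01}
empty_metrics = {"record_count": 0, "missing_ratio": 0.01}

if all_checks_pass(checks, fresh_metrics):
    print("Checks passed: safe to build flight_data_evaluated")
```

Here a missing metric is treated as a failure, which is the conservative choice for a data quality gate; a real project might decide otherwise.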

Automate Metrics and Checks Using a Step-Based Scenario

Let’s look at automating metrics and checks using a step-based scenario. We’ll also learn about scenario triggers and reporters.

Go to the Flow.

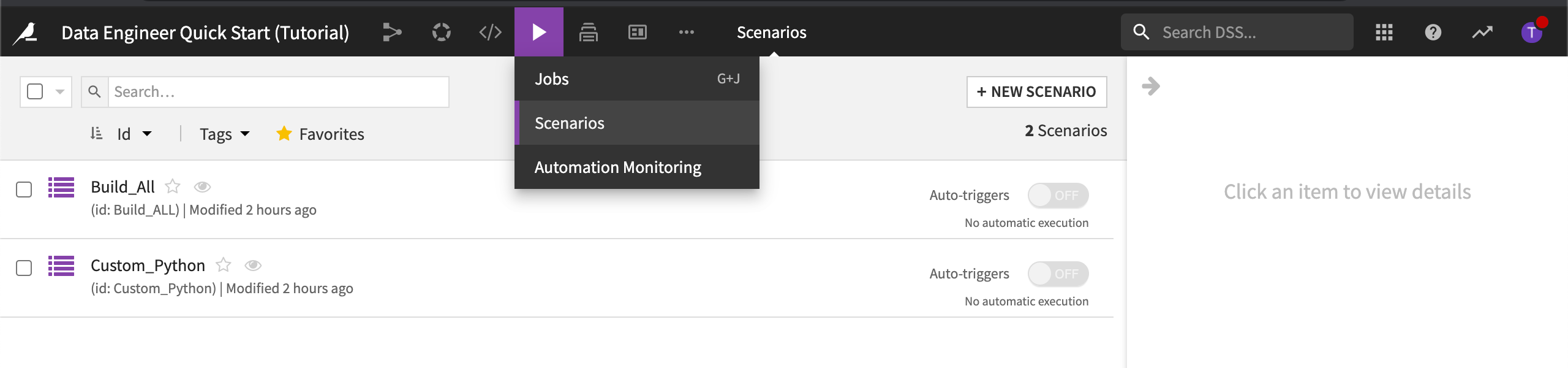

From the Jobs dropdown in the top navigation bar, select Scenarios.

Select the Build_All scenario to open it.

Go to the Steps tab.

Creating the Steps of the Scenario

The Build_All scenario contains the steps needed to build the flight input datasets, compute the metrics and run the checks, then build and compute metrics for the final dataset in the Flow.

Open the step used to build flight_data_evaluated.

The final step builds flight_data_evaluated only if no prior step failed. Its Build mode is set to Build required datasets (also known as smart reconstruction). In this mode, Dataiku DSS checks each dataset and recipe upstream of the selected dataset to see if it has been modified more recently than the selected dataset, then rebuilds all impacted datasets down to the selected dataset.
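To make the idea of smart reconstruction concrete, here is a simplified sketch of a staleness walk over a dependency graph. It is not Dataiku's actual algorithm: the function `datasets_to_rebuild`, the integer timestamps, and the assumption that the three tutorial datasets form a straight chain are all illustrative.

```python
def datasets_to_rebuild(target, inputs, modified):
    """Return the datasets to rebuild, upstream first.

    A dataset is considered stale if any of its inputs is stale or was
    modified more recently than the dataset itself.
    """
    plan = []
    stale_cache = {}

    def is_stale(name):
        if name in stale_cache:
            return stale_cache[name]
        stale = False
        # Visit every input (no early exit) so all stale ancestors are planned.
        for parent in inputs.get(name, []):
            parent_stale = is_stale(parent)
            if parent_stale or modified[parent] > modified[name]:
                stale = True
        stale_cache[name] = stale
        if stale:
            plan.append(name)  # parents were appended before this point
        return stale

    is_stale(target)
    return plan


# Assumed, simplified Flow: a linear chain of the three tutorial datasets.
inputs = {
    "flight_input_prepared": ["flight_data_input"],
    "flight_data_evaluated": ["flight_input_prepared"],
}

# Fresh data arrived in flight_data_input (larger number = more recent).
after_refresh = {
    "flight_data_input": 3,
    "flight_input_prepared": 2,
    "flight_data_evaluated": 2,
}
print(datasets_to_rebuild("flight_data_evaluated", inputs, after_refresh))

# Nothing changed upstream since the last build.
up_to_date = {
    "flight_data_input": 1,
    "flight_input_prepared": 2,
    "flight_data_evaluated": 3,
}
print(datasets_to_rebuild("flight_data_evaluated", inputs, up_to_date))
```

When nothing upstream has changed, the plan is empty and no work is done, which is what makes this strategy cheaper than rebuilding everything on each run.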

For more information, see “Can I control which datasets in my Flow get rebuilt during a scenario?”

Note

Depending on your project and the desired outcome, the build strategy you follow may be different than the strategy used in this tutorial. For example, if there is a build step that builds the final dataset in the Flow, and the Build mode is set to Force-rebuild dataset and dependencies, Dataiku DSS would rebuild the dataset and all of its dependencies, including any upstream datasets. This build mode is more computationally intensive.

Triggering the Scenario to Run

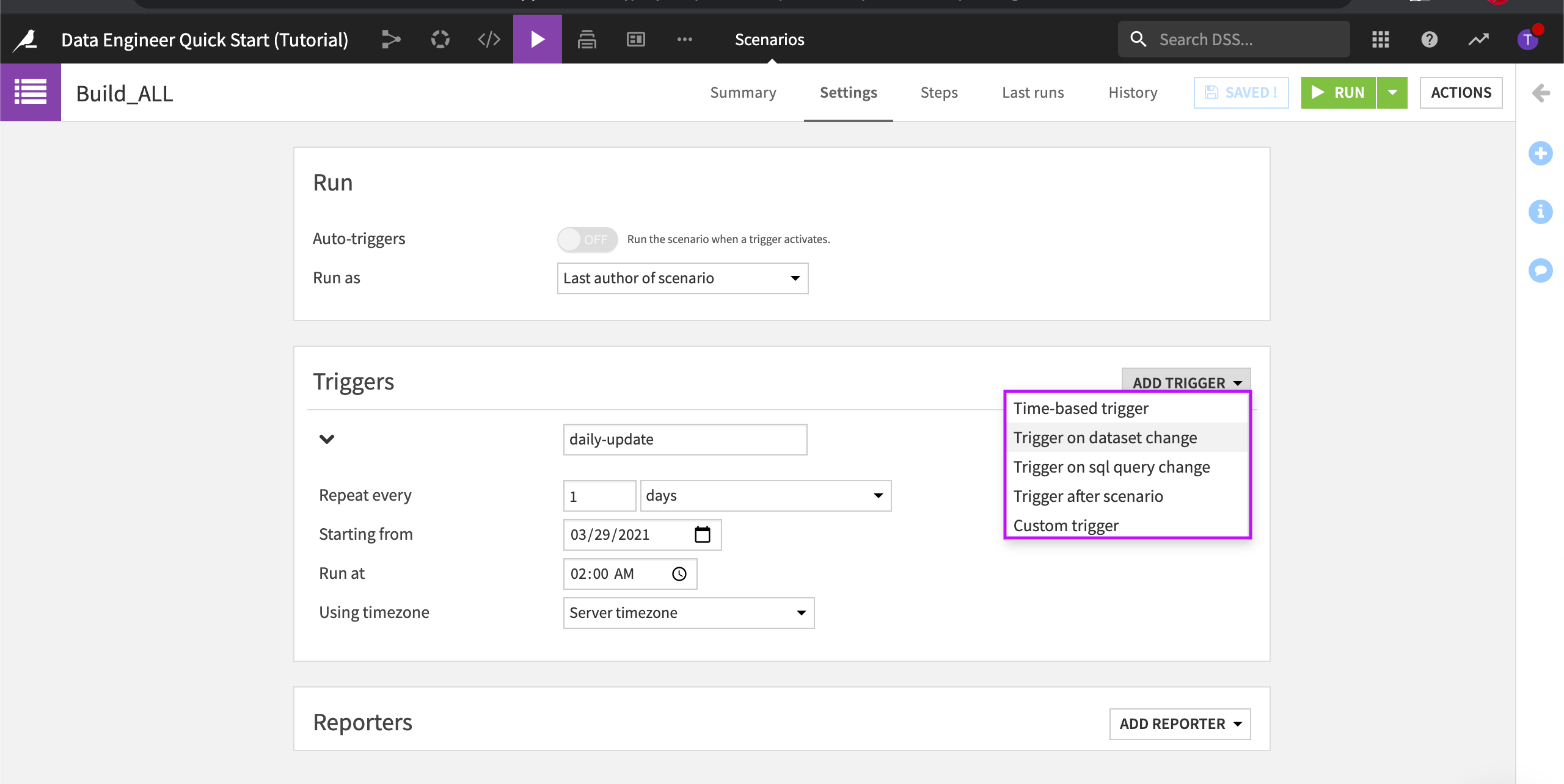

Triggers are used to automatically start a scenario, based on one or more conditions. Each trigger can be enabled or disabled. In addition, the scenario must be marked as “active” for triggers to be evaluated.

Go to the Settings tab.

In Triggers, expand the daily-update trigger to learn more about it.

This particular trigger is set to repeat daily. Dataiku DSS lets you add several types of triggers including time-based triggers and dataset modification triggers. You can even code your own triggers using Python.
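As a rough illustration of what a coded trigger might decide, the sketch below combines a time-based condition with a dataset-modification condition. The function `should_fire` and its parameters are hypothetical, and the code deliberately avoids the Dataiku API so that it runs anywhere; inside a real custom Python trigger, a `True` result would be followed by whatever call your Dataiku version provides for firing the trigger.

```python
from datetime import datetime, timedelta


def should_fire(last_run, source_modified, now, min_interval=timedelta(hours=24)):
    """Decide whether a scenario run should start.

    Fires when the source data changed since the last run and at least
    `min_interval` has elapsed. A scenario that never ran always fires.
    """
    if last_run is None:
        return True
    changed = source_modified > last_run
    waited = (now - last_run) >= min_interval
    return changed and waited


# Hypothetical timestamps, for illustration only.
now = datetime(2024, 1, 3, 9, 0)
last_run = datetime(2024, 1, 2, 8, 0)
fresh_data = datetime(2024, 1, 3, 7, 30)
stale_data = datetime(2024, 1, 1, 7, 30)

print(should_fire(last_run, fresh_data, now))
print(should_fire(last_run, stale_data, now))
```

Combining both conditions avoids rerunning the scenario on unchanged data while still limiting how often it can run.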

Reporting on Scenario Runs

In Reporters, click Add Reporter to see the available options.

We can use reporters to inform our team members about scenario activities. For example, scenario reporters can send updates about the training of models or changes in data quality. Reporters can also create actionable messages that users can receive within their email or through other messaging channels.

Automate Metrics and Checks Using a Code-Based Scenario

Let’s discover how we can automate metrics and checks using a code-based scenario.

From the list of scenarios, select the Custom_Python scenario to open it.

Go to the Script tab.

Run the script.

This script performs the same steps as in the step-based scenario.
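The script shipped with the project is not reproduced here, but its overall shape can be sketched as follows. This is an assumption-laden outline rather than the actual Custom_Python script: the method names `build_dataset`, `compute_dataset_metrics`, and `run_dataset_checks` are assumed to mirror those of the `Scenario` class in `dataiku.scenario`, and `RecordingScenario` is a hypothetical stand-in that lets the sketch run outside Dataiku.

```python
INPUT_DATASETS = ["flight_data_input", "flight_input_prepared"]
FINAL_DATASET = "flight_data_evaluated"


def run_build_all(scenario):
    """Build inputs, verify their quality, then build the final dataset."""
    for name in INPUT_DATASETS:
        scenario.build_dataset(name)
        scenario.compute_dataset_metrics(name)
        # Assumed behavior: a failed check raises and stops the script.
        scenario.run_dataset_checks(name)
    # Reached only if no earlier step raised.
    scenario.build_dataset(FINAL_DATASET)
    scenario.compute_dataset_metrics(FINAL_DATASET)


class RecordingScenario:
    """Hypothetical stand-in that records calls instead of running jobs."""

    def __init__(self, failing_checks=()):
        self.calls = []
        self.failing_checks = set(failing_checks)

    def build_dataset(self, name):
        self.calls.append(("build", name))

    def compute_dataset_metrics(self, name):
        self.calls.append(("metrics", name))

    def run_dataset_checks(self, name):
        self.calls.append(("checks", name))
        if name in self.failing_checks:
            raise RuntimeError(f"Checks failed on {name}")


# Inside Dataiku, the argument would instead be a real scenario object.
demo = RecordingScenario()
run_build_all(demo)
for step, dataset in demo.calls:
    print(step, dataset)
```

Because a failed check raises an exception before the final build is reached, the script gets the same "build only if no prior step failed" behavior as the step-based scenario without any explicit conditional.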