Demand dataset¶

Create a new dataset from the yearly demand files (i.e. accidents.zip). Since all the files have the same structure, we can drag and drop the zip file in a single upload, and Dataiku will stack the four CSV files automatically. Name the output accidents_database.

The accidents_database first needs cleaning in order to be suitable for analysis. The main preparation steps we will undertake include:

Cleaning and parsing the date for each observation

Cleaning longitudes and latitudes and creating geopoints

Enriching geopoints with administrative information

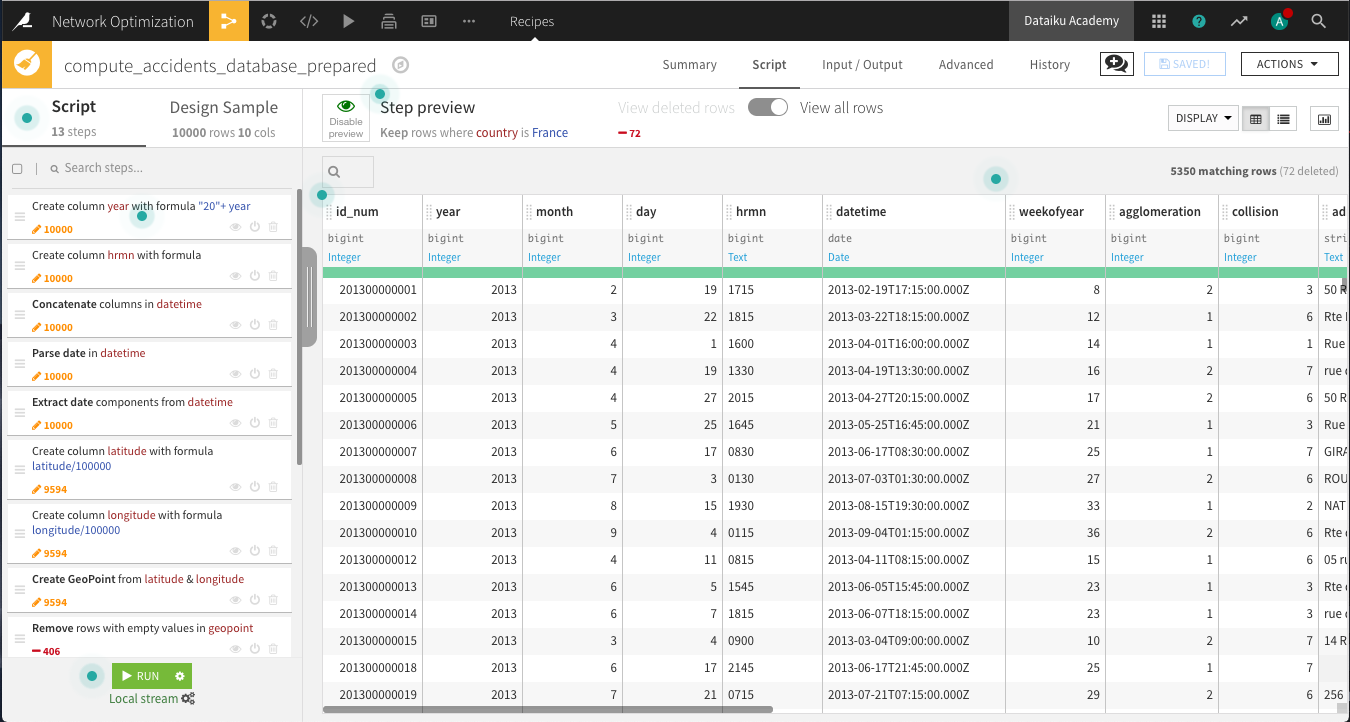

From the accidents_database, create a Prepare recipe and store the output in the default name, accidents_database_prepared.

Cleaning Dates and Times¶

To get a recognizable datetime object, we will need to clean and concatenate the year, month, day and hrmn columns by adding the following steps to the Prepare script.

The year column has two-digit years instead of four. Add a new step using the Formula processor. Name the output column year, and use the expression

"20" + year.There are inconsistencies in the number of digits in the hrmn column, which represents the hours and minutes. Convert it to a 24-hour (military time) format using the Formula processor. Name the output column hrmn. Copy and paste the expression below, so that 12:30 am will be written as “0030”, 6:45am as “0645”, 10:00 pm as “2200”, and so on. In other words, the output of each row in the column should contain four digits according to a 24-hour clock format.

if(length(hrmn) == 3,"0"+hrmn,

if(length(hrmn) == 2,"00"+hrmn,

if(length(hrmn) == 1,"000"+hrmn,hrmn)))

Use a Concatenate columns processor to merge the year, month, day, and hrmn columns into an output column

datetime, using-as the delimiter.Because the new datetime column is stored as a string, use the Parse date processor in-place on datetime as the input column. Depending on your sample configuration, the likely format will be

yyyy-MM-dd-HHmmoryyyy-MM-ddZ. Be sure the new storage type is date.Use another processor to Extract date components from the datetime column. Only create one new additional column,

weekofyear, to mark the particular week in a year (1-52).

Cleaning Geographic Columns¶

The latitude and longitude columns are not yet in the right format. We want to use the WGS 84 coordinates system, so these columns should be decimal values. Add a Formula processor that divides latitude by

100000.Repeat the same for longitude.

Use the Create GeoPoint from lat/lon processor with the input columns latitude and longitude. Name the output

geopoint.

Click on the geopoint column header and select Analyze. Note that about 45% of the column values are “POINT(0 0)” or empty. Discard these observations in the next two steps:

Use the Remove rows where cell is empty processor on geopoint. You can also find it in the More actions menu after selecting the column header.

Use the Filter rows/cells on value processor on geopoint to remove matching rows where geopoint has the value

POINT(0 0).

Use the Reverse-geocode location processor on the geopoint column, producing the output columns named city (level 8), department (level 6), region (level 4) and country (level 2).

Note

If you have successfully installed the Reverse Geocoding plugin, but run into difficulty using it the first time, note that the Reverse Geocoding plugin is one of the few plugins that requires restarting Dataiku DSS before using it.

Delete the four columns with the “_enName” suffix. Switching to the Columns view makes this especially easy.

Keep only the rows for which country is

France. Using the Filter rows/cells on value processor, select country as the column, and addFranceas the value to match.

Run the recipe, updating the schema to 18 columns. We now have a clean dataset of the dates, times, and geographic locations of car accidents in France over a four year period.