Preparing the Usage Dataset

The usage dataset tracks the mileage for rental cars, identified by their Asset ID, at a given point in Time.

The Use variable records the total number of miles a car has driven at the specified Time.

The units of the Time variable are unclear; they might be days elapsed since a particular reference date. This is a question for the data engineers who produced the dataset.

Asset ID is not unique: the same rental car can appear in more than one row.
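Before aggregating, you can confirm that Asset ID repeats by counting rows per ID. The following is a minimal sketch in plain Python, not a DSS feature. It assumes the rows have already been read into dictionaries keyed by column name. The column name `Asset` and all values are hypothetical.

```python
from collections import Counter

# Hypothetical rows from the usage dataset; values stay strings, as in the raw CSV.
rows = [
    {"Asset": "A1", "Time": "100", "Use": "1200.5"},
    {"Asset": "A1", "Time": "130", "Use": "1750.0"},
    {"Asset": "A2", "Time": "110", "Use": "300.2"},
]

# Count how many rows each Asset ID has.
rows_per_asset = Counter(row["Asset"] for row in rows)

# Any ID counted more than once shows that Asset ID is not a unique key.
repeated_ids = sorted(asset for asset, n in rows_per_asset.items() if n > 1)
print(repeated_ids)  # ['A1']
```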

After importing the CSV file, the Explore tab shows that all columns are stored simply as “string” type (the grey text beneath the column header), even though Dataiku DSS interprets their meanings as “text”, “integer”, and “decimal” (the blue text beneath the storage type).

Note

For more information, see the reference documentation on the distinction between storage types and meanings.

With the data stored as strings, we can’t perform mathematical operations on seemingly numeric columns such as Use. Let’s fix this.
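Why does string storage matter? A quick Python illustration of the general problem helps (this is not how DSS evaluates columns internally). Strings are compared character by character and joined rather than added. The Use values are hypothetical.

```python
# Hypothetical Use readings as they look when stored as strings.
use_as_strings = ["950", "1200", "80"]

# String comparison is character by character, so "950" beats "1200".
print(max(use_as_strings))  # 950

# "+" on strings concatenates instead of adding.
print(use_as_strings[0] + use_as_strings[1])  # 9501200

# Converting to numbers restores the intended behavior.
use_as_numbers = [float(v) for v in use_as_strings]
print(max(use_as_numbers))  # 1200.0
print(sum(use_as_numbers))  # 2230.0
```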

Navigate to the Settings > Schema tab, which shows the storage types and meanings for all columns.

Click Check Now to verify that the schema and data are consistent.

Then click Infer Types from Data to let DSS assign new storage types based on the data.

Returning to the Explore tab, note that the meanings (in blue) have not changed, but the storage types (in grey) have been updated to “string”, “bigint”, and “double”.

Note

As the UI warns, this action considers only the current sample, so use it with caution. Dataiku DSS offers several ways to perform type inference, each with its own pros and cons depending on your objectives. Creating a Prepare recipe, for example, is another way to have DSS infer types.
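DSS’s actual inference rules are not covered here. The following is only a simplified, hypothetical Python sketch of sample-based type inference. It shows why the caution above matters: a rule that sees only the first rows can pick a numeric type that the full column does not support. The function name `infer_storage_type` and all data are assumptions for illustration.

```python
def infer_storage_type(values):
    """Hypothetical rule: 'bigint' if every value parses as an int,
    'double' if every value parses as a float, otherwise 'string'."""

    def parses(convert, value):
        try:
            convert(value)
            return True
        except ValueError:
            return False

    if all(parses(int, v) for v in values):
        return "bigint"
    if all(parses(float, v) for v in values):
        return "double"
    return "string"


# Hypothetical columns resembling Asset, Time, and Use.
print(infer_storage_type(["A1", "A2"]))    # string
print(infer_storage_type(["100", "130"]))  # bigint

# The first rows of Use look numeric, but a later row does not.
use_column = ["1200.5", "1750.0", "300.2", "n/a"]
sample = use_column[:3]                # inference on a sample of the first rows only
print(infer_storage_type(sample))      # double
print(infer_storage_type(use_column))  # string
```

Here the sample-based guess (“double”) would fail on the unseen `n/a` row, which is the risk the note describes.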

Most cars have many Use readings taken at different times. However, we want one row per car so that we can model outcomes at the vehicle level. With the correct storage types in place, we can perform this aggregation with a visual recipe:

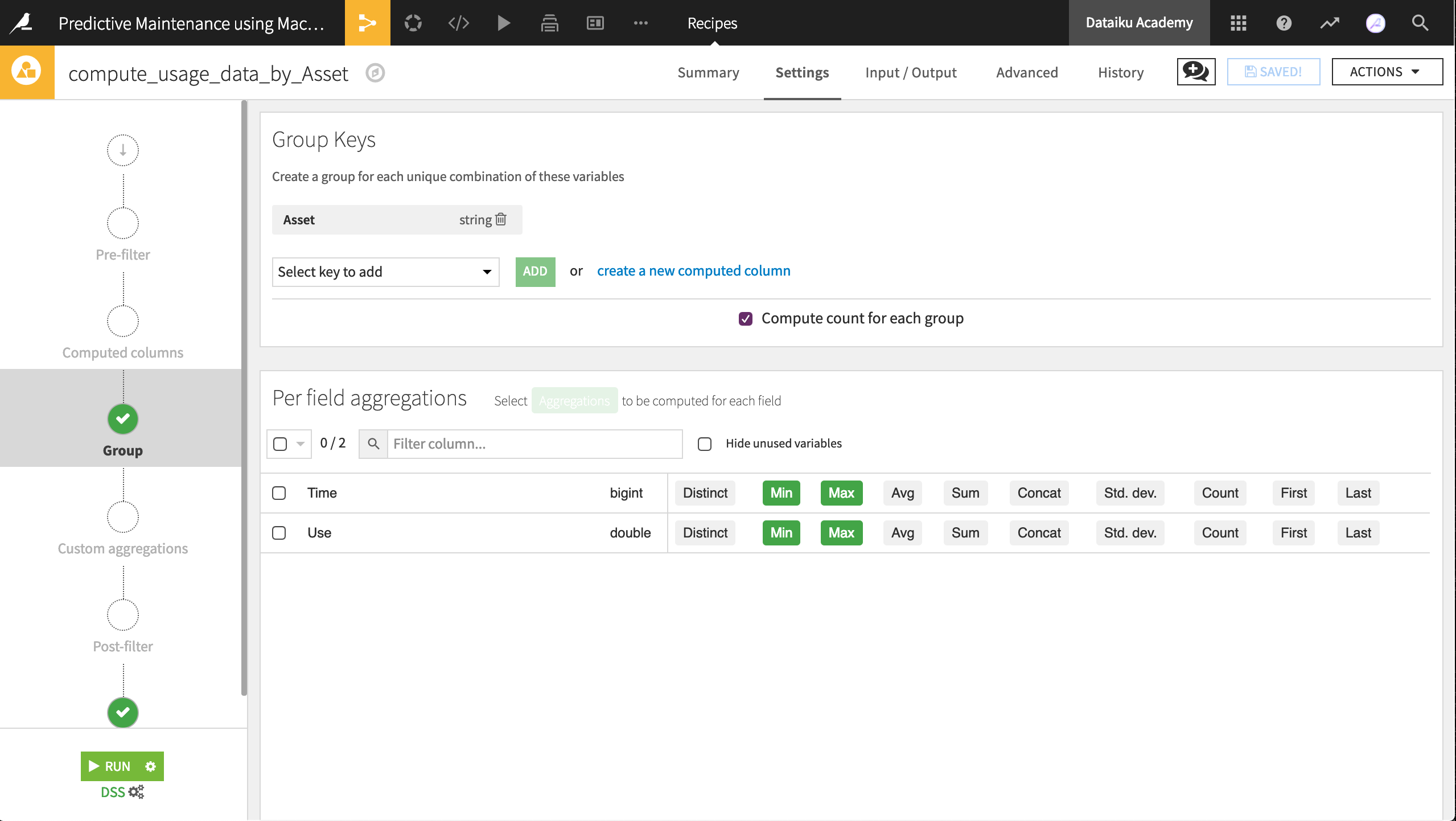

From the usage dataset, initiate a Group By recipe from the Actions menu.

Choose to Group By Asset in the dropdown menu.

Keep the default output dataset name, usage_by_Asset.

In the Group step, keep the count for each group (selected by default), and add the Min and Max for both Time and Use.

Run the recipe, updating the schema to six output columns.
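The Group By recipe is visual. For readers who want to check the logic, the same aggregation can be sketched in plain Python. The sketch assumes the rows have already been converted to numeric types, the equivalent of fixing the storage types first. The asset IDs, values, and aggregate key names are hypothetical and may differ from the column names DSS generates.

```python
from collections import defaultdict

# Hypothetical usage rows (Asset, Time, Use), already numeric.
rows = [
    ("A1", 100, 1200.5),
    ("A1", 130, 1750.0),
    ("A1", 160, 2100.0),
    ("A2", 110, 300.2),
]

# Collect the (Time, Use) readings for each asset.
groups = defaultdict(list)
for asset, time, use in rows:
    groups[asset].append((time, use))

# One output row per asset: the count plus Min and Max of Time and Use.
usage_by_asset = {}
for asset, readings in groups.items():
    times = [t for t, _ in readings]
    uses = [u for _, u in readings]
    usage_by_asset[asset] = {
        "count": len(readings),
        "Time_min": min(times),
        "Time_max": max(times),
        "Use_min": min(uses),
        "Use_max": max(uses),
    }

print(usage_by_asset["A1"])
# {'count': 3, 'Time_min': 100, 'Time_max': 160, 'Use_min': 1200.5, 'Use_max': 2100.0}
```

Each asset key plus its five aggregates corresponds to the six output columns of the recipe.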

Now aggregated to one row per rental car, the output dataset is fit for our purposes. Let’s see whether the maintenance dataset can be brought to the same level.