Tutorial | Build a text classification model#

Get started#

Consider a binary text classification problem: sentiment analysis. The question it asks is whether a movie review is positive or negative. [1]

The goal is to train a model on the movie reviews text that can predict the polarity of each review. This is a common task in natural language processing.

Objectives#

In this tutorial, you will:

Build a baseline binary classification model to predict text sentiment.

Use text cleaning and feature generation methods to improve the performance of a sentiment analysis model.

Experiment with building models using different text handling preprocessing methods.

Deploy a model from the Lab to the Flow and use the Evaluate recipe to test its performance against unseen data.

Prerequisites#

Dataiku 12.0 or later.

An Advanced Analytics Designer or Full Designer user profile.

Basic knowledge of visual ML in Dataiku (ML Practitioner level or equivalent).

Create the project#

From the Dataiku Design homepage, click + New Project.

Select Learning projects.

Search for and select NLP - The Visual Way.

If needed, change the folder into which the project will be installed, and click Create.

From the project homepage, click Go to Flow (or type g+f).

From the Dataiku Design homepage, click + New Project.

Select DSS tutorials.

Filter by ML Practitioner.

Select NLP - The Visual Way.

From the project homepage, click Go to Flow (or type g+f).

Note

You can also download the starter project from this website and import it as a ZIP file.

You’ll next want to build the Flow.

Click Flow Actions at the bottom right of the Flow.

Click Build all.

Keep the default settings and click Build.

Explore the data#

The Flow contains two data sources:

| Dataset | Description |
|---|---|
| IMDB_train | Training data to create the model. |
| IMDB_test | Testing data to estimate the model’s performance on data that it didn’t see during training. |

Let’s start by exploring the prepared training data.

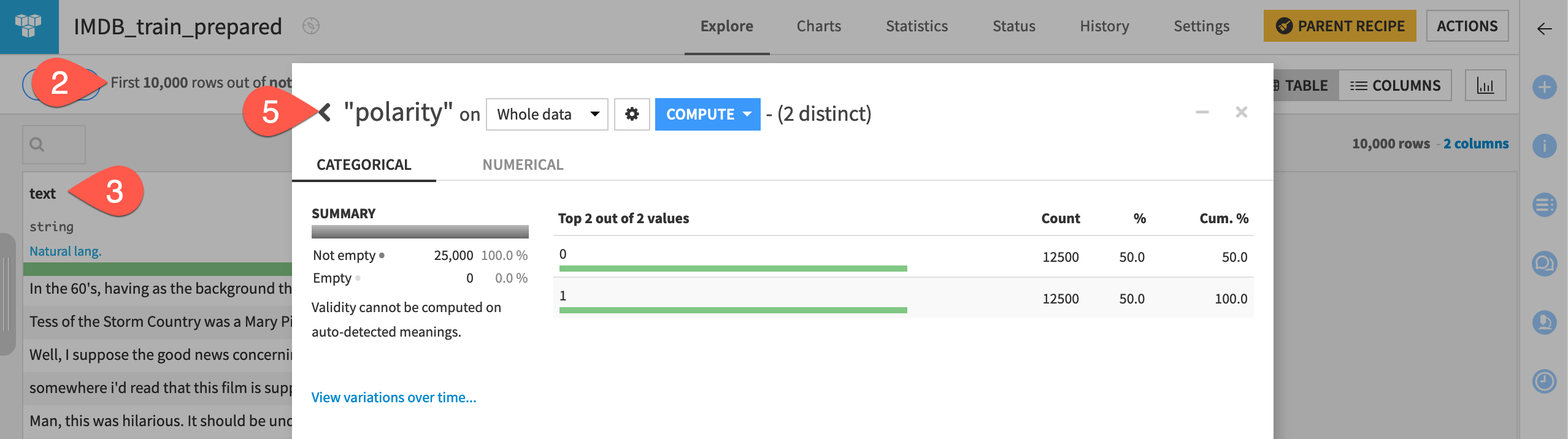

Open the IMDB_train_prepared dataset.

Near the top left, compute the number of records.

From the text column header dropdown, select Analyze. In the Categorical tab, notice that there is a small number of duplicates.

Switch to the Natural Language tab. Click Compute to find that the most frequent words include movie, film, and br (the HTML tag for a line break).

Use the arrow at the top left of the dialog to analyze the polarity column. Switch to Whole data, and Compute metrics for the column to find an equal number of positive and negative reviews. This is helpful to know before modeling.

When you’ve finished exploring IMDB_train_prepared, follow a similar process to explore IMDB_test.
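For readers who prefer to see the exploration as code, the following is a minimal sketch of the same two checks (most frequent words and class balance). It runs on a made-up four-row sample, not the actual IMDB_train_prepared dataset, and the tokenize helper is a hypothetical simplification rather than the method the Analyze tool uses.

```python
import re
from collections import Counter

# Hypothetical mini-sample standing in for IMDB_train_prepared.
# The column names text and polarity follow the tutorial.
reviews = [
    {"text": "A great movie.<br /><br />I loved this film.", "polarity": 1},
    {"text": "Terrible movie. The film drags on and on.", "polarity": 0},
    {"text": "Great cast, great film.", "polarity": 1},
    {"text": "Worst movie of the year.", "polarity": 0},
]


def tokenize(text):
    """Lowercase the text and split it into alphabetic tokens."""
    return re.findall(r"[a-z]+", text.lower())


# Word frequencies across all reviews. Note that the raw HTML tag
# <br /> contributes the token "br", as seen in the tutorial.
word_counts = Counter(
    token for row in reviews for token in tokenize(row["text"])
)

# Class balance of the target column.
class_counts = Counter(row["polarity"] for row in reviews)

print(word_counts.most_common(5))
print(class_counts)
```

Seeing br among the frequent tokens is a hint that the raw text still contains HTML residue worth cleaning before modeling.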

Train a baseline model#

With the training data ready, let’s build an initial model to predict the polarity of a movie review.

Create a quick prototype model#

Start with a default quick prototype.

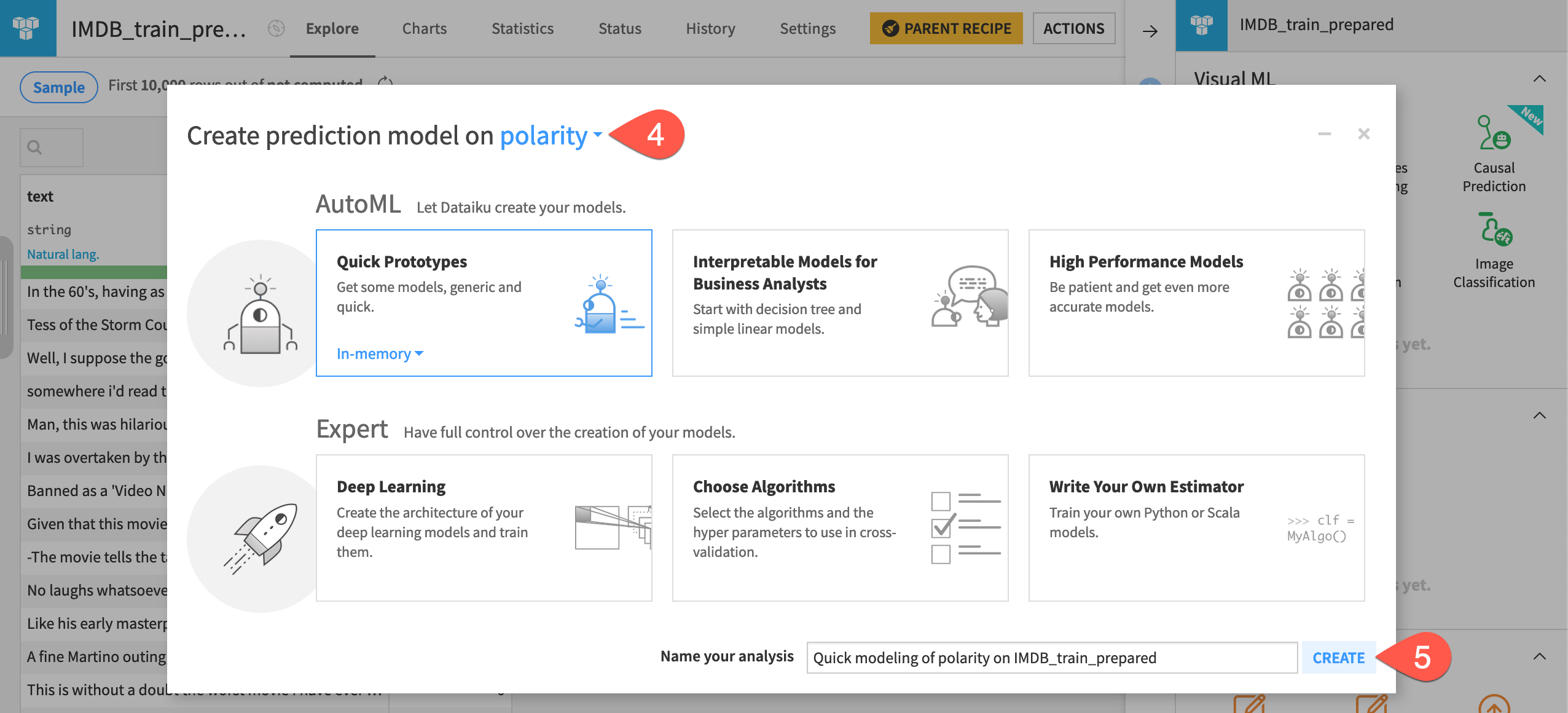

Select the IMDB_train_prepared dataset.

Open the Lab tab from the right panel.

Select AutoML Prediction.

Select polarity as the target variable for the prediction model.

Leave the default Quick Prototypes, and click Create, and then Train.

Include a text column in a model design#

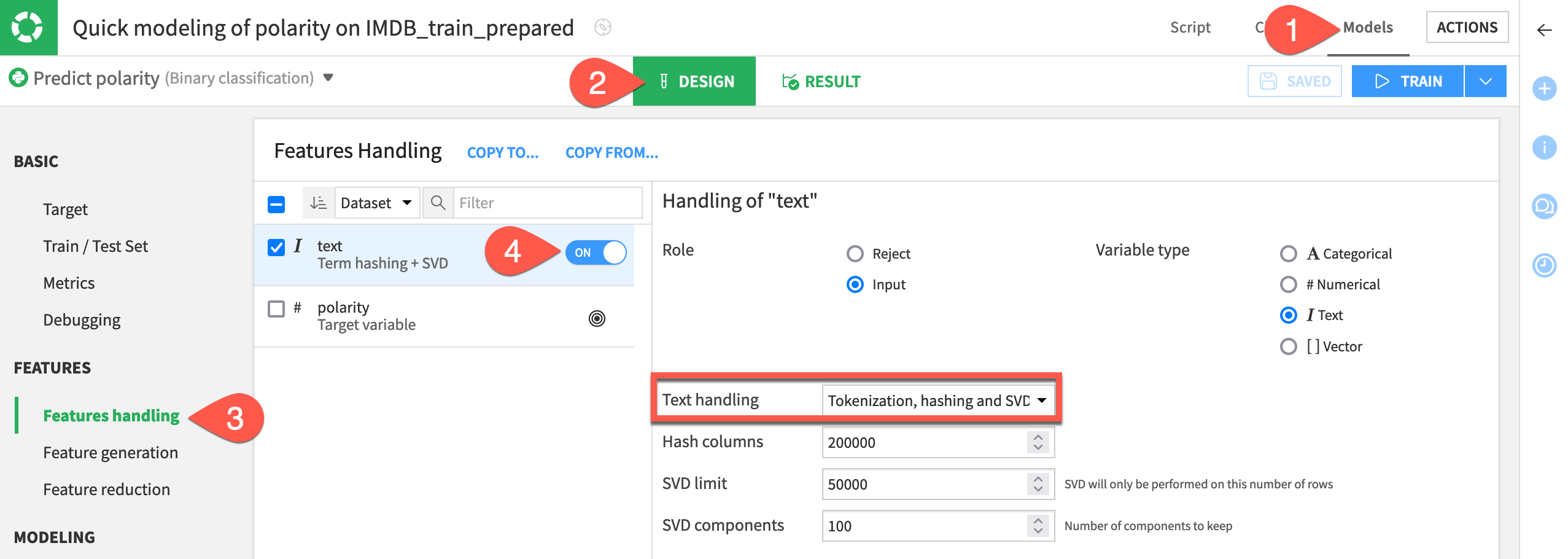

Dataiku, however, throws an error because no input feature is selected! Dataiku rejects text features by default. Accordingly, zero features were initially selected to train the model.

Let’s fix this.

Click Models at the top right.

Select the Design tab at the center.

Navigate to the Features handling panel on the left.

Toggle ON the text column as a feature in the model.

Important

The default text handling method is Tokenization, hashing, and SVD. You’ll experiment with others later. For now, let’s stick with the default option.
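To build some intuition for the hashing part of this default method, here is a rough sketch of the general "hashing trick" in plain Python. It is an illustration under assumptions, not Dataiku's implementation: the bucket count N_BUCKETS, the tokenize helper, and the choice of CRC32 as the hash are all hypothetical, and the SVD step that would further compress the hashed columns is left out.

```python
import re
import zlib

N_BUCKETS = 8  # hypothetical size; practical settings use far more buckets


def tokenize(text):
    """Lowercase the text and split it into alphabetic tokens."""
    return re.findall(r"[a-z]+", text.lower())


def hash_vector(text, n_buckets=N_BUCKETS):
    """Map each token to a bucket with a stable hash and count hits per bucket."""
    vector = [0] * n_buckets
    for token in tokenize(text):
        # CRC32 is deterministic across runs, unlike Python's built-in hash().
        bucket = zlib.crc32(token.encode("utf-8")) % n_buckets
        vector[bucket] += 1
    return vector


vec = hash_vector("A great movie, a great cast")
print(vec)
```

The vector length is fixed no matter how many distinct words appear, which keeps the feature space bounded. The price is that different words can collide in the same bucket, so individual columns no longer map to readable words.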

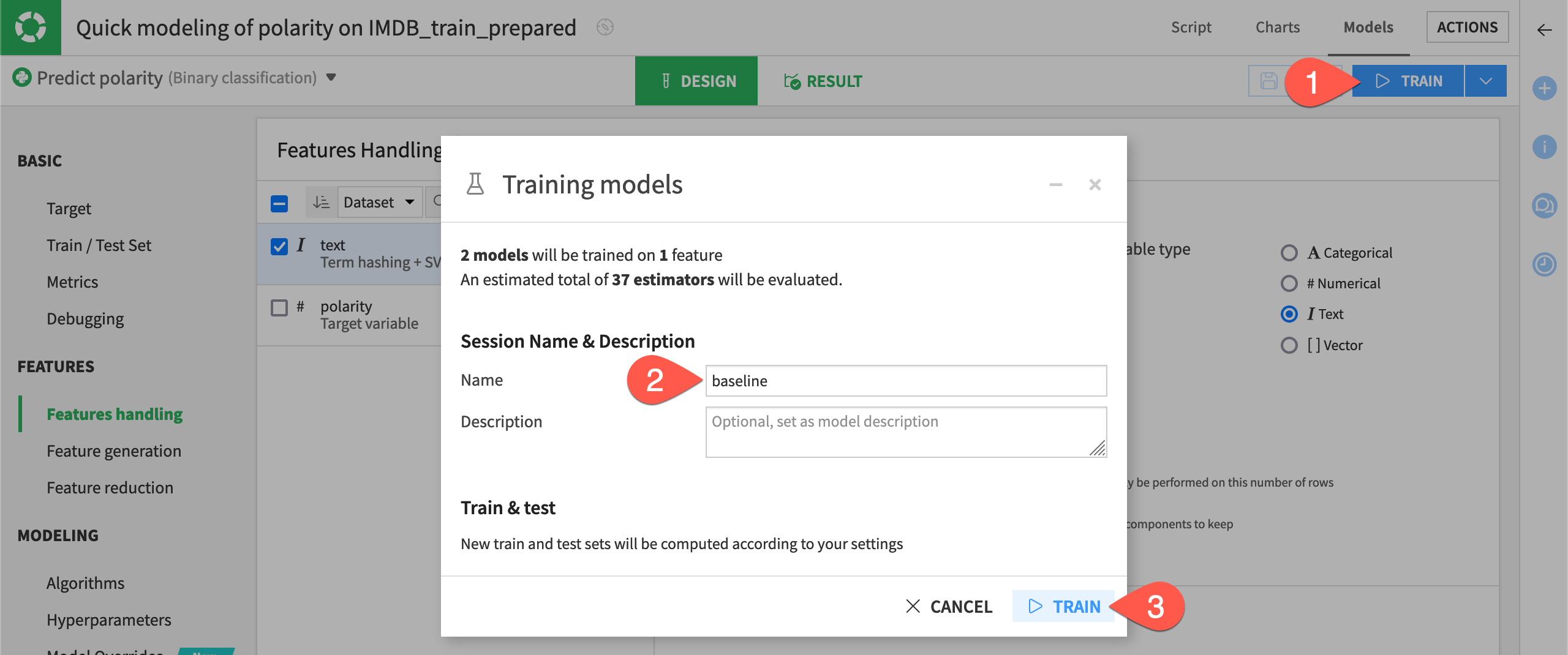

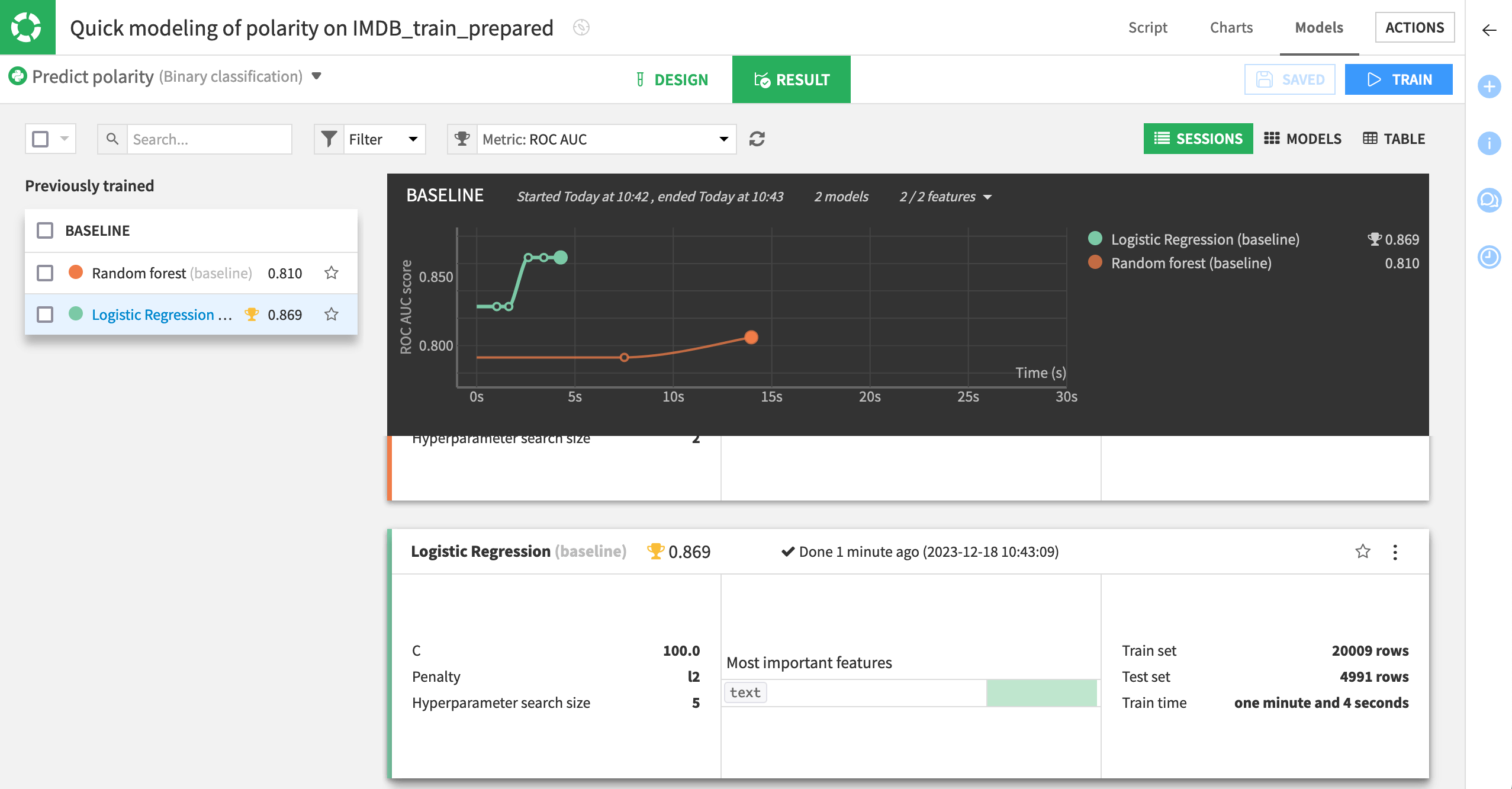

Train baseline models#

With the text column now included as a feature in the model design, let’s train a session of baseline models.

Click Train near the top right.

Give the session the name baseline.

Click Train.

For little effort, these results might not be too bad. From this starting point, you can improve the models with some simple data cleaning and feature engineering.

Clean text data#

Now that you have a baseline model to classify movie reviews into positive and negative buckets, let’s try to improve performance with some simple text cleaning steps.

Note

If the concepts here are unfamiliar to you, see Concept | Cleaning text data.

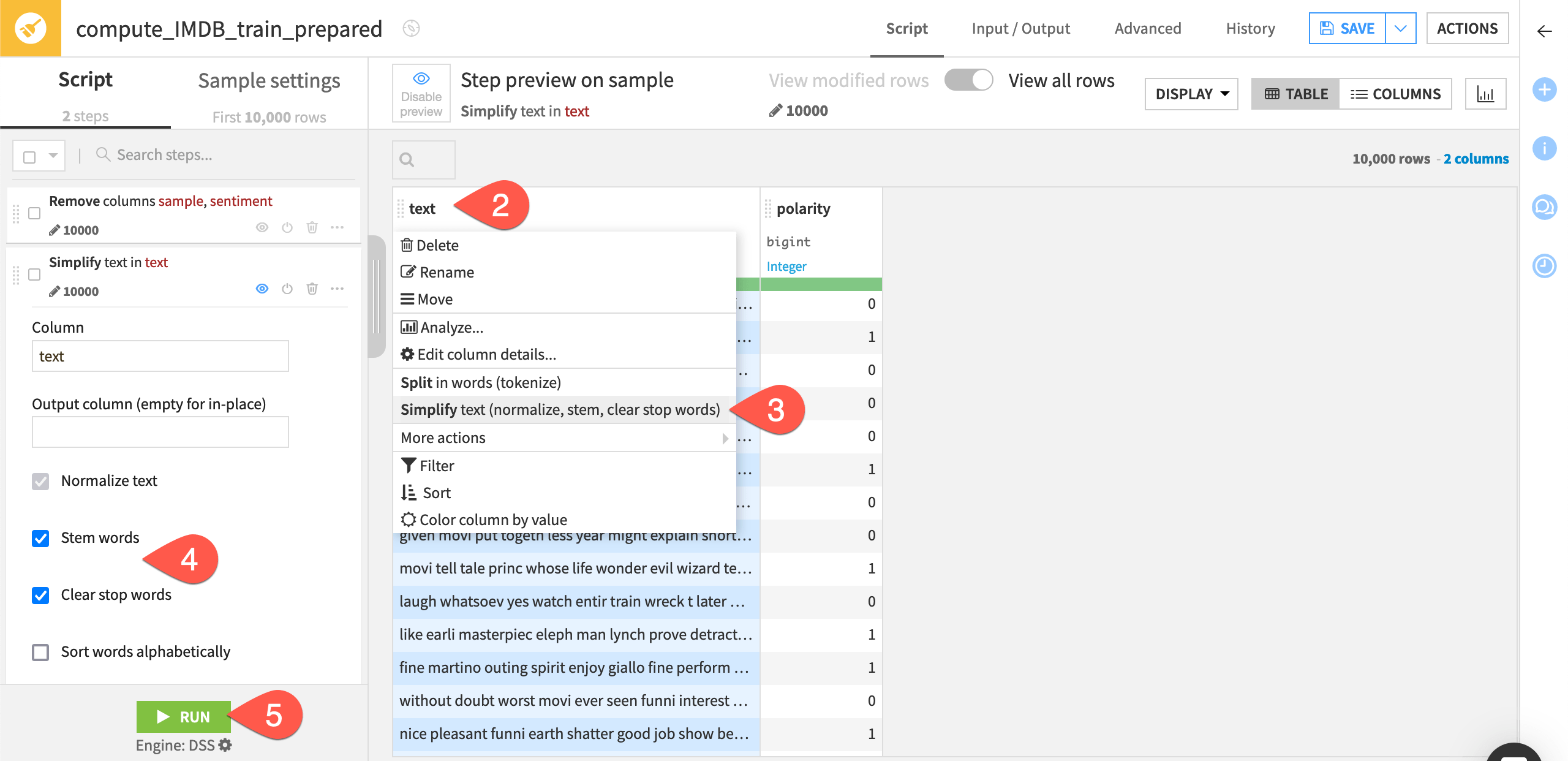

Simplify text#

The processor library includes a variety of steps for working with natural language data.

Let’s try out one of the most frequently used steps.

Return to the Flow, and open the Prepare recipe that produces the IMDB_train_prepared dataset.

Open the dropdown for the text column.

Select Simplify text.

Select the options to Stem words and Clear stop words.

Click Run, and open the output dataset.

Important

Dataiku suggested the Simplify text processor because it autodetected the meaning of the text column to be natural language.
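The effect of stemming and stop word removal can be sketched in a few lines of Python. This is only an approximation of the idea: the STOP_WORDS set and the naive_stem suffix stripper are hypothetical stand-ins, and the Simplify text processor uses its own word lists and stemming rules.

```python
import re

# Tiny illustrative stop word list (an assumption, not Dataiku's list).
STOP_WORDS = {"a", "an", "the", "is", "was", "this", "of", "and", "it", "i"}

# Naive suffix stripping as a stand-in for a real stemmer.
SUFFIXES = ("ing", "ed", "es", "s")


def naive_stem(token):
    """Strip the first matching suffix if at least three characters remain."""
    for suffix in SUFFIXES:
        if token.endswith(suffix) and len(token) - len(suffix) >= 3:
            return token[: -len(suffix)]
    return token


def simplify(text):
    """Drop HTML tags, lowercase, remove stop words, and stem what is left."""
    text = re.sub(r"<[^>]+>", " ", text)  # removes tags such as <br />
    tokens = re.findall(r"[a-z]+", text.lower())
    return " ".join(naive_stem(t) for t in tokens if t not in STOP_WORDS)


print(simplify("This movie was amazing.<br />I loved the actors!"))
```

Stems are often not dictionary words. That is expected, because the goal is to collapse variants of a word into a single feature rather than to produce readable text.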

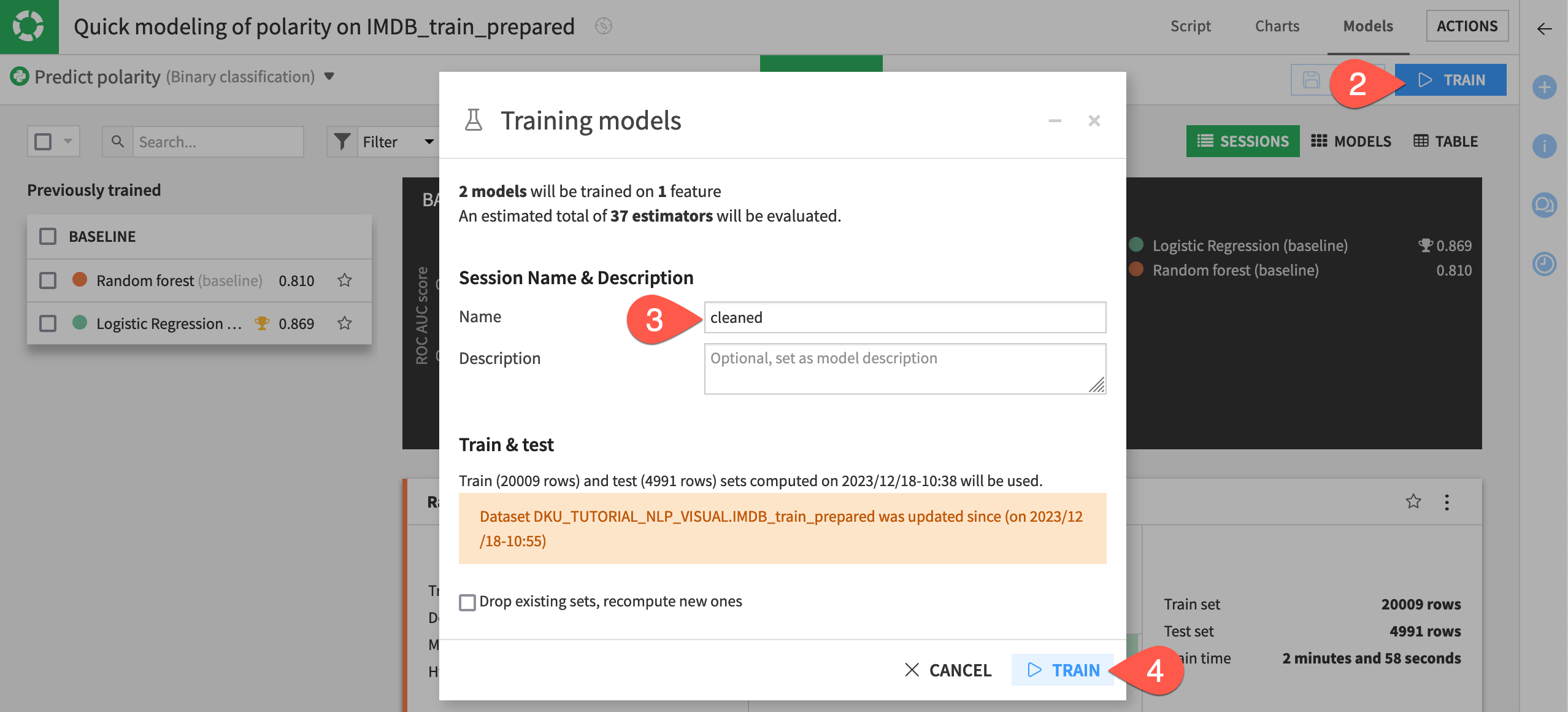

Train a new session of models#

With a simplified text column, let’s train a new session of models.

Return to the previous modeling task. You can find it in the Lab tab of the right panel of the output dataset or the Visual ML (Analysis) page (g+a).

Click Train.

Name the session cleaned.

Important

In the training dialog, Dataiku warns that the training data has changed since the previous session and that, by default, it will reuse the train and test sets computed earlier. The computation date shows which sets those are. To train on the updated data instead, recompute the sets.

Check the Drop existing sets, recompute new ones box.

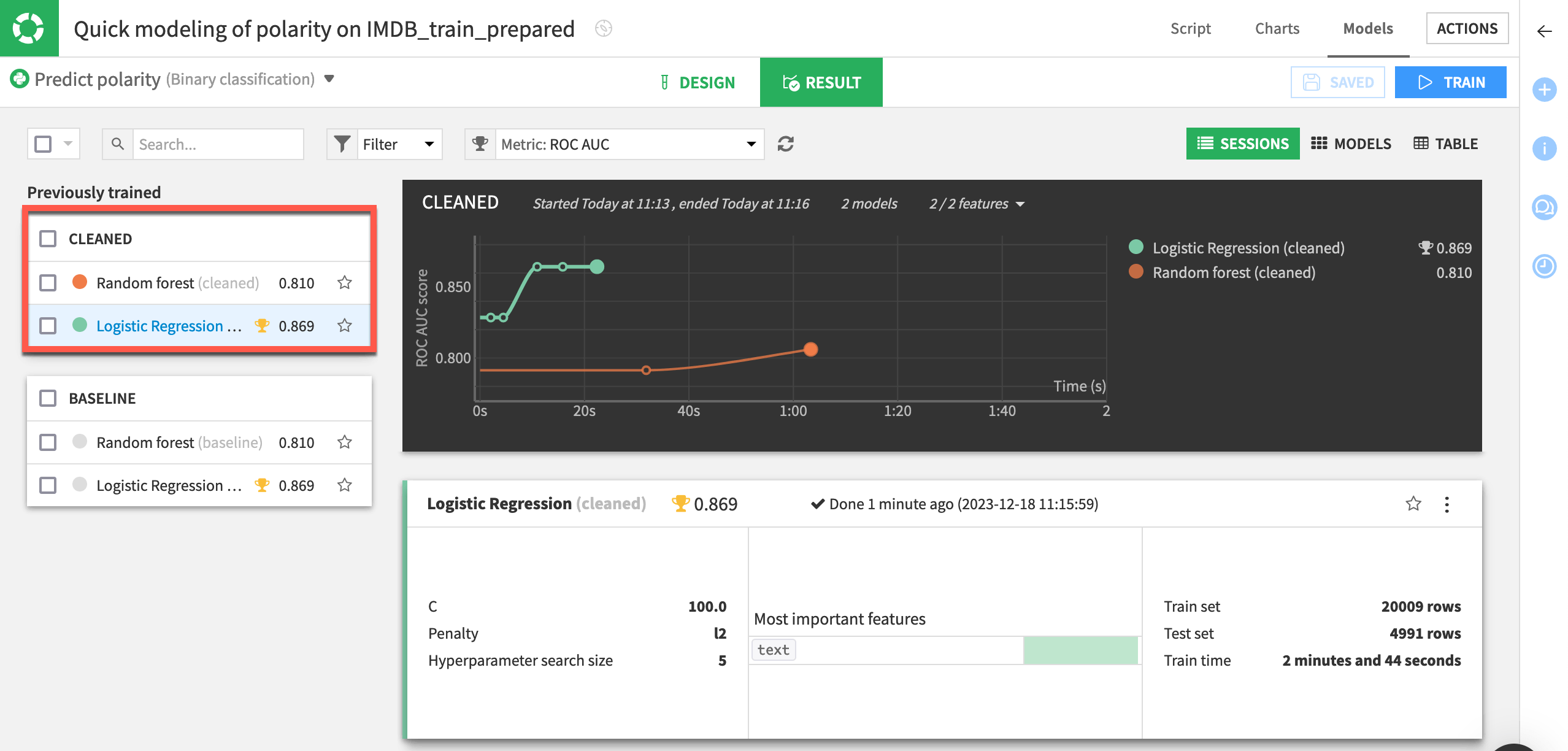

Click Train again.

In this case, cleaning the text led to meaningful improvements over the baseline. It also significantly reduced the feature space without any loss in performance.

Formulas for feature generation#

Next, let’s try some feature engineering.

Return to the Prepare recipe.

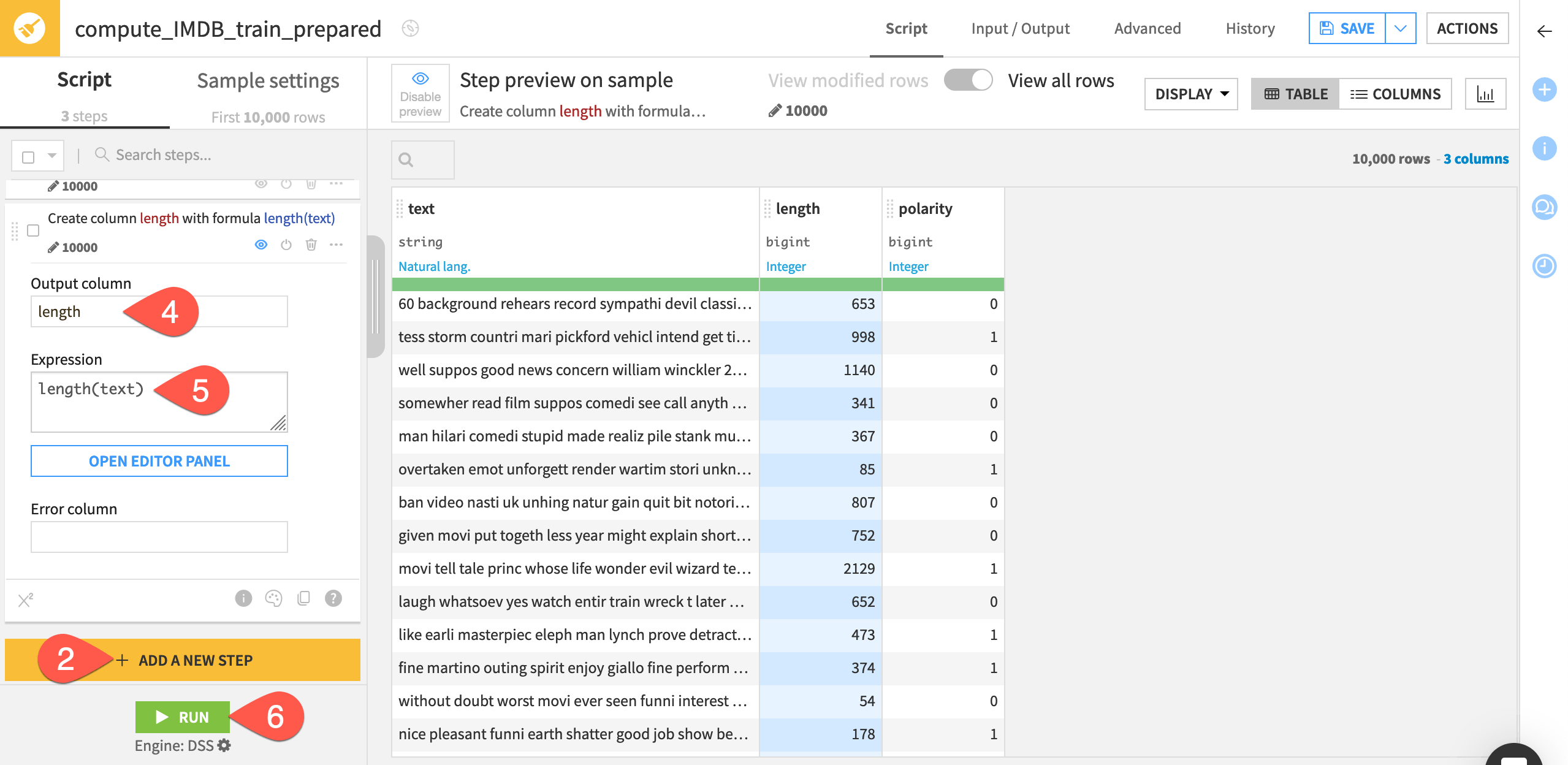

Click + Add a New Step at the bottom left.

Search for and select Formula.

In the Formula editor, enter length as Formula for.

Copy-paste the expression length(text) to calculate the length in characters of the movie review.

Click Apply.

Click Run, and open the output dataset again.

Tip

You can use the Analyze tool on the new length column to see its distribution from the Prepare recipe. You could also use the Charts tab in the output dataset to examine if there is a relationship between the length of a review and its polarity.
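As a rough Python equivalent of the length(text) formula and the suggested chart, the sketch below adds a length column and compares its mean across the two classes. The four rows are invented for illustration, and mean_length is a hypothetical helper.

```python
from statistics import mean

# Invented sample rows; the column names follow the tutorial.
rows = [
    {"text": "great film", "polarity": 1},
    {"text": "loved every minute", "polarity": 1},
    {"text": "bad", "polarity": 0},
    {"text": "dull plot", "polarity": 0},
]

# Counterpart of the Formula step length(text): character count per review.
for row in rows:
    row["length"] = len(row["text"])


def mean_length(polarity):
    """Average review length for one class of the target."""
    return mean(r["length"] for r in rows if r["polarity"] == polarity)


print("positive:", mean_length(1))
print("negative:", mean_length(0))
```

A clear gap between the two averages would suggest that length carries some signal. On real data, the full distributions are more informative than the means alone.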

Train more models#

Once again, let’s train a new session of models.

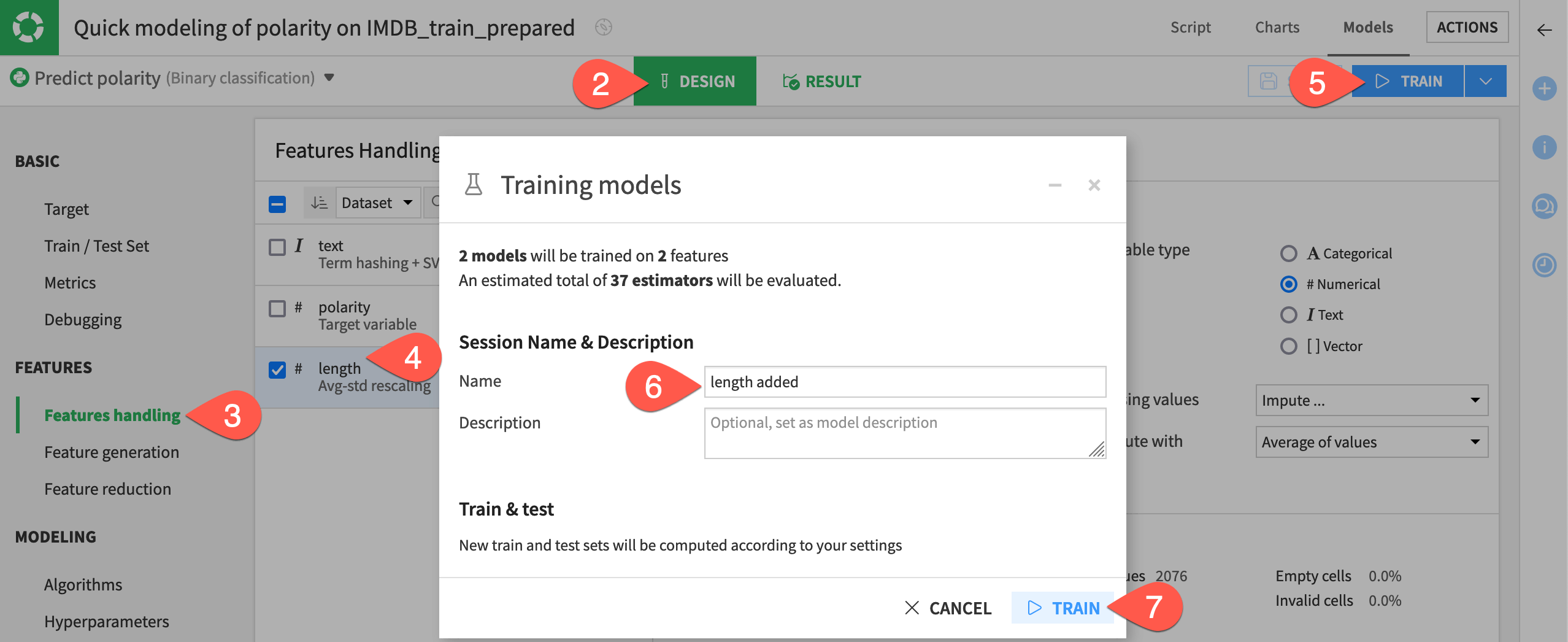

Return to the previous modeling task.

Before training, navigate to the Design tab.

Select the Features handling panel on the left.

Confirm length is included as a feature.

Click Train.

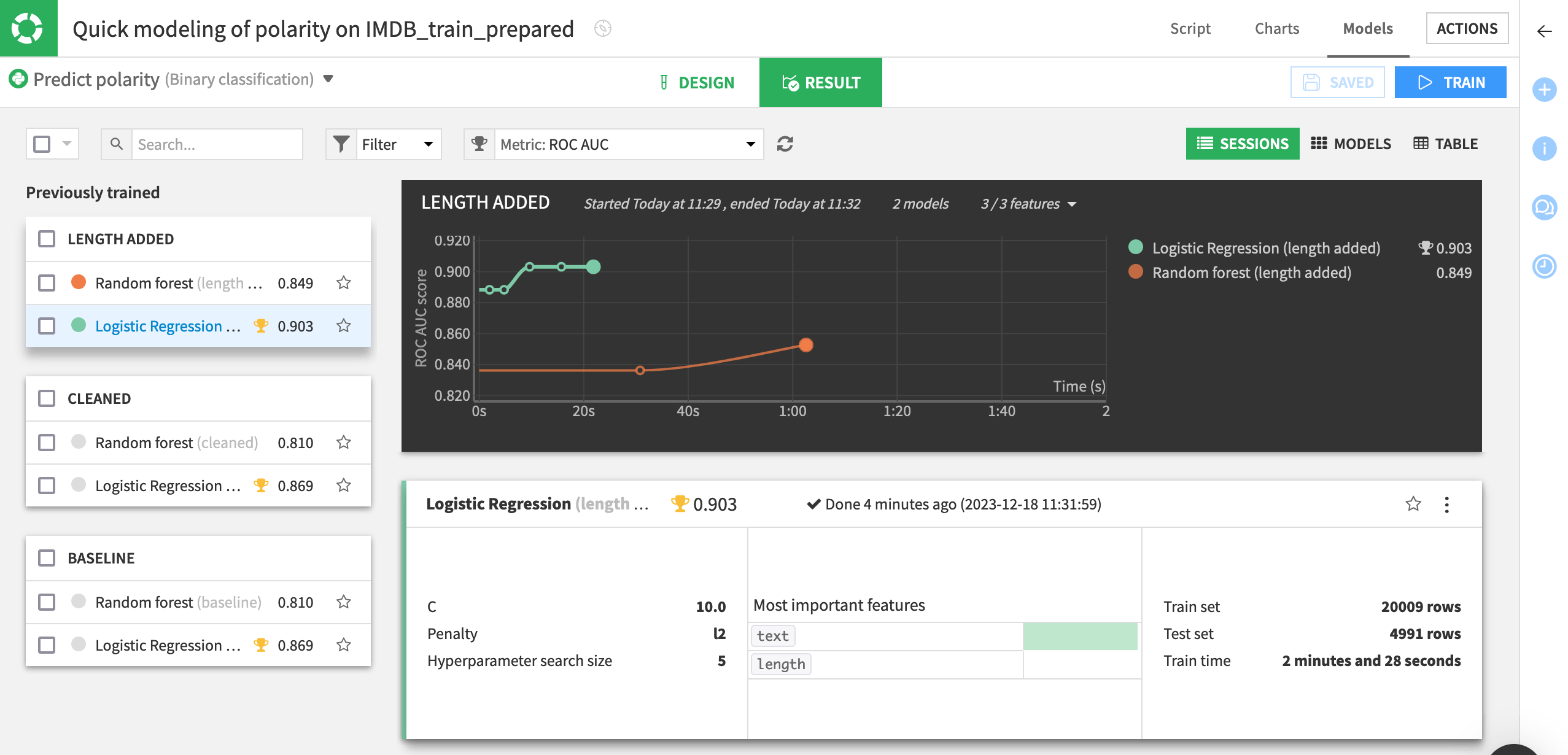

Name the session length added.

Click Train again.

This iteration doesn’t show an improvement in both models. However, the results already look reasonable for such a simple feature engineering step.

Aside from the specific results witnessed here, the larger takeaway is the value that text cleaning and feature engineering can bring to any NLP modeling task.

Tip

Feel free to experiment by adding new features on your own. For example, you might use:

A formula to calculate the ratio of the string length of the raw and simplified text column.

The Extract numbers processor to identify which reviews have numbers.

The Count occurrences processor to count the number of times some indicative word appears in a review.
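The three ideas above can be prototyped in Python before building them as recipe steps. The function names here are hypothetical, and the direction of the ratio (simplified length divided by raw length) is an assumption, since either direction would work as a feature.

```python
import re


def length_ratio(raw_text, simplified_text):
    """Simplified length divided by raw length (0.0 if the raw text is empty)."""
    return len(simplified_text) / len(raw_text) if raw_text else 0.0


def has_number(text):
    """True if the review contains at least one digit."""
    return re.search(r"\d", text) is not None


def count_occurrences(text, word):
    """Count whole-word, case-insensitive occurrences of word in text."""
    pattern = rf"\b{re.escape(word.lower())}\b"
    return len(re.findall(pattern, text.lower()))


review = "Worst plot, worst acting. 2/10."
print(length_ratio(review, "worst plot worst act"))
print(has_number(review))
print(count_occurrences(review, "worst"))
```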

Pre-process text features for machine learning#

You’ve now applied simple text cleaning operations and feature engineering to improve a model that classifies positive and negative movie reviews.

However, these aren’t the only tools at your disposal. Dataiku also offers many preprocessing methods within the model design.

Let’s experiment with different text handling methods before evaluating the performance of a chosen model against a test dataset.

Note

If concepts here are unfamiliar to you, see Concept | Handling text features for machine learning.

Count vectorization text handling#

Let’s apply count vectorization to the text feature.

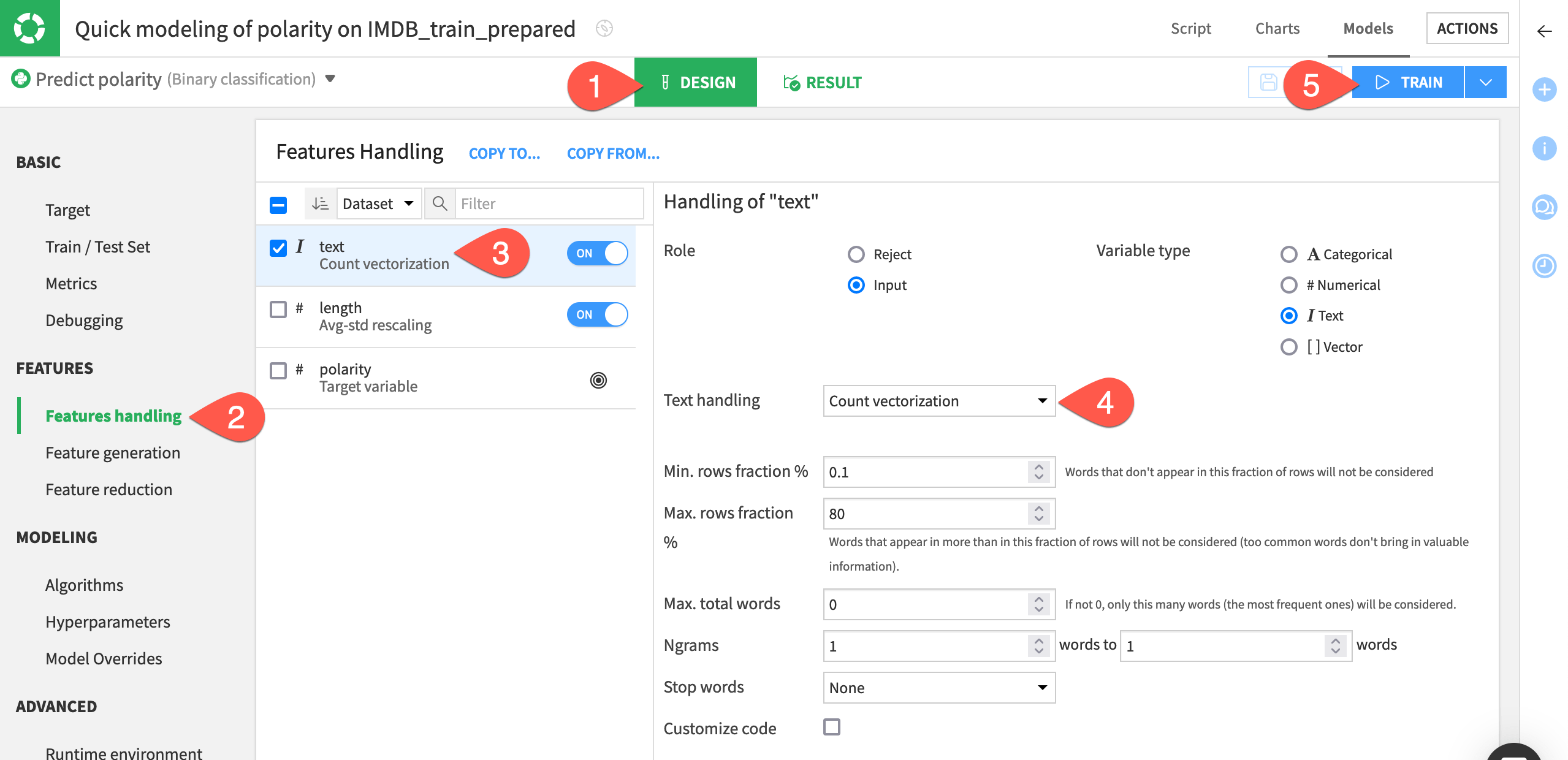

From the modeling task, navigate to the Design tab.

Select the Features handling pane.

Select the text feature.

Change the text handling setting to Count vectorization.

Click Train.

Name the session count vec.

Read the warning message about unsupported sparse features, and then check the box to ignore it.

Click Train.

When the fourth session finishes training, you can see that the count vectorization models have a performance edge over their predecessors.
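For reference, count vectorization itself is simple to sketch: build a vocabulary from the corpus, then represent each document by how often each vocabulary term occurs. The snippet below is a minimal illustration on two invented documents, with a hypothetical tokenize helper, and isn't meant to reflect Dataiku's internal implementation.

```python
import re
from collections import Counter

docs = ["great film great cast", "worst film"]


def tokenize(text):
    """Lowercase the text and split it into alphabetic tokens."""
    return re.findall(r"[a-z]+", text.lower())


# One column per distinct term seen in the corpus, in a fixed order.
vocabulary = sorted({token for doc in docs for token in tokenize(doc)})


def count_vector(text):
    """Count how many times each vocabulary term appears in the text."""
    counts = Counter(tokenize(text))
    return [counts[term] for term in vocabulary]


matrix = [count_vector(doc) for doc in docs]

print(vocabulary)
for row in matrix:
    print(row)
```

Each column corresponds to one readable term, and most entries are zero on a real corpus. That is why the result is usually stored as a sparse matrix.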

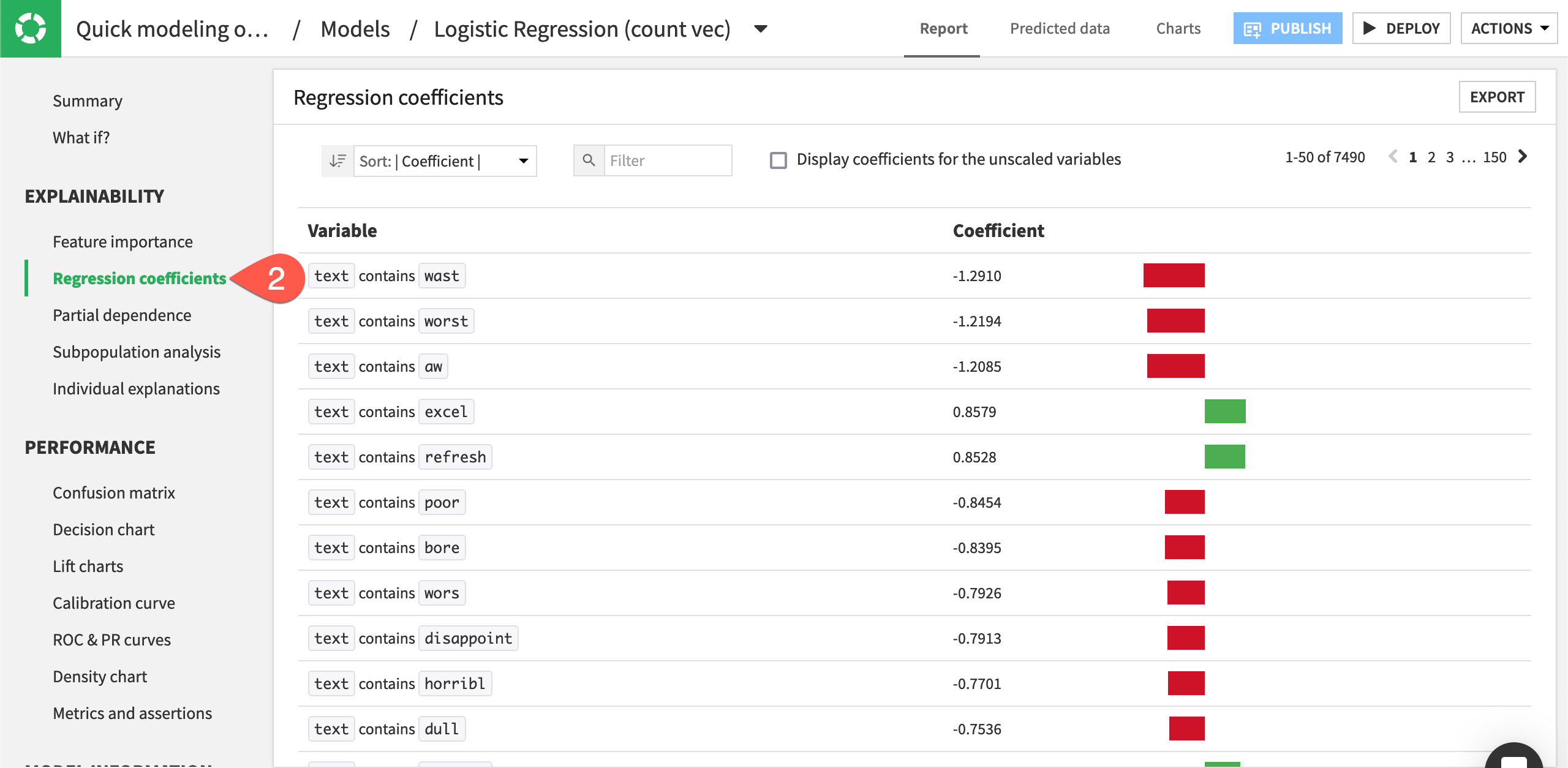

In addition to the performance boost, they also have an edge in interpretability. In the logistic regression model, for example, you can see features such as whether the text column contains a word like worst or excel.

On the left, select the Logistic Regression model from the count vec session.

Navigate to the Regression coefficients panel on the left.
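To make the interpretability point concrete, the sketch below shows how a logistic regression turns word-count features and their coefficients into a probability. The coefficient values and the zero intercept are hypothetical, chosen only for illustration. They aren't taken from the tutorial's model, and any feature rescaling applied during training is ignored.

```python
import math

# Hypothetical coefficients, NOT values from the tutorial's trained model.
coefficients = {"worst": -2.0, "excel": 1.5}
intercept = 0.0


def predict_proba(term_counts):
    """Probability of the positive class given term counts for one review."""
    z = intercept + sum(
        coefficients.get(term, 0.0) * count
        for term, count in term_counts.items()
    )
    return 1.0 / (1.0 + math.exp(-z))


print(predict_proba({"worst": 1}))
print(predict_proba({"excel": 1}))
print(predict_proba({"worst": 1, "excel": 1}))
```

A negative coefficient pulls the prediction toward the negative class each time the word appears, and a positive one pushes it the other way. This is what makes the coefficients panel readable when each feature is a word.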

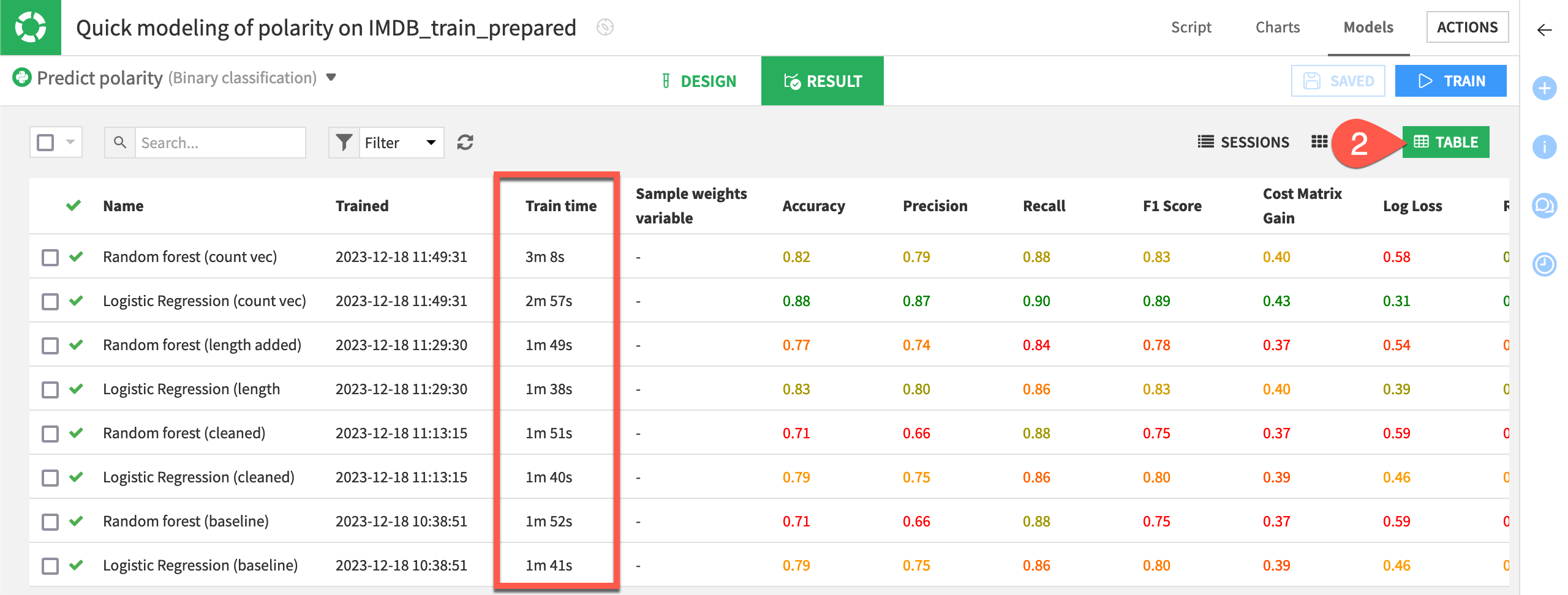

These benefits, however, didn’t come entirely for free. You can expect the training time of the count vectorization models to be longer than that of the tokenization, hashing, and SVD models.

For this small dataset, the difference may be relatively small. For a larger dataset, the increase in training time could be a critical tradeoff.

To see for yourself:

Click Models near the top left to return to the model training results.

Click the Table view on the right to compare training times.

Tip

On your own, try training more models with different settings in the feature handling pane. For example:

Switch the text handling method to TF/IDF vectorization.

Observe the effects of increasing or decreasing the Min. rows fraction % or Max. rows fraction %.

Include bigrams in the n-grams setting by increasing the upper limit to 2 words.
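The settings in this tip can be sketched in plain Python to show how they interact. The example below generates unigrams and bigrams, filters terms by the fraction of documents that contain them, and then applies one common, unsmoothed TF-IDF weighting. The thresholds, the four documents, and the exact weighting formula are assumptions for illustration; Dataiku's vectorizer may weight and normalize differently.

```python
import math
import re
from collections import Counter

docs = ["great film", "great cast", "worst film", "great great fun"]


def ngrams(text, max_n=2):
    """Return all n-grams of the text from 1 word up to max_n words."""
    tokens = re.findall(r"[a-z]+", text.lower())
    terms = []
    for n in range(1, max_n + 1):
        terms += [" ".join(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]
    return terms


doc_terms = [ngrams(doc) for doc in docs]

# Document frequency: in how many documents does each term appear?
df = Counter(term for terms in doc_terms for term in set(terms))


def keep(term, min_frac=0.5, max_frac=1.0):
    """Keep a term only if its document fraction lies within the bounds."""
    return min_frac <= df[term] / len(docs) <= max_frac


vocabulary = sorted(term for term in df if keep(term))


def tfidf(terms):
    """Unsmoothed TF-IDF: term count times log(N / document frequency)."""
    tf = Counter(terms)
    return {t: tf[t] * math.log(len(docs) / df[t]) for t in vocabulary if tf[t]}


print(vocabulary)
print(tfidf(doc_terms[3]))
```

Raising the minimum fraction removes rare terms, bigrams included, which shrinks the feature space. Lowering the maximum fraction removes terms so common that they barely discriminate between classes.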

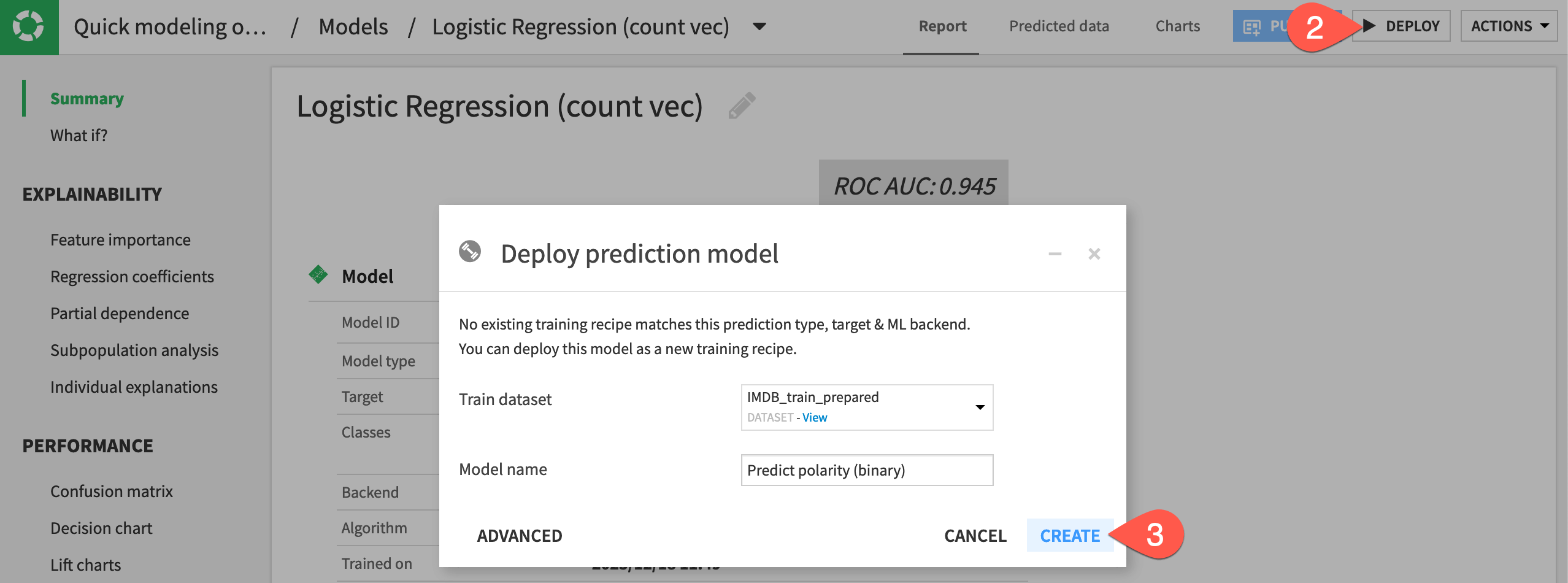

Deploy a model from the Lab to Flow#

When you have sufficiently explored building models, the next step is to deploy one from the Lab to the Flow.

A number of factors — such as performance, interpretability, and scalability — could influence the decision of which model to deploy. Here, just choose the best performing model.

From the Result tab of the modeling task, select the logistic regression model from the count vec session.

Click Deploy near the upper right corner.

Click Create.

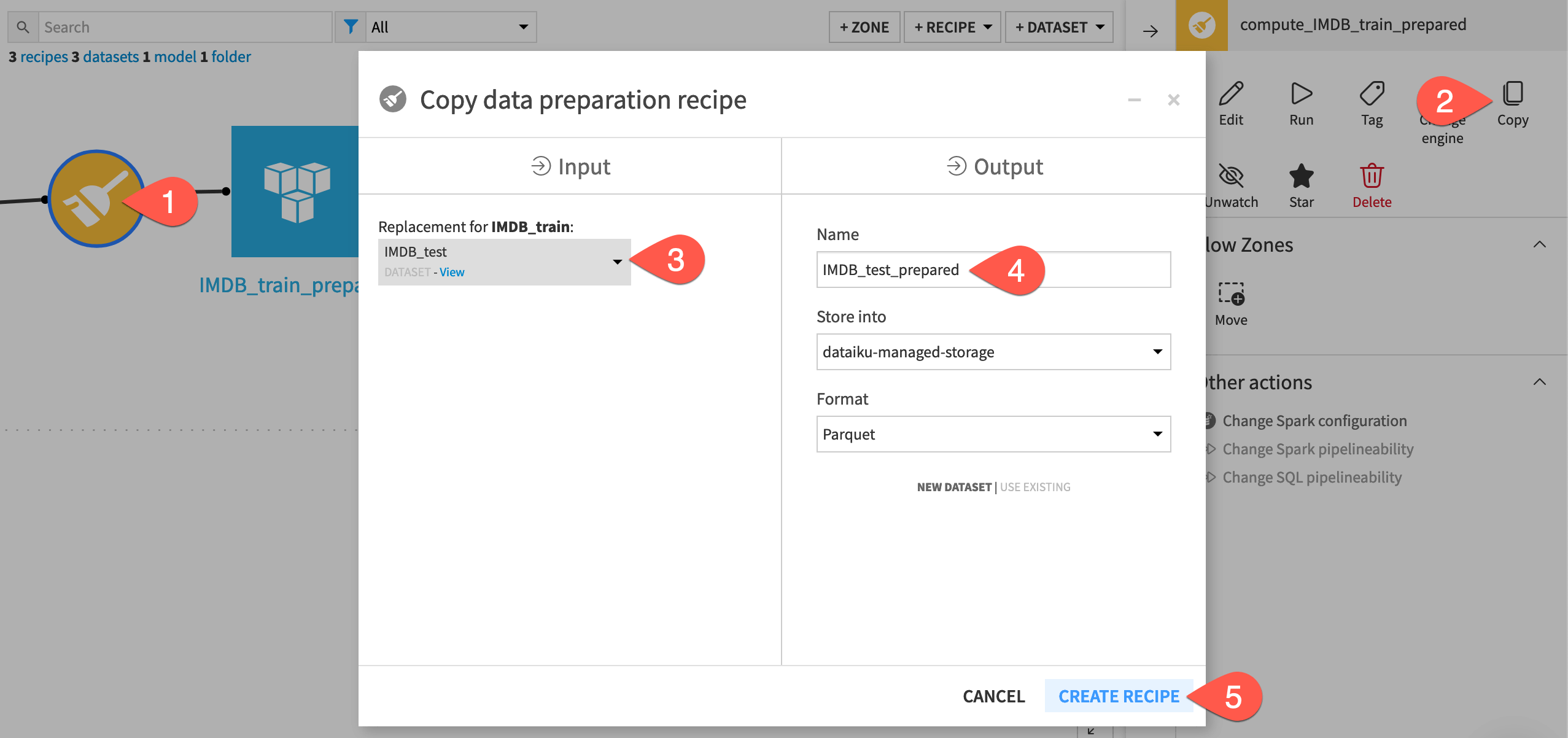

Copy data preparation steps#

With a model now in the Flow, the relevant question is whether this model will perform as well on data that it has never faced before. A steep drop in performance could be a symptom of overfitting the training data.

The Evaluate recipe can help answer this question. But first, we need to ensure that the test data passes through the same preparation steps that the training data received.

We’ll start by copying the existing Prepare recipe.

Select the Prepare recipe in the Flow.

In the Actions tab of the right panel, click Copy.

On the left, change the input dataset to IMDB_test.

Name the output dataset IMDB_test_prepared.

Click Create Recipe.

In the recipe’s first step, delete the non-existent sample column so that the step only removes the sentiment column.

Click Run.

Evaluate the model#

Once the test data has received the same preparation treatment, you’re ready to test how the model will do on this new dataset.

Let’s take the first step toward this goal.

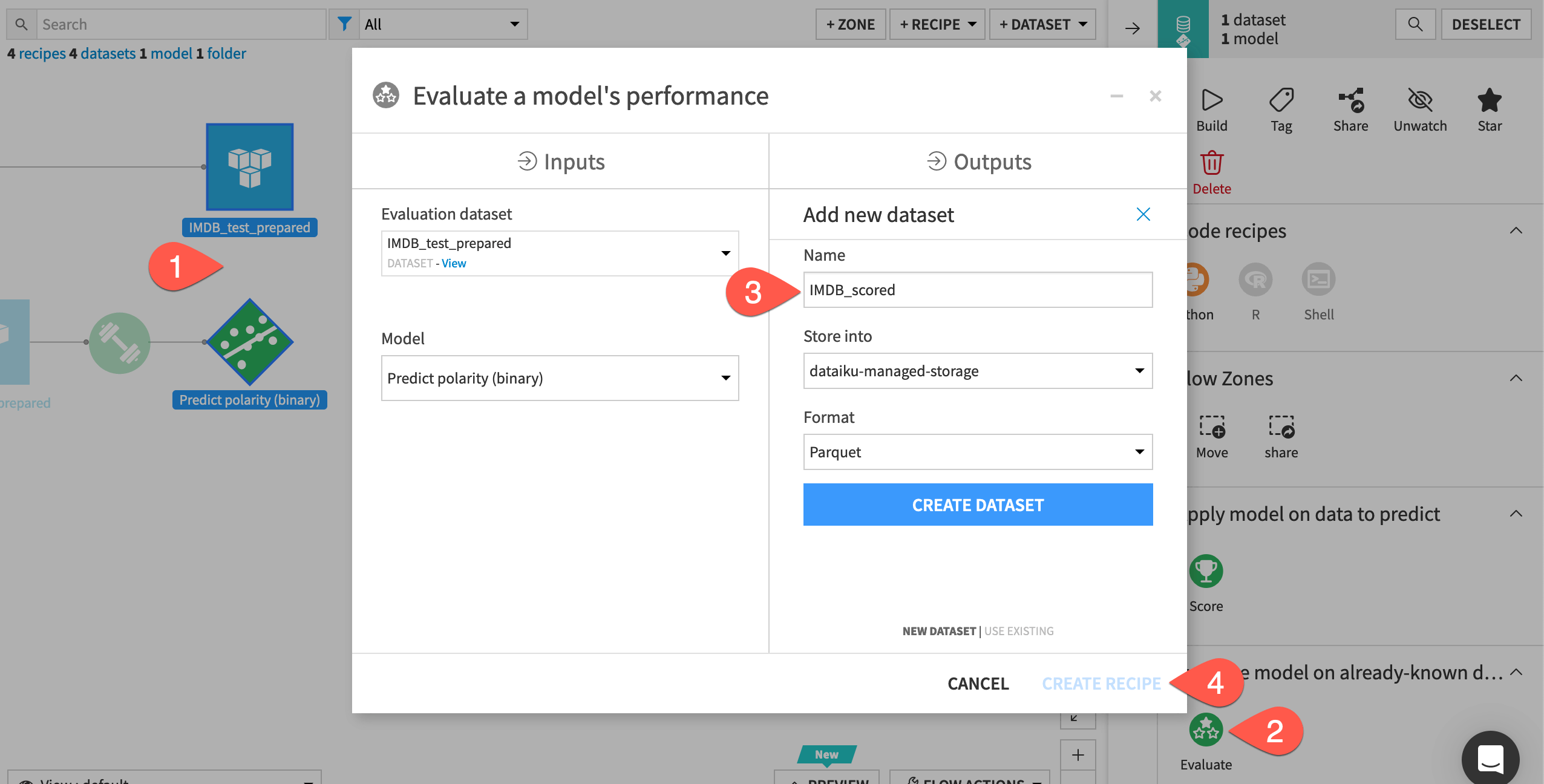

From the Flow, select the deployed model Predict polarity (binary) and the IMDB_test_prepared dataset.

Select the Evaluate recipe from the Actions tab.

Click Set for the output dataset. Name it IMDB_scored, and click Create Dataset.

Click Create Recipe.

Click Run on the Settings tab of the recipe, and then open the output dataset.

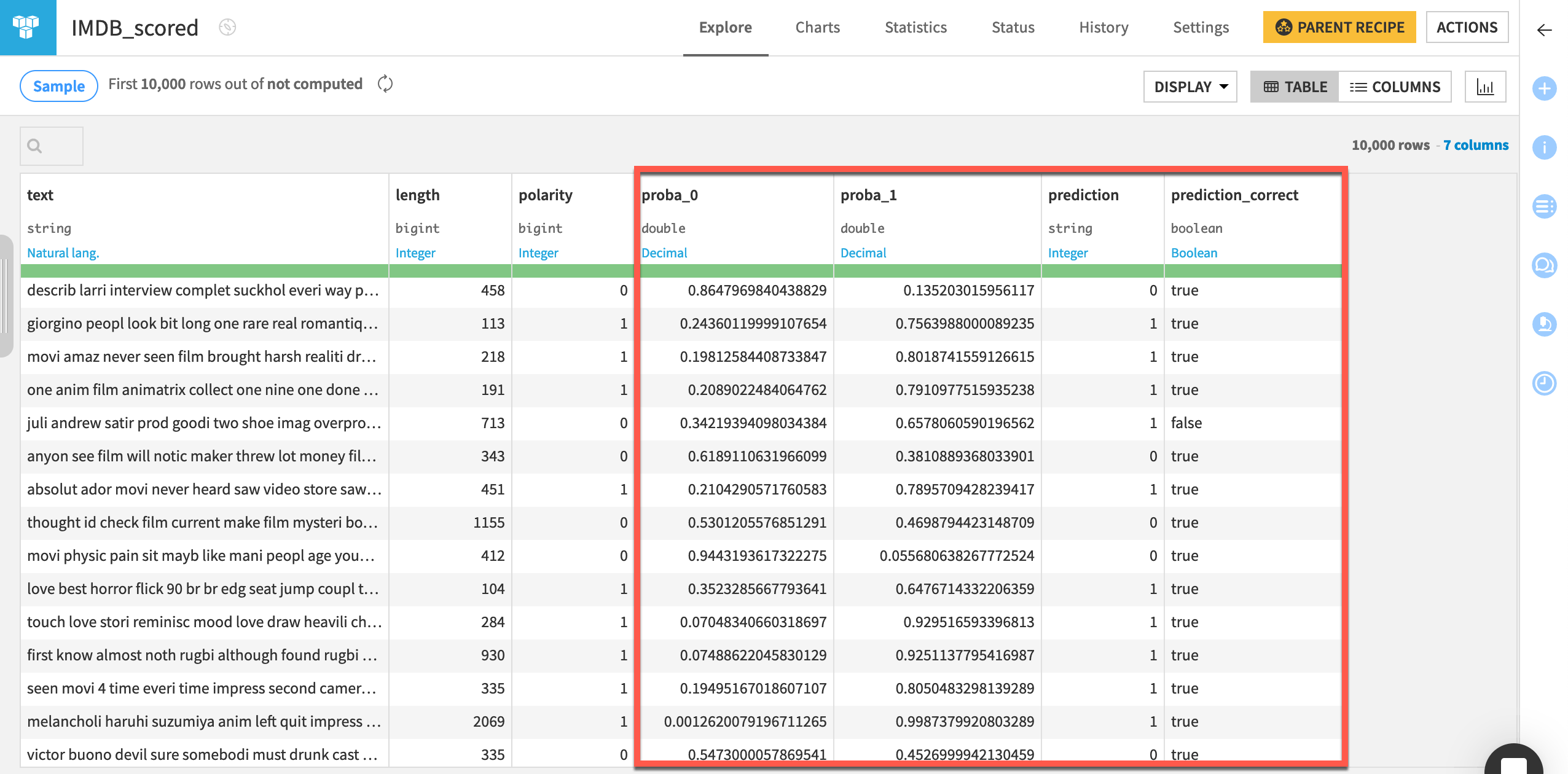

The Evaluate recipe has appended class probabilities and predictions to the IMDB_scored dataset. As you update the active version of the model, you could keep running the Evaluate recipe to check the performance against this test dataset.

Overall, it appears that the model’s performance on the test data was similar to the performance on the training data. One way you could confirm this is by using the Analyze tool on the prediction and prediction_correct columns.
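The same check can be expressed in a few lines of Python once the scored data is exported. The sketch below uses four invented rows in place of IMDB_scored. The polarity, prediction, and prediction_correct column names follow the tutorial, while the values are made up.

```python
from collections import Counter

# Invented rows standing in for the IMDB_scored dataset.
scored = [
    {"polarity": 1, "prediction": 1},
    {"polarity": 0, "prediction": 0},
    {"polarity": 1, "prediction": 0},
    {"polarity": 0, "prediction": 0},
]

# Recreate the prediction_correct flag: did the prediction match the target?
for row in scored:
    row["prediction_correct"] = row["prediction"] == row["polarity"]

# Accuracy is the share of rows where the flag is true.
accuracy = sum(row["prediction_correct"] for row in scored) / len(scored)

# Confusion counts keyed by (actual, predicted).
confusion = Counter((row["polarity"], row["prediction"]) for row in scored)

print("accuracy:", accuracy)
print("confusion:", dict(confusion))
```

Comparing this accuracy with the value reported for the training session gives a quick read on whether the model generalizes or has overfit.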

Note

The Evaluate recipe includes options for two other types of output.

One is a dataset of metric logs comparing the performance of the model’s active version against the input dataset. It’s particularly useful for exporting to perform analysis elsewhere, such as in a webapp.

The second is a model evaluation store, a key tool for evaluating model performance in Dataiku, but outside the scope covered here.

Next steps#

Congratulations! You have successfully built a model to perform a binary classification of the text sentiment. Along the way, you:

Witnessed the importance of text cleaning and feature engineering.

Explored the tradeoffs between different text handling strategies.

Evaluated model performance against a test dataset.

See also

This was just the start of what you can achieve with Dataiku and NLP! Visit the reference documentation on Text & Natural Language Processing to learn more.

Tip

For a bonus challenge, return to the sentiment column provided in the raw data and try to perform a multiclass classification. You’ll find that the distribution of sentiment is bimodal. No one writes reviews of movies for which they don’t have an opinion! Perhaps use a Formula to add a third category to polarity (0 for low sentiment scores, 1 for middling, and 2 for high). Are you able to achieve the same level of performance with the addition of a third class?
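As a starting point for the bonus challenge, the binning logic could look like the sketch below. It assumes sentiment is a numeric score, and the two thresholds LOW_MAX and HIGH_MIN are hypothetical; choose cut points that match the distribution you observe in the sentiment column.

```python
LOW_MAX = 4   # hypothetical: scores at or below this count as low
HIGH_MIN = 7  # hypothetical: scores at or above this count as high


def three_class_polarity(sentiment):
    """Map a numeric sentiment score to 0 (low), 1 (middling), or 2 (high)."""
    if sentiment <= LOW_MAX:
        return 0
    if sentiment >= HIGH_MIN:
        return 2
    return 1


for score in (1, 5, 10):
    print(score, "->", three_class_polarity(score))
```

Because the distribution is bimodal, the middle class will likely be much smaller than the other two, so class imbalance is worth keeping in mind when comparing metrics with the binary model.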

Tip

You can find this content (and more) by registering for the Dataiku Academy course, NLP - The Visual Way. When ready, challenge yourself to earn a certification!