How-to | Secure data connections through Azure Private Link

On certain plans, Dataiku lets Launchpad administrators protect access to supported data sources through Azure Private Link.

Azure Private Link provides private connectivity between your Dataiku instance and supported Azure services without exposing your traffic to the public internet. Once Private Link is activated, Dataiku Cloud connects to your data only through a private endpoint.

Important

Azure Private Link isn’t available on all Dataiku plans. If you aren’t sure whether your plan includes it, contact your Dataiku Account Manager or Customer Success Manager.

Dataiku supports only one Private Link per storage account.

If you run into any errors, please contact our support team.

Azure Blob Storage

To configure Azure Private Link for an Azure Blob Storage data source:

Ensure your Azure region is available in Dataiku Cloud

In the Dataiku Cloud Launchpad, navigate to the Extensions panel.

Click + Add an Extension.

Select Azure storage endpoint.

Select the Azure Storage Account region. If the region you need isn’t available, please contact the support team to enable it.

Fill out the Azure Storage endpoint form

Retrieve the storage account name, the resource group, and the subscription ID from your Azure Storage Account page, and enter them in the form.
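If you want to sanity-check these values before filling out the form, the Python sketch below is one option. It is not a Dataiku tool: `storage_account_resource_id` is a hypothetical helper that checks that the subscription ID is a GUID, checks the storage account name against Azure's naming rule (3 to 24 lowercase letters and digits), and assembles the standard Azure Resource Manager resource ID from the three values. You can compare the result with the Resource ID shown for the storage account in the Azure portal. The sample values are placeholders.

```python
import re
import uuid

# Azure storage account names: 3 to 24 characters, lowercase letters and digits only.
STORAGE_ACCOUNT_NAME = re.compile(r"[a-z0-9]{3,24}")


def storage_account_resource_id(subscription_id: str, resource_group: str, account_name: str) -> str:
    """Sanity-check the three form values and return the ARM resource ID they identify."""
    uuid.UUID(subscription_id)  # subscription IDs are GUIDs; raises ValueError otherwise
    if not resource_group.strip():
        raise ValueError("resource group must not be empty")
    if not STORAGE_ACCOUNT_NAME.fullmatch(account_name):
        raise ValueError(f"invalid storage account name: {account_name!r}")
    return (
        f"/subscriptions/{subscription_id}"
        f"/resourceGroups/{resource_group}"
        f"/providers/Microsoft.Storage/storageAccounts/{account_name}"
    )


# Hypothetical values, for illustration only.
print(storage_account_resource_id("00000000-0000-0000-0000-000000000000", "my-rg", "mystorageacct"))
```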

Accept the Private Link request on Azure

In your Azure account, navigate to the Private Link Center, then to Pending Connections, and accept the two connection requests. Private Link functionality is enabled only after you accept these requests.

Create the Azure Blob Storage connection

You can now use the endpoint you created in both new and existing Azure Blob Storage connections:

In your Dataiku Cloud instance, navigate to the Connections panel.

Select + Add a Connection.

Select Azure Blob Storage and fill out the form.

An Azure-hosted Snowflake database

To configure Azure Private Link for a Snowflake database hosted on Azure:

Ensure your Snowflake region is available in Dataiku Cloud

In the Dataiku Cloud Launchpad, navigate to the Extensions panel.

Click + Add an Extension.

Select Azure Snowflake endpoint.

Select the Azure region of your Snowflake account. If the region you need isn’t available, please contact the support team to enable it.

Keep this page open, and continue to the next step in the Snowflake console.

Retrieve the Private Link config from Snowflake

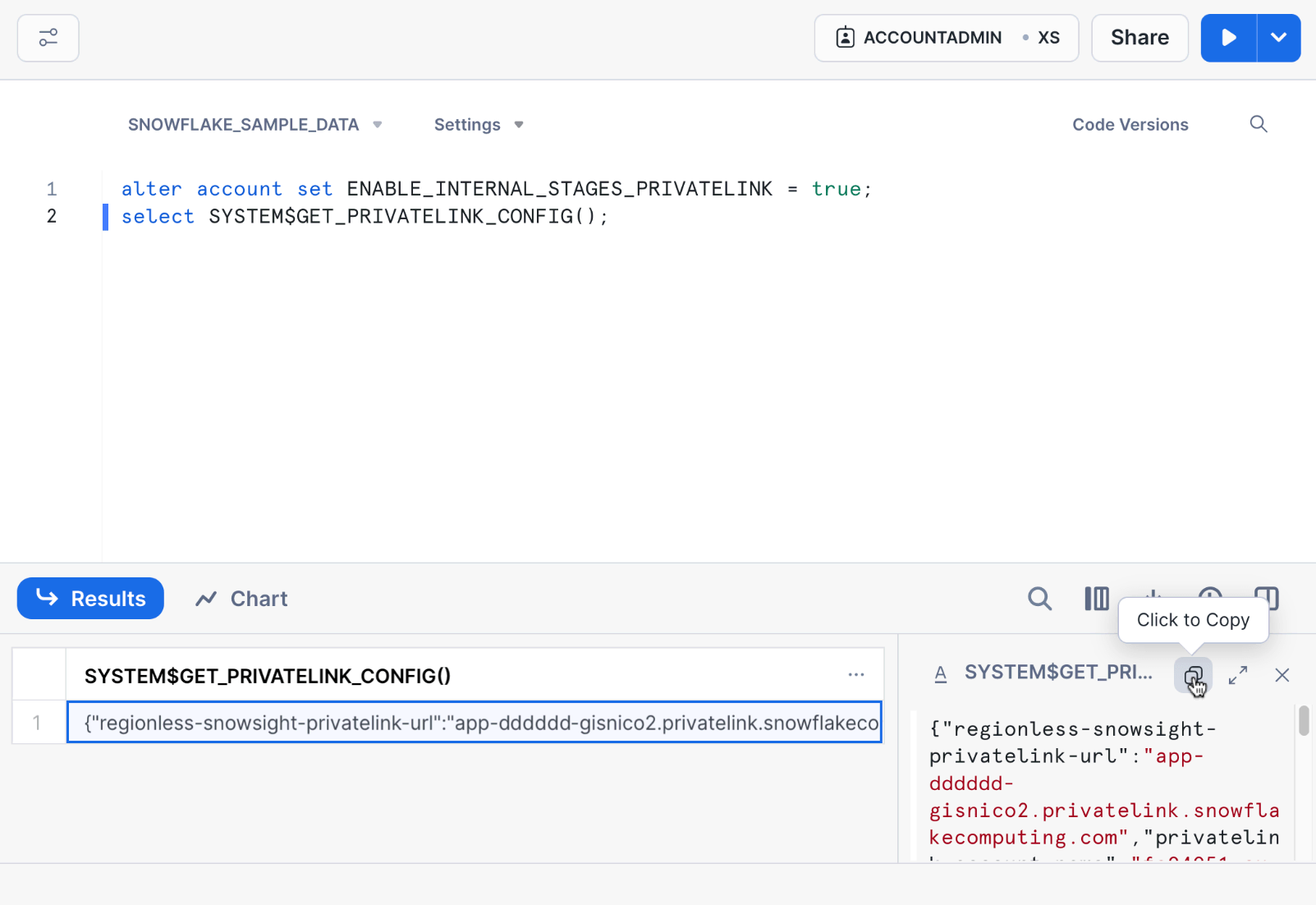

In Snowflake, create a new SQL worksheet.

Run the following SQL commands with the ACCOUNTADMIN role:

```sql
alter account set ENABLE_INTERNAL_STAGES_PRIVATELINK = true;
select SYSTEM$GET_PRIVATELINK_CONFIG();
```

Click the output to open a new panel on the right.

Click the Click to Copy icon to copy the JSON result.
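You paste this JSON as-is into Dataiku, so a partial copy is the main thing to guard against. The Python sketch below is a hypothetical helper, not part of Dataiku or Snowflake: it parses the copied text and reports missing keys. It assumes the Azure output contains `privatelink-account-url` and `privatelink-pls-id`, which matches Snowflake's documented output at the time of writing; compare with your own result. The sample JSON is a placeholder, not real output.

```python
import json

# Assumption: keys present in the Azure output of SYSTEM$GET_PRIVATELINK_CONFIG();
# verify them against your own result.
EXPECTED_KEYS = ("privatelink-account-url", "privatelink-pls-id")


def check_privatelink_config(raw: str) -> dict:
    """Parse the copied JSON and raise ValueError if it is truncated or incomplete."""
    config = json.loads(raw)  # json.JSONDecodeError (a ValueError) on a partial copy
    if not isinstance(config, dict):
        raise ValueError("expected a JSON object")
    missing = [key for key in EXPECTED_KEYS if key not in config]
    if missing:
        raise ValueError(f"missing keys: {missing}")
    return config


# Placeholder values, not real Snowflake output.
sample = """{
  "privatelink-account-url": "xy12345.west-europe.azure.privatelink.snowflakecomputing.com",
  "privatelink-pls-id": "<private-link-service-id>"
}"""
print(check_privatelink_config(sample)["privatelink-account-url"])
```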

Create the Azure Snowflake endpoint extension in the Dataiku Cloud Launchpad

Return to the Extensions tab of the Dataiku Cloud Launchpad.

If the form from the first section is no longer open, click + Add an Extension and select Azure Snowflake endpoint.

Enter any string as the endpoint name; a unique name makes the endpoint easier to identify later.

Select your Snowflake Azure region; it should be available by now.

Paste the JSON you copied in the previous section into the Snowflake Private Link config input.

Click Add.

You can close this page while Dataiku creates the underlying infrastructure. This operation usually takes about 10 minutes.

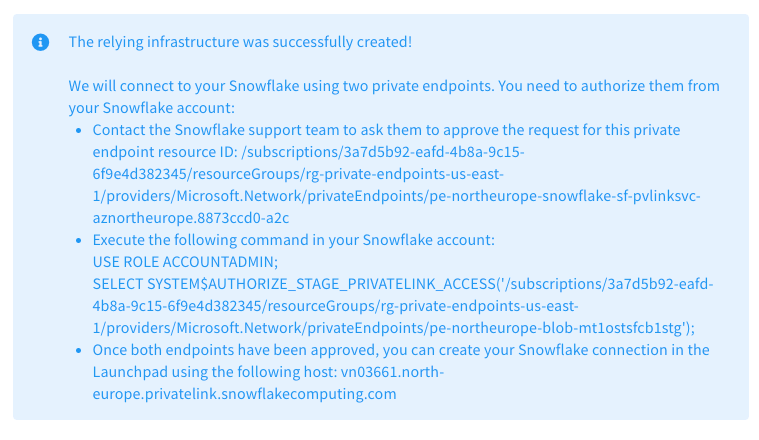

Once the infrastructure is created, refresh the page, click on the endpoint from the list, and then click View Details. It should look like this:

Ask Snowflake support to allow Azure Private Link from Dataiku’s Azure account

In the Snowflake console, go to the Support section in the left panel.

Create a new support case by clicking Support Case in the top-right corner.

Give the case a meaningful title, for example Enable Azure Private Link.

In the details section of your Snowflake support case, request approval of the endpoint you created. You can find the endpoint resource ID in the View Details message from the previous section.

In the Where did the issue occur? section, select Azure Private Link under the Managing Security & Authentication category, leave the severity at Sev-4, and click Create Case.

Wait for Snowflake support to enable Private Link before continuing to the next section.

Use the Azure Snowflake endpoint in your Snowflake connections

You can now use the endpoint you created in both new and existing Snowflake connections:

In your Dataiku Cloud instance, navigate to a new or existing Snowflake connection.

In the host field, enter the host shown in the View Details message from the previous section.
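A value copied by hand from View Details can pick up a scheme, a trailing slash, or stray whitespace. The Python sketch below normalizes it to a bare hostname and checks it against the `.privatelink.snowflakecomputing.com` suffix that Snowflake uses for Private Link hostnames. `normalize_snowflake_host` and the example host are hypothetical; if your View Details host uses a different form, adjust the suffix.

```python
from urllib.parse import urlsplit

# Assumption: Snowflake Private Link hostnames end with this suffix.
PRIVATELINK_SUFFIX = ".privatelink.snowflakecomputing.com"


def normalize_snowflake_host(value: str) -> str:
    """Return a bare, lowercase hostname for the Snowflake connection's host field."""
    value = value.strip()
    # urlsplit only fills in .hostname when the value has a scheme.
    if "://" not in value:
        value = "https://" + value
    host = urlsplit(value).hostname  # lowercased, without port or path
    if not host or not host.endswith(PRIVATELINK_SUFFIX):
        raise ValueError(f"not a Snowflake Private Link host: {value!r}")
    return host


# Hypothetical host, as copied from View Details.
print(normalize_snowflake_host(" https://xy12345.west-europe.azure.privatelink.snowflakecomputing.com/ "))
```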

Note

Your Snowflake connection may use an Azure Blob Storage connection for fast-write. In that case, if you also want that traffic to go through Private Link, set up Private Link for that storage account as described in Azure Blob Storage.

An Azure-hosted Databricks database

To configure Azure Private Link for a Databricks database hosted on Azure:

First, check that your Databricks account and workspace meet Azure’s requirements for enabling Private Link.

Ensure your Azure region is available in Dataiku Cloud

In the Dataiku Cloud Launchpad, navigate to the Extensions panel.

Click + Add an Extension.

Select Azure Databricks endpoint.

Select the Location. If the region you need isn’t available, please contact the support team to enable it.

Fill out the Azure Databricks endpoint form

Retrieve the resource group, the subscription ID, the URL, and the name from your Azure Databricks Service page.

Note

Endpoint name is the name you want to give to your extension on Dataiku Cloud.

Databricks name is the name of the Databricks workspace you created on Azure.

Databricks domain name is the domain name in the workspace URL, without the https:// scheme.
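The Databricks domain name is only the hostname part of the workspace URL shown on your Azure Databricks Service page, and the same value is later used as the host of the Databricks connection. Below is a minimal Python sketch that derives it with the standard library; `databricks_domain_name` and the example URL are hypothetical.

```python
from urllib.parse import urlsplit


def databricks_domain_name(workspace_url: str) -> str:
    """Return the hostname of a workspace URL, without the https:// scheme or path."""
    value = workspace_url.strip()
    if "://" not in value:
        value = "https://" + value  # accept a URL pasted without its scheme
    host = urlsplit(value).hostname
    if not host:
        raise ValueError(f"no hostname in {workspace_url!r}")
    return host


# Hypothetical workspace URL, as shown on the Azure Databricks Service page.
print(databricks_domain_name("https://adb-1234567890123456.7.azuredatabricks.net/"))
```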

Accept the Private Link request on Azure

In your Azure account, navigate to the Private Link Center, then to Pending Connections, and accept the connection requests. Private Link functionality is enabled only after you accept them.

Create the Databricks connection

You can now use the endpoint you created in both new and existing Databricks connections:

In your Dataiku Cloud instance, navigate to the Connections panel.

Select + Add a Connection.

Select Databricks and fill in the required fields. The host should be your Databricks domain name.

An Azure-hosted arbitrary data source

Administrators can leverage Azure Private Link to expose any service running inside their VNets to Dataiku Cloud.

Ensure your Azure region is available in Dataiku Cloud

In the Dataiku Cloud Launchpad, navigate to the Extensions panel.

Click + Add an Extension.

Select Azure private endpoint.

Select the Azure Location. If the location you need isn’t available, please contact the support team to enable it.

Configure the private link with Dataiku

Retrieve the resource group, the subscription ID, and the name of your private link service from the Azure Private Link Center page.

Go to the Extensions panel of the Dataiku Cloud Launchpad.

Click + Add an Extension.

Select Azure private endpoint.

Fill out the Azure private endpoint form.

Note

Name is the name you want to give to your extension on Dataiku Cloud.

Private link service name is the name of the private link service you created on Azure.

Click Add.

Accept the Private Link request on Azure (Azure Private Link Center > Pending Connections).

Create the connection

You can now use the endpoint you created in both new and existing connections:

In the Dataiku Cloud Launchpad, navigate to the Extensions panel.

Find the Azure private endpoint you want to use, click the three-dot button on its right, and select View Details.

Once the private endpoint has been created in the background, the private endpoint IP appears at the bottom of the modal.

Navigate to the Connections panel.

Click + Add a Connection, or select the connection you want to edit.

Fill out the form, using the private endpoint IP you retrieved from the extension as the host parameter.
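Here the host is an IP address from your VNet rather than a hostname. Azure VNets normally use private address ranges, so a public address usually points to a copy error. The Python sketch below assumes you can run Python from your Dataiku Cloud instance (for example in a notebook); `check_private_endpoint` and `can_connect` are hypothetical helpers, and the port depends on the service behind your private link service. `can_connect` only tests TCP reachability, not authentication.

```python
import ipaddress
import socket


def check_private_endpoint(ip: str) -> str:
    """Return the normalized IP, or raise ValueError if it is not a private address."""
    address = ipaddress.ip_address(ip.strip())  # ValueError if the value is not an IP
    # Assumption: your VNet uses private (RFC 1918 or similar) address ranges.
    if not address.is_private:
        raise ValueError(f"{ip!r} is not a private address")
    return str(address)


def can_connect(ip: str, port: int, timeout: float = 5.0) -> bool:
    """Return True if a TCP connection to ip:port succeeds within the timeout."""
    host = check_private_endpoint(ip)  # raises ValueError for a non-private address
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable, or timed out
        return False


# Hypothetical values: use the IP from View Details and your service's port.
print(check_private_endpoint("10.0.1.4"))
# print(can_connect("10.0.1.4", 5432))
```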

An Azure SQL database

Administrators can leverage Azure Private Link for the following Azure SQL databases: PostgreSQL, MySQL, or SQLServer.

Note

Depending on your Azure database type, Private Link may be available only for servers configured with public access networking. For example, Azure Database for PostgreSQL and MySQL don’t support creating Private Links for servers configured with private access (VNet integration). This is an Azure limitation. For details, refer to Microsoft’s documentation on Private Link for Azure Database for PostgreSQL or MySQL.

When using Azure SQL Database, each database belongs to a logical server. In Dataiku Cloud, every Azure SQL Endpoint is linked to an Azure SQL Database logical server. If your databases are on the same logical server, you can use a single Azure SQL Endpoint for all of them. For more information, see Microsoft’s article What’s a logical server in Azure SQL Database and Azure Synapse?
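Because each Azure SQL Endpoint maps to one logical server, the number of endpoints you need is the number of distinct server names among your databases. The Python sketch below groups a hypothetical inventory of (server name, database name) pairs to illustrate this; `endpoints_needed` is an illustrative helper, not a Dataiku API.

```python
from collections import defaultdict


def endpoints_needed(databases: list[tuple[str, str]]) -> dict[str, list[str]]:
    """Group databases by logical server; each key needs one Azure SQL Endpoint."""
    by_server = defaultdict(list)
    for server, database in databases:
        # Server names are DNS hostnames, so compare them case-insensitively.
        by_server[server.strip().lower()].append(database)
    return dict(by_server)


# Hypothetical inventory of databases.
inventory = [
    ("sales-srv.database.windows.net", "orders"),
    ("Sales-Srv.database.windows.net", "customers"),
    ("hr-srv.database.windows.net", "payroll"),
]
groups = endpoints_needed(inventory)
print(len(groups), "endpoints:", groups)
```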

Ensure your Azure region is available in Dataiku Cloud

In the Dataiku Cloud Launchpad, navigate to the Extensions panel.

Click + Add an Extension.

Select Azure SQL endpoint.

Select the SQL location. If the region you need isn’t available, please contact the support team to enable it.

Fill out the Azure SQL endpoint form

Retrieve the resource group, the subscription ID, the SQL type, and the SQL server name from your Azure database page.

Note

Endpoint name is the name you want to give to your extension on Dataiku Cloud.

SQL type is the type of the SQL database you created on Azure. Only Azure Database for PostgreSQL flexible servers, Azure Database for PostgreSQL servers, Azure Database for MySQL servers, Azure Database for MySQL flexible servers, and Azure SQL Database are supported.

SQL full server name is the “Server name” found on your database page.

Accept the Private Link request on Azure

Navigate to the Networking tab of your database and accept the connection requests. Private Link functionality is enabled only after you accept them.

Create the SQL connection

You can now use the endpoint you created in both new and existing PostgreSQL, MySQL, or SQLServer connections:

In your Dataiku Cloud instance, navigate to the Connections panel.

Select + Add a Connection.

Select PostgreSQL, MySQL, or SQLServer, depending on the connection you want to use, and fill out the form. For the host parameter, use the host shown on your Azure database page.
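The connection type to select follows from the server name on your Azure database page. The Python sketch below maps the standard Azure server-name suffixes (`.postgres.database.azure.com`, `.mysql.database.azure.com`, `.database.windows.net`) to a Dataiku connection type and the engine's default port. `connection_settings` is a hypothetical helper; the ports are engine defaults, so check your server settings if you changed them.

```python
# Standard Azure server-name suffixes -> (Dataiku connection type, default port).
# Assumption: your server uses the engine's default port.
SUFFIXES = {
    ".postgres.database.azure.com": ("PostgreSQL", 5432),
    ".mysql.database.azure.com": ("MySQL", 3306),
    ".database.windows.net": ("SQLServer", 1433),
}


def connection_settings(server_name: str) -> tuple[str, str, int]:
    """Return (connection type, host, default port) for an Azure database server name."""
    host = server_name.strip().lower()
    for suffix, (connection_type, port) in SUFFIXES.items():
        if host.endswith(suffix):
            return connection_type, host, port
    raise ValueError(f"unrecognized Azure database server name: {server_name!r}")


# Hypothetical server name.
print(connection_settings("my-pg-server.postgres.database.azure.com"))
```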

An Azure managed database

Administrators can leverage Azure Private Link for an Azure managed database.

Ensure your Azure region is available in Dataiku Cloud

In the Dataiku Cloud Launchpad, navigate to the Extensions panel.

Click + Add an Extension.

Select Azure managed database endpoint.

Select the Azure Location. If the region you need isn’t available, please contact the support team to enable it.

Fill out the Azure managed database endpoint form

Retrieve the resource group, the subscription ID, and the host from your Azure managed database page.

Note

Endpoint name is the name you want to give to your extension on Dataiku Cloud.

Host is the host of your managed database. It ends with .database.windows.net.

Accept the Private Link request on Azure

Navigate to the Private Link Center in your Azure account.

Go to Pending Connections to accept the connection requests.

Private Link functionality is enabled only after you accept these requests.

Create the SQLServer connection

You can now use the endpoint you created in both new and existing SQLServer connections:

In your Dataiku Cloud instance, navigate to the Connections panel.

Select + Add a Connection.

Select SQLServer and complete the form. The value for the host parameter is the same host you use for the endpoint.
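Because the SQLServer connection uses the same host as the endpoint, a quick comparison can catch copy errors such as different casing or trailing whitespace. The Python sketch below normalizes both values, checks the `.database.windows.net` suffix required by the endpoint form, and compares them. `check_same_host` and the example host are hypothetical.

```python
HOST_SUFFIX = ".database.windows.net"


def normalize_host(value: str) -> str:
    """Lowercase the host and drop surrounding whitespace and a trailing dot."""
    host = value.strip().lower().rstrip(".")
    # Require a non-empty name in front of the documented suffix.
    if not host.endswith(HOST_SUFFIX) or len(host) == len(HOST_SUFFIX):
        raise ValueError(f"expected a host ending with {HOST_SUFFIX}: {value!r}")
    return host


def check_same_host(endpoint_host: str, connection_host: str) -> str:
    """Return the shared host, or raise ValueError if the two hosts differ."""
    endpoint, connection = normalize_host(endpoint_host), normalize_host(connection_host)
    if endpoint != connection:
        raise ValueError(f"host mismatch: {endpoint!r} != {connection!r}")
    return endpoint


# Hypothetical managed database host.
print(check_same_host("my-instance.abc123.database.windows.net",
                      "My-Instance.abc123.database.windows.net "))
```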

An Azure Synapse Analytics workspace

Administrators can leverage Azure Private Link for an Azure Synapse Analytics workspace.

Ensure your Azure region is available in Dataiku Cloud

In the Dataiku Cloud Launchpad, navigate to the Extensions panel.

Click + Add an Extension.

Select Azure Synapse endpoint.

Select the Azure Location. If the region you need isn’t available, please contact the support team to enable it.

Fill out the Azure Synapse endpoint form

Retrieve the resource group, the subscription ID, and the workspace name from your Azure Synapse workspace page.

Note

Endpoint name is the name you want to give to your extension on Dataiku Cloud.

Synapse workspace name is the name of the Synapse workspace you created on Azure.

SQL pool type is the type of SQL pool used for the Private Link connection: either serverless or dedicated.

Accept the Private Link request on Azure

Navigate to the Private Link Center in your Azure account.

Go to Pending Connections to accept the connection request.

Private Link functionality will only be enabled after you accept this request.

Create the Synapse connection

You can now use the endpoint you created in both new and existing Synapse connections:

In your Dataiku Cloud instance, navigate to the Connections panel.

Select + Add a Connection.

Select Synapse and complete the form.

Note

You can find the value for the host on your Azure Synapse workspace page. It depends on the SQL pool type you chose in the extension:

Use the Dedicated SQL endpoint if the SQL pool type is Dedicated SQL Pool.

Use the Serverless SQL endpoint if the SQL pool type is Serverless SQL Pool.
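In the Azure public cloud, the two endpoints on the workspace page usually follow a fixed pattern: `<workspace>.sql.azuresynapse.net` for the dedicated SQL endpoint and `<workspace>-ondemand.sql.azuresynapse.net` for the serverless one. The Python sketch below picks the host from the pool type under that assumption. `synapse_host` is hypothetical, and the value shown on your workspace page remains the one to trust.

```python
def synapse_host(workspace_name: str, sql_pool_type: str) -> str:
    """Return the SQL endpoint host matching the SQL pool type chosen in the extension."""
    workspace = workspace_name.strip().lower()
    pool_type = sql_pool_type.strip().lower()
    # Assumption: public-cloud hostname patterns for Synapse SQL endpoints.
    if pool_type == "dedicated":
        return f"{workspace}.sql.azuresynapse.net"
    if pool_type == "serverless":
        return f"{workspace}-ondemand.sql.azuresynapse.net"
    raise ValueError(f"SQL pool type must be 'dedicated' or 'serverless', got {sql_pool_type!r}")


# Hypothetical workspace name.
print(synapse_host("my-workspace", "serverless"))
```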

An on-premises data source

You can configure Azure Private Link for on-premises data sources if you have access to an Azure account:

Connect your on-premises data source to your VNet as described in the Azure documentation on connecting an on-premises network to Azure.

Follow the steps from An Azure-hosted arbitrary data source to connect to your data source.