Tutorial | Using AWS AssumeRole with an S3 connection to persist datasets#

You can use an Amazon Simple Storage Service (S3) connection to persist datasets in Dataiku. When working with S3 connections, one of the credential mechanisms available is the native AWS AssumeRole.

About the AWS AssumeRole mechanism#

Assuming a role means obtaining a set of temporary credentials associated with the role and not with the principal that assumed the role.

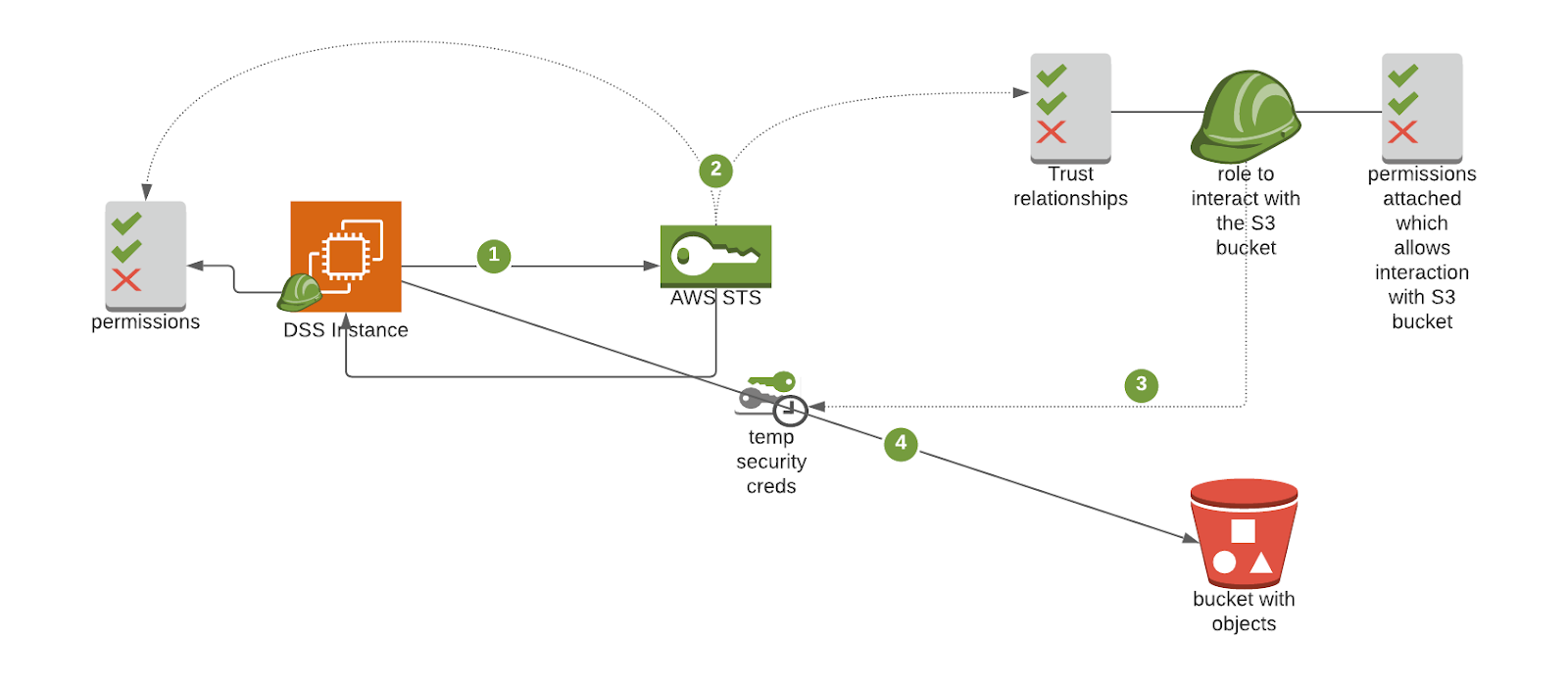

How does the AWS AssumeRole mechanism work to allow DSS to interact with the S3 bucket? The following interactions will be carried out with the role assumed, therefore with the rights associated with it:

An Amazon EC2 instance running DSS is associated with an instance profile. This is basically an AWS role with associated permissions. To interact with an AWS resource–an Amazon S3 bucket, for example–the instance profile is used to request temporary credentials of the relevant role to the AWS Security Token Service (STS).

STS is an AWS web service that allows you to request temporary security credentials for your AWS resources. STS performs the following checks before allowing the assume request:

Is the instance profile allowed to perform this AssumeRole action by checking its own permissions in IAM?

Is the instance profile trusted by the distant role to assume it?

If both checks pass, STS provides temporary security credentials consisting of an Access Key Id, a Secret Access Key, a Security Token, and an Expiration timestamp.

DSS (including Jobs run by DSS) may now interact with the S3 bucket using these credentials and associated permissions. AWS CloudTrail tracks every interaction with a dataset stored on an S3 bucket.

Note

You can use AWS CloudTrail to create a trail for auditing details of S3 interactions. Every interaction concerning the role assumed and the role session is auditable and traceable including the role session name.

An interaction tracked and stored by AWS CloudTrail uses the following pattern:

"arn": "arn:aws:sts::<AccountID>:assumed-role/<IAM role defined in the S3 connection>/dss-conn-<DSS connection name>-assumed-for-<DSS frontend User>"

Configure an S3 connection using STS with AssumeRole#

Prerequisites#

To configure an S3 connection using AWS STS with AWS AssumeRole, the following requirements must be met:

The DSS instance profile must be able to use AWS STS. This is needed to receive temporary credentials.

The role to be assumed has been properly configured, including policies and a trust relationship, to interact with the bucket.

Configure the S3 Connection#

To configure a new S3 connection in DSS:

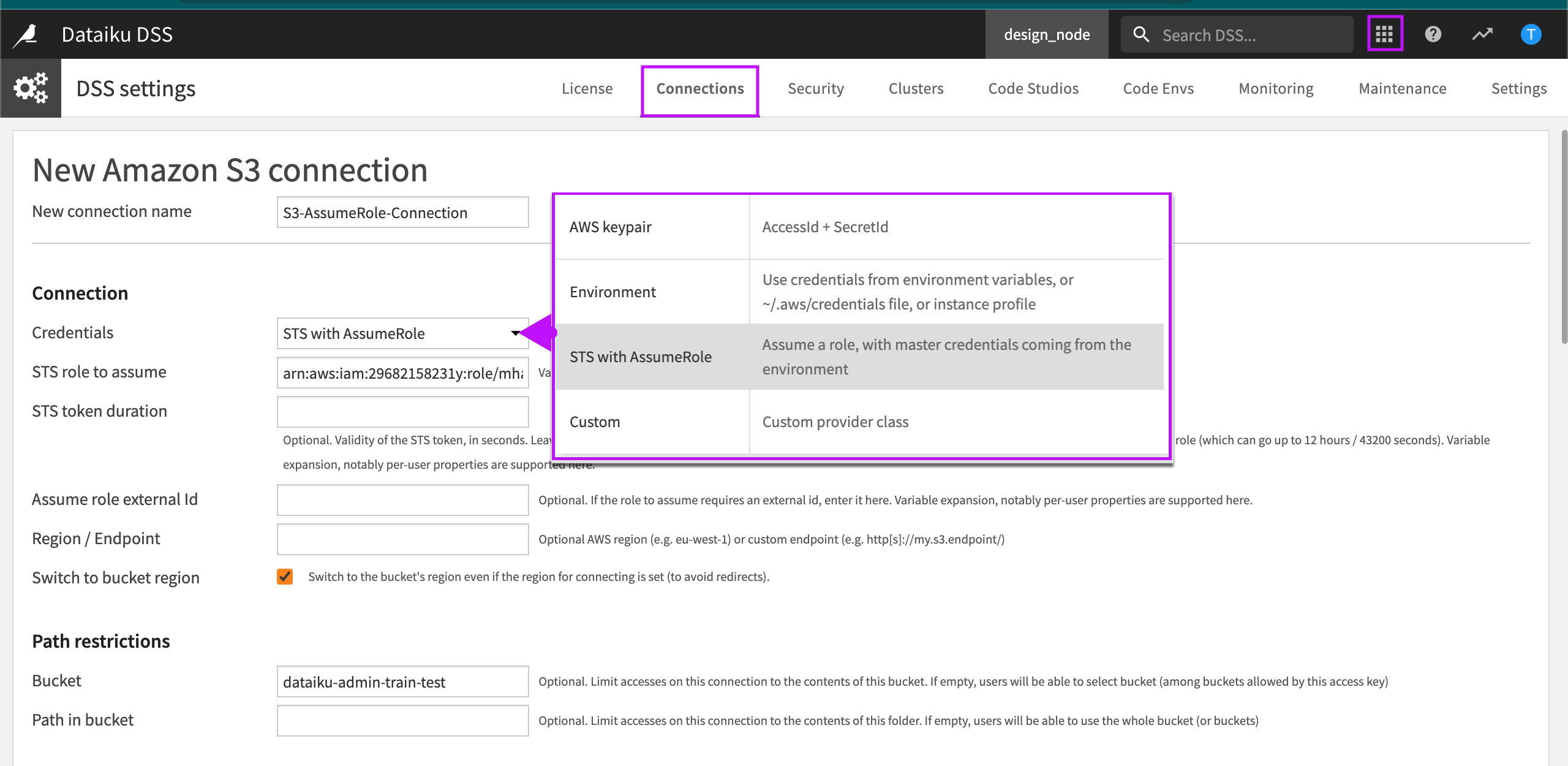

In Credentials, select STS with AssumeRole as the credentials type.

In STS role to assume, enter the Amazon Resource Name (ARN) of the role that will interact with S3 buckets.

Configure optional parameters according to the guidelines.

Select Create.

Optional Parameters

STS token duration. You can override the default setting. For example, to account for long running, enter the number of seconds you want the STS token to remain valid.

Assume role external Id. If the role to assume has been created on another account and requires an external ID then enter it here.

Region / Endpoint. If the S3 API must be reached through a specific VPC endpoint or region then enter it here.

Switch to bucket region. Select this option if the connection is restricted to a specific bucket. If this option isn’t selected then all buckets reachable with the role may be browsed.

Bucket. Enter a bucket if you want to limit accesses on the connection to the contents of a specific bucket.

Path in bucket. You can specify a specific path in the bucket. This provides more granular restriction inside the bucket on a specific prefix.

Modifying “Details readable by” Security Settings to Improve Performance#

Jobs triggered through DSS can be executed locally.

However, if you want to leverage full parallelization and elasticity for workloads, you can execute Spark jobs over EKS. “Details readable by” is a security parameter can have a huge impact on the capability for jobs to parallelize data requests on S3. Jobs include recipes and notebooks executed using Spark over Kubernetes. If these jobs are able to parallelize (as configured by the parameter), they can reach the data source on their own, improving performance.

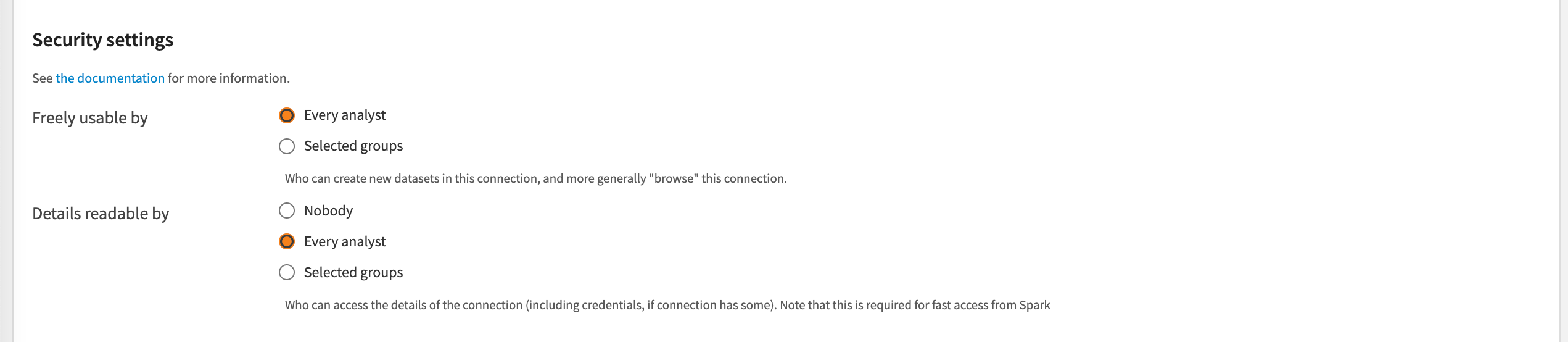

To configure this, scroll down to the Security Settings section. Propagate temporary credentials for distributed compute (Spark jobs over EKS) by changing the Details readable by parameter from Nobody to Every analyst or Selected groups (relevant groups).

How does this parameter work?

The Details readable by parameter defines if a specific group is allowed to retrieve the credentials information from the connection to forward the credentials directly to the Spark executors running on EKS. Every step of this mechanism is handled by DSS.

When this parameter is allowed for a user, jobs can access the data stored on S3 directly by transmitting to the Spark jobs the temporary set of credentials (including the Access Key, Secret Key, and Token) to be used directly in a Spark context. This will create a fast path between executors and partitioned data on S3 increasing drastically the speed of execution.

If this action isn’t allowed for a job, DSS falls back on a streaming mechanism, also called a slow path. This means that DSS instance will be in charge of retrieving the data from S3 and serving it to one of the Spark jobs through an API endpoint. This results in a bottleneck and doesn’t take advantage of available parallelization.