Solution | EU AI Act Readiness

Overview

This Solution provides a governance approach aligned to the EU Artificial Intelligence Act (“EU AI Act”). It supports a variety of users in qualifying their AI systems and building out the required documentation. These users include data scientists, analyst leads, and risk, compliance, and legal teams.

The Solution provides templates specific to the high risk tier. Templates for the limited and minimal risk tiers should be developed at the organization level. This is because the Act leaves it to each organization to decide whether to voluntarily adopt all or some of the high risk obligations for those tiers.

Legal disclaimer

Dataiku’s EU AI Act Readiness Solution is designed to assist users in evaluating potential compliance with the EU AI Act based on publicly available information, regulatory guidance, and industry practices. However, this tool doesn’t constitute legal advice, regulatory approval, or a definitive determination of compliance. The EU AI Act is subject to ongoing interpretation, regulatory updates, and enforcement guidance, which may affect how compliance is assessed.

Users should be aware that:

This tool provides general informational support and shouldn’t be relied upon as a substitute for professional legal counsel.

Compliance determinations depend on specific use cases, sectoral regulations, and evolving legal interpretations.

Dataiku doesn’t guarantee the accuracy, completeness, or applicability of the tool’s outputs for any specific AI system or regulatory requirement.

By using this tool, users acknowledge that Dataiku isn’t liable for any decisions, actions, or legal consequences arising from reliance on its assessments. We strongly recommend consulting a qualified legal expert or regulatory professional before making any compliance-related decisions.

Introduction to the EU AI Act and AI Act Readiness

The objective of the EU AI Act is to “promote the uptake of human-centric and trustworthy artificial intelligence (AI), while ensuring a high level of protection of health, safety, fundamental rights enshrined in the Charter, including democracy, the rule of law and environmental protection, against the harmful effects of AI systems in the Union and supporting innovation.” (Art 1(1))

To achieve this, the Act sets out new obligations for organizations operating within the European Union. These may be organizations that are either:

Situated within the EU and providing or using AI systems.

Situated outside the EU but selling or operationalizing AI systems within the EU.

The Act’s requirements are therefore extraterritorial. Different actors face different responsibilities, depending on whether they’re providers, deployers, authorized representatives, importers, or distributors.

Among its innovations, the AI Act imposes a four-part risk tiering with obligations for each tier:

AI systems characterized as having unacceptable risk are prohibited from being operationalized in the EU.

High risk AI systems face a number of new requirements before being placed on the market.

Limited risk AI systems face transparency obligations.

The minimal risk tier faces no explicit obligations.

For the latter two categories, the AI Act encourages organizations to voluntarily adopt the high risk obligations as a matter of good practice (Art 95).

The EU AI Act was published in the Official Journal of the European Union on July 12, 2024. The Act’s requirements apply in stages over a two-year period.

As of February 2025, AI systems posing unacceptable risk are prohibited from being placed on the market. Obligations associated with the high risk tier are expected to apply from August 2026. This timeline should prompt organizations to prepare for compliance now. Noncompliance can result in fines of up to €35 million or 7% of total worldwide annual turnover for the previous financial year, whichever is higher.
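The “whichever is higher” rule is a simple maximum of a fixed cap and a turnover-based cap. The following minimal sketch shows the arithmetic for the ceiling quoted above. The function name is illustrative, and the function computes only the upper bound, not the penalty an authority would actually impose.

```python
def max_fine_eur(annual_turnover_eur: float) -> float:
    """Return the fine ceiling quoted above: EUR 35 million or 7% of the
    previous financial year's annual turnover, whichever is higher."""
    fixed_cap = 35_000_000.0
    turnover_cap = annual_turnover_eur * 7 / 100  # 7% of turnover
    return max(fixed_cap, turnover_cap)


# EUR 1 billion turnover: 7% (EUR 70 million) exceeds the fixed cap.
print(max_fine_eur(1_000_000_000))  # 70000000.0
# EUR 100 million turnover: 7% is EUR 7 million, so the fixed cap applies.
print(max_fine_eur(100_000_000))    # 35000000.0
```

The turnover-based cap overtakes the fixed cap at EUR 500 million of annual turnover.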

Overall, a major consequence of the AI Act is a new incentive for organizations to qualify AI systems more deliberately and systematically, so that the right compliance activities are undertaken. The EU AI Act Readiness Solution is designed to help multi-stakeholder teams secure the relevant documentation across the AI lifecycle.

Installation

Please reach out to your Account Team, who will support you with installing the Solution.

Technical requirements

For this Solution to work on Dataiku’s Govern node, the user must have access to Advanced Govern. Organizations can implement the Solution on either cloud or self-managed instances of Dataiku.

We recommend running Dataiku v13.0.0 or later. These versions enable conditional workflow views and table artifact references, which the Solution needs for full functionality. On earlier versions of Dataiku and Dataiku Govern, you will need to adapt the conditionality, table views, and Python hooks used in the Solution.

Walkthrough

The EU AI Act Readiness Solution provides users with several templates, and we recommend that you create one custom page, “Pre-Assessment.” The Solution’s templates are discussed here in the context of the recommended practice for using the Solution.

For clarification, a custom Govern node artifact doesn’t have an associated Design node artifact. This is unlike project, model, model version, and bundle artifacts, which are linked to their associated artifacts on the Design node. Custom Govern artifacts are identified as such in this walkthrough.

A detailed walkthrough is provided for the Pre-Assessment and Bundle templates and a high-level walkthrough is provided for the Project and Model Version templates.

Pre-assessment workflow

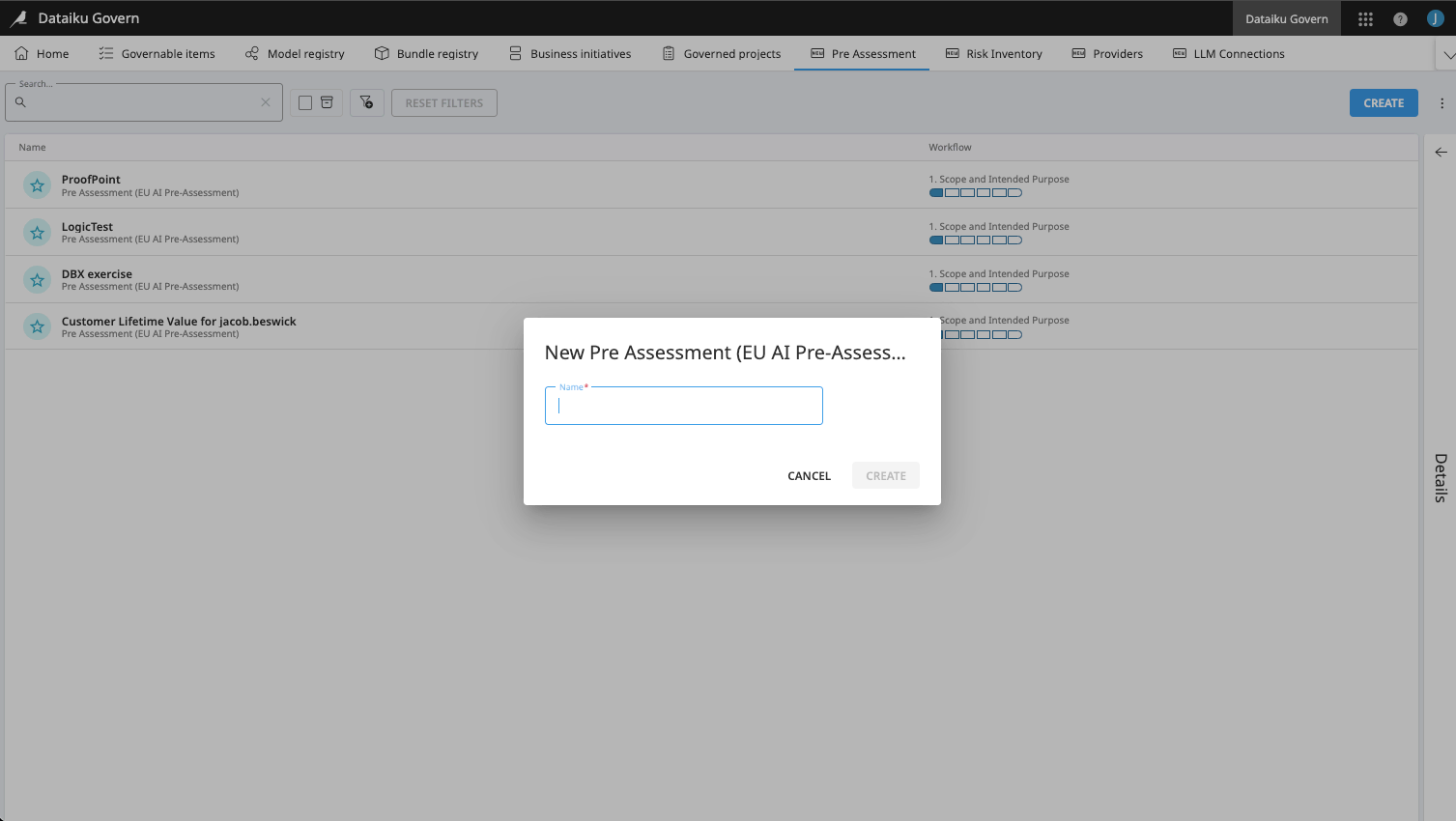

From the newly created “Pre-Assessment” custom page, click Create to generate a new pre-assessment artifact. This artifact provides a workflow that’s essential to qualifying your AI system before work begins in the Design node. We advise reusing the pre-assessment’s title for the project artifact.

By completing the pre-assessment, users can qualify the proposal for a Design node project. The first questions ask whether the proposed system will be deployed within the EU and whether it meets the definition of an AI system under the EU AI Act.

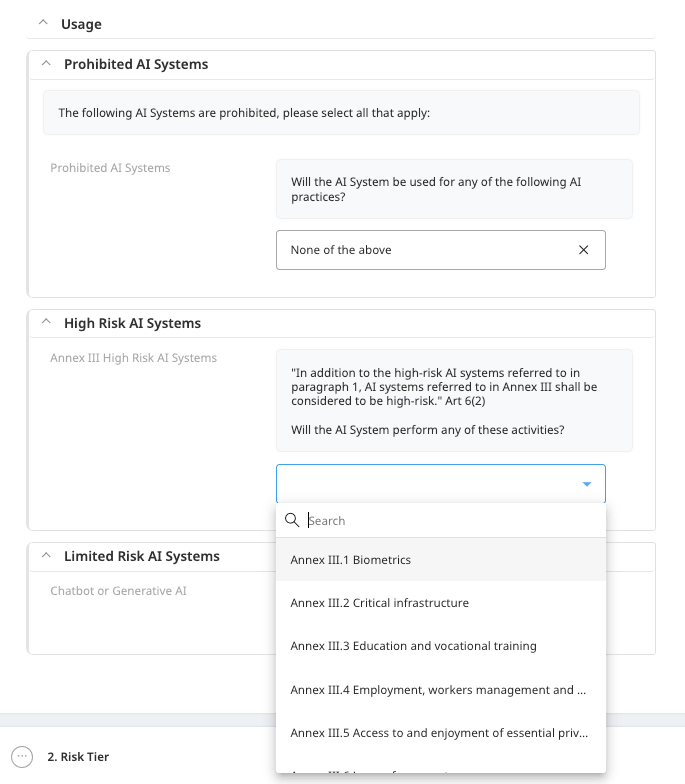

The user then describes the “intended purpose” of the AI system and uses this as a reference when qualifying the proposed AI system against the risk level. The risk tiering is implemented through conditional views. Responding “none of the above” to “prohibited AI systems” will unlock the high risk use cases associated with Annex III.

If “none of the above” is also selected for the Annex III use cases, the second path to high risk is unlocked. This path covers AI systems associated with other Union harmonization legislation. If “none of the above” is selected on this path as well, the background logic concludes that the AI system is neither “prohibited” nor “high” risk.

Questions relating to “limited risk” sit outside this logic. We recommend that users complete this section whether or not the AI system qualifies as high risk.

If prohibited, high, or limited risk tiers aren’t selected, the background logic concludes that the AI system is “minimal risk.”
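The following is a minimal sketch of the tiering logic described above. It assumes each question is a multi-select whose “escape” option is the literal string “none of the above.” The dataclass and its field names are hypothetical, and this is not the Solution’s actual hook code. Limited risk is evaluated independently, so a high risk system can also carry transparency obligations.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

NONE_OF_THE_ABOVE = "none of the above"


@dataclass
class PreAssessmentAnswers:
    # Hypothetical field names; each holds the options selected in the form.
    prohibited_practices: List[str] = field(default_factory=list)
    annex_iii_use_cases: List[str] = field(default_factory=list)
    harmonized_legislation: List[str] = field(default_factory=list)
    limited_risk_criteria: List[str] = field(default_factory=list)


def _flagged(selections: List[str]) -> bool:
    """True if at least one real option (not 'none of the above') is selected."""
    return any(s != NONE_OF_THE_ABOVE for s in selections)


def determine_risk_tier(answers: PreAssessmentAnswers) -> Tuple[str, bool]:
    """Return (risk tier, whether limited-risk transparency questions apply)."""
    # Limited risk sits outside the prohibited/high logic, so compute it separately.
    transparency = _flagged(answers.limited_risk_criteria)
    if _flagged(answers.prohibited_practices):
        return "prohibited", transparency
    # First path to high risk: Annex III use cases.
    if _flagged(answers.annex_iii_use_cases):
        return "high", transparency
    # Second path to high risk: other Union harmonization legislation.
    if _flagged(answers.harmonized_legislation):
        return "high", transparency
    if transparency:
        return "limited", transparency
    return "minimal", transparency
```

For example, an employment screening system that also interacts with people directly might yield `("high", True)`. That result would then be confirmed by the user in the next step.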

The next workflow step, “Usage,” asks the user to confirm that the risk tier produced by the logic is correct. Checking the confirmation Boolean unlocks later workflow steps.

Once the risk tier is validated, users add the relevant AI system actors using the table view. “Actors” is a custom Govern artifact for documenting the actors involved with the AI system, which supports documentation gathering in later steps. Click “Add New” to create a new Actor and fill out the modal as appropriate.
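To make the Actors table concrete, the following minimal sketch models one row. The role values follow the operator categories the Act defines. The `Actor` fields and the `actors_by_role` helper are hypothetical and don’t reflect the Solution’s actual artifact fields.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List


class ActorRole(Enum):
    PROVIDER = "provider"
    DEPLOYER = "deployer"
    AUTHORIZED_REPRESENTATIVE = "authorized representative"
    IMPORTER = "importer"
    DISTRIBUTOR = "distributor"


@dataclass(frozen=True)
class Actor:
    # Hypothetical fields mirroring what the "Add New" modal might capture.
    name: str
    role: ActorRole
    established_in_eu: bool
    contact: str = ""


def actors_by_role(actors: List[Actor], role: ActorRole) -> List[Actor]:
    """Filter the Actors table, e.g. to find who must supply which documents."""
    return [a for a in actors if a.role is role]
```

Recording a role on each actor lets you query the table by responsibility later in the workflow, for example to find the provider when gathering technical documentation.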

In the next workflow step, “Risk Management System,” users can identify risks and controls, and upload their risk management policy for audit purposes. Like the “Actors” table, the “Risk Management System” uses a custom artifact, “Risk Flags.” When adding a Risk Flag, we recommend giving it the same name as your pre-assessment with the suffix “- Risk n,” where n is a sequential number.
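The naming convention can be applied mechanically. The following minimal sketch picks the next free number for a pre-assessment’s risk flags. The helper name is hypothetical, and it assumes existing names follow the “<pre-assessment name> - Risk n” pattern exactly.

```python
import re
from typing import List


def next_risk_flag_name(pre_assessment_name: str, existing_names: List[str]) -> str:
    """Return '<pre-assessment name> - Risk n', with n one above the highest in use."""
    pattern = re.compile(re.escape(pre_assessment_name) + r" - Risk (\d+)$")
    numbers = [int(m.group(1)) for name in existing_names if (m := pattern.match(name))]
    return f"{pre_assessment_name} - Risk {max(numbers, default=0) + 1}"
```

Taking the maximum rather than counting existing flags avoids reusing a number after a flag has been deleted.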

Within a Risk Flag, users can perform the following actions:
- Assign the risk to either the intended purpose or foreseeable misuse.
- Select the risk and a control, each from a list configurable by an Admin.
- Describe the risk and rate its severity.

Click “Add” and fill out the modal as appropriate.
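A Risk Flag combines free text with values constrained to Admin-managed lists. The following minimal sketch validates a flag against such lists. The example option values, the severity scale, and the `RiskFlag` field names are all hypothetical placeholders for whatever your Admin configures.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical Admin-configured option lists; in Govern these are maintained by an Admin.
ADMIN_RISKS = {"bias in training data", "model drift"}
ADMIN_CONTROLS = {"fairness testing", "scheduled retraining"}
LOCATIONS = {"intended purpose", "foreseeable misuse"}
SEVERITIES = ("low", "medium", "high")  # assumed scale


@dataclass
class RiskFlag:
    name: str
    location: str
    risk: str
    control: str
    description: str
    severity: str

    def validate(self) -> List[str]:
        """Return a list of problems; an empty list means the flag is complete."""
        problems = []
        if self.location not in LOCATIONS:
            problems.append(f"unknown location: {self.location!r}")
        if self.risk not in ADMIN_RISKS:
            problems.append(f"risk not in Admin list: {self.risk!r}")
        if self.control not in ADMIN_CONTROLS:
            problems.append(f"control not in Admin list: {self.control!r}")
        if not self.description.strip():
            problems.append("description is empty")
        if self.severity not in SEVERITIES:
            problems.append(f"unknown severity: {self.severity!r}")
        return problems
```

Returning every problem at once, rather than stopping at the first, lets a reviewer fix a flag in a single pass.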

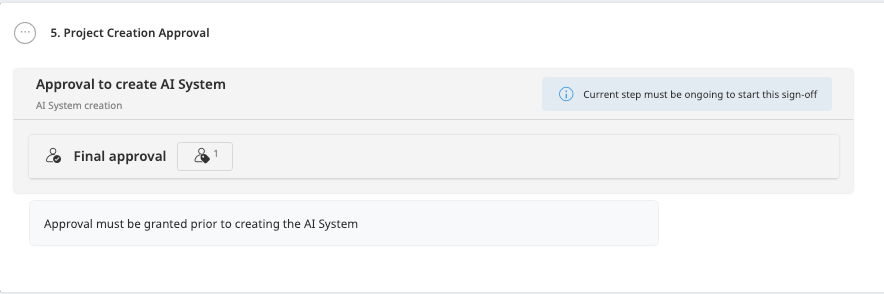

In the “Project Creation Approval” workflow step, users can review the proposed AI system and decide whether it should be created on the Design node. For consistency, we recommend that Design node artifacts share the same name as your pre-assessment.

Once the previous workflow steps are complete, click “Delegate” to select the final approver and request approval. The final approver can then provide their sign-off as appropriate. A sign-off unlocks the final workflow step, “Associated Governed Project.”
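The gating in this workflow can be modeled as a sequence of checkpoints. The “Usage” Boolean unlocks the later steps, and the final sign-off unlocks “Associated Governed Project.” In the following minimal sketch, the step order and the `PreAssessmentState` fields are assumptions drawn from the step names in this walkthrough, not the Solution’s blueprint configuration. Govern enforces the real gating itself.

```python
from dataclasses import dataclass, field
from typing import List, Set

# Assumed step order, based on the step names used in this walkthrough.
STEPS = [
    "Risk Tier",
    "Usage",
    "Risk Management System",
    "Project Creation Approval",
    "Associated Governed Project",
]


@dataclass
class PreAssessmentState:
    completed: Set[str] = field(default_factory=set)
    risk_tier_confirmed: bool = False  # the Boolean in the "Usage" step
    sign_off_approved: bool = False    # the final approver's decision


def unlocked_steps(state: PreAssessmentState) -> List[str]:
    """Return the steps a user can open, stopping at the first gate not yet passed."""
    unlocked = [STEPS[0]]
    for previous, step in zip(STEPS, STEPS[1:]):
        if previous not in state.completed:
            break
        if step == "Risk Management System" and not state.risk_tier_confirmed:
            break
        if step == "Associated Governed Project" and not state.sign_off_approved:
            break
        unlocked.append(step)
    return unlocked
```

With every step before the last completed but no sign-off yet, `unlocked_steps` stops at “Project Creation Approval.”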

Sign-off signals to your data science teams that they can create a project. You may also use Dataiku APIs to create a blank project on the Design node with the same name as the pre-assessment. Once the project has been created, govern it using the project template that matches the risk level determined in the “Risk Tier” workflow step.
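If you use the Dataiku APIs rather than the UI, you can script project creation. The following minimal sketch assumes the `dataikuapi` package, whose `DSSClient.create_project(project_key, name, owner)` creates a blank project. The key-derivation rule in `project_key_from_title` (uppercase letters, digits, and underscores) is an assumption, so check it against your instance’s project key constraints. Both helper names are hypothetical.

```python
import re


def project_key_from_title(title: str) -> str:
    """Derive a project key: uppercase, with runs of non-alphanumerics turned into '_'."""
    key = re.sub(r"[^A-Za-z0-9]+", "_", title).strip("_").upper()
    return key or "UNTITLED_PROJECT"


def create_project_for_pre_assessment(client, title: str, owner: str):
    """Create a blank Design node project named after an approved pre-assessment.

    `client` is expected to be a dataikuapi.DSSClient; create_project takes
    (project_key, name, owner) and returns a handle to the new project.
    """
    return client.create_project(project_key_from_title(title), title, owner)


# Example (requires a reachable instance and an API key):
# import dataikuapi
# client = dataikuapi.DSSClient("https://dss.example.com", "YOUR_API_KEY")
# create_project_for_pre_assessment(client, "Loan Triage", "admin")
```

Passing the client in as a parameter keeps the helper testable without a live instance.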

Returning to the pre-assessment, click + Add at the Associated Governed Project workflow step to associate your pre-assessment and project templates.

Govern project workflow

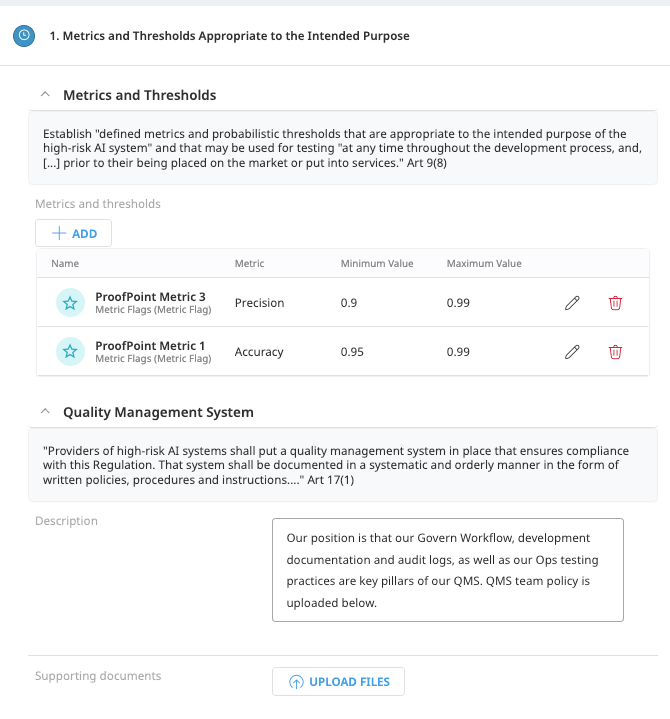

The project artifact serves as an organizing layer for model versions and bundles. Some critical information is captured at the project level. This includes “Metrics and Thresholds” and “Quality Management System” in the first workflow step, and “Post-Market Monitoring Plan” in the final workflow step.

Model and model version workflows

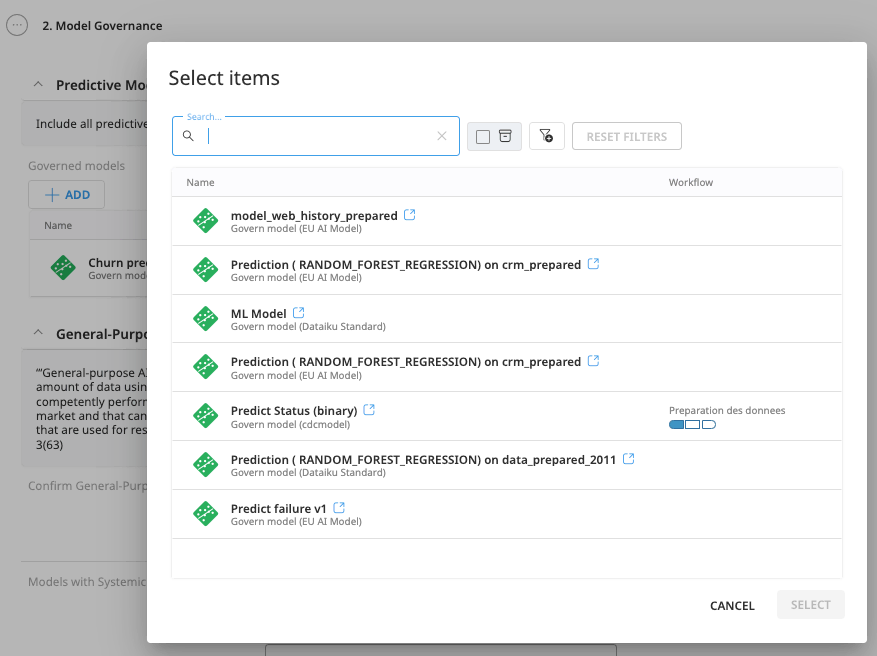

Governance activities at the model version level begin at the second workflow step of the Project. In Govern, the model artifact serves as a coordinating layer for your model versions.

Click + Add to add relevant models and model versions to your project.

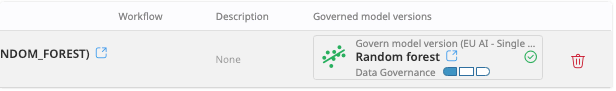

We advise governing your active model versions, which are marked with a green circled checkmark. Click into each model version that requires governance.

The Model Version workflow has two parts, which are associated with the high risk AI system requirements.

The first part is dedicated to data and data governance requirements.

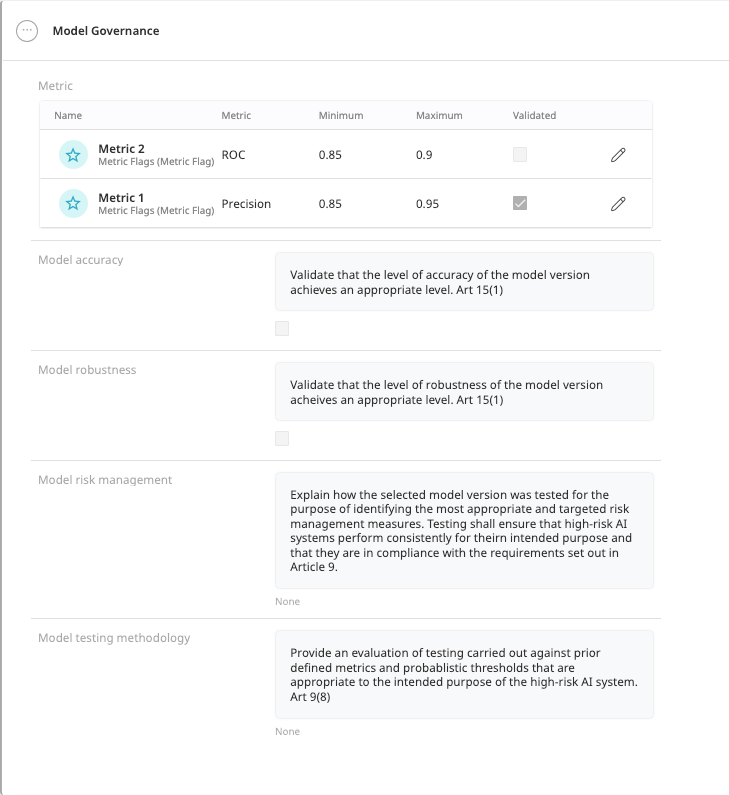

The second part is dedicated to model governance. At this point, the user can validate that:

The model version performs within the parameters set in the metrics table.

Other high risk requirements, relating to model robustness, risk management, and testing methodology, are met.
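Checking a model version against the project’s “Metrics and Thresholds” table amounts to comparing each reported metric with its bounds. In the following minimal sketch, the `Threshold` shape (an optional minimum and maximum per metric) and the metric names are hypothetical. A metric that the model version doesn’t report is treated as a breach rather than silently passing.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass(frozen=True)
class Threshold:
    # Hypothetical shape of one row in the project's "Metrics and Thresholds" table.
    metric: str
    minimum: Optional[float] = None
    maximum: Optional[float] = None


def threshold_breaches(metrics: Dict[str, float], thresholds: List[Threshold]) -> List[str]:
    """List every threshold the model version misses; empty means within parameters."""
    breaches = []
    for t in thresholds:
        value = metrics.get(t.metric)
        if value is None:
            breaches.append(f"{t.metric}: not reported")
        elif t.minimum is not None and value < t.minimum:
            breaches.append(f"{t.metric}: {value} < minimum {t.minimum}")
        elif t.maximum is not None and value > t.maximum:
            breaches.append(f"{t.metric}: {value} > maximum {t.maximum}")
    return breaches
```

An empty result supports the first validation point above. Any breach is evidence to address before the model version sign-off.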

The final workflow step is a sign-off confirming that the evidence gathered is sufficient and that the model version performs as stipulated.

Return to the Project template. At this stage, you can record whether a “general-purpose AI model” is being used. Select the relevant model and associate a model card (see Solution | LLM Provider Due Diligence for how to build out model cards).

Move to the next step, “Bundled AI System,” click Add, and select the relevant bundle.

Bundled AI system workflow

In the Solution, the bundle is treated as analogous to an AI system as defined in the Act. In other words, the bundle is a collection of data, model versions, and instructions for their interaction. It’s the object that will be implemented in the real world as an operationalized use case. For this reason, most compliance activities sit at the bundle level, supported by the preceding activities in the pre-assessment, project, and model version artifacts.

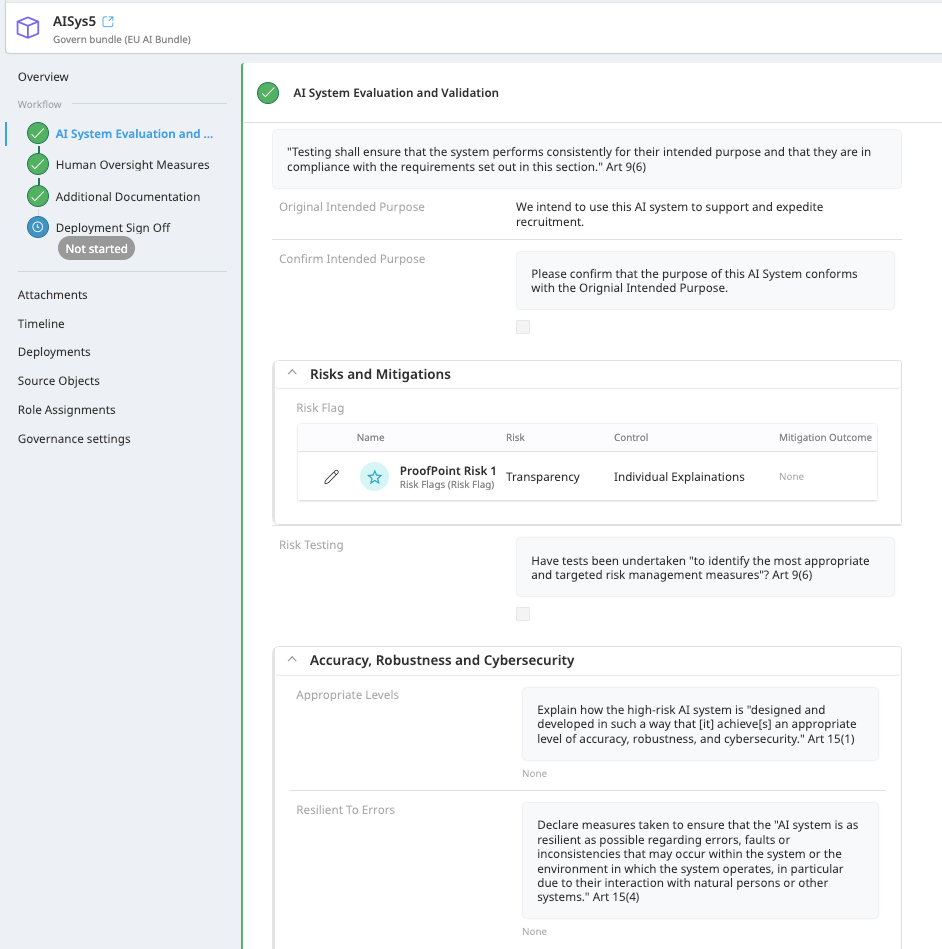

The first step of the bundle template, “AI System Evaluation and Validation,” lets users confirm that the AI system’s original intended purpose still holds. Users can also revisit the risks identified in the pre-assessment, adding new risks and controls and explaining how each risk has been addressed.

Requirements associated with “Accuracy, Robustness, and Cybersecurity” also sit at the first step of the Bundle.

Following this, requirements relating to “Human Oversight” are addressed.

The next step, “Additional Documentation,” covers conformity assessment procedures and critical documentation. Because we treat the bundle object as the AI system, conditional workflow steps lead the user to the appropriate conformity assessment procedure. The logic behind this process is taken from Article 43, which directs organizations to one of two procedures. These are a “Conformity Assessment based on Internal Control” or a “Conformity based on Assessment of the Quality Management System and an Assessment of the Technical Documentation.” We strongly advise that users validate the outcome of this logic against their own expectations and their legal team’s advice.
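The routing can be sketched as a small decision function based on a simplified reading of Article 43. This is our assumption, not the Solution’s implemented logic, and it omits several details of the Article. The path labels and parameter names are hypothetical. As the text above advises, validate any outcome with your legal team.

```python
from enum import Enum
from typing import List, Optional


class Procedure(Enum):
    INTERNAL_CONTROL = "Conformity assessment based on internal control (Annex VI)"
    QMS_AND_TECH_DOC = (
        "Conformity based on assessment of the quality management system "
        "and of the technical documentation (Annex VII)"
    )
    SECTORAL = "Procedure under the applicable Union harmonization legislation"


def conformity_procedures(
    high_risk_path: str,
    annex_iii_point: Optional[int] = None,
    standards_fully_applied: bool = False,
) -> List[Procedure]:
    """Return the procedures open to a provider under a simplified reading of Art 43.

    high_risk_path: "harmonized_legislation" or "annex_iii" (hypothetical labels).
    annex_iii_point: Annex III area, 1 (biometrics) to 8; used only for "annex_iii".
    standards_fully_applied: harmonized standards or common specifications applied in full.
    """
    if high_risk_path == "harmonized_legislation":
        # Art 43(3): follow the procedure required under that legislation.
        return [Procedure.SECTORAL]
    if high_risk_path != "annex_iii":
        raise ValueError(f"unknown high risk path: {high_risk_path!r}")
    if annex_iii_point not in range(1, 9):
        raise ValueError("annex_iii_point must be between 1 and 8")
    if annex_iii_point == 1:
        # Art 43(1): internal control is an option only if standards were fully applied.
        if standards_fully_applied:
            return [Procedure.INTERNAL_CONTROL, Procedure.QMS_AND_TECH_DOC]
        return [Procedure.QMS_AND_TECH_DOC]
    # Art 43(2): points 2 to 8 follow internal control.
    return [Procedure.INTERNAL_CONTROL]
```

The function returns a list because, in the biometrics case, the provider may choose between two procedures.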

This step also incorporates documentation requirements for deploying high risk AI systems, including the Declaration of Conformity, CE marking, registration, a Fundamental Rights Impact Assessment, and Technical Documentation.

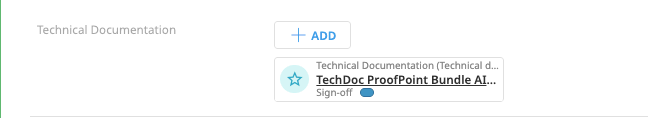

Technical Documentation is a custom Govern artifact and should be created for the bundle. Click + Add to create a new technical documentation artifact or select an existing one. All questions associated with the artifact are drawn from Annex IV and include specifications of the post-market monitoring plan. The technical documentation artifact requires approval through sign-off. This is designed to ensure that the documentation is representative of the AI system and validated before being used toward conformity assessment.
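The readiness rule described here has two parts: every question must be answered, and the artifact must be signed off. The following minimal sketch expresses that rule. The question IDs, the sign-off status strings, and the `TechnicalDocumentation` model are hypothetical, and Govern tracks the real sign-off itself.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class TechnicalDocumentation:
    # Hypothetical model: answers keyed by question ID, plus the sign-off status.
    answers: Dict[str, str] = field(default_factory=dict)
    sign_off: str = "not started"  # e.g. "not started", "pending", "approved"


def usable_for_conformity_assessment(
    doc: TechnicalDocumentation, required_questions: List[str]
) -> Tuple[bool, List[str]]:
    """Return (ready, missing): ready only if every question is answered and signed off."""
    missing = [q for q in required_questions if not doc.answers.get(q, "").strip()]
    return (not missing and doc.sign_off == "approved"), missing
```

The function returns the missing question IDs alongside the verdict, so the gaps to fill are explicit even when the document is not yet ready.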

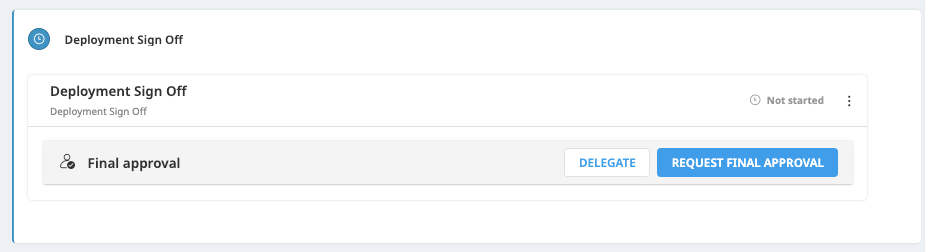

The final step of the bundle workflow is the review and sign-off. This sign-off gates deployment, so treat it as the final guardrail for confirming that your organization is satisfied with the AI system’s compliance before it’s placed on the market.

As with the pre-assessment, delegate and request final approval so that the relevant approver is notified.

Once approval is granted, the bundle can be deployed.
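Govern applies the deployment gate itself. Teams that automate deployments outside the UI may still want an explicit check. The following minimal sketch of such a guard assumes that bundle sign-off statuses are available as a mapping from bundle ID to status string. How you obtain that mapping, the exception class, and the function name are all hypothetical.

```python
from typing import Dict


class DeploymentBlocked(Exception):
    """Raised when a bundle has not cleared its final review and sign-off."""


def assert_bundle_deployable(bundle_id: str, sign_offs: Dict[str, str]) -> None:
    """Raise DeploymentBlocked unless the bundle's sign-off status is 'approved'."""
    status = sign_offs.get(bundle_id, "missing")
    if status != "approved":
        raise DeploymentBlocked(f"bundle {bundle_id} sign-off is {status!r}; deployment blocked")
```

Failing closed (treating an unknown bundle as blocked) matches the intent of the sign-off as a final guardrail.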

Return to the Project artifact.

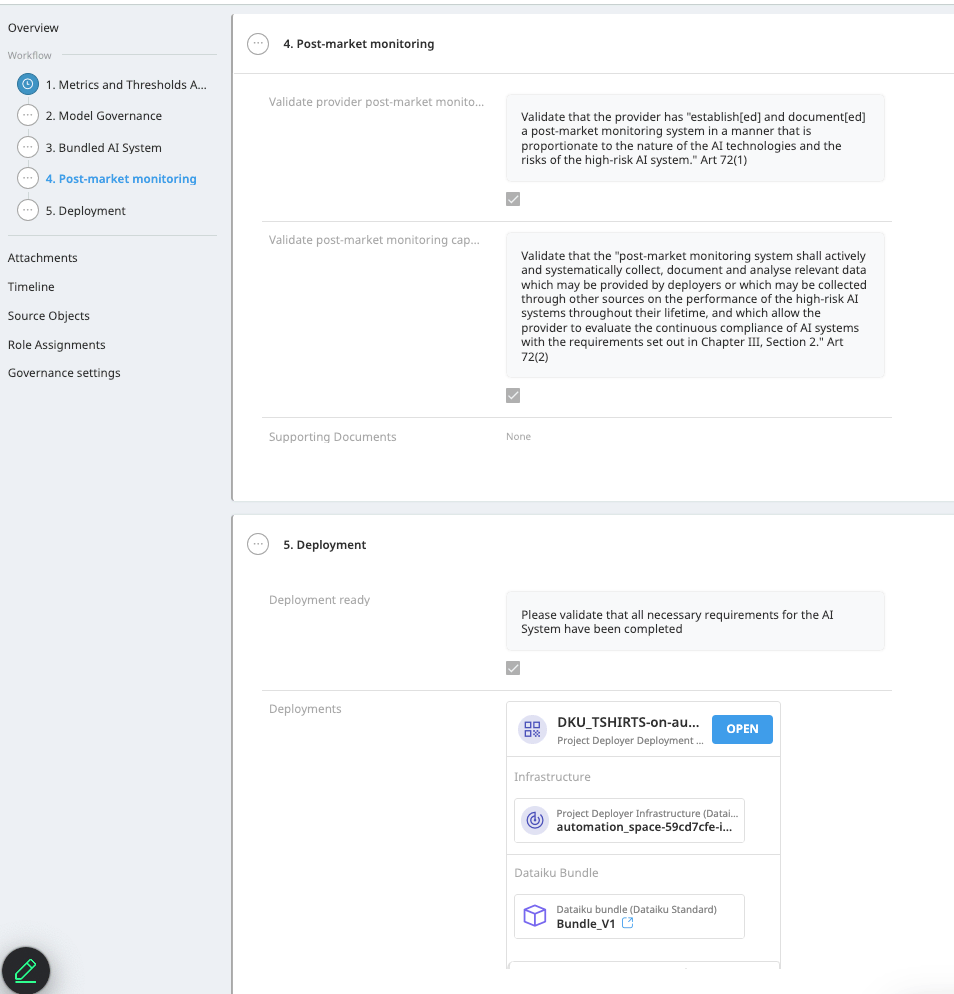

In Step 4 of the Project artifact, “Post-Market Monitoring,” you can validate your post-market monitoring plan and provide any relevant documentation.

The final step requires you to confirm that the AI system has been deployed. Relevant deployments can be viewed at this stage.

Outside of Govern

Post-deployment monitoring of the AI system can be carried out with the Deployer node’s Unified Monitoring feature.

Any changes to the AI system that require new model or bundle versions will create new versions of these artifacts on the Govern node.

When this occurs, repeat the process described above. Your organization should also follow any legal obligations that apply to updating AI systems.
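To see which new versions still need a pass through this process, compare the versions on the Design node with their Govern sign-off status. The following minimal sketch assumes you already have the version IDs in Design node order and a mapping from version ID to sign-off status. How you retrieve both is not shown, and the helper name is hypothetical.

```python
from typing import Dict, List


def versions_needing_governance(
    design_versions: List[str], govern_sign_offs: Dict[str, str]
) -> List[str]:
    """Return version IDs, in Design node order, without an approved Govern sign-off."""
    return [v for v in design_versions if govern_sign_offs.get(v) != "approved"]
```

Versions with no recorded status are included, so newly created versions are never skipped.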