Concept | Data pipeline optimization#

Programmers will be familiar with the process of code refactoring. The goals of refactoring include improving readability, reducing complexity, and increasing efficiency — all while maintaining the same functionality of the original code.

You can apply similar logic to refactoring a Dataiku project. The period before moving a project to production is an excellent opportunity to think about optimization techniques.

This article highlights a number of strategies to optimize the Flow of a project, in particular with regard to computational efficiency.

Basic cleanup#

You can begin to optimize a data pipeline by doing a basic cleanup of the project. This includes deleting any unnecessary objects created during the project’s development, such as discarded Flow branches, draft notebooks, or unused scenarios.

If you want to retain artifacts not required for production, Dataiku allows you to safely copy Flow items into other projects. You can then delete them from the original.

Readability#

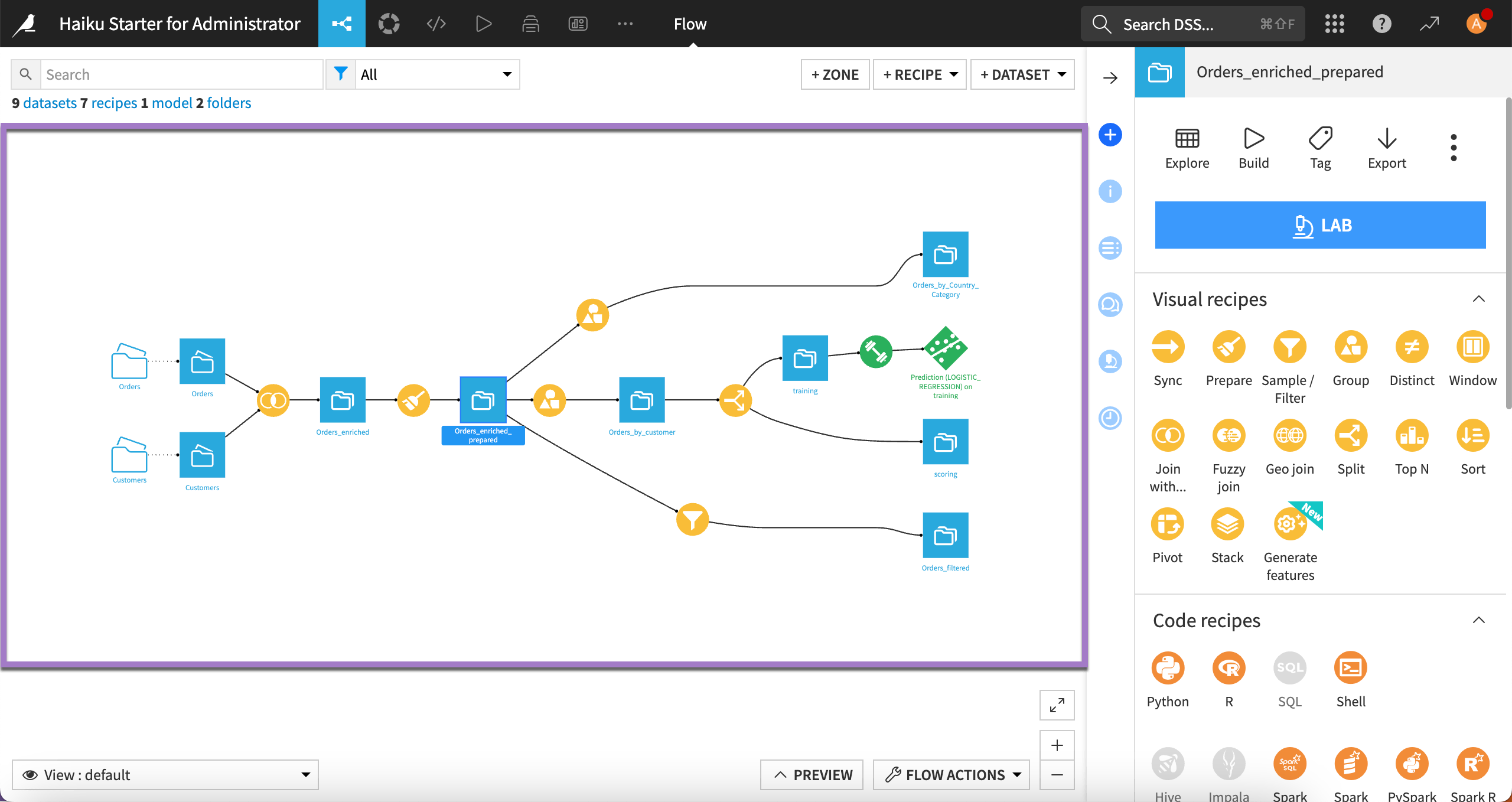

You might also review the project’s readability, particularly as new contributors may join the project as it moves to production. For large Flows, add Flow zones to help communicate the main phases of a project from a high level.

Flow zones can improve the high-level readability of a project before moving it to production.

Variables#

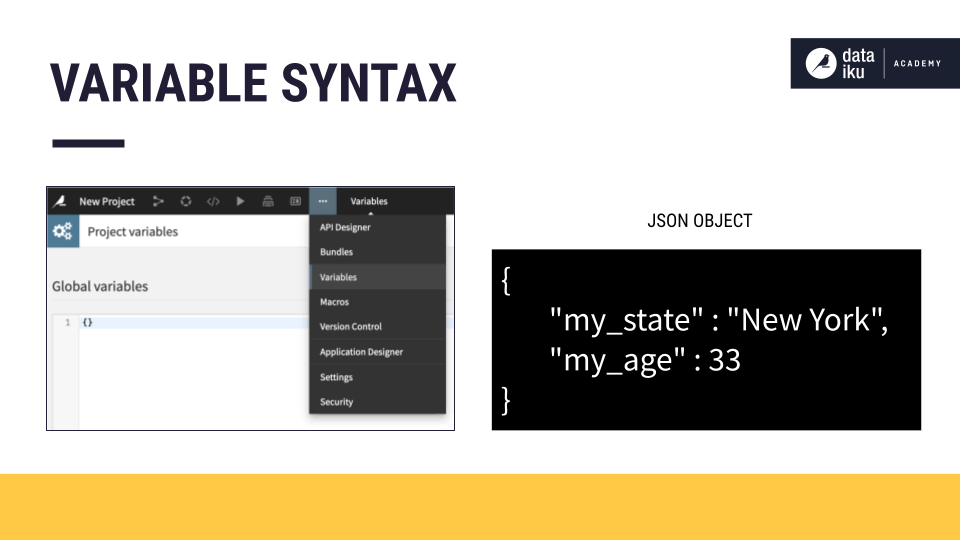

Consider the project’s maintainability. If you’ve hard-coded values in recipes, try replacing them with variables.

You can define project variables as JSON objects.
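As a minimal sketch of the idea, the snippet below replaces hard-coded values in a filter expression with placeholders that are filled in from a JSON object of variables. The `${name}` placeholder style mirrors how variables are commonly referenced in recipes, but the `expand` helper, the variable names, and the filter expression are all hypothetical. This is plain Python standing in for the substitution that Dataiku performs itself.

```python
import json
import re

# Hypothetical project variables, written as a JSON object.
PROJECT_VARIABLES = json.loads("""
{
  "country": "France",
  "min_order_value": 100
}
""")


def expand(template, variables):
    """Replace each ${name} placeholder with the matching variable value."""
    def lookup(match):
        name = match.group(1)
        if name not in variables:
            raise KeyError(f"undefined variable: {name}")
        return str(variables[name])

    return re.sub(r"\$\{(\w+)\}", lookup, template)


# The same filter, first with hard-coded values and then with variables.
hard_coded = "country = 'France' AND order_value >= 100"
parameterized = "country = '${country}' AND order_value >= ${min_order_value}"

expanded = expand(parameterized, PROJECT_VARIABLES)
```

With the values defined once in the JSON object, changing the country or the threshold means editing one place rather than every recipe that uses them.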

Computational efficiency#

A key optimization consideration is the project’s computational efficiency. Is the Flow running in the most optimized way possible?

Recall that Dataiku is an orchestrator of workflows. With this in mind, verify that the project satisfies two general requirements:

It leverages any external computing power at its disposal.

It minimizes resource consumption on the Dataiku host.

Recipe engine checks#

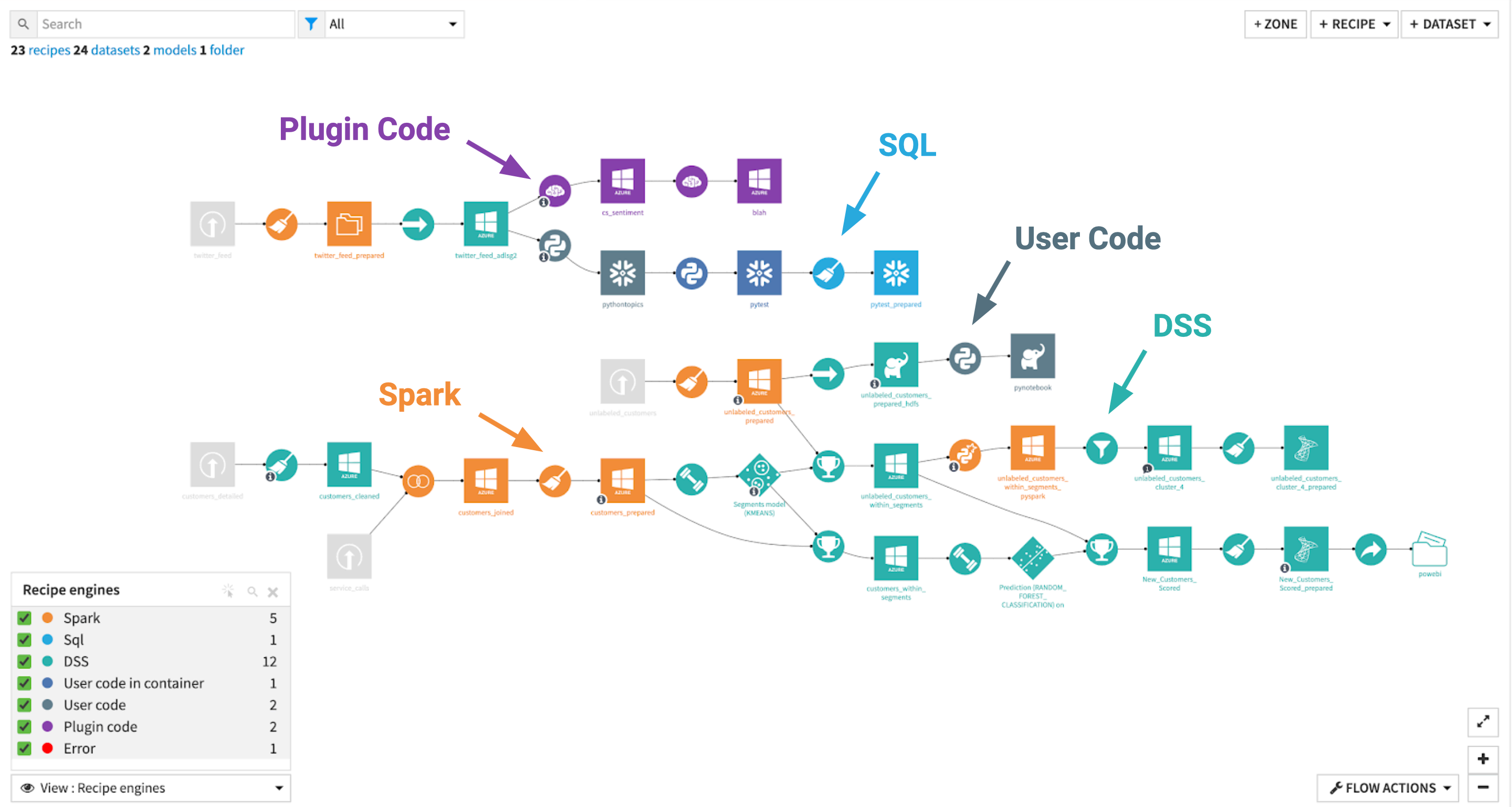

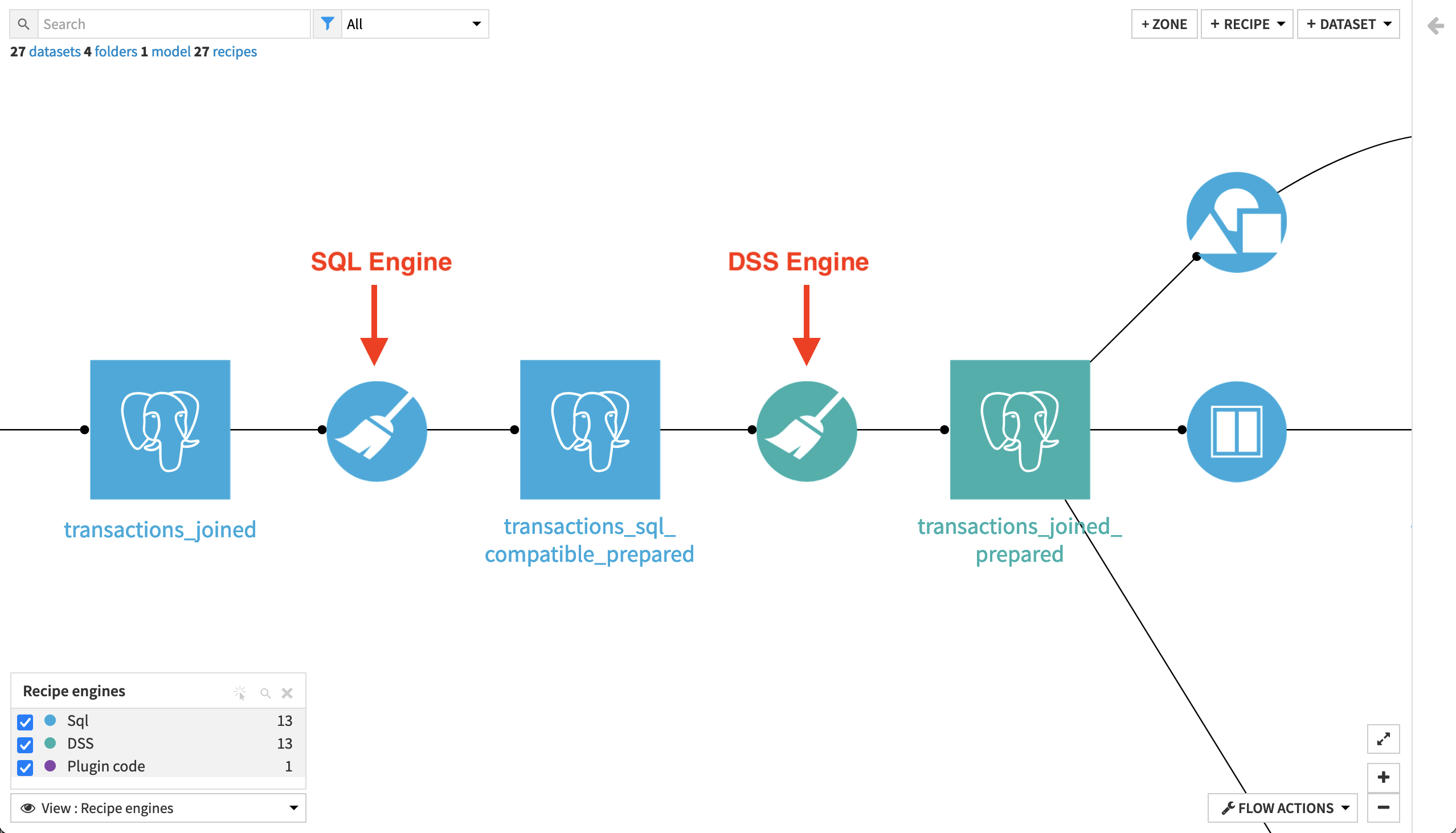

To optimize your project’s Flow, you might start with the recipe engine Flow view. Use it to evaluate the current engine selections and, in particular, to identify where the DSS engine is in use.

Recall that Dataiku selects the engine based on the storage location of the input and output datasets, as well as on the operation being performed. However, you should confirm that each engine is truly the “best” for your particular use case.

The recipe engine Flow view provides a visual way to see the associated engine of each recipe in the Flow.

Keep in mind that certain computation engines can be more appropriate than others depending on several factors. These include the scale of data, the available databases or architecture at your disposal, and the operations being performed.

Start with these guiding principles when reviewing recipe engines:

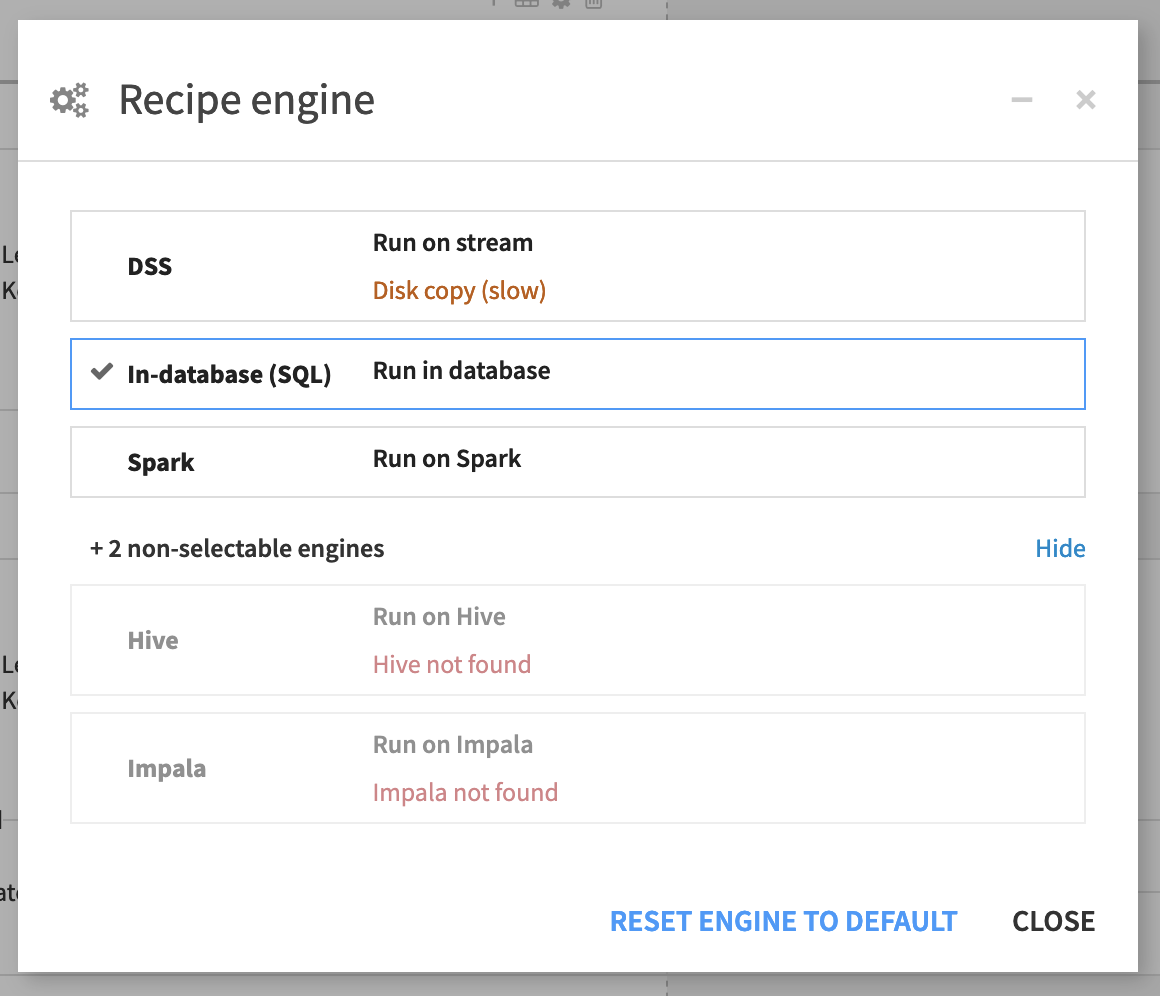

If you have datasets stored in SQL databases, make sure that the corresponding recipes run in-database. Where they can’t, confirm why.

If you have a large amount of data stored as files in cloud object storage, you may be able to use Spark, which allows you to scale the computation by distributing it across multiple machines in a cluster. In less frequent scenarios, you can apply the same reasoning with on-premises Hadoop clusters where Spark and Hive can process data stored in HDFS at scale.

If you need to offload visual machine learning model training or code recipes, make sure that containerized execution is enabled and configured accordingly.

Beneath the Run button within the Settings tab of every visual recipe is the recipe engine setting. Available engines depend on the instance’s architecture.
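The guiding principles above can be turned into a simple review checklist. The sketch below assumes you have already noted, for example from the recipe engine Flow view, the engine and input storage of each recipe. The inventory, the storage labels, and the `recipes_to_review` helper are hypothetical, and the logic is only a reading aid, not how Dataiku itself selects engines.

```python
# Hypothetical inventory: (recipe name, current engine, storage of its inputs).
RECIPES = [
    ("prepare_orders", "DSS", "SQL"),
    ("join_customers", "SQL", "SQL"),
    ("aggregate_logs", "DSS", "CLOUD_FILES"),
    ("build_features", "SPARK", "CLOUD_FILES"),
]

# What to double-check when a recipe runs on the DSS engine.
SUGGESTIONS = {
    "SQL": "check whether it can run in-database",
    "CLOUD_FILES": "check whether Spark is worthwhile at this data size",
}


def recipes_to_review(recipes):
    """Return DSS-engine recipes whose input storage may allow offloading."""
    return [
        (name, SUGGESTIONS[storage])
        for name, engine, storage in recipes
        if engine == "DSS" and storage in SUGGESTIONS
    ]


to_review = recipes_to_review(RECIPES)
```

A recipe appearing in `to_review` isn't necessarily misconfigured. It only marks a place where the DSS engine deserves a second look.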

Is Spark required?#

The second guiding principle above suggests using Spark for a “large amount” of data stored as files in cloud object storage. The definition of “large” will obviously vary, but if your dataset fits in the memory of a single machine, other recipe engines might be faster. For large datasets, Spark can indeed be fast because it can run distributed computation on clusters.

However, this advantage doesn’t come without consequences. Spark’s overhead is non-negligible. It takes time for Dataiku to tell Spark what to run and for Spark to start the executors that do the work. For smaller datasets with simpler computation (that is, not a join or window operation), the DSS engine may actually be faster than Spark. Sticking with Dataiku’s selection, at least without substantial evidence to the contrary, is often a good approach.

Other Flow views, such as the file size Flow view, may yield further information to help you assess whether Spark is the right-sized solution for a particular job. Ultimately though, testing is the only way to definitively answer the question of whether Spark is the best choice for a particular situation: first for stability with sample-sized data, and then with more realistic data.
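To see why overhead matters, it can help to model runtime as a fixed startup cost plus a per-row processing cost. The sketch below does that for a local engine and a distributed one. Every number in it is made up for illustration, and real runtimes depend on the operation, the cluster, and the data, so treat it as a way to reason about the tradeoff and not as a benchmark.

```python
def estimated_runtime(rows, startup_seconds, rows_per_second):
    """Fixed startup cost plus time proportional to the number of rows."""
    return startup_seconds + rows / rows_per_second


def break_even_rows(local, distributed):
    """Row count above which the distributed engine is estimated to win."""
    local_startup, local_rate = local
    dist_startup, dist_rate = distributed
    extra_startup = dist_startup - local_startup
    time_saved_per_row = 1 / local_rate - 1 / dist_rate
    return extra_startup / time_saved_per_row


# Hypothetical (startup seconds, rows per second) for each engine.
LOCAL = (1.0, 100_000)
DISTRIBUTED = (61.0, 1_000_000)

threshold = break_even_rows(LOCAL, DISTRIBUTED)
small_local = estimated_runtime(1_000_000, *LOCAL)
small_distributed = estimated_runtime(1_000_000, *DISTRIBUTED)
large_local = estimated_runtime(100_000_000, *LOCAL)
large_distributed = estimated_runtime(100_000_000, *DISTRIBUTED)
```

With these invented figures, the break-even point lands at roughly 6.7 million rows: below it the local engine finishes first despite its lower throughput, and above it the distributed engine's startup cost is paid back.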

See also

Review the reference documentation for more information on Dataiku and Spark.

Spark and SQL pipelines#

One corollary to the principles above is that you should try to avoid the reading and writing of intermediate datasets.

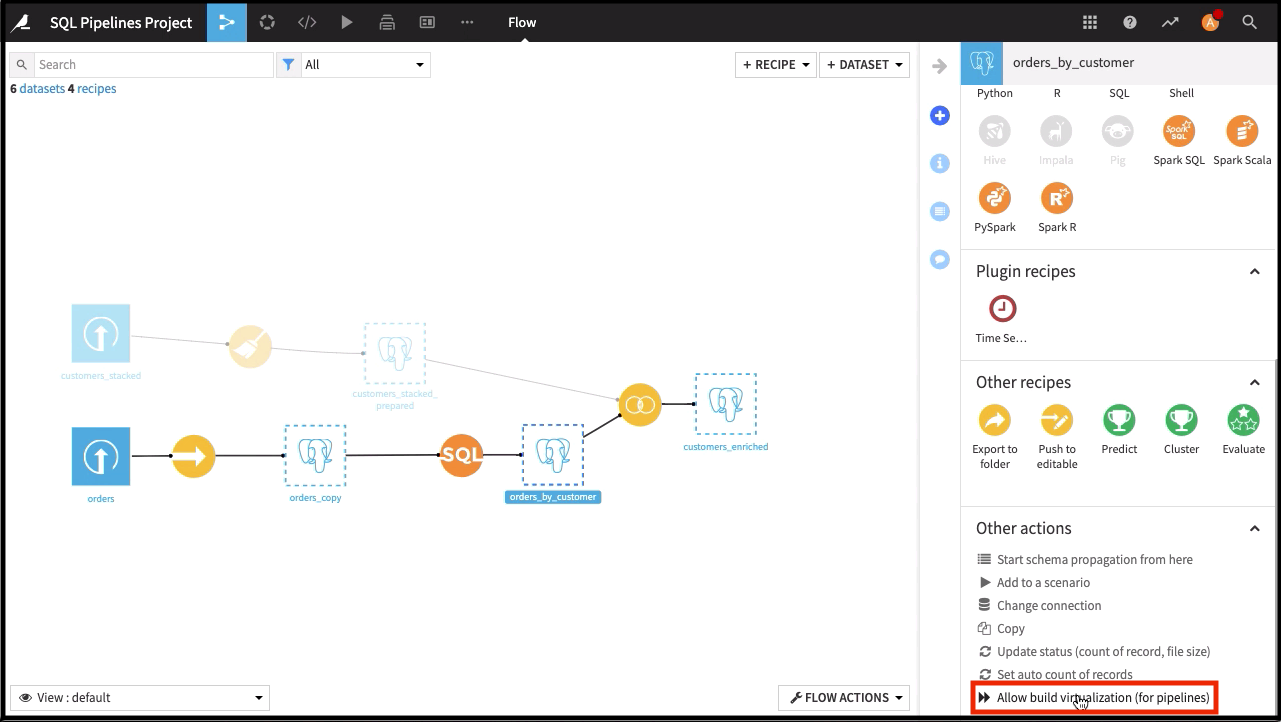

In certain situations, you can minimize the repeated cycle of reading and writing intermediate datasets within a Flow by setting up either Spark or SQL pipelines.

For example, when several consecutive recipes in a Flow use the Spark engine (or alternatively the SQL engine), Dataiku can automatically merge these recipes and run them as a single Spark (or SQL) job. These kinds of pipelines can substantially improve performance by avoiding needless reads and writes of intermediate datasets.
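The effect of merging can be illustrated with a toy model. The sketch below groups consecutive recipes that share a pipeline-capable engine into a single job and counts how many intermediate datasets no longer need to be written and read back. The recipe names and the `plan_pipelines` helper are hypothetical, and the model treats the Flow as a simple linear chain, which is a simplification of what Dataiku actually evaluates.

```python
from itertools import groupby

PIPELINE_ENGINES = {"SPARK", "SQL"}


def plan_pipelines(recipes):
    """Merge consecutive recipes that share a pipeline-capable engine."""
    jobs = []
    for engine, group in groupby(recipes, key=lambda recipe: recipe[1]):
        names = [name for name, _ in group]
        if engine in PIPELINE_ENGINES:
            jobs.append((engine, names))  # one merged job
        else:
            jobs.extend((engine, [name]) for name in names)  # one job each
    return jobs


# Hypothetical linear Flow: (recipe name, engine).
FLOW = [
    ("prepare_orders", "SQL"),
    ("join_customers", "SQL"),
    ("group_by_region", "SQL"),
    ("score_with_python", "DSS"),
]

jobs = plan_pipelines(FLOW)
intermediate_writes_avoided = len(FLOW) - len(jobs)
```

Here the three SQL recipes collapse into one job, so the two datasets between them never need to be materialized.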

See also

See How-to | Enable SQL pipelines in the Flow for an introduction.

Back-to-back Prepare recipes#

Back-to-back Prepare recipes in the Flow require some justification. One longer Prepare recipe, with one input and one output, may be more efficient than two shorter Prepare recipes because it reduces the number of intermediate reads and writes.

An exception to this rule could be back-to-back Prepare recipes that enable one recipe to run in-database. If a Prepare recipe contains only SQL-compatible processors, it can take advantage of in-database computation.

The first Prepare recipe contains only SQL-compatible processors, and so can use the SQL engine. On the other hand, the second Prepare recipe has non-SQL-compatible processors, and so defaults to the DSS engine.

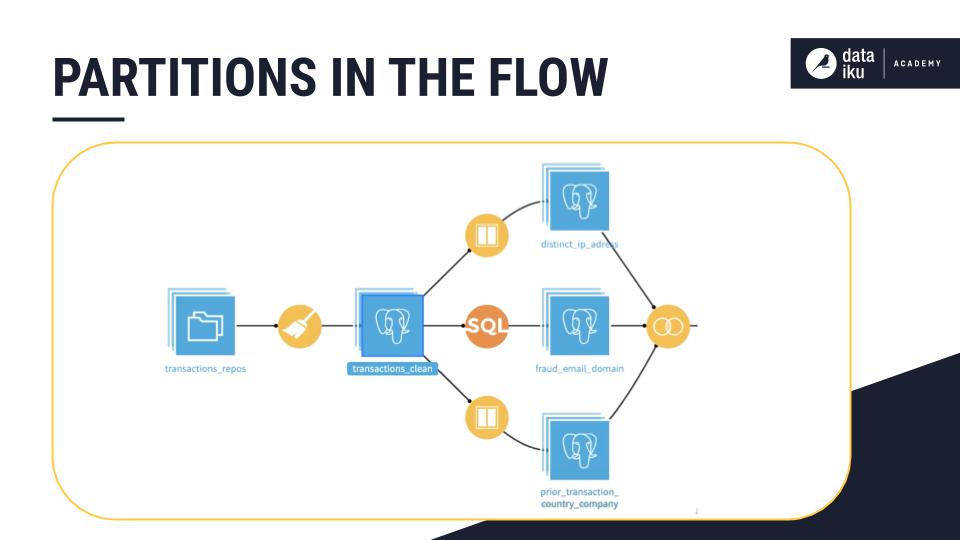
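One way to reason about where to split is sketched below. Because the steps of a Prepare recipe apply in order, only the leading run of SQL-compatible steps can move into a separate in-database recipe without reordering anything. The step names are placeholders and the compatibility flags are supplied by hand, so check each processor's actual SQL support in your own instance. The `split_for_sql` helper is hypothetical.

```python
def split_for_sql(steps):
    """Split steps into a leading SQL-compatible run and the remainder."""
    for index, (_, sql_compatible) in enumerate(steps):
        if not sql_compatible:
            return steps[:index], steps[index:]
    return steps, []


# Placeholder steps: (step name, whether you determined it is SQL-compatible).
STEPS = [
    ("step_1", True),
    ("step_2", True),
    ("step_3", False),
    ("step_4", True),
]

in_database, remainder = split_for_sql(STEPS)
```

Note that `step_4` stays in the second recipe even though it is flagged as compatible, since it comes after a step that isn't. If the leading run is empty or trivial, a single Prepare recipe is likely the better choice.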

Partitioning#

Finally, for specific use cases, partitioning can be a valuable optimization tool. For example, consider the common scenario where you need reports and dashboards updated on a periodic basis, such as daily. Without partitioning, the datasets in the Flow would continue to grow larger, consuming greater computational resources.

Rather than redundantly recomputing this historical data, you could partition the Flow so that only the latest segment of interest is rebuilt, leaving the historical partitions untouched. If you do have a clear key on which to partition your Flow, partitioning is definitely an option worth considering before moving to production.

A stacked dataset icon in the Flow signals a partitioned dataset.
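The saving from partitioning can be shown with a small stand-in for a daily build. In the sketch below, a dictionary keyed by day plays the role of a partitioned dataset, and `rebuild` recomputes either every partition or only the ones requested. The data, the keys, and the helper are hypothetical. In Dataiku, the partitions to build are chosen through the recipe and scenario settings, not through code like this.

```python
def rebuild(partitions, results, keys=None):
    """Recompute daily totals for all partitions, or only the given keys."""
    keys = list(partitions) if keys is None else list(keys)
    for key in keys:
        results[key] = sum(partitions[key])
    return keys  # the partitions that were actually recomputed


# Hypothetical input dataset partitioned by day.
PARTITIONS = {
    "2024-01-01": [10, 20],
    "2024-01-02": [5],
    "2024-01-03": [7, 8],
}

results = {}
initial_build = rebuild(PARTITIONS, results)  # first build touches everything

# A new day of data arrives: rebuild only that partition.
PARTITIONS["2024-01-04"] = [3, 4]
daily_build = rebuild(PARTITIONS, results, keys=["2024-01-04"])
```

The daily build touches one partition regardless of how much history has accumulated, whereas a non-partitioned rebuild would grow with every added day.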

See also

To learn more, see the Partitioning course in the Advanced Designer learning path.

Testing runtime#

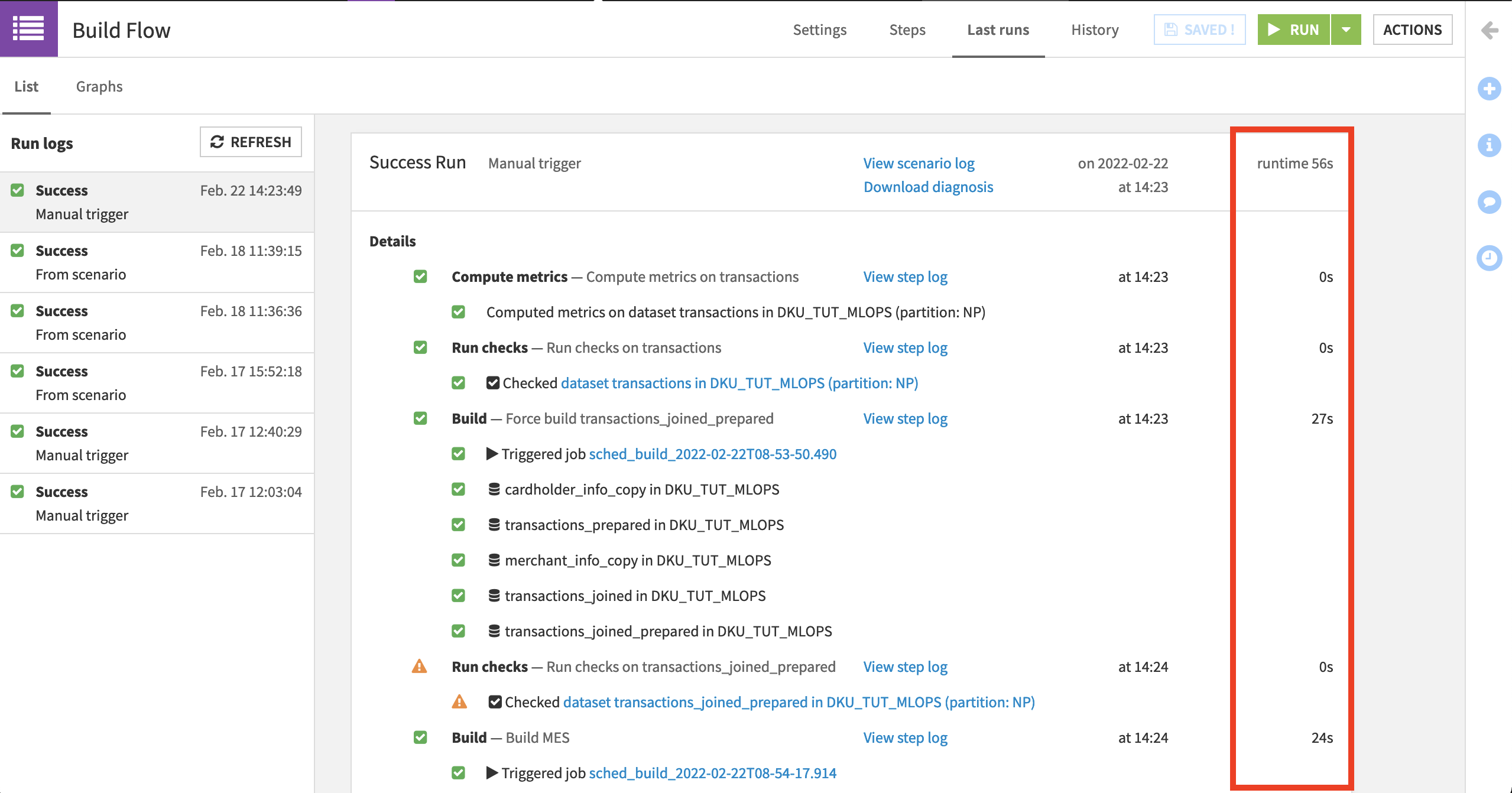

Offloading computation using the strategies described in this article has the potential to add complexity. With this in mind, you will have to weigh the pros and cons with respect to the infrastructure and the type and volume of data you will be using in production.

Testing the runtime of your Flow with scenarios can provide the basic information needed to think through these tradeoffs as you identify potential bottlenecks. You can find a quick visual comparison in the Last Runs tab of a scenario, which reports the runtime of each step. Many Flow views, such as last build duration, count of records, or file size, can also help identify bottlenecks.
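Once you have per-step runtimes, ranking them by their share of the total is a quick way to see where to focus. The sketch below assumes you have copied the durations by hand, for instance from the Last Runs tab. The step names and timings are invented, and `rank_bottlenecks` is a hypothetical helper.

```python
# Hypothetical step durations in seconds, copied from a scenario run.
STEP_DURATIONS = {
    "build_sql_zone": 42.0,
    "build_prepare_chain": 310.0,
    "train_model": 95.0,
    "refresh_dashboard": 8.0,
}


def rank_bottlenecks(durations):
    """Sort steps by duration and attach each step's share of the total."""
    total = sum(durations.values())
    ranked = sorted(durations.items(), key=lambda item: item[1], reverse=True)
    return [(name, seconds, seconds / total) for name, seconds in ranked]


ranking = rank_bottlenecks(STEP_DURATIONS)
for name, seconds, share in ranking:
    print(f"{name:<22} {seconds:>7.1f}s  {share:6.1%}")
```

A step that dominates the total is the natural first candidate for the engine, pipeline, or partitioning changes discussed earlier.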

Next steps#

Alongside optimization concerns, you should also devote sufficient attention to documenting a project before putting it into production. Learn more about how to do so in an article on workflow documentation.