Solution | Real Estate Pricing

Overview

Business case

Assets invested in real estate have tripled over the past ten years to exceed $3.3 trillion in assets under management (AUM). The pandemic triggered significant volatility in real estate pricing: on average, commercial real estate indexes saw their prices drop by 25% in a year.

In this non-regulated market, strong valuation strategies are critical to support opportunity identification, fuel impactful negotiation strategies, and optimize P&L management. Teams focused on developing effective valuation models need to manage complex data integrations and detailed modelling challenges, while ensuring that other teams and clients can consume the output results.

This work can often involve distinct skill sets and underlying technologies, making collaboration challenging and creating project inefficiencies. By removing the need for upfront investment in Webapp and API design, allowing data scientists, data analysts and asset managers to interact collaboratively, and providing a flexible and complete project framework with real-world data, this Solution accelerates time to insight and minimizes unnecessary development and effort.

The goal of this adapt-and-apply Solution is to show asset management organizations how they can use Dataiku to predict the price of residential real estate. Using publicly available data, this Solution enables them to identify key factors in real estate pricing at individual and portfolio levels.

Installation

From the Design homepage of a Dataiku instance connected to the internet, click + Dataiku Solutions.

Search for and select Real Estate Pricing.

If needed, change the folder into which the Solution will be installed, and click Install.

Follow the modal to either install the technical prerequisites below or request an admin to do it for you.

Note

Alternatively, download the Solution’s .zip project file, and import it to your Dataiku instance as a new project.

Technical requirements

To use this Solution, you must meet the following requirements:

Have access to a Dataiku 14.0+* instance.

A Python 3.9 code environment named solution_real-estate-pricing with the following required packages:

numpy==2.0.2

scikit-learn==1.6.1

geojson==2.5.0

Shapely==1.8.5.post1

geopy==2.2.0

Flask==2.1.3

werkzeug==2.0.3

pyproj==3.2.1

Data requirements

You should use the project as a template to build your own analysis of real estate prices. Still, you can change the input dataset to your own data, and run the Flow as-is. The data initially available in this project comes from public sources.

| Input data | Description |
|---|---|
| real_estate_sales | Provided by Demandes de valeurs foncières (Requests for real estate values), published and produced by the general directorate of public finances. The source originally contained data for the entire French metropolitan territory and its overseas departments and territories, except for Alsace, Moselle, and Mayotte. For this project, the data is sampled to transactions in the city of Paris. |
| census_data | Contains information about the French IRIS (Ilots Regroupés pour l’Information Statistique, that is, spatial areas grouped for statistical information) provided by the French National Institute of Statistics and Economic Studies (INSEE) and the French National Institute of Geography (IGN). The data is only available up to 2017, so for this project the census data for 2018, 2019, and 2020 are copies of the 2017 data. |
| properties_portfolio | Contains a CSV file with a portfolio of properties and their characteristics. It’s later used in the Flow as the dataset on which you apply a predictive model for scoring. |
| subway_stations and city_districts | Contain data retrieved using a web scraping tool applied to Wikipedia. |

Note

Due to package size constraints, all intermediate datasets are intentionally empty. All datasets needed to support the dashboard and webapp are built. To build the full Flow, use the Build Full Flow scenario after import.

Workflow overview

You can follow along with the Solution in the Dataiku gallery.

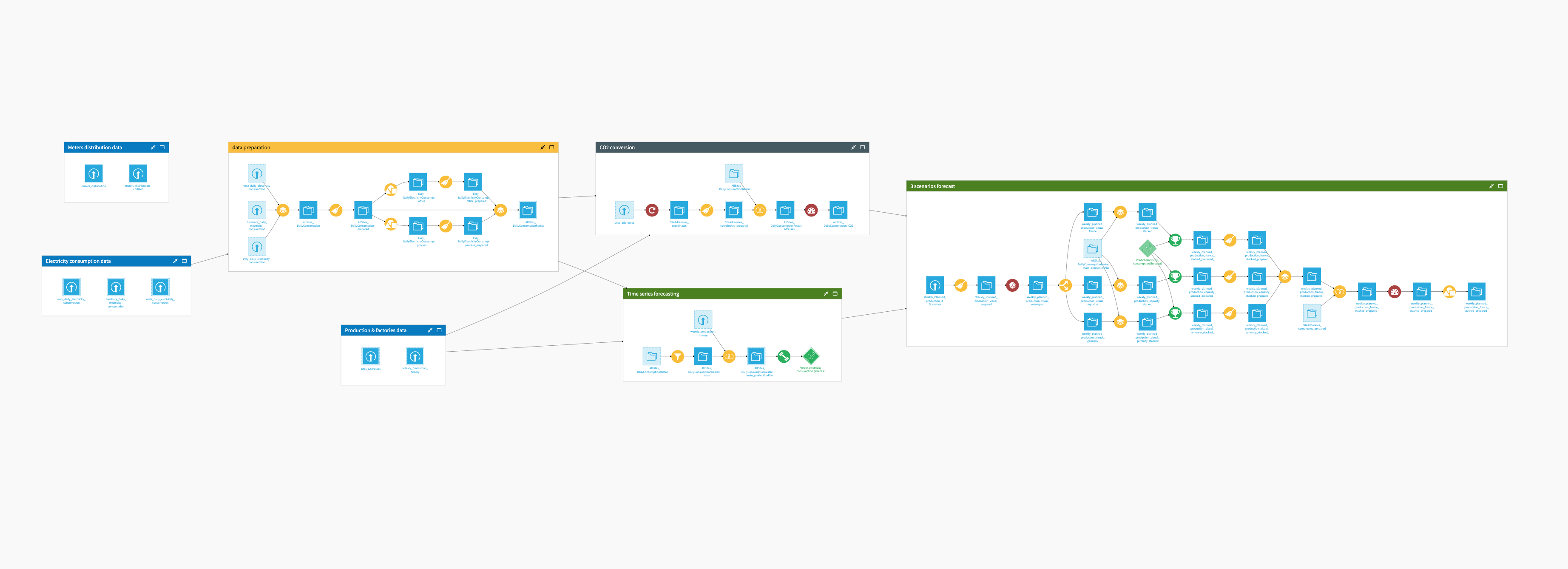

The project has the following high-level steps:

Process the property data, census data, and subway station data.

Join all the spatial data (census, stations, city center) to the property sales data.

Apply time feature engineering techniques.

Train and score a model for predicting the price of real estate.

Forecast price per square meter.

Explore detailed visual and technical insights into underlying models and integrated datasets.

Interactively generate prices and leverage API and bulk-export functionality.

Walkthrough

Note

In addition to reading this document, it’s recommended to read the wiki of the project before beginning to get a deeper technical understanding of how this Solution was created and more detailed explanations of Solution-specific vocabulary.

Predict real estate prices with public data

The first branch of the project focuses on preparing data to train a model for predicting the price of real estate. To begin, you must preprocess and feature engineer all input datasets detailed above. Preprocessing of the data involves:

Parsing transaction dates.

Cleansing the data and renaming columns for consistency across all input datasets.

Creating new features based on existing columns, such as GeoPoints, the price per square meter, and district polygons, and using the graph analytics plugin to turn the subway data into a network graph.

Enriching the data geographically to compute, for each property, its distance from the center of Paris.
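As an illustration of the distance enrichment step, the following is a minimal sketch using the haversine formula and only the Python standard library. The reference point `PARIS_CENTER` and the function names are assumptions made for this example; the Solution may use a different center point or a library such as geopy from its code environment.

```python
from math import asin, cos, radians, sin, sqrt

EARTH_RADIUS_KM = 6371.0

# Assumed reference point for "the center of Paris" (roughly Notre-Dame).
# The Solution itself may use a different point.
PARIS_CENTER = (48.8534, 2.3488)


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in kilometers between two (lat, lon) points."""
    phi1, phi2 = radians(lat1), radians(lat2)
    d_phi = radians(lat2 - lat1)
    d_lambda = radians(lon2 - lon1)
    a = sin(d_phi / 2) ** 2 + cos(phi1) * cos(phi2) * sin(d_lambda / 2) ** 2
    return 2 * EARTH_RADIUS_KM * asin(sqrt(a))


def distance_from_center_km(lat, lon, center=PARIS_CENTER):
    """Distance in kilometers from a property to the assumed city center."""
    return haversine_km(lat, lon, center[0], center[1])
```

Calling `distance_from_center_km(48.8584, 2.2945)` (approximate coordinates of the Eiffel Tower) returns a value of roughly four kilometers.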

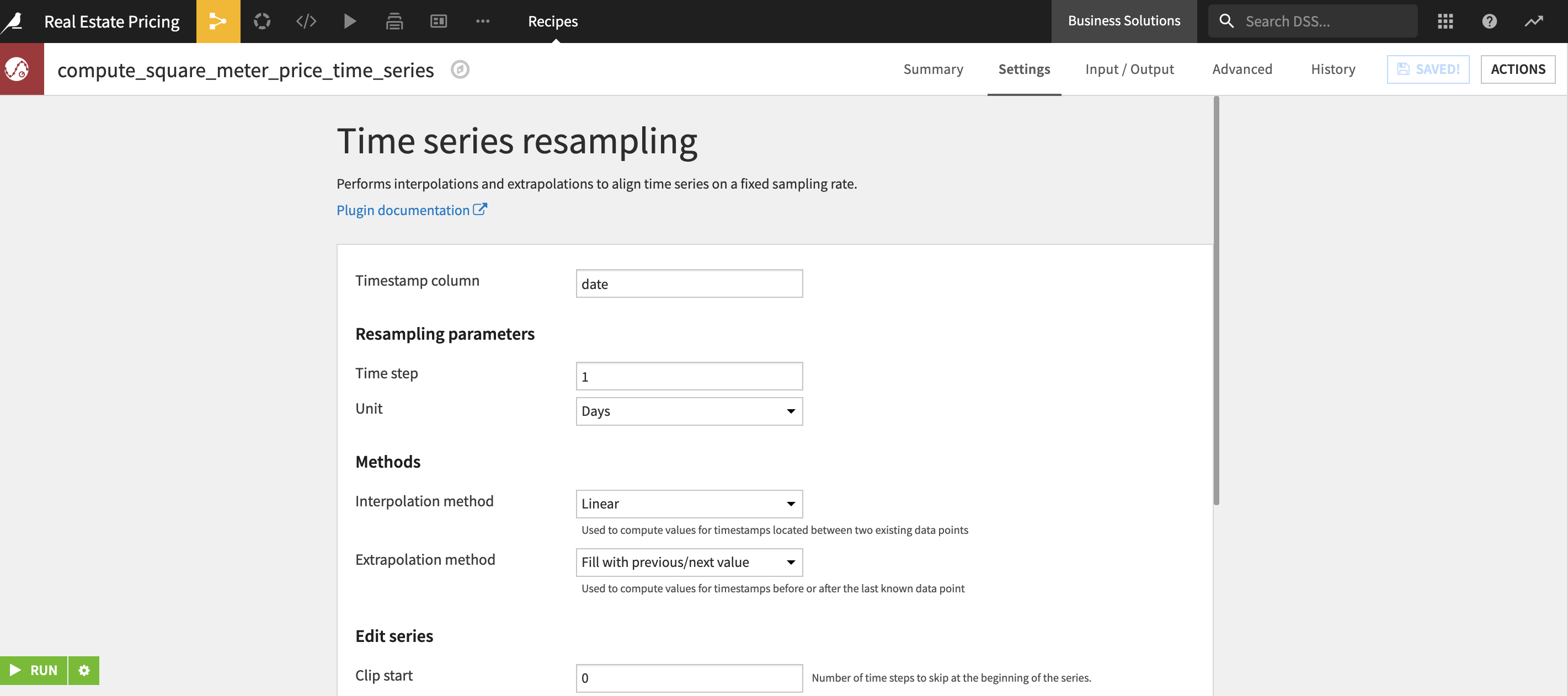

Once you have cleaned and unified all elements of the input datasets into a single dataset properties_with_geospatial_features_prepared, you can apply time-based feature engineering to the properties sales history. With the knowledge that three main factors influence a property price (characteristics, location, and real estate market) you want to compute the final factor before training a predictive model. In other words, you need to compute the influence of the real estate market at the time of a property’s purchase.

You do this by computing the average square meter price over windows of the past 30, 60, 90, and 180 days, across several different locations. When a value is missing, the closest value available in time replaces it. For example, if the 30-day square meter price is missing, the 60-day square meter price replaces it. After finding all values, you create price estimates for each time frame T and location L by computing:

T-day average square meter price in L × property_living_surface
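The windowing logic can be sketched in plain Python as follows. This is an illustration under stated assumptions, not the Solution's actual recipe: the function names, the `(sale_date, location, sqm_price)` tuple layout, and the location labels are hypothetical. Because the windows are nested, a window can only be empty when every shorter window is empty too, so filling a gap with the closest available value amounts to taking the next longer window.

```python
from datetime import timedelta
from statistics import mean

WINDOWS = (30, 60, 90, 180)  # window lengths in days, as described above


def window_averages(sales, location, as_of, windows=WINDOWS):
    """Average square meter price per window for one location.

    `sales` is an iterable of (sale_date, location, sqm_price) tuples
    (a hypothetical layout chosen for this sketch).
    """
    sales = list(sales)
    averages = {}
    for days in windows:
        start = as_of - timedelta(days=days)
        prices = [
            price
            for sale_date, loc, price in sales
            if loc == location and start <= sale_date < as_of
        ]
        averages[days] = mean(prices) if prices else None

    # Fill gaps from the next longer window, walking from longest to shortest.
    fallback = None
    for days in sorted(windows, reverse=True):
        if averages[days] is None:
            averages[days] = fallback
        else:
            fallback = averages[days]
    return averages


def price_estimates(averages, living_surface):
    """Estimate = T-day average square meter price x living surface."""
    return {
        days: (avg * living_surface if avg is not None else None)
        for days, avg in averages.items()
    }
```

If no sale exists in any window for a location, every value stays `None`, and the caller has to decide how to handle that case.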

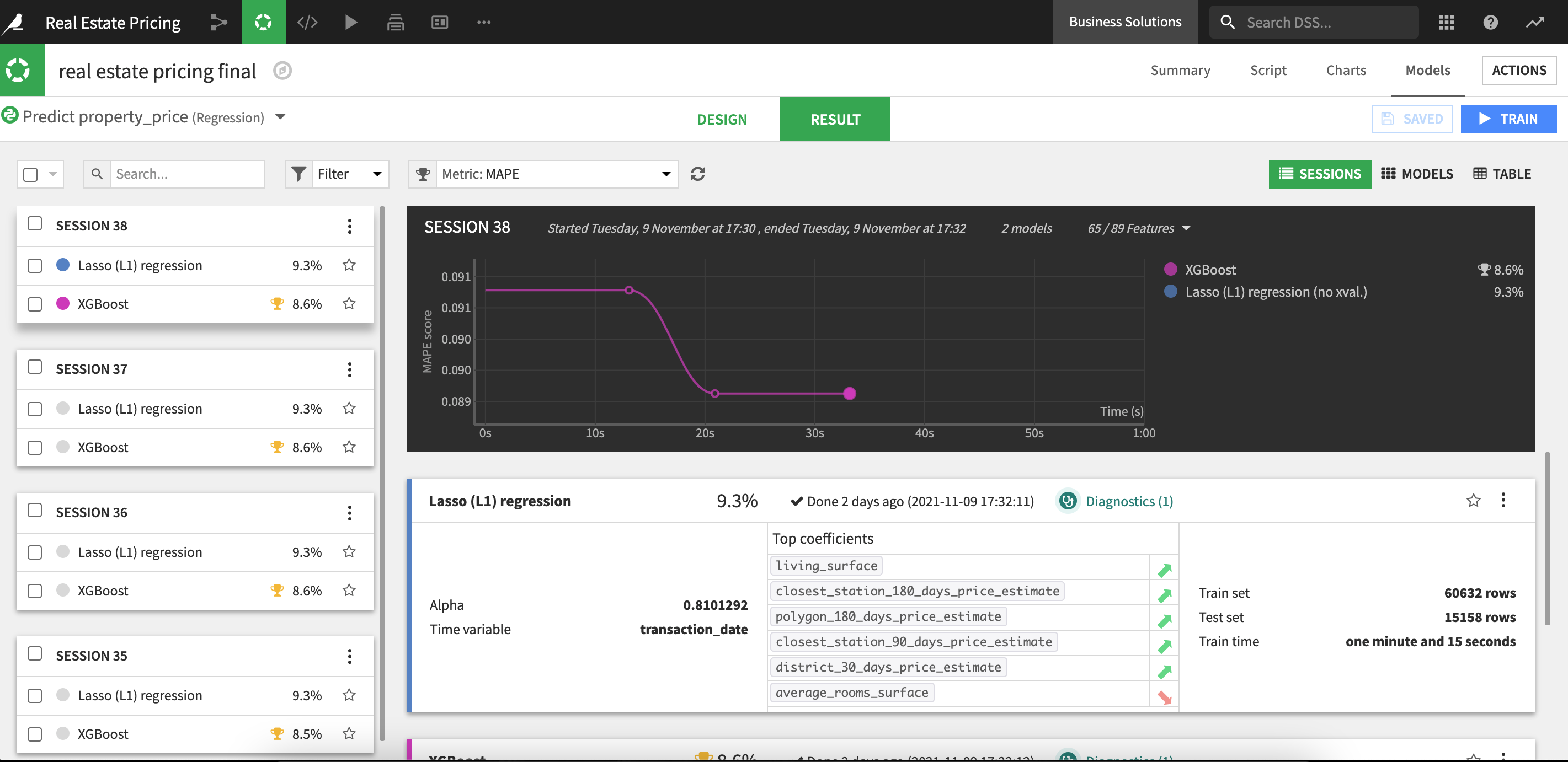

With the data now appropriately prepared and contextualized, you can train the machine learning model responsible for predicting residential real estate prices. The data coming from properties_windows_prepared is split so that 80% makes up the train set and the remaining 20% the test set.
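For readers who want to reproduce an 80/20 split outside of the visual model settings, here is a minimal sketch. It assumes a random split with a fixed seed, which the text above doesn't specify; the function name and the seed are illustrative only.

```python
import random


def train_test_split_rows(rows, train_ratio=0.8, seed=42):
    """Shuffle rows reproducibly and split them into train and test sets.

    Assumes a random split; the Solution's actual split policy may differ.
    """
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)
    cut = round(len(shuffled) * train_ratio)
    return shuffled[:cut], shuffled[cut:]
```

With 100 rows, this yields 80 training rows and 20 test rows, and every row lands in exactly one of the two sets.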

The model is trained to optimize the mean absolute percentage error (MAPE) before being deployed to the Flow for evaluation. The evaluation shows an observed drift of -1.3% between the first and last deployment.
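As a reminder of what the metric measures, MAPE is the mean of the absolute errors expressed as a fraction of the actual values. The sketch below is a plain Python illustration with a hypothetical function name; it isn't the implementation used by the model training step.

```python
def mape(actual, predicted):
    """Mean absolute percentage error, returned as a fraction (0.1 means 10%).

    Actual values must be non-zero, which holds for property prices.
    """
    if len(actual) != len(predicted):
        raise ValueError("actual and predicted must have the same length")
    if not actual:
        raise ValueError("at least one observation is required")
    errors = [abs(a - p) / abs(a) for a, p in zip(actual, predicted)]
    return sum(errors) / len(errors)
```

Because each error is relative to the actual price, a 20,000 miss on a 200,000 property counts the same as a 100,000 miss on a 1,000,000 property.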

This same branch also preprocesses a portfolio of properties and then scores all the properties using the trained machine learning model. This serves as an example of how, when adapting this project to your own needs, you can perform batch scoring on a large portfolio of properties.

The api_&_webapp Flow zone includes additional data cleaning and data isolation to make data available for dashboard visualizations, which will be discussed later in this article.

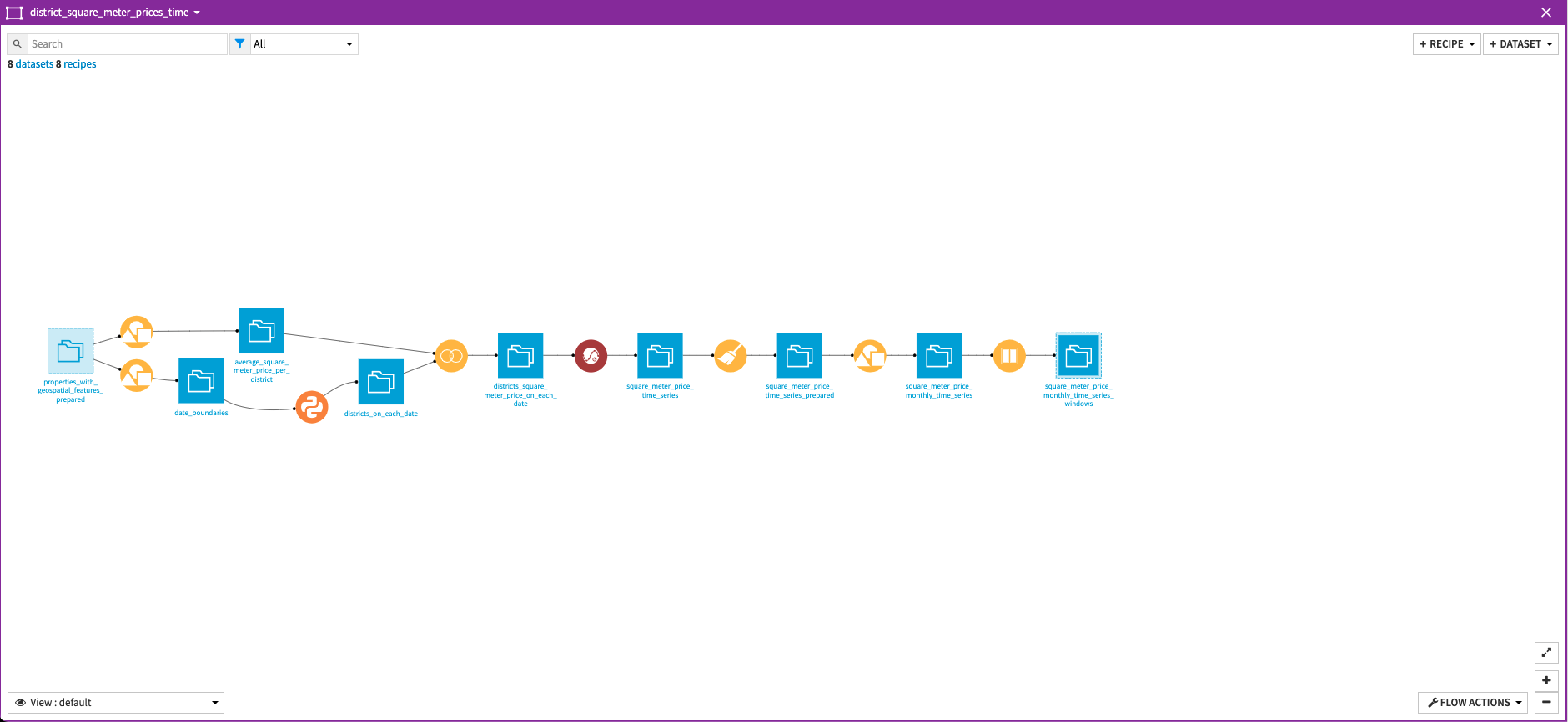

Forecast the square meter price for Paris districts

A parallel branch of this Solution is dedicated to forecasting the square meter price in each distinct Paris district. The overall trend of prices in Paris is increasing, but the evolution of those prices differs based on the district (that is, neighborhood) observed. The Solution attempts to capture those trends by training a linear model to forecast property prices.

The Flow preprocesses the data to turn it into an exploitable time series dataset with properties separated by district and the square meter price computed. The trained model uses only the time information (that is, the month) as a predictor to forecast the square meter price. The train set is monthly data between 2016 and 2019, and the model is tested on 2020 data.
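To make the idea of a time-only linear model concrete, here is a minimal ordinary least squares sketch for a single district. It assumes the month is encoded as an index counted from January 2016; the function names and this encoding are assumptions for illustration, and the Solution's own model may be configured differently.

```python
def month_index(year, month, start_year=2016):
    """Number of months elapsed since January of start_year."""
    return (year - start_year) * 12 + (month - 1)


def fit_linear_trend(xs, ys):
    """Ordinary least squares fit of y = slope * x + intercept."""
    n = len(xs)
    if n < 2 or n != len(ys):
        raise ValueError("need at least two (x, y) pairs of equal length")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def forecast_sqm_price(slope, intercept, year, month):
    """Forecast the square meter price for a given month."""
    return slope * month_index(year, month) + intercept
```

In this setup, you would fit one trend per district on the 48 monthly points from 2016 to 2019, then compare `forecast_sqm_price` for each month of 2020 against the observed values.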

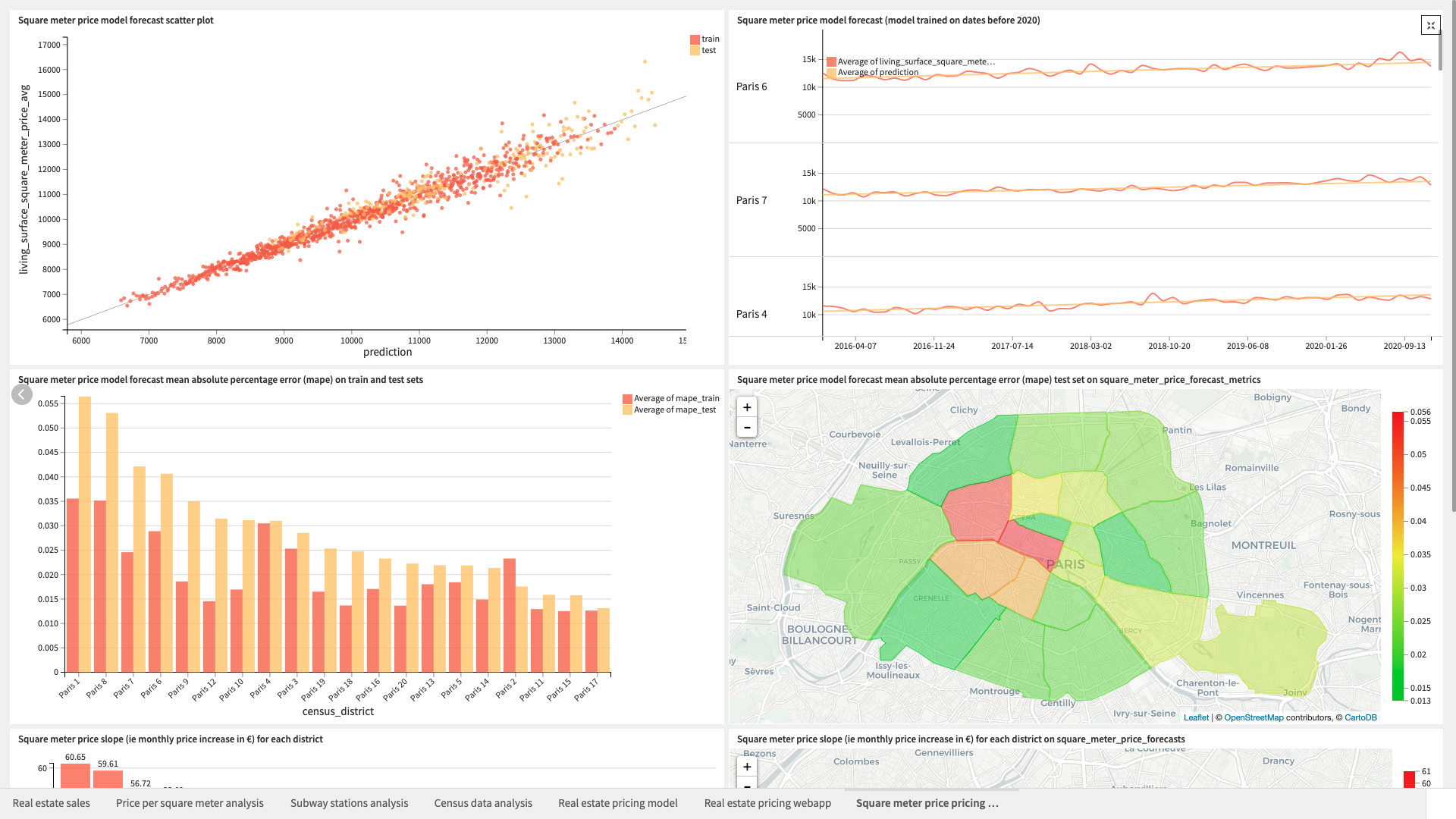

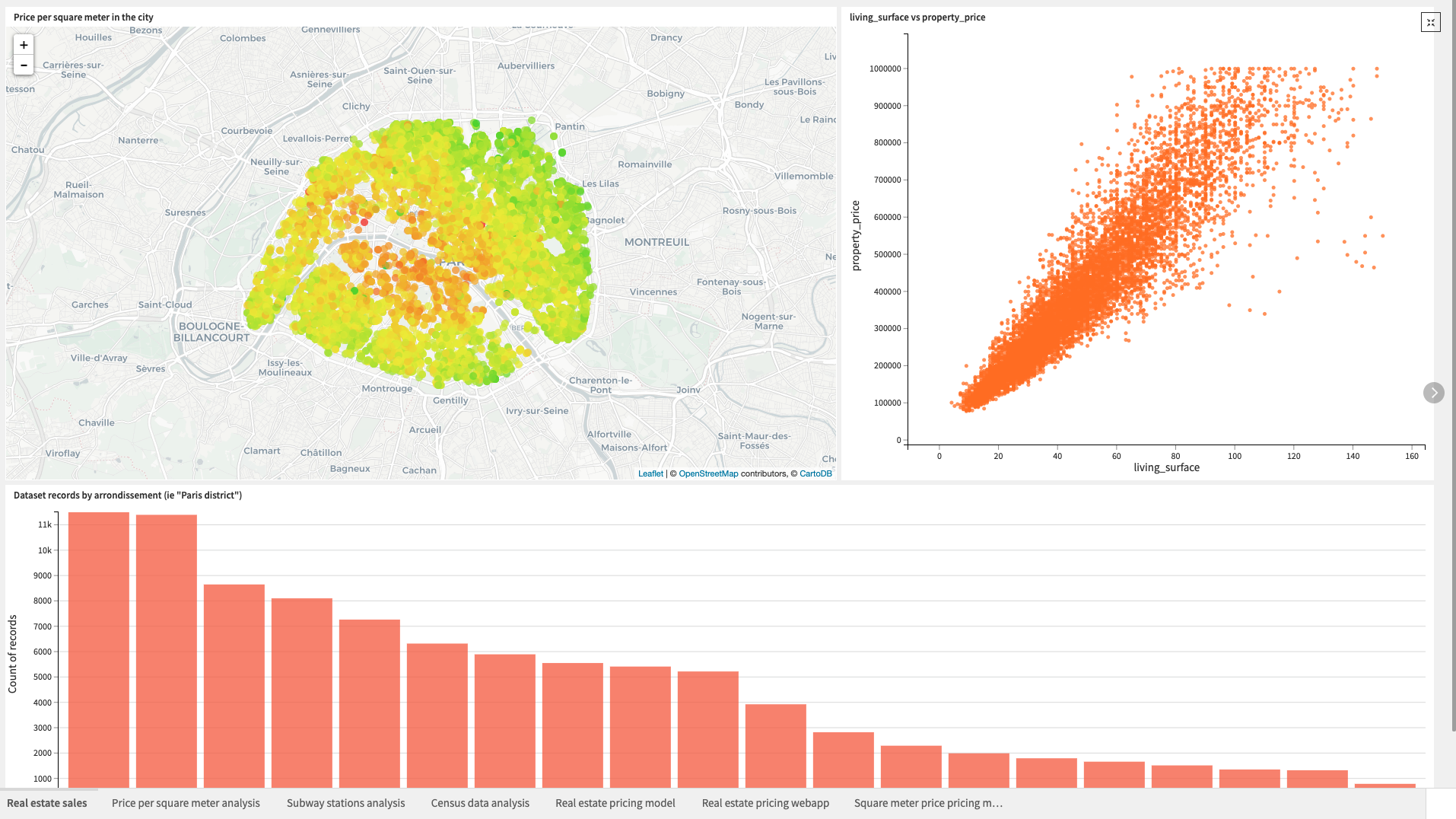

Leverage rich visualizations to explore your data

The Real Estate Pricing dashboard consists of multiple pages containing Dataiku charts for analysis of the project’s core information and a single page containing an interactive webapp.

The first five pages include visualizations resulting from the real estate pricing prediction branch of the Solution.

The table below presents all pages of the dashboard.

| Page | Description |
|---|---|
| Real Estate Sales | Gives an overview of the initial real estate dataset. |
| Price per square meter analysis | Focuses on analyzing the sold properties’ square meter prices over time and by district. |
| Subway Stations Analysis | Helps in analyzing the Paris subway network, specifically the importance of subway stations, by visualizing the graph database created in the Flow. |
| Census Data Analysis | Analyzes the project’s census data with a focus on population density as it relates to Paris districts. |
| Real estate pricing model | Presents several ways to analyze the performance of the model for real estate price prediction including, most importantly, an analysis of the model variable importance (that is, the characteristics of a property that most influence its price). |
| Real estate pricing webapp | Presents an interactive webapp in which you can enter a property address in Paris, as well as characteristics like its living surface and number of rooms, to get real-time price predictions. Note: This Solution is intended as a template to guide development of your own analysis in Dataiku. Accordingly, you shouldn’t use the predictions from the webapp as actionable insights or an accurate prediction. |
| Square meter price metrics | Uses data from the square meter price forecast model to show the overall model performance and price per square meter forecasts for each Paris district. |

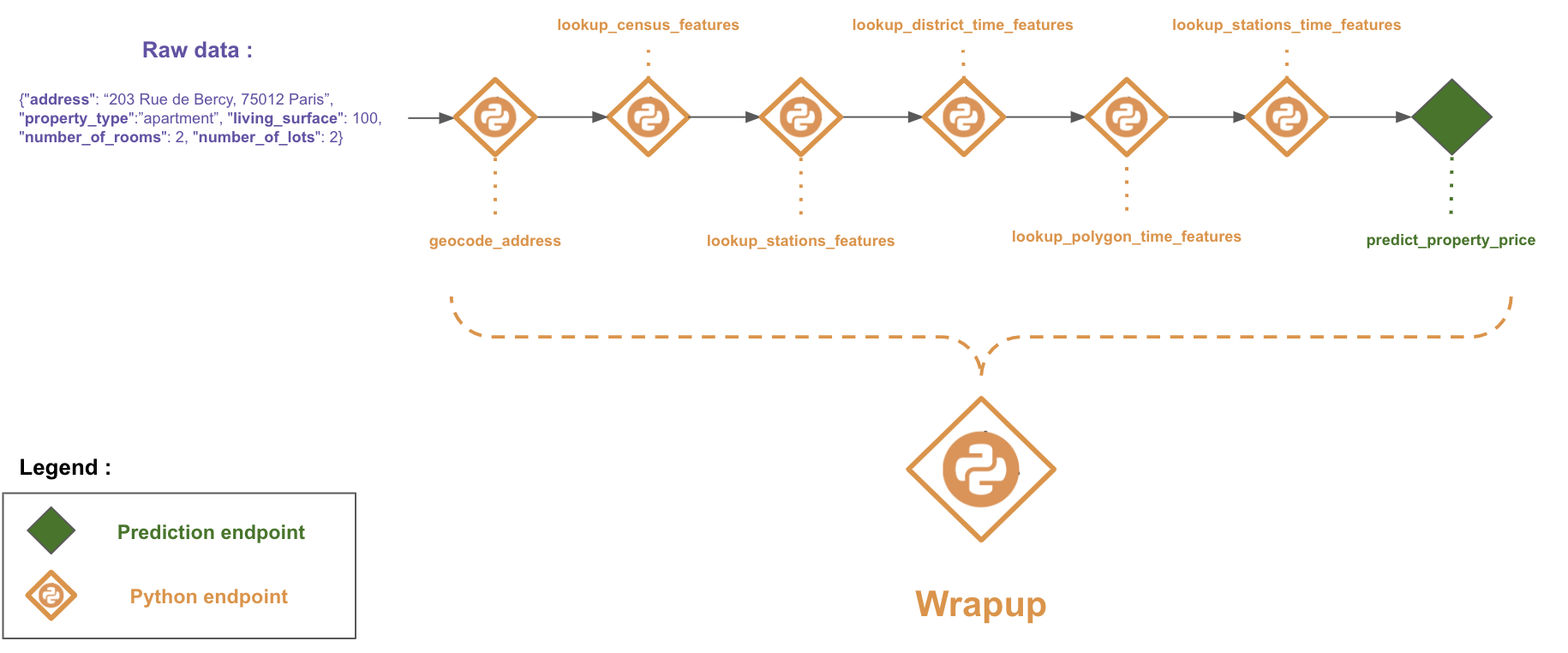

Access price predictions via an API

We’ve also designed this Solution to show how you can put a real estate pricing prediction project in Dataiku into production via an API service. The API service is composed of seven Python endpoints and one prediction model endpoint. You can call each endpoint separately by passing the required information as parameters.

A final endpoint, wrapup, takes the property characteristics (address, property type, number of rooms, living surface, and number of lots). It enriches the data using all the other endpoints before calling the prediction model endpoint to output a price prediction. It’s not currently possible to use the API node as the backend prediction process within the webapp.
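The following sketch shows how a request to the wrapup endpoint might be assembled with the Python standard library. Everything specific here is an assumption to check against your own deployment: the API node URL, the service ID, the parameter names, and the `/public/api/v1/<service>/<endpoint>/run` path used for Python function endpoints. The function only builds the request; it doesn't send it.

```python
import json
from urllib.request import Request


def build_wrapup_request(api_node_url, service_id, features, endpoint_id="wrapup"):
    """Build (without sending) a POST request for a Python function endpoint.

    The URL layout is an assumption about the API node; verify it against
    your deployed service before use.
    """
    url = f"{api_node_url.rstrip('/')}/public/api/v1/{service_id}/{endpoint_id}/run"
    body = json.dumps(features).encode("utf-8")
    return Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Hypothetical parameter names mirroring the characteristics listed above.
example_features = {
    "address": "1 Rue de Rivoli, Paris",
    "property_type": "apartment",
    "rooms": 2,
    "living_surface": 50,
    "lots": 1,
}
```

You can pass the resulting object to `urllib.request.urlopen` to send it, or use the Dataiku API client for Python instead of hand-built requests.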

Reproducing these processes with minimal effort for your data

This project equips asset management teams to understand how they can use Dataiku to predict the price of their existing property portfolios with publicly available data. By creating a single Solution that can benefit and influence the decisions of a variety of teams in one organization, you can design smarter and more holistic strategies to reduce staff costs, achieve faster time-to-action, and more deeply integrate know-how.

This documentation has provided several suggestions on how to derive value from this Solution. Ultimately, however, the “best” approach will depend on your specific needs and data. If you’re interested in adapting this project to the specific goals and needs of your organization, Dataiku offers roll-out and customization services on demand.