How-to | Secure data connections through AWS PrivateLink#

For certain plans, Dataiku enables Launchpad administrators to protect access to the following data sources through AWS PrivateLink.

AWS PrivateLink provides private connectivity between your Dataiku instance and supported AWS services without exposing your traffic to the public internet. Once activated, Dataiku Cloud will only connect to your data using one virtual private cloud (VPC) endpoint.

Important

Dataiku Cloud uses AWS PrivateLink by default to connect to specific AWS services in your Dataiku Cloud region, currently S3 and Bedrock.

For other AWS services, you will need additional services, and their availability depends on your plan. You may need to reach out to your Dataiku Account Manager or Customer Success Manager.

If you run into any error, please contact the support team.

Amazon S3 data#

To configure AWS PrivateLink for an Amazon S3 data source:

First, contact the support team so they can provide you with the endpoint to use. You will need to know the AWS region of your S3 buckets.

Add or edit an S3 connection in your instance’s Connections panel, check the Use Path mode box, and fill in the Region or Endpoint field with the value provided by support.

Ensure your S3 policy authorizes access to the endpoint.

Note

Athena’s Glue feature won’t work with S3 connections using AWS PrivateLink.

An example of an S3 policy configured to only accept requests from a VPC endpoint:

{
  "Version": "2012-10-17",
  "Id": "Policy1415115909152",
  "Statement": [
    {
      "Sid": "Access-to-specific-VPCE-only",
      "Principal": {"AWS": "ARN-OF-IAM-USER-ASSUMED-BY-DATAIKU"},
      "Action": "s3:*",
      "Effect": "Deny",
      "Resource": ["S3-BUCKET-ARN", "S3-BUCKET-ARN/*"],
      "Condition": {"StringNotEquals": {"aws:sourceVpce": "VPCE-ID"}}
    }
  ]
}
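If you manage several buckets, a policy like the one above can be generated rather than edited by hand. The following is a minimal sketch, assuming a hypothetical helper named `build_vpce_only_policy`; the ARNs and VPCE ID in the usage section are placeholders, not real values.

```python
import json


def build_vpce_only_policy(principal_arn: str, bucket_arn: str, vpce_id: str) -> dict:
    """Return a bucket policy that denies S3 actions not coming through the given VPC endpoint.

    Hypothetical helper for illustration; it only builds the document and does not call AWS.
    """
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "Access-to-specific-VPCE-only",
                "Effect": "Deny",
                # IAM expects a principal map such as {"AWS": "<arn>"} here.
                "Principal": {"AWS": principal_arn},
                "Action": "s3:*",
                # Cover both the bucket itself and every object inside it.
                "Resource": [bucket_arn, f"{bucket_arn}/*"],
                "Condition": {"StringNotEquals": {"aws:sourceVpce": vpce_id}},
            }
        ],
    }


if __name__ == "__main__":
    # Placeholder values; replace them with your own ARNs and the VPCE ID from support.
    policy = build_vpce_only_policy(
        "arn:aws:iam::111122223333:user/example-dataiku-user",
        "arn:aws:s3:::example-bucket",
        "vpce-0123456789abcdef0",
    )
    print(json.dumps(policy, indent=2))
```

The printed JSON can then be pasted into the bucket's policy editor, or applied with your usual tooling.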

An AWS Bedrock service#

Note

The PrivateLink feature is enabled by default for Bedrock connections in Dataiku Cloud regions where AWS PrivateLink is supported (the list is in the Network Access Control panel of the Settings tab). It doesn’t require the purchase of an add-on, isn’t counted in the number of extensions, and doesn’t require additional settings.

Dataiku Cloud may transition additional regions to PrivateLink in the future. As a result, Bedrock traffic that previously originated from Dataiku Cloud public IPs could begin coming from a private endpoint instead. To control access to your Bedrock models from Dataiku Cloud, prefer an IAM condition on the VPCE ID over public IP filtering.

You can restrict access to your AWS Bedrock models with an IAM condition on the Dataiku Cloud VPCE ID. To find the VPCE ID your instance uses to connect to your Bedrock model, go to your Launchpad and open the Network Access Control panel of the Settings tab. The VPCE ID depends on the AWS region where your Bedrock service is hosted.

If you don’t find the right region in the list, it means PrivateLink hasn’t been enabled for this region yet. Please contact the support team if you want to enable it.
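As an illustration of an IAM condition on the VPCE ID, the sketch below builds an identity policy that denies Bedrock invocation unless the request arrives through one of the listed VPC endpoints. The helper name `bedrock_vpce_policy`, the model ARN, and the VPCE ID are assumptions for illustration. Whether a Deny statement like this suits your setup depends on who else uses the same identity, since requests that don't come through a listed endpoint are denied too.

```python
import json


def bedrock_vpce_policy(vpce_ids, model_arns):
    """Build an identity policy denying Bedrock invocation outside the listed VPC endpoints.

    Hypothetical helper; it only builds the document and does not call AWS.
    """
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyBedrockOutsideVpce",
                "Effect": "Deny",
                "Action": [
                    "bedrock:InvokeModel",
                    "bedrock:InvokeModelWithResponseStream",
                ],
                "Resource": sorted(model_arns),
                # The request is denied when its source VPCE matches none of these IDs.
                "Condition": {"StringNotEquals": {"aws:sourceVpce": sorted(vpce_ids)}},
            }
        ],
    }


if __name__ == "__main__":
    # Placeholder values; use the VPCE ID listed in the Network Access Control panel.
    policy = bedrock_vpce_policy(
        ["vpce-0123456789abcdef0"],
        ["arn:aws:bedrock:us-east-1::foundation-model/example-model-id"],
    )
    print(json.dumps(policy, indent=2))
```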

An AWS-hosted Snowflake database#

To configure AWS PrivateLink for a Snowflake database hosted on AWS:

Ensure your Snowflake region is available in Dataiku Cloud#

In the Dataiku Cloud Launchpad, navigate to the Extensions panel.

Click + Add an Extension.

Select AWS Snowflake endpoint.

Select the AWS region of your Snowflake account. If the region you need isn’t available, please contact the support team to enable it.

Keep this page open, and continue to the next step in the Snowflake console.

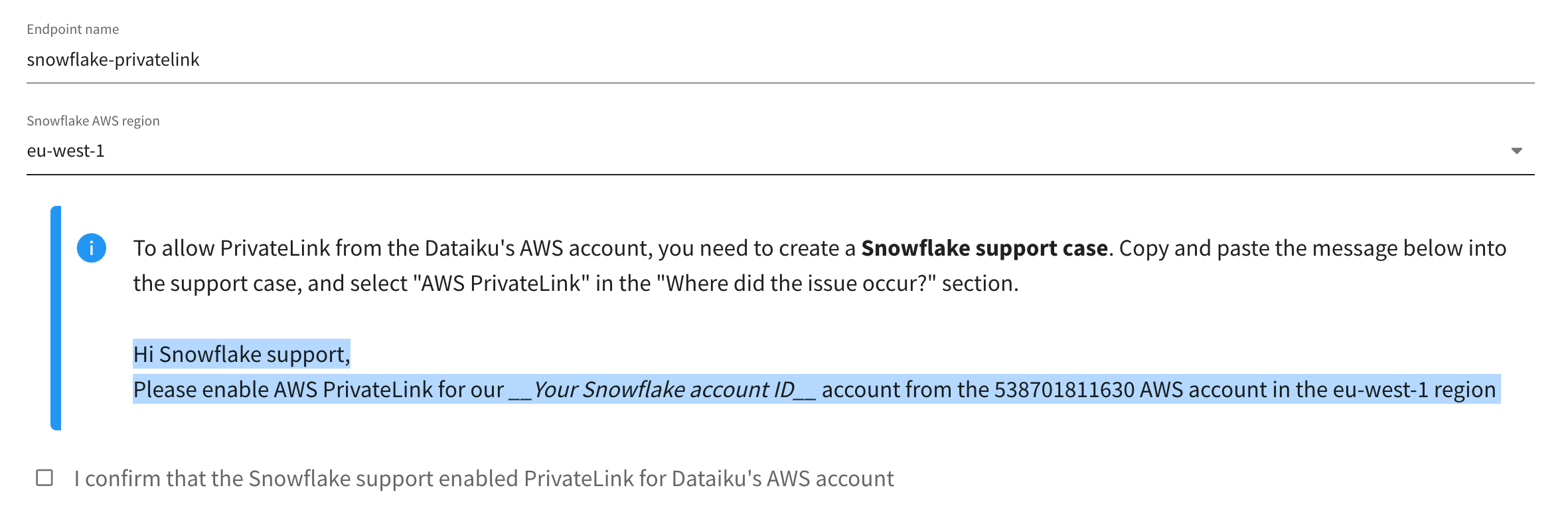

Ask Snowflake support to allow AWS PrivateLink from Dataiku’s AWS account#

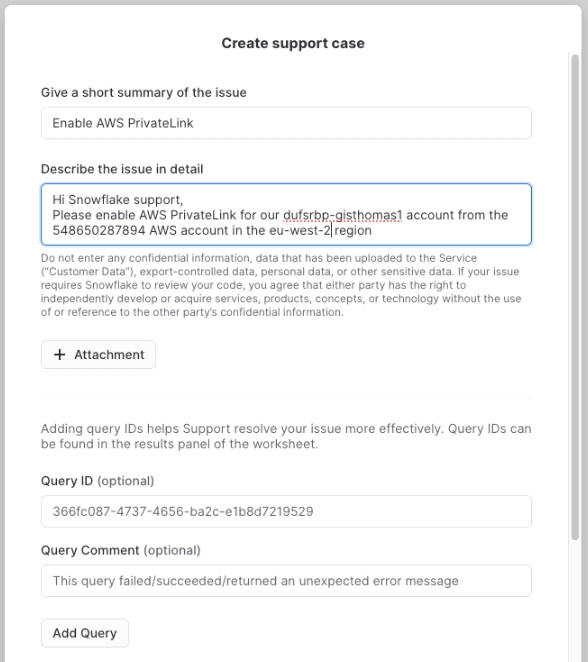

In the Snowflake console, go to the Support section in the left panel.

Create a new support case by clicking on Support Case in the top right corner.

Fill in the title with something meaningful, for example Enable AWS PrivateLink.

Copy the message from the Dataiku Cloud Launchpad extension page to the support case detail.

Update the message with your Snowflake account ID and region. You can find both in the bottom left corner of the Snowflake console.

In the Where did the issue occur? section, select AWS PrivateLink under the Managing Security & Authentication category, leave the severity at Sev-4, and click Create Case.

Wait for Snowflake support to enable PrivateLink before continuing to the next set of instructions.

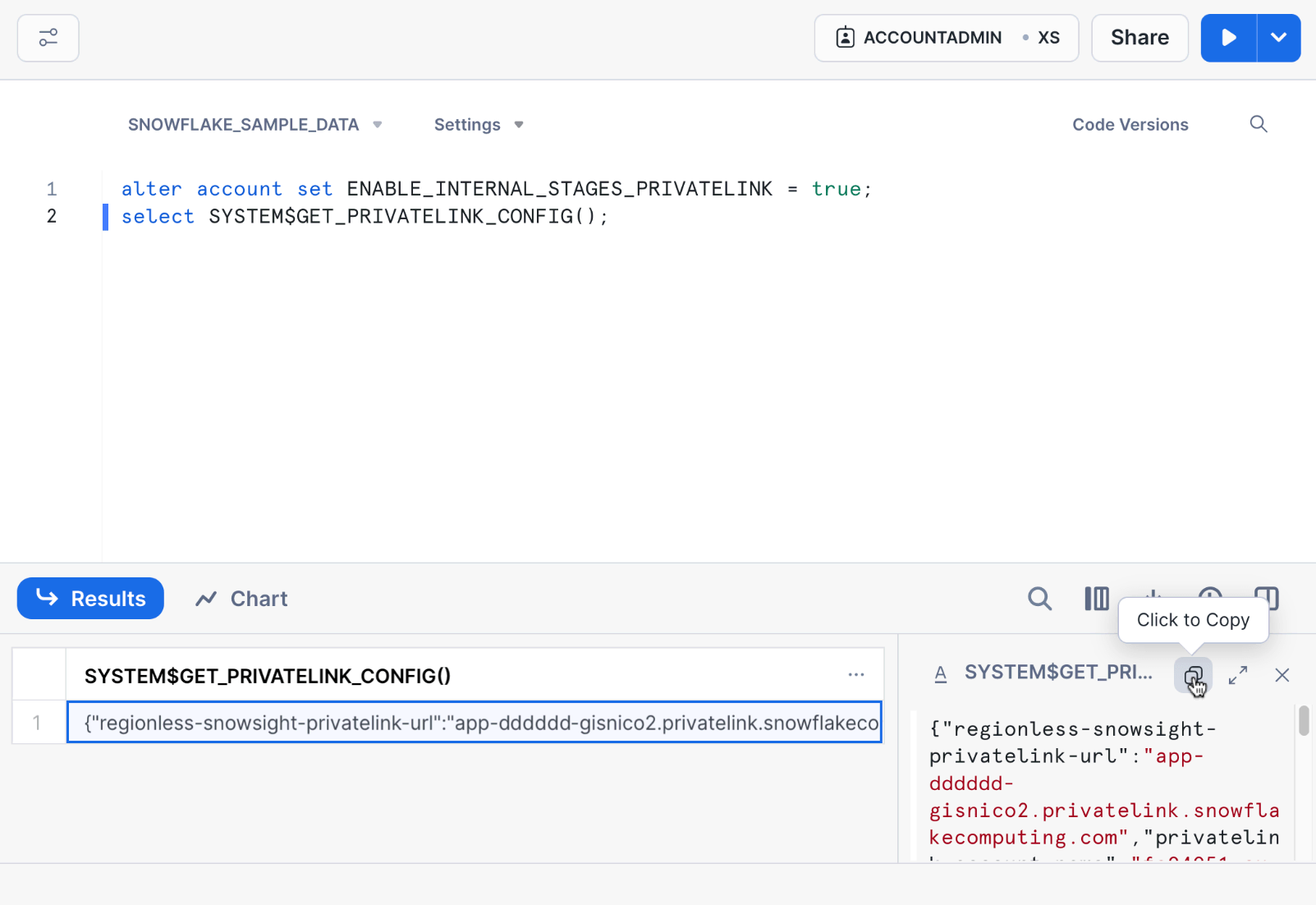

Retrieve the PrivateLink config from Snowflake#

Having completed the above set of instructions, in Snowflake, create a new SQL worksheet.

Run the following SQL commands with the ACCOUNTADMIN role:

alter account set ENABLE_INTERNAL_STAGES_PRIVATELINK = true;
select SYSTEM$GET_PRIVATELINK_CONFIG();

Click on the output to open a new panel on the right.

Click on the Click to Copy icon to copy the JSON result.
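Before pasting the copied result into the Launchpad, you may want to confirm it is a complete JSON object. The sketch below is one way to do that. The key names in `EXPECTED_KEYS` are an assumption based on typical `SYSTEM$GET_PRIVATELINK_CONFIG` output, and the sample values are made up. Compare them against your own result.

```python
import json

# Assumed key names; verify them against the output you copied from Snowflake.
EXPECTED_KEYS = (
    "privatelink-account-name",
    "privatelink-vpce-id",
    "privatelink-account-url",
)


def check_privatelink_config(raw: str) -> dict:
    """Parse the copied text and raise ValueError if it is not a usable JSON object."""
    try:
        config = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError(f"Copied text is not valid JSON: {exc}") from exc
    if not isinstance(config, dict):
        raise ValueError("Expected a JSON object at the top level")
    missing = [key for key in EXPECTED_KEYS if key not in config]
    if missing:
        raise ValueError(f"Missing expected keys: {', '.join(missing)}")
    return config


if __name__ == "__main__":
    # Illustrative payload with invented values.
    sample = json.dumps(
        {
            "privatelink-account-name": "xy12345.us-east-1.privatelink",
            "privatelink-vpce-id": "com.amazonaws.vpce.us-east-1.vpce-svc-0123456789abcdef0",
            "privatelink-account-url": "xy12345.us-east-1.privatelink.snowflakecomputing.com",
        }
    )
    print(sorted(check_privatelink_config(sample)))
```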

Create the AWS Snowflake endpoint extension in the Dataiku Cloud Launchpad#

Return to the Extensions tab of the Dataiku Cloud Launchpad.

If not still open from the first section, click + Add an Extension, and select AWS Snowflake endpoint.

Provide any string as the endpoint name. It will be helpful if it’s a unique identifier.

Select your Snowflake AWS region; it should be available by now.

Check the box to confirm that Snowflake support has enabled PrivateLink for Dataiku’s account.

Paste the JSON you copied from the above set of instructions into the Snowflake PrivateLink config input.

Click Add.

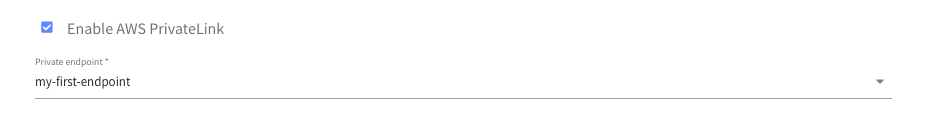

Use the AWS Snowflake endpoint in your Snowflake connections#

You can now use the endpoint you created both in new and existing connections:

In your Dataiku Cloud instance, navigate to the Connections panel.

Add a Connection or select the one you want to edit.

Fill in the form, and use the Connection Host value displayed in the PrivateLink extension as the host parameter.

Note

Your Snowflake connection may use an S3 fast-write connection. In that case, you have to set up PrivateLink for it as described in Amazon S3 data if you also want that traffic to go through PrivateLink.

An AWS-hosted Databricks database#

To configure AWS PrivateLink for a Databricks database hosted on AWS:

First, see if your Databricks account and workspace meet the requirements to enable PrivateLink.

Ensure your Databricks region is available in Dataiku Cloud#

In the Dataiku Cloud Launchpad, navigate to the Extensions panel.

Click + Add an Extension.

Select AWS Databricks endpoint.

Select the Databricks AWS region of your Databricks account. If the region you need isn’t available, please contact the support team to enable it.

Keep this page open, and continue to the next step in the Databricks console.

Configure your Databricks account for PrivateLink#

A Databricks administrator must perform the following steps in your Databricks console.

Register the Dataiku VPC endpoint provided in the extension form. You can refer to Databricks’s documentation to do so. Note that the region to fill in is your Databricks workspace AWS region, not Dataiku’s.

Ensure your Private Access Settings (PAS) configuration allows this registered VPC endpoint to connect to your workspace. See Databricks’s article to learn more.
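The registration step can also be done through the Databricks Account API instead of the console. The sketch below only builds the HTTP request and does not send it. The endpoint path, host, and field names (`vpc_endpoint_name`, `aws_vpc_endpoint_id`, `region`) are assumptions drawn from the Databricks Account API, and `build_register_request` is a hypothetical helper. Check the Databricks documentation before relying on it.

```python
import json
import urllib.request


def build_register_request(account_id, token, endpoint_name, aws_vpc_endpoint_id, region):
    """Build (without sending) a request that registers a VPC endpoint with Databricks."""
    # Assumed Account API location; confirm it in the Databricks documentation.
    url = f"https://accounts.cloud.databricks.com/api/2.0/accounts/{account_id}/vpc-endpoints"
    body = {
        "vpc_endpoint_name": endpoint_name,
        # The VPC endpoint ID shown in the Dataiku extension form.
        "aws_vpc_endpoint_id": aws_vpc_endpoint_id,
        # The AWS region of your Databricks workspace, not Dataiku's.
        "region": region,
    }
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Bearer {token}", "Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    # Placeholder values for illustration only.
    request = build_register_request(
        "00000000-0000-0000-0000-000000000000",
        "example-token",
        "dataiku-cloud-endpoint",
        "vpce-0123456789abcdef0",
        "us-east-1",
    )
    print(request.get_method(), request.full_url)
    # To send it: urllib.request.urlopen(request)
```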

Create the AWS Databricks endpoint extension in the Dataiku Cloud Launchpad#

Return to the Extensions tab of the Dataiku Cloud Launchpad.

If not still open from the first section, click + Add an Extension, and select AWS Databricks endpoint.

Provide any string as the endpoint name. It will be helpful if it’s a unique identifier.

Select your Databricks AWS region; it should be available by now.

Check the box to confirm that your Databricks account is configured for PrivateLink.

Fill in the URL of your Databricks workspace.

Click Add.

Use the AWS Databricks endpoint in your Databricks connections#

You can now use the endpoint you created both in new and existing connections:

In your Dataiku Cloud instance, navigate to the Connections panel.

Add a Connection or select the one you want to edit.

Fill in the form, and use the URL of your Databricks workspace as the host parameter.

Note

Your Databricks connection may use an S3 fast-write connection. In that case, you have to set up PrivateLink for it as described in Amazon S3 data if you also want that traffic to go through PrivateLink.

An AWS-hosted arbitrary data source#

Administrators can leverage AWS PrivateLink to expose any service running inside their VPCs to Dataiku Cloud.

Ensure your data source region is available in Dataiku Cloud#

In the Dataiku Cloud Launchpad, navigate to the Extensions panel.

Click + Add an Extension.

Select AWS private endpoint.

Select the AWS region of your source. If the region you need isn’t available, please contact the support team to enable it.

Once your region is available, select it to display the availability zone IDs and the IAM role of your instance.

Create the VPC endpoint service#

You will need a VPC endpoint service in one of the regions and availability zone IDs supported by Dataiku. To create it:

Follow the AWS documentation to create the NLB in one of the availability zone IDs that were displayed from the previous step.

Once your VPC endpoint service is created, add the IAM role of your instance from the previous step to the Allow Principals section.
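Adding the IAM role to Allow Principals can also be scripted. The sketch below assumes a boto3 EC2 client is passed in and uses its `describe_vpc_endpoint_service_permissions` and `modify_vpc_endpoint_service_permissions` calls. The helper name `ensure_principal_allowed` is hypothetical, and pagination of the describe call is ignored for brevity.

```python
def ensure_principal_allowed(ec2_client, service_id: str, principal_arn: str) -> bool:
    """Add principal_arn to the endpoint service's allowed principals if it is missing.

    Returns True when the principal was added, False when it was already allowed.
    ec2_client is expected to behave like boto3.client("ec2").
    """
    response = ec2_client.describe_vpc_endpoint_service_permissions(ServiceId=service_id)
    allowed = {entry["Principal"] for entry in response.get("AllowedPrincipals", [])}
    if principal_arn in allowed:
        return False
    ec2_client.modify_vpc_endpoint_service_permissions(
        ServiceId=service_id,
        AddAllowedPrincipals=[principal_arn],
    )
    return True


# Usage sketch (requires boto3 and AWS credentials; the values are placeholders):
#   import boto3
#   ec2 = boto3.client("ec2", region_name="us-east-1")
#   ensure_principal_allowed(ec2, "vpce-svc-0123456789abcdef0",
#                            "arn:aws:iam::111122223333:role/example-role")
```

Passing the client in as a parameter keeps the function easy to try against a stub before pointing it at a real account.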

Note

If security group rules are enforced, you must allow Dataiku’s internal CIDR ranges (10.0.0.0/16 and 10.1.0.0/16) on network ACLs and security groups.
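To double-check a rule set against the two ranges in the note above, a small script can help. This is a minimal sketch, assuming you have already exported the allowed IPv4 CIDRs of your security group or network ACL as strings. The helper name `uncovered_ranges` is hypothetical.

```python
import ipaddress

# Dataiku's internal ranges, as listed in the note above.
DATAIKU_INTERNAL_CIDRS = (
    ipaddress.ip_network("10.0.0.0/16"),
    ipaddress.ip_network("10.1.0.0/16"),
)


def uncovered_ranges(allowed_cidrs):
    """Return the Dataiku ranges that no single allowed IPv4 CIDR fully contains."""
    allowed = [ipaddress.ip_network(cidr) for cidr in allowed_cidrs]
    return [
        str(network)
        for network in DATAIKU_INTERNAL_CIDRS
        if not any(network.subnet_of(candidate) for candidate in allowed)
    ]


if __name__ == "__main__":
    # Only the first range is allowed here, so the second one is reported.
    print(uncovered_ranges(["10.0.0.0/16", "192.168.0.0/24"]))
```

The check is deliberately simple: it doesn't detect a range that is covered only by the union of several smaller rules.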

Configure the PrivateLink with Dataiku#

Return to the Extensions tab of the Dataiku Cloud Launchpad.

If not still open from the first section, click + Add an Extension, and select AWS private endpoint.

Provide any string as the endpoint name. It will be helpful if it’s a unique identifier.

Select your Endpoint AWS region; it should be available by now.

Check the box to confirm that your service is configured for PrivateLink.

Fill in your service name.

Click Add.

Accept the PrivateLink request on AWS (Endpoint services > Your endpoint > Endpoint connections).

Click on the PrivateLink extension to retrieve the value Connection Host to use in your downstream connections.
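The acceptance step on the AWS side can be scripted as well. The sketch below assumes a boto3 EC2 client is passed in and uses its `describe_vpc_endpoint_connections` and `accept_vpc_endpoint_connections` calls. The `expected_owner` parameter (the AWS account ID you expect the request to come from) and the helper name are assumptions, and that account ID is not given here, so confirm it before accepting anything.

```python
def accept_pending_connections(ec2_client, service_id: str, expected_owner: str):
    """Accept pending endpoint connections to service_id that come from expected_owner.

    Returns the list of accepted VPC endpoint IDs (empty if nothing was pending).
    ec2_client is expected to behave like boto3.client("ec2").
    """
    response = ec2_client.describe_vpc_endpoint_connections(
        Filters=[{"Name": "service-id", "Values": [service_id]}]
    )
    pending = [
        connection["VpcEndpointId"]
        for connection in response.get("VpcEndpointConnections", [])
        if connection.get("VpcEndpointState") == "pendingAcceptance"
        and connection.get("VpcEndpointOwner") == expected_owner
    ]
    if pending:
        ec2_client.accept_vpc_endpoint_connections(
            ServiceId=service_id,
            VpcEndpointIds=pending,
        )
    return pending
```

Filtering on the owner account avoids accepting an unrelated request that happens to be pending on the same endpoint service.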

Create the connection#

You can now use the endpoint you created both in new and existing connections:

In your Dataiku Cloud instance, navigate to the Connections panel.

Add a Connection or select the one you want to edit.

Fill in the form, and use the Connection Host value displayed in the PrivateLink extension as the host parameter.

An AWS-hosted RDS#

To set up AWS PrivateLink with an RDS instance, you will need to expose it through a network load balancer, and follow the steps from An AWS-hosted arbitrary data source.

Note

Sometimes, the IP address of your RDS can change. See the AWS blog post on Access Amazon RDS across VPCs using AWS PrivateLink and Network Load Balancer for more details and a possible solution.
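To illustrate the kind of check such a solution relies on, the sketch below resolves the RDS hostname and compares the result with the IPs currently registered as load balancer targets. It is not the method from the AWS blog post, only a simplified assumption of how drift could be detected. The helper names and the hostname are hypothetical.

```python
import socket


def resolve_ipv4(hostname, resolver=socket.getaddrinfo):
    """Return the sorted, de-duplicated IPv4 addresses the hostname resolves to."""
    infos = resolver(hostname, None, family=socket.AF_INET, type=socket.SOCK_STREAM)
    # Each entry is (family, type, proto, canonname, sockaddr); sockaddr[0] is the IP.
    return sorted({info[4][0] for info in infos})


def target_drift(registered_ips, current_ips):
    """Compare registered target IPs with freshly resolved ones."""
    registered, current = set(registered_ips), set(current_ips)
    return {
        "to_register": sorted(current - registered),
        "to_deregister": sorted(registered - current),
    }


# Usage sketch (the hostname is a placeholder and needs network access to resolve):
#   current = resolve_ipv4("example-db.abcdefghijkl.us-east-1.rds.amazonaws.com")
#   print(target_drift(["10.2.0.15"], current))
```

A scheduled job could run this comparison and update the target group whenever either list is non-empty.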

An on-premise data source#

You can configure AWS PrivateLink for on-premise data sources if you have access to an AWS account:

Connect your on-premise data source to your VPC as described in this AWS white paper on Network-to-Amazon VPC connectivity options.

Follow the steps from An AWS-hosted arbitrary data source to connect to your data source.