Concept | Real-time APIs


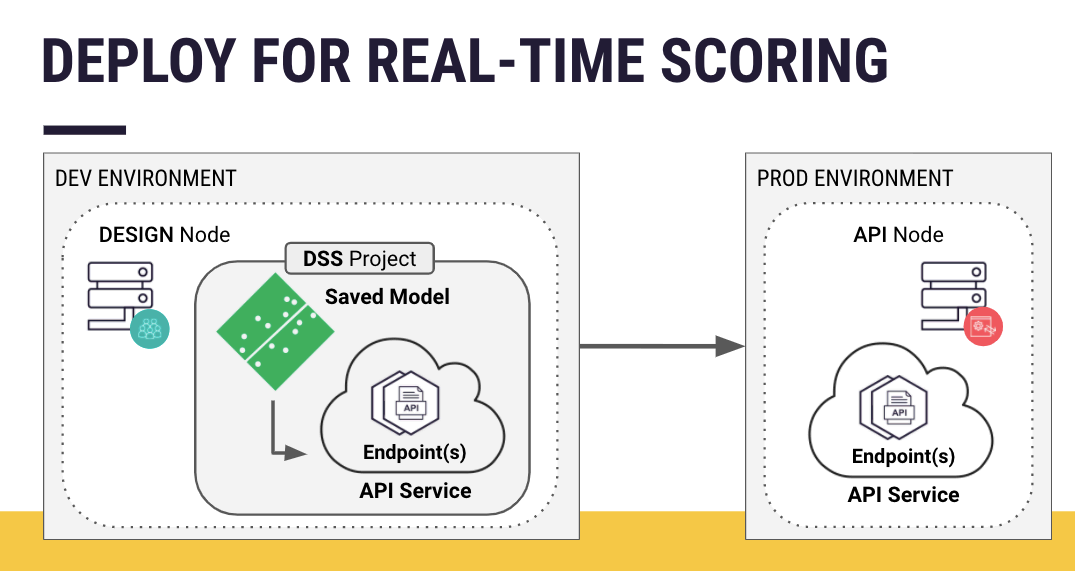

Suppose a Dataiku project on your Design node includes a saved model in the Flow. You can deploy this model from the Design node into a production environment using either a batch or a real-time scoring framework. This article focuses on the latter.

Use case

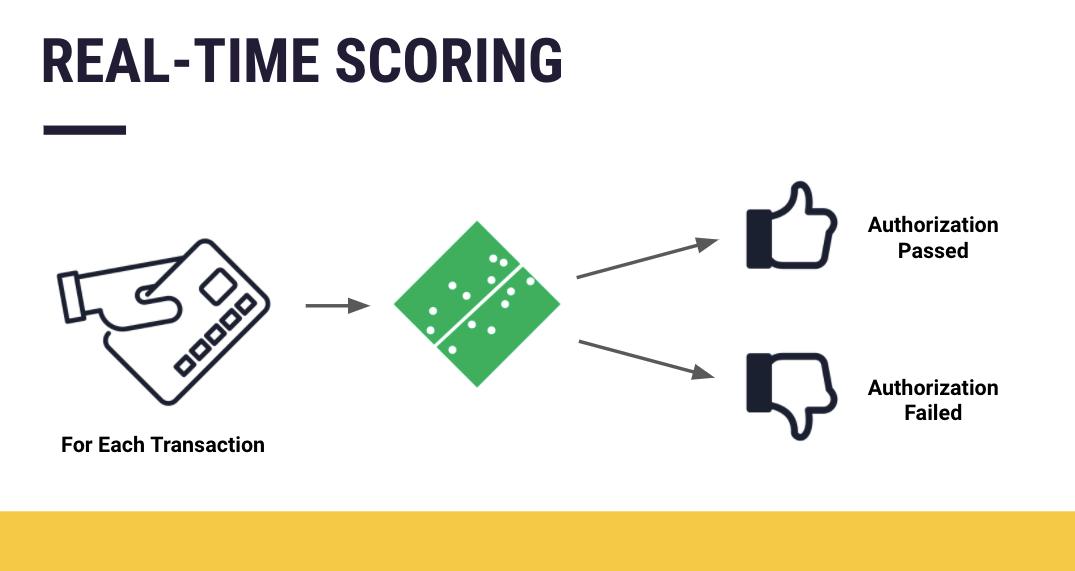

Consider a credit card fraud use case. A model receiving an incoming credit card transaction can immediately generate a prediction as to whether the transaction should be authorized or flagged as fraudulent.

Dataiku implements this type of real-time scoring strategy by exposing a model as an API endpoint. You can package this endpoint in an API service, possibly alongside other endpoints that perform tasks other than scoring. You can then deploy this API service to an API node (or potentially to an external infrastructure).
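To make the idea of "a model exposed as an API endpoint" more concrete, here is a minimal sketch of how a client might prepare a scoring request for a single credit card transaction. The host, port, service ID (`fraud_service`), endpoint ID (`fraud_model`), and feature names are all hypothetical. The URL layout and the `features` payload key are assumptions about how a prediction endpoint is typically addressed, so check them against the reference documentation before relying on them. The request is only built here, not sent.

```python
import json
from urllib.request import Request


def build_predict_request(base_url, service_id, endpoint_id, features):
    """Build (but do not send) an HTTP POST request for one record.

    Assumption: the prediction endpoint lives at
    <base_url>/public/api/v1/<service_id>/<endpoint_id>/predict
    and expects a JSON body of the form {"features": {...}}.
    """
    url = f"{base_url.rstrip('/')}/public/api/v1/{service_id}/{endpoint_id}/predict"
    body = json.dumps({"features": features}).encode("utf-8")
    return Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Hypothetical transaction to score in real time.
transaction = {"amount": 182.5, "merchant_category": "electronics", "card_present": False}

request = build_predict_request(
    "http://localhost:12000",  # hypothetical API node address
    "fraud_service",           # hypothetical API service ID
    "fraud_model",             # hypothetical endpoint ID
    transaction,
)
print(request.get_method(), request.full_url)
```

Sending the request (for example with `urllib.request.urlopen(request)`) would only succeed against a running API node that actually hosts a service and endpoint with these IDs.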

It can be helpful to compare real-time processing with batch processing. Recall that batch processing works on available records together (or in batches) and often at specified times (such as daily, weekly, etc.) to return a batch of results.

You implement batch deployment in Dataiku by deploying a project bundle to an Automation node. In contrast, for real-time processing, you deploy an API service to an API node (or external infrastructure).
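The difference between the two modes can be illustrated with a toy example. This is a sketch only: the threshold rule stands in for a real model, and none of the function names come from Dataiku.

```python
def score(transaction):
    """Toy stand-in for a model: flag large transactions."""
    return "flagged" if transaction["amount"] > 1000 else "authorized"


def score_batch(transactions):
    """Batch style: score all accumulated records in one run (for example, nightly)."""
    return [score(t) for t in transactions]


def score_realtime(transaction):
    """Real-time style: score one incoming record and answer immediately."""
    return score(transaction)


# Batch: records collected over the day are scored together.
daily_transactions = [{"amount": 40}, {"amount": 5200}]
batch_results = score_batch(daily_transactions)

# Real time: a single transaction is scored as soon as it arrives.
live_result = score_realtime({"amount": 1500})

print(batch_results, live_result)
```

The scoring logic is identical in both cases. What changes is when it runs and how many records it sees per call.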

Note

For review, return to Concept | Dataiku architecture for MLOps.

Key terminology

When implementing a real-time processing workload, there are some important terms to know.

| Term | Definition |
|---|---|
| API (Application Programming Interface) | An API is a software intermediary that allows two applications to talk to each other and exchange data over a network. For example, one can use a Google API to get weather data from a Google server. |
| API endpoint | An API endpoint is a single path on the API: that is, a URL to which HTTP requests are posted, and from which a response is expected. It’s the place where two applications can interact. Each endpoint fulfills a single function, for example returning a prediction or looking up a value in a dataset. |
| API service | A Dataiku API service is the unit of management and deployment for the API node. One API service can host several API endpoints. |
| API Designer | The API Designer is available in each Dataiku project. You use the API Designer to create API services and, within those services, API endpoints. |
| API Deployer | The API Deployer is one component of the Deployer. It’s the interface for deploying API services to API nodes or other external infrastructures. The API Deployer manages several deployment infrastructures that can either be static API nodes or containers that run API nodes in a Kubernetes cluster. |
| API node | An API node is the Dataiku app server that does the actual job of answering HTTP requests. Once you have designed an API service, you can deploy it on an API node via the API Deployer. |

Summary

To tie it all together:

- You design API services in one or more Design or Automation nodes using the API Designer in a Dataiku project.
- Each API service can contain several API endpoints, with each endpoint fulfilling a single function.
- The API service is then pushed to the API Deployer, which in turn deploys the API service to one or more API nodes (if not some external infrastructure).
- Finally, the API nodes are the application servers that do the actual job of answering API calls.
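The relationships summarized above can be sketched as a small in-memory model. The classes below are purely illustrative and are not Dataiku classes or APIs. They only mirror the terminology: a service groups endpoints, each endpoint fulfills one function, and a node hosts deployed services and answers calls.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict


@dataclass
class ApiEndpoint:
    """One path on the API, fulfilling a single function."""
    endpoint_id: str
    handler: Callable[[dict], dict]


@dataclass
class ApiService:
    """Unit of deployment that can host several endpoints."""
    service_id: str
    endpoints: Dict[str, ApiEndpoint] = field(default_factory=dict)

    def add_endpoint(self, endpoint: ApiEndpoint) -> None:
        self.endpoints[endpoint.endpoint_id] = endpoint


@dataclass
class ApiNode:
    """Application server that answers calls for its deployed services."""
    name: str
    services: Dict[str, ApiService] = field(default_factory=dict)

    def deploy(self, service: ApiService) -> None:
        self.services[service.service_id] = service

    def handle(self, service_id: str, endpoint_id: str, payload: dict) -> dict:
        return self.services[service_id].endpoints[endpoint_id].handler(payload)


def predict_fraud(payload: dict) -> dict:
    # Toy rule standing in for a real model.
    return {"prediction": "fraud" if payload["amount"] > 1000 else "authorized"}


def lookup_customer(payload: dict) -> dict:
    # Toy lookup standing in for a dataset lookup endpoint.
    customers = {"c42": {"segment": "gold"}}
    return customers.get(payload["customer_id"], {})


# Design step: one service with two single-purpose endpoints.
service = ApiService("fraud_service")
service.add_endpoint(ApiEndpoint("predict_fraud", predict_fraud))
service.add_endpoint(ApiEndpoint("lookup_customer", lookup_customer))

# Deploy step: the same service is deployed to several nodes.
nodes = [ApiNode("api-node-1"), ApiNode("api-node-2")]
for node in nodes:
    node.deploy(service)

# Serving step: any node hosting the service can answer the call.
result = nodes[0].handle("fraud_service", "predict_fraud", {"amount": 2500})
print(result)
```

In this sketch the loop over `nodes` plays the role of the API Deployer. In Dataiku, that component manages real infrastructures rather than Python objects.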

Next steps

You’ve now seen an introduction to real-time APIs and how they work in Dataiku. Learn more about the API endpoints within an API service.

See also

See the reference documentation for more details about API Node & API Deployer: Real-time APIs.