Concept | Jupyter notebook#

Watch the video

A code notebook is a tool that allows users to combine executable code and rich text for interactively writing code, performing exploratory analysis, or developing and presenting code projects.

A notebook integrates code and its output into a single document that can combine code, narrative text, visualizations, and other rich media. In other words, in one single document, you can run code, add explanations, display the output, and make your work more transparent.

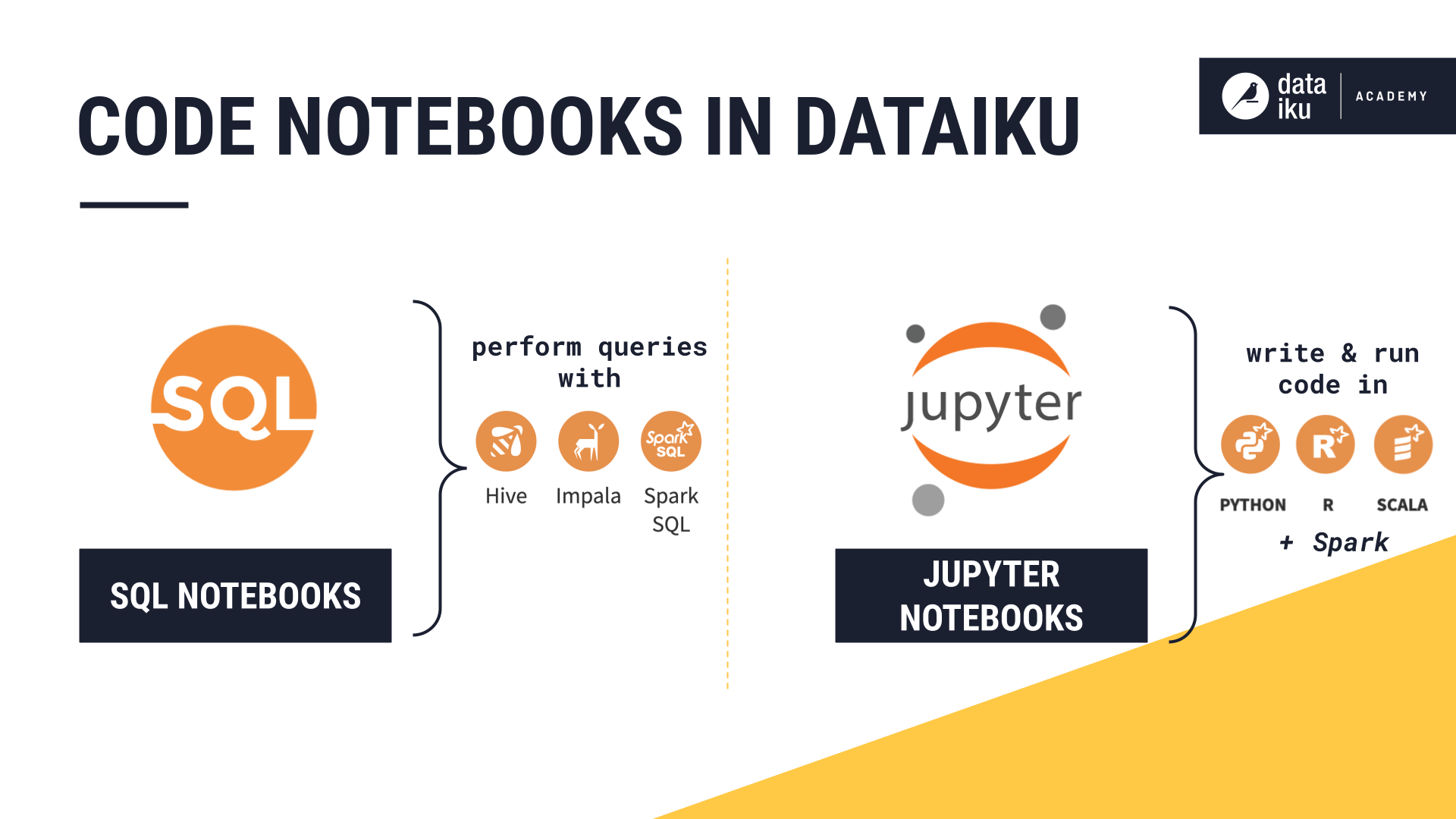

Dataiku features the following code notebooks for exploratory or experimental work:

SQL notebooks for exploring and manipulating tabular data on SQL databases, which can also be used to perform queries on Impala, Hive, and Spark SQL.

Jupyter notebooks to run Python, R, or Scala code, which can also be executed in Spark, interactively.

This article is about the latter: Jupyter notebooks.

Note

This article presents Python notebooks as an example, but the process is similar for R and Scala notebooks as well.

Creating Jupyter notebooks#

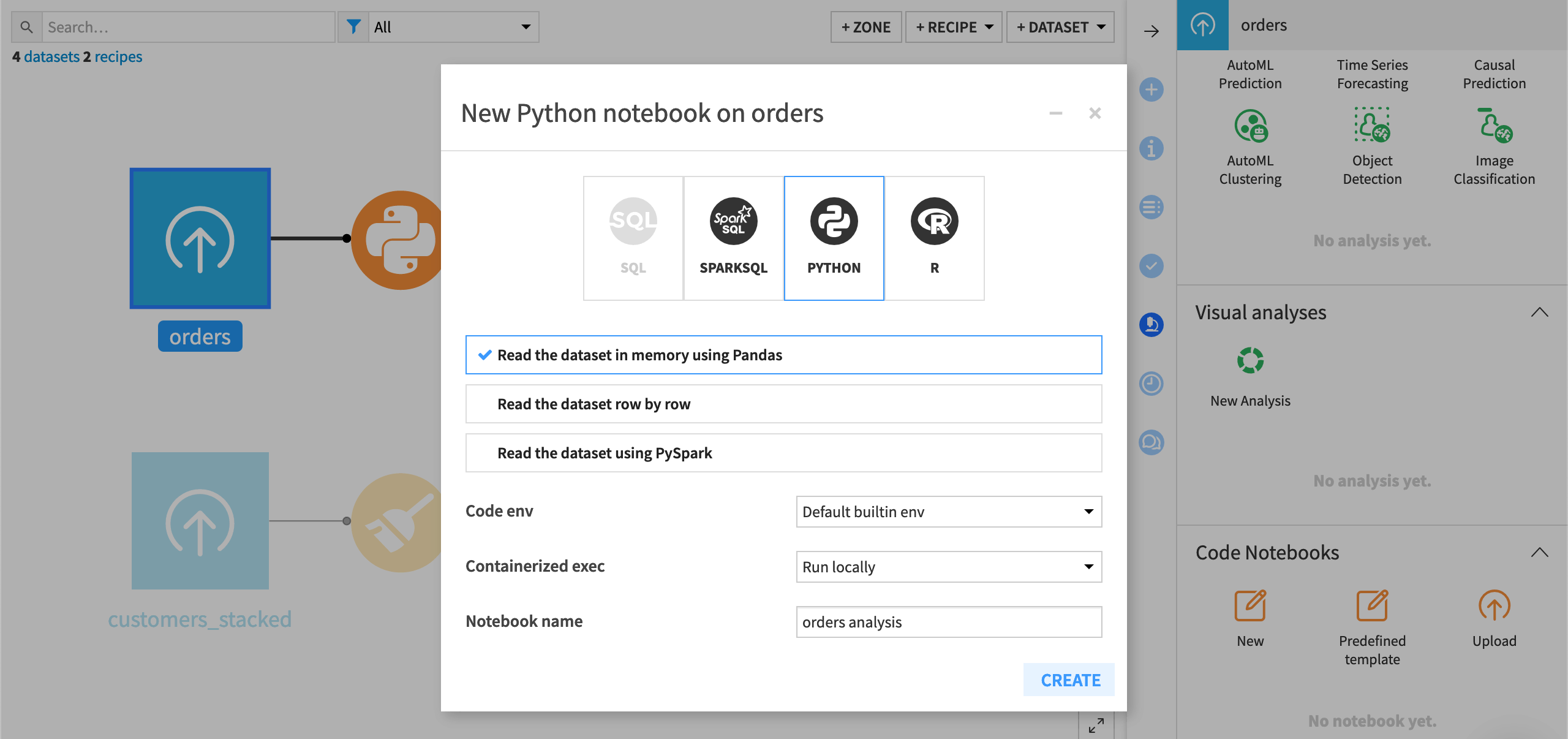

Similar to SQL notebooks, you can create Jupyter notebooks from:

The Notebooks menu of the Code dropdown (

).

The Lab (

) menu of a dataset.

In both cases, Dataiku prompts you to enter a name for the notebook, select a code environment, and choose a starter template depending on your use case. For example, for Python notebooks, Dataiku provides starter templates for reading a dataset in memory and using PySpark.

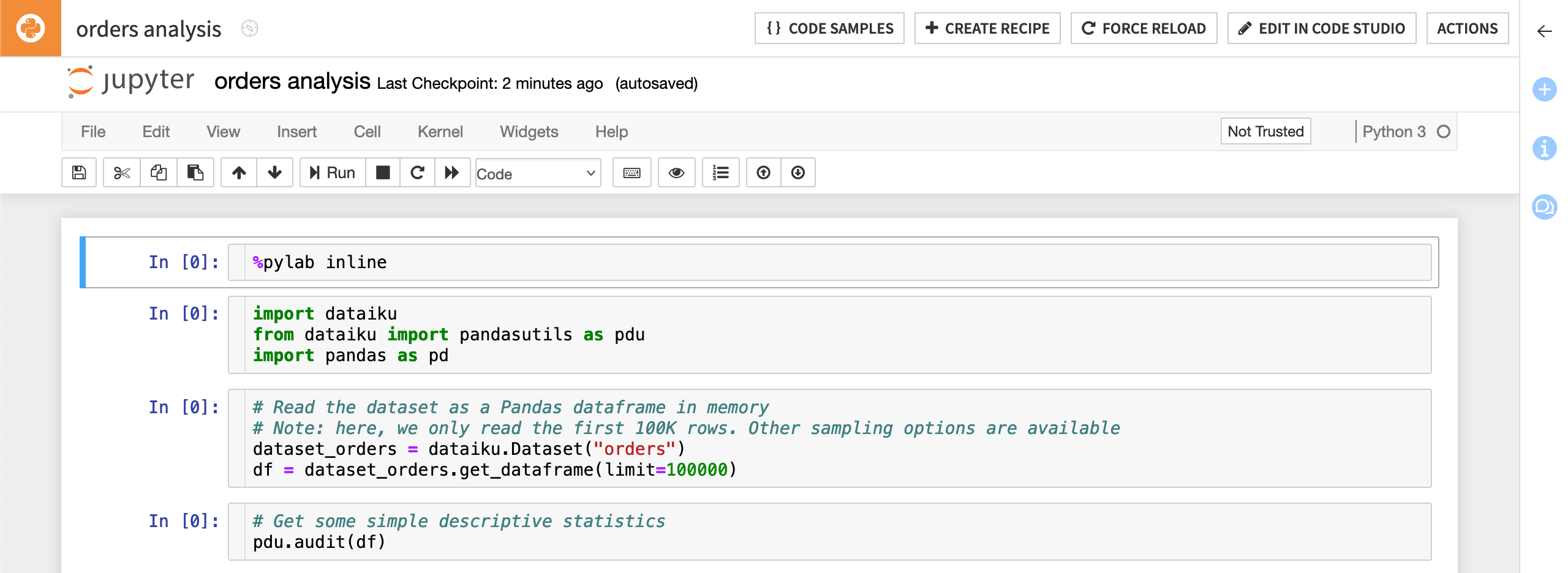

The starter code in Python notebooks generally contains a few import statements for the dataiku and pandas packages, as well as sample code for loading a Dataiku dataset as a DataFrame.

If you create a notebook from the Lab menu of a dataset, the starter code recognizes and loads this specific dataset as a DataFrame.

Once you have created a notebook, you can write your code in the same way that you would if you were using Jupyter notebooks outside of Dataiku. In addition, Dataiku provides multiple code samples to help you get started.

Jupyter kernels#

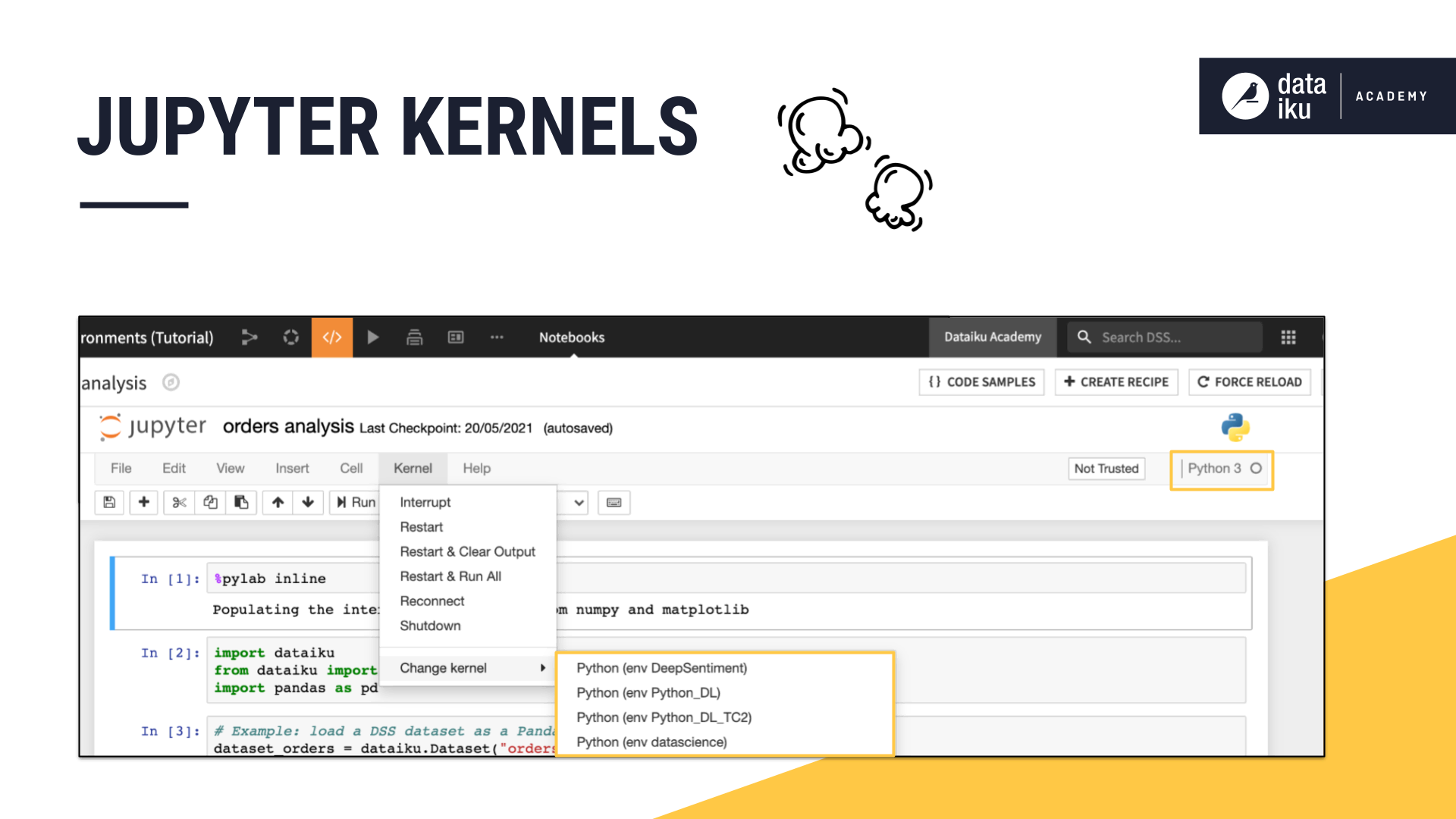

To successfully manage multiple Jupyter notebooks across projects, it’s important to keep the notion of kernels in mind. A Jupyter kernel is a specific process created each time that a user opens a Jupyter notebook and which holds the computational state of the notebook.

The user can also change the kernel, which is equivalent to changing the code environment that the notebook is using.

Note

You can see the name of the kernel (or code environment) that’s currently in use in the upper right corner of the notebook’s navigation bar.

When the user navigates away from the notebook, the kernel remains alive, which ensures that long running computation continues without having to always keep the notebook open. If left unchecked, however, this process can be computationally inefficient.

This is why it’s important to stop kernels when it’s not necessary to keep them alive.

Users can stop their kernels by selecting a Jupyter notebook from the Notebooks menu and clicking the Unload button. This destroys the process running the code and all its state, but it preserves the code itself in the notebook.

Administrators can manage Jupyter notebook kernels in two ways:

Manually: To list and stop kernels, navigate to the Administration menu, then to the Monitoring section, and open Background tasks.

Automatically: Kernels can be automatically killed after a certain number of days by using a Macro.

Both administrators and users with appropriate access can also kill running notebooks programmatically, using the public Dataiku API.

Deploying a Jupyter notebook as a code recipe#

Jupyter notebook allow users to interactively work with python code in the exploratory stage. However, once you are ready to deploy the code into production, it needs to be convertible from a notebook to a code recipe.

Dataiku makes this easy by allowing you to navigate between notebooks and recipes. You can open and edit the contents of a code recipe in a notebook, experiment and test your changes, and then deploy them back to the recipe. This makes for a smooth two-way navigation between experimental and production work.

See also

For a hands-on example of using Jupyter notebooks in a Dataiku project, follow the Quickstart Tutorial of the Developer Guide.

Next steps#

To learn more, you can follow the Tutorial | Code notebooks and recipes or the Concept | Python recipe.

See also

Find more in the reference documentation on Python and R code environments.