Tutorial | Pivot recipe#

The Pivot recipe transforms datasets into pivot tables, which are tables of summary statistics. This operation can also be referred to as reshaping from long to wide format.

Get started#

Objectives#

In this tutorial, you will:

Create a pivot table using the visual Pivot recipe.

Understand how the Pivot recipe computes a schema using a pre-filter step.

Prerequisites#

To complete this tutorial, you’ll need the following:

Dataiku 12.0 or later.

Create the project#

From the Dataiku Design homepage, click + New Project.

Select Learning projects.

Search for and select Pivot Recipe.

If needed, change the folder into which the project will be installed, and click Create.

From the project homepage, click Go to Flow (or type

g+f).

Note

You can also download the starter project from this website and import it as a ZIP file.

You’ll next want to build the Flow.

Click Flow Actions at the bottom right of the Flow.

Click Build all.

Keep the default settings and click Build.

Use case summary#

Let’s say we’re a financial company that uses some credit card data to detect fraudulent transactions.

The project comes with three datasets, described in the table below.

Dataset |

Description |

|---|---|

tx |

Each row is a unique credit card transaction with information such as the card that was used and the merchant where the transaction was made. It also indicates whether the transaction has either been:

|

merchants |

Each row is a unique merchant with information such as the merchant’s location and category. |

cards |

Each row is a unique credit card ID with information such as the card’s activation month or the cardholder’s FICO score (a common measure of creditworthiness in the US). |

Create the Pivot recipe#

In this exercise, we will focus on reshaping the tx_prepared dataset from long to wide format using these bins.

The cardholder_fico_range column in the tx_prepared dataset includes a column that categorizes FICO scores (a US rating system of creditworthiness) into four bins (bad, excellent, fair, and good).

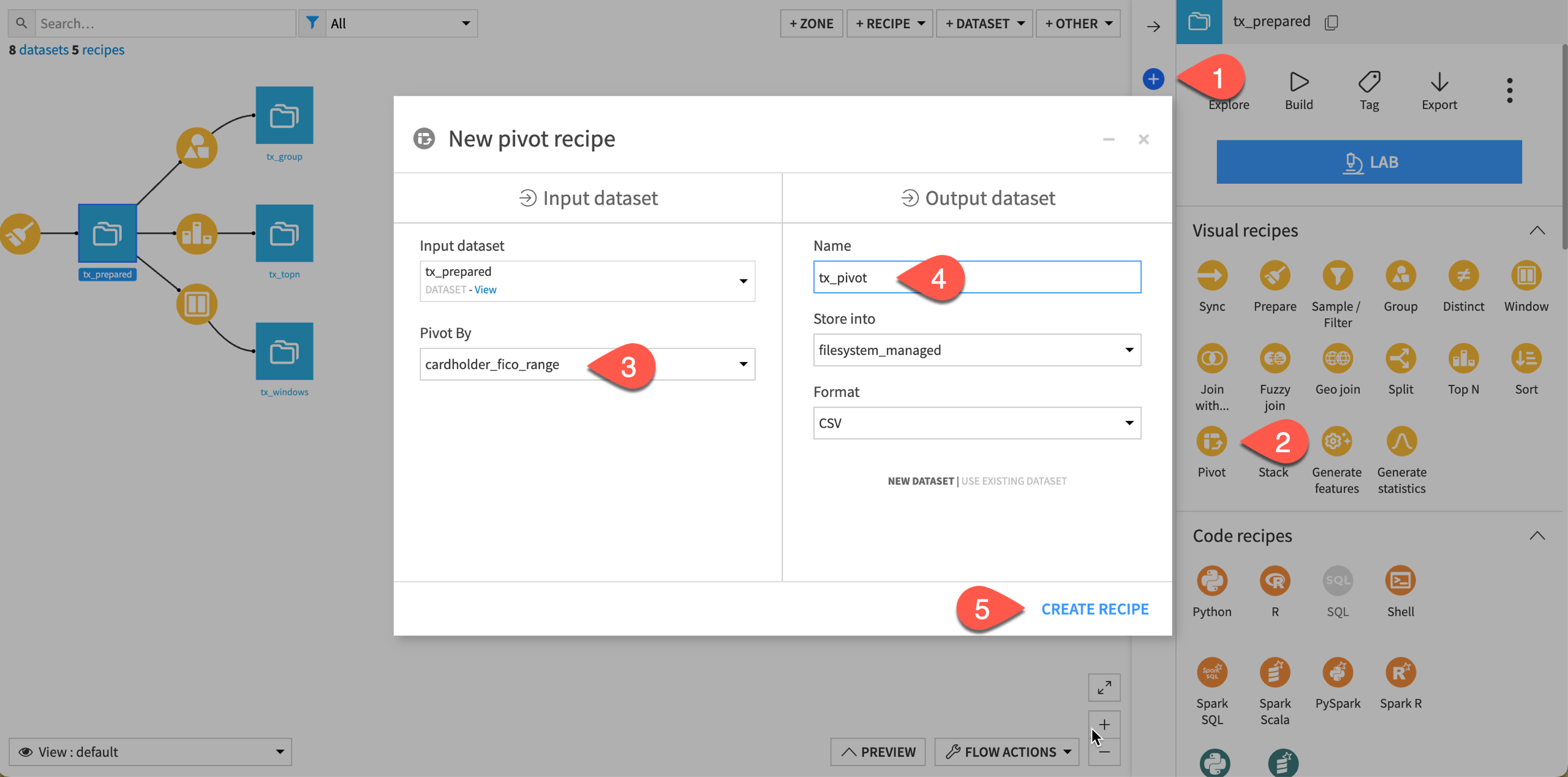

From the Flow, select tx_prepared.

In the Actions tab on the right, select Pivot from the menu of visual recipes.

In the New pivot recipe dialog, choose to pivot by cardholder_fico_range.

Name the output dataset

tx_pivot.Click Create Recipe.

Configure the Pivot step#

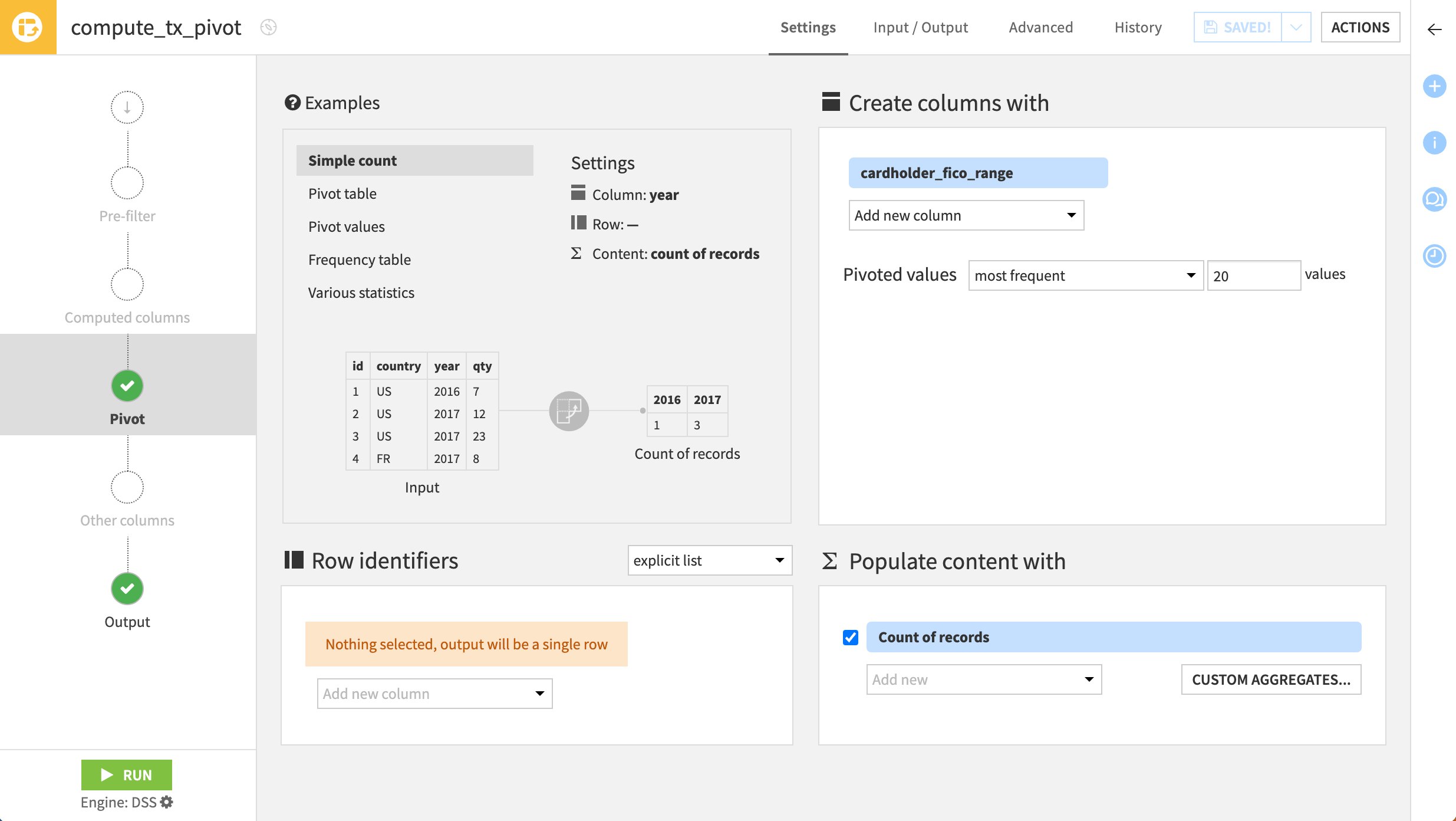

On the Pivot step, cardholder_fico_range already populates the Create columns with field at the top right because of the choice in the recipe’s initial creation.

For each distinct value of this pivot column, the Pivot recipe will create a new column for each aggregation specified in the Populate content with section in the bottom right.

This includes Count of records by default. Before we add any row identifiers or more aggregations, let’s first see how the four unique values in the selected pivot column get transposed into columns.

On the Pivot step, make no changes.

Navigate to the Output step, and observe how the schema couldn’t be inferred. We’ll explore this soon.

Click Run at the bottom left, and open the output dataset when the recipe finishes running.

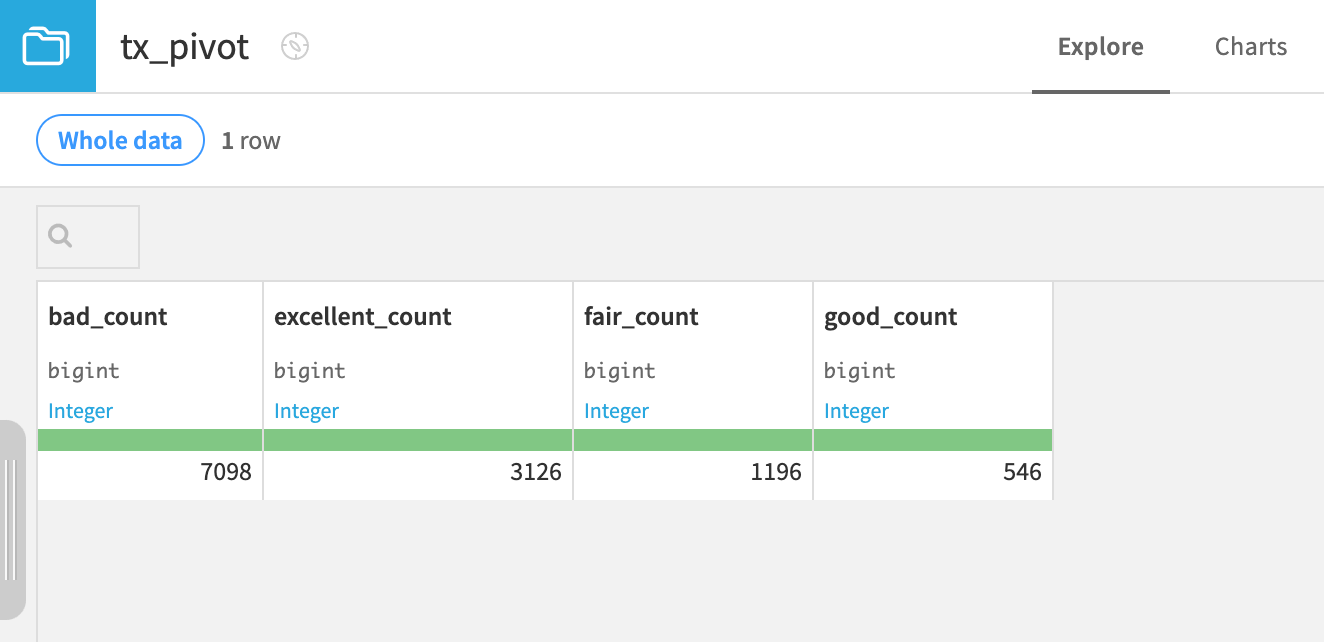

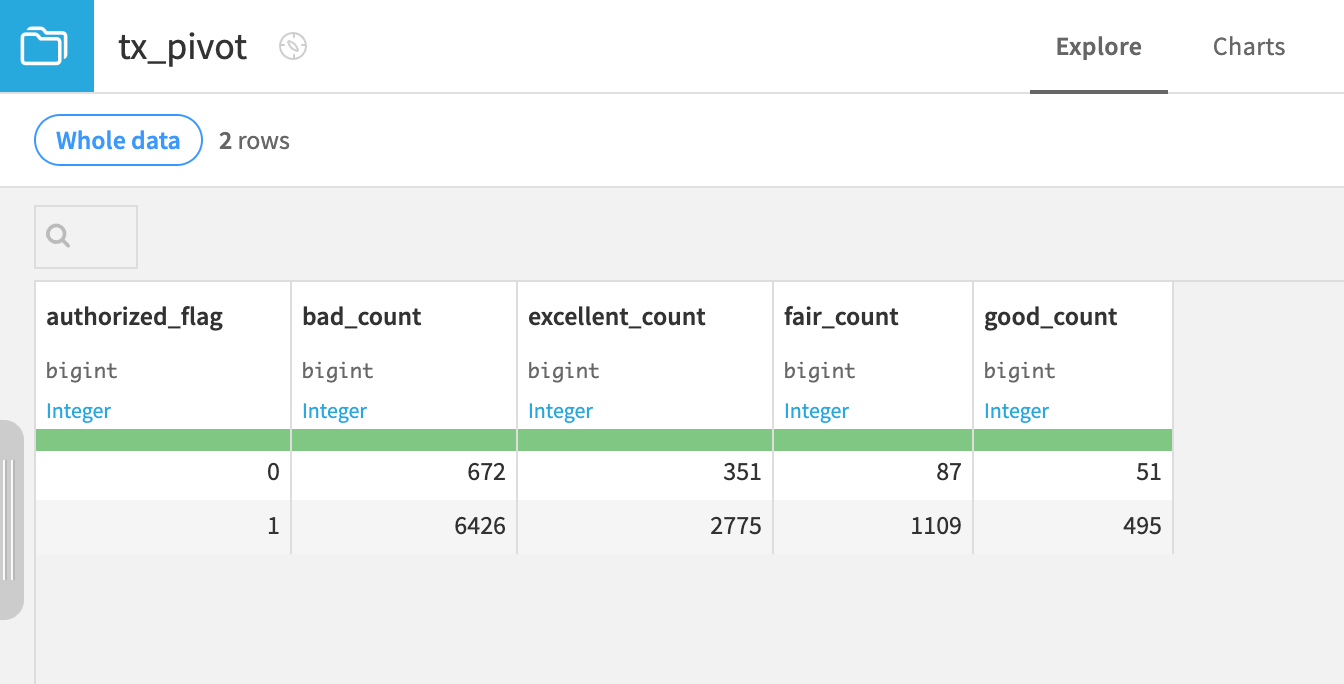

Without selecting any row identifiers, the output to this Pivot recipe is one row. So far, we only have the record counts for each bin. Because we have four values in the pivot column, one aggregation, and no row identifier, the output dataset has four columns.

Add a row identifier#

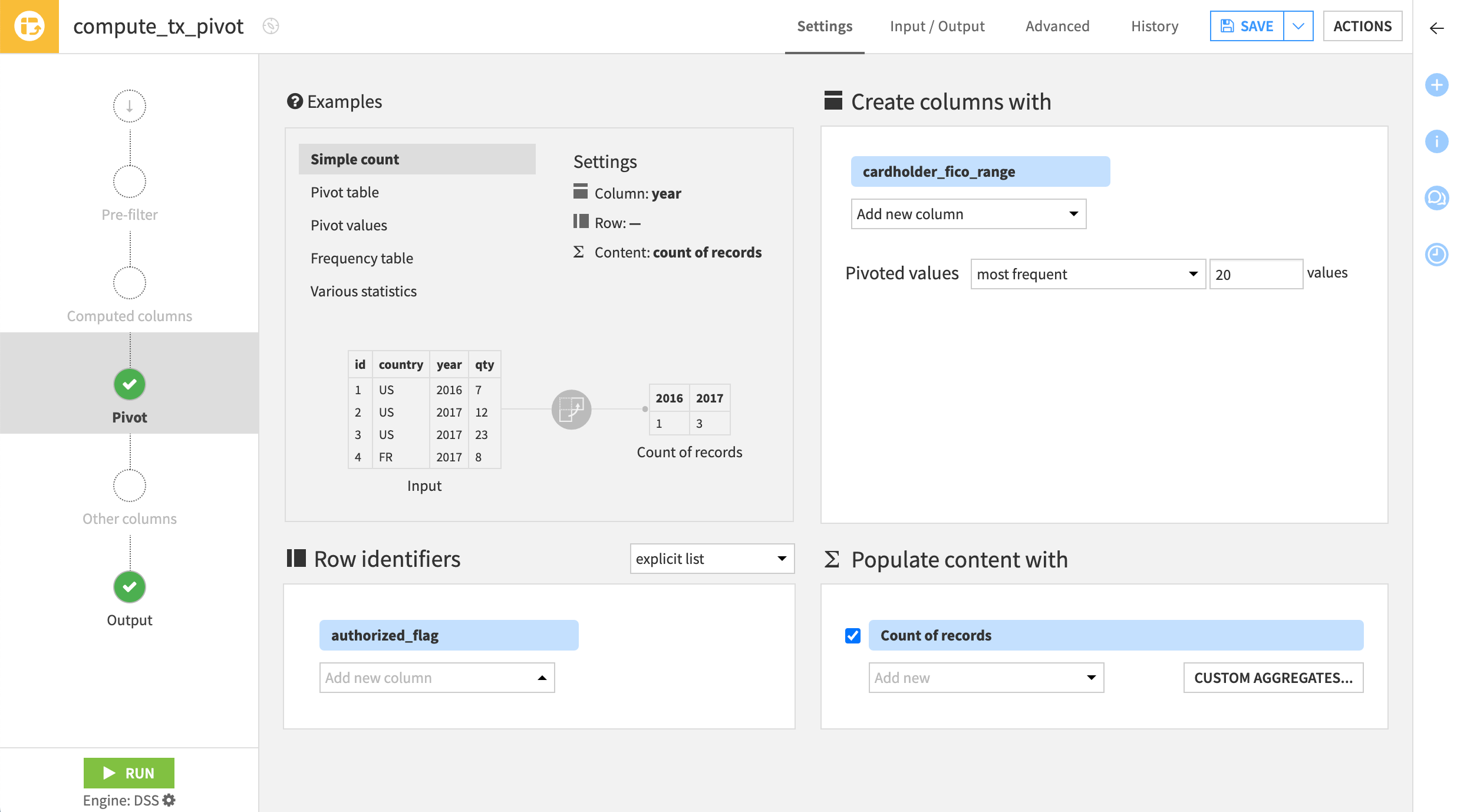

What’s typically more useful is to add row identifiers. These allow us to produce aggregations according to specific groups.

Click Parent Recipe to return to the Pivot step.

On the Pivot step, select authorized_flag as a row identifier.

Click Run at the bottom left, and open the output dataset when the recipe finishes running.

Because authorized_flag has two values, we now have two rows in the output dataset—producing a table of counts by FICO score for both authorized (1) and unauthorized (0) transactions.

Tip

To go further on your own, experiment adding additional row identifiers, such as item_category!

Populate content with aggregations#

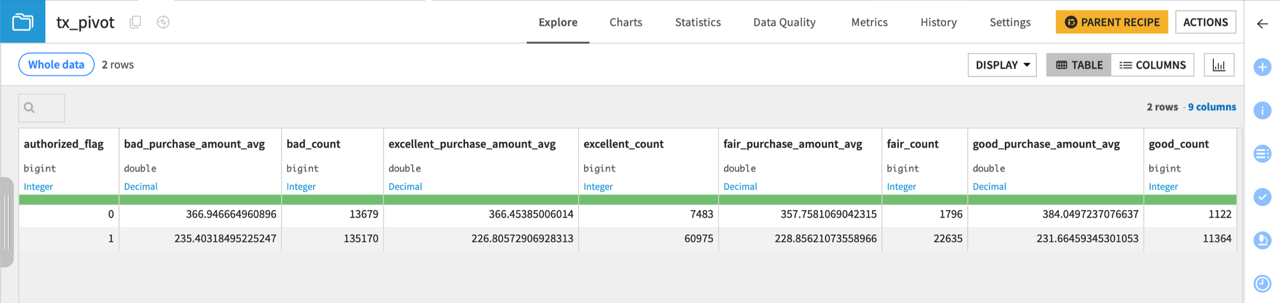

Now, let’s select an additional aggregation: the average purchase_amount of authorized and unauthorized transactions across FICO categories.

Click Parent Recipe to return to the Pivot step.

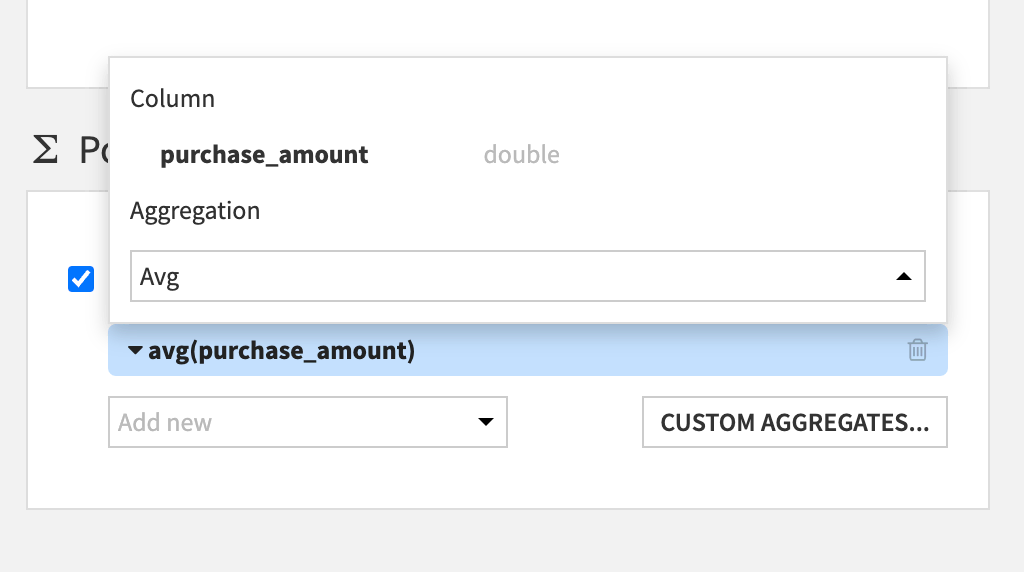

On the Pivot step, in the Populate content with tile, click Add new, and select purchase_amount.

Click on count(purchase_amount) to change the aggregation. Switch to Avg.

Click Run at the bottom left, and open the output dataset when the recipe finishes running.

The addition of one aggregation has added four columns to the output dataset (one for each unique value of the pivot column).

When new values appear#

The Pivot recipe is a two-pass algorithm:

On the first pass, it finds the values for new output columns and generates the dataset schema.

On the second pass, it populates the pivot table.

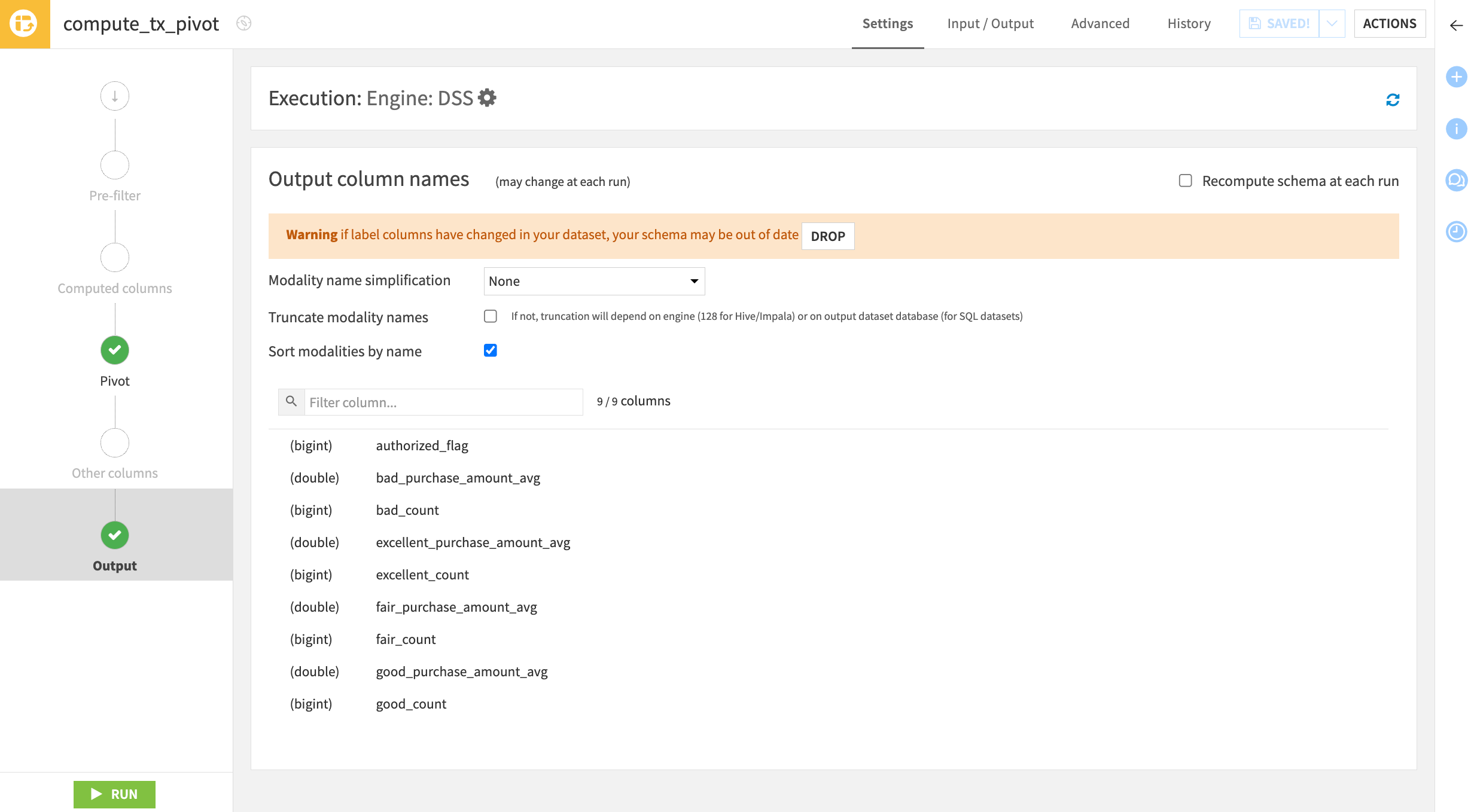

If you return to the Output step, you can see the output column names listed — the same as many other visual recipes.

Click Parent Recipe to return to the Pivot step.

Navigate to the Output step on the left.

What’s different is that in most visual recipes, only the column names of the input dataset impact the schema of the output dataset. In the Pivot recipe, however, the actual values in the pivot column impact the output schema.

In this case, the four distinct values in the cardholder_fico_range column (bad, fair, good, and excellent) determine the number of columns in the output dataset. But what happens if another FICO range category — such as extraordinary — is added?

By default, rebuilding the dataset skips the first pass described above. It doesn’t look for any new values of the pivot column and just re-populates the table (the second pass).

Two options exist for updating the schema from the Output step of the Pivot recipe:

Manually drop the existing schema by clicking the Drop button.

Select Recompute schema at each run to automatically update every time the tx_pivot dataset is built.

Caution

With this second method, an updated schema can break the Flow if the following recipes expect the old schema.

Apply a pre-filter to the Pivot recipe#

These options will become clearer through the use of a pre-filter. When using the Pivot recipe, if there are too many distinct values in the column to pivot by, the number of columns in the output dataset can grow out of control.

Caution

By default, the Pivot recipe pivots only the 20 most frequently occurring values. The Pivot recipe can create a maximum of up to 200 columns.

Let’s define a pre-filter to keep only rows where the card holder’s FICO range is bad or fair. Then, the first pass of the Pivot recipe’s algorithm will only find two instead of four values.

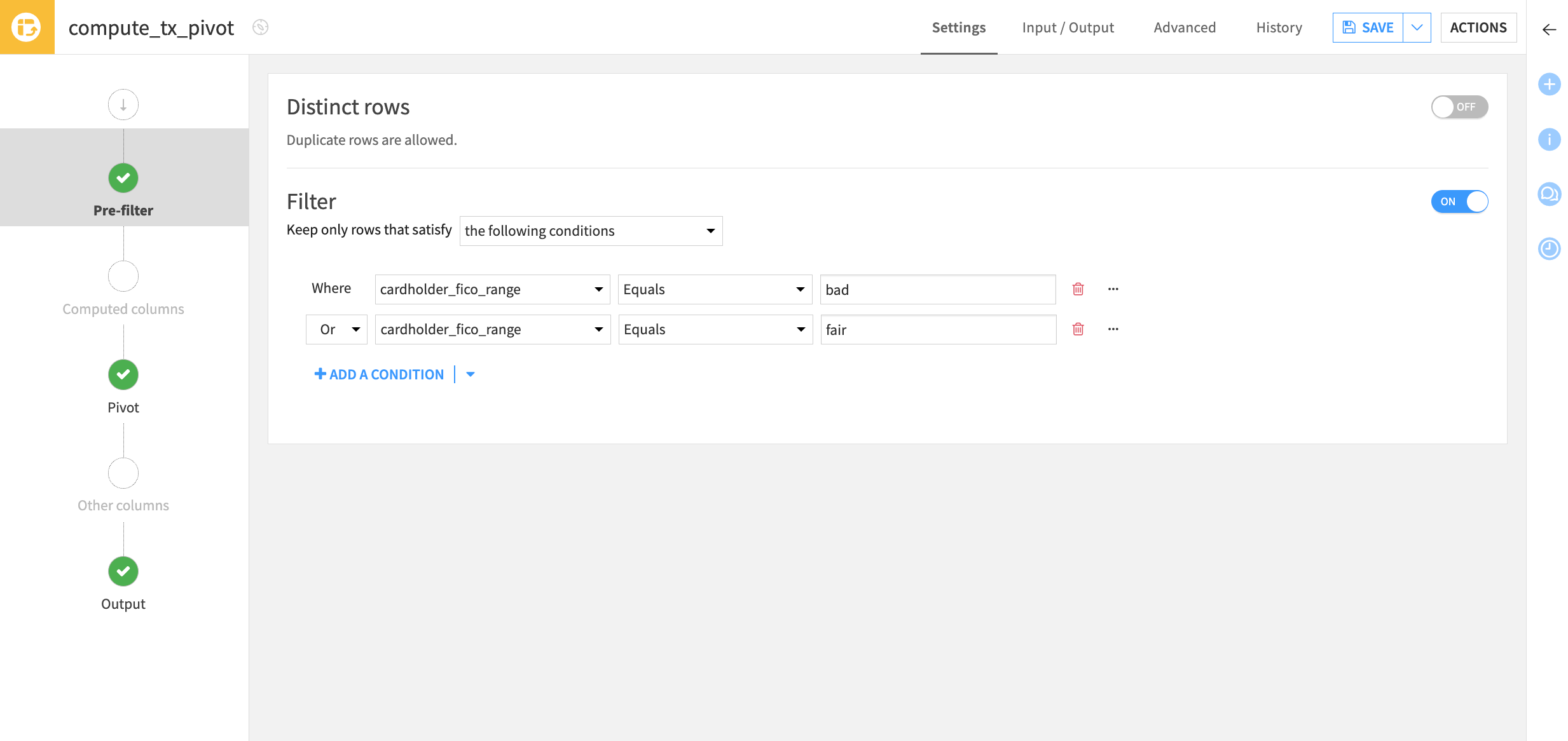

Navigate to the Pre-filter step of the Pivot recipe.

Toggle the Filter On, and choose to keep rows that satisfy the condition:

cardholder_fico_range EqualsbadOrcardholder_fico_range Equalsfair

Before running the recipe, navigate to the Output step.

Notice the warning that if label columns have changed, then the schema may be out of date. After setting the pre-filter, the dataset will have only

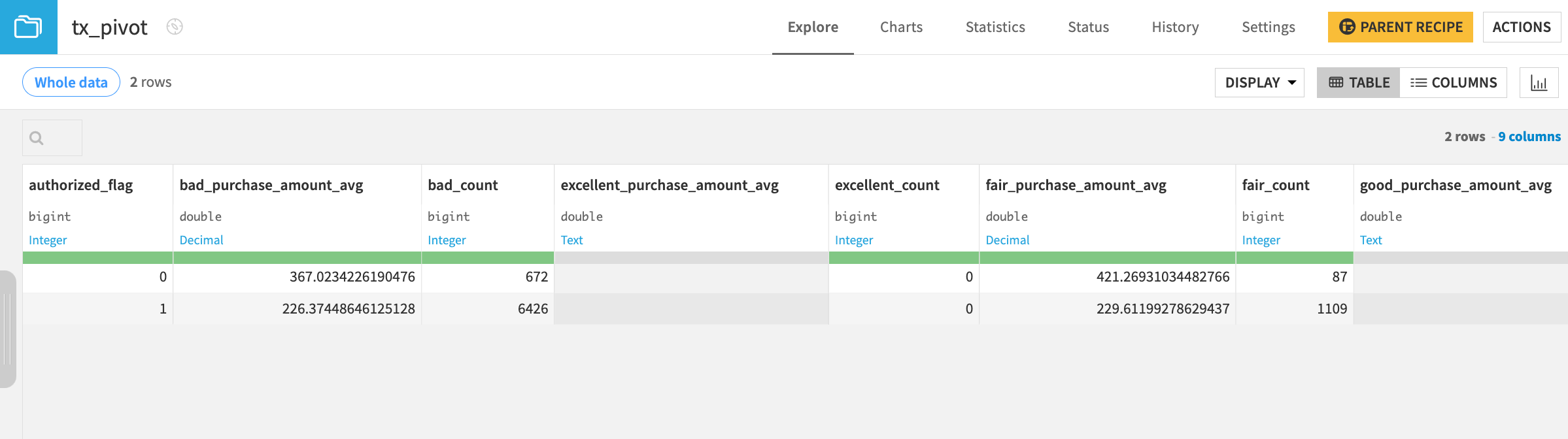

badandfairvalues in the pivot column. However, thegoodandexcellentcolumns remain in the schema.Click Run to execute the recipe again without dropping the existing schema or recomputing it. Then, open the output dataset.

The good and excellent columns remain in the output dataset, but the values are empty. Often this may be a fine outcome. The Flow didn’t break!

Drop the existing schema of a Pivot recipe#

To remove the empty columns, let’s drop the existing schema before re-running the recipe.

Click Parent Recipe to return to the Pivot step.

Navigate to the Output step.

Click on the Drop button to manually update the schema and Confirm.

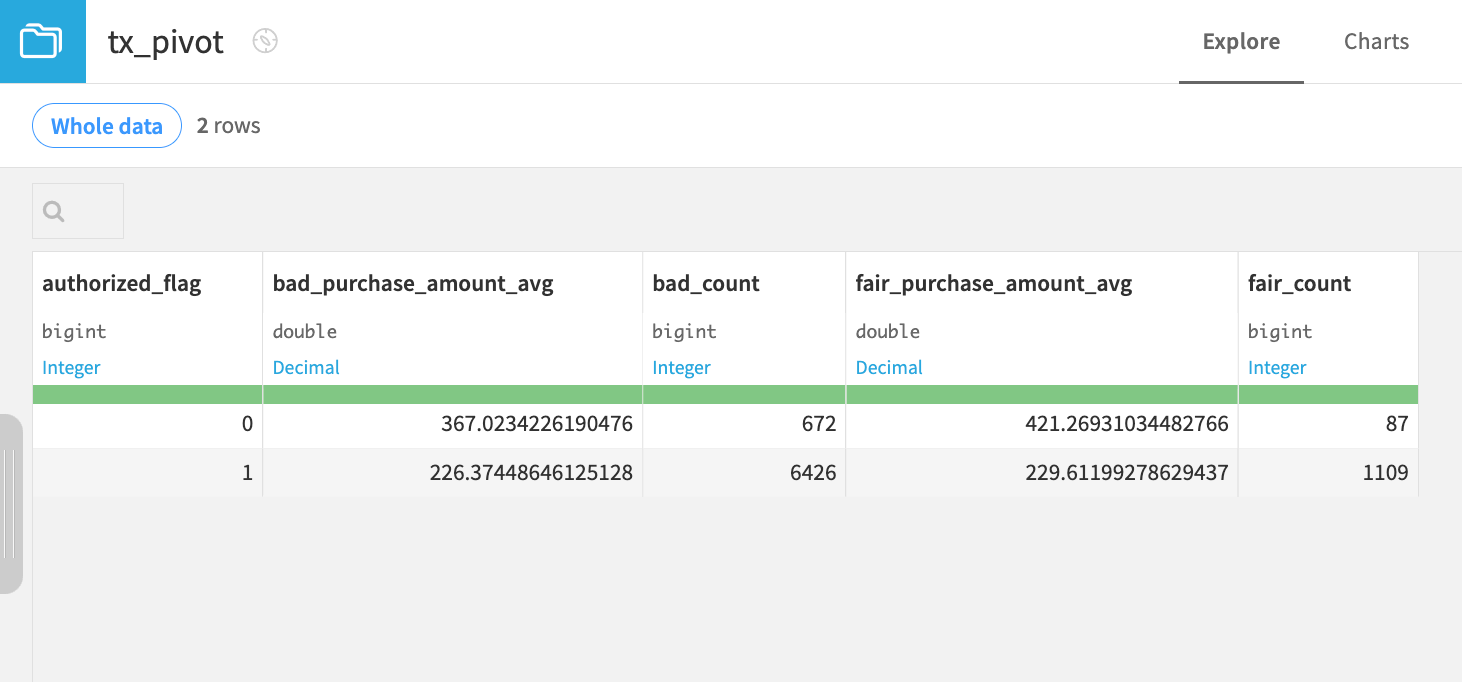

Click Run again, and open the output dataset.

The schema of the output dataset now only contains columns for the bad and fair values of the cardholder_fico_range column. After dropping the good and excellent columns, if there were further downstream datasets, we’d need to check for incompatible schemas.

Next steps#

In this tutorial, we learned how to create pivot tables to report summary statistics using the Pivot recipe in Dataiku. We also learned how to apply a pre-filter in a Pivot recipe to understand how the output schema is computed.

See also

For more information on this recipe, see also the Pivot recipe article in the reference documentation.

There are still many other visual recipes. Try the Distinct recipe next!