Tutorial | Repartition a non-partitioned dataset

Get started

In Dataiku, partitioning refers to the splitting of a dataset along meaningful dimensions. Each partition contains a subset of the dataset. With partitioning, you can process different portions of a dataset independently and run operations on that dataset incrementally.

We recommend that you have a good understanding of the two partitioning models of Dataiku before reading this article:

File-based partitioning

Column-based partitioning for non-file datasets

There is one case that isn’t covered by these two models: when you have a files-based dataset (that is, a filesystem, HDFS, S3, or uploaded dataset) where the files don’t map directly to partitions.

For example, you might have a collection of files containing unordered timestamped data and want to partition on the date. In the regular files-based partitioning model, each file must belong to a single partition, which isn’t the case here.

To solve this, you have to use the partition redispatch feature of Dataiku. This feature allows you to go from a non-partitioned dataset to a partitioned dataset. Each row is assigned to a partition dynamically based on columns.

Note

Unlike regular builds, when you build a redispatched dataset, you build all partitions at once. Normally in Dataiku, each activity builds a single dataset partition.
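To make the idea concrete, here is a minimal sketch of redispatching in plain Python. It isn't how Dataiku implements the feature: the `redispatch` function, the sample rows, and the `year` column are all hypothetical. It only illustrates that the partition identifier is read from each row, so a single pass over the input produces every partition.

```python
from collections import defaultdict


def redispatch(rows, dimension):
    """Group rows into partitions keyed by the value of the `dimension` column."""
    partitions = defaultdict(list)
    for row in rows:
        # The partition identifier comes from the row itself,
        # not from the file the row was read from.
        partitions[str(row[dimension])].append(row)
    return dict(partitions)


# Hypothetical sample rows (not taken from the tutorial data).
rows = [
    {"id": 1, "year": 2004},
    {"id": 2, "year": 1996},
    {"id": 3, "year": 2004},
]

# One pass over the input yields all partitions at once.
partitions = redispatch(rows, "year")
print(sorted(partitions))
```

Because every row can land in a different partition, there is no way to build just one partition without reading the whole input, which is why a redispatch build produces all partitions together.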

Objectives

In this tutorial, you will partition a non-partitioned dataset using the partition redispatch feature in two ways:

Via a Sync recipe (on an existing column)

Via a Prepare recipe (on a new column)

We’ll be using an extract from the “Blue book for Bulldozers” Kaggle competition data. It’s a dataset where each row represents the sale of a used bulldozer.

Create the project

From the Dataiku Design homepage, click + New Project.

Select Learning projects.

Search for and select Redispatch partitions.

If needed, change the folder into which the project will be installed, and click Create.

From the project homepage, click Go to Flow (or type g + f).

Note

You can also download the starter project from this website and import it as a ZIP file.

Redispatch by year (Sync recipe)

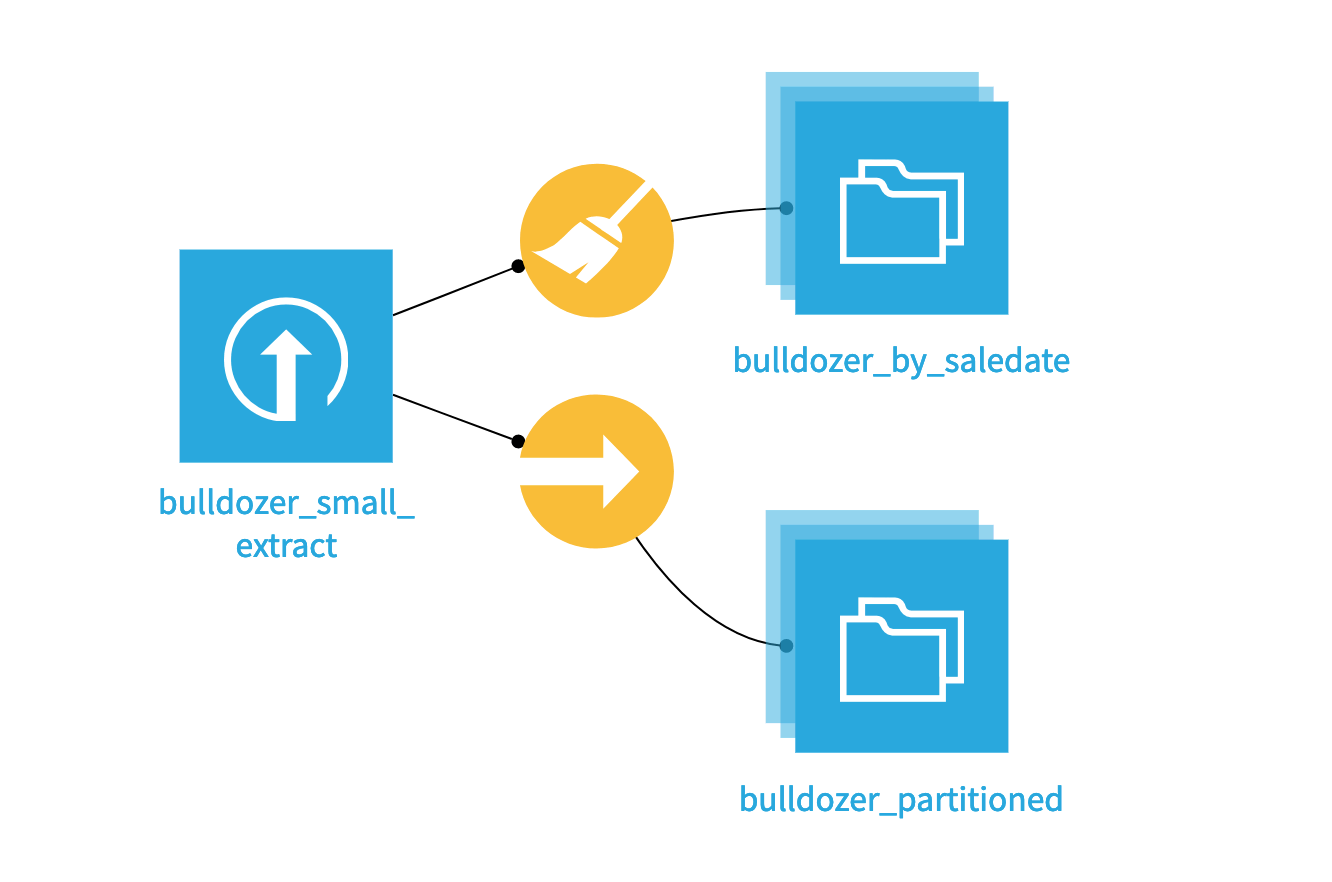

The source dataset of bulldozers isn’t partitioned (it’s one CSV file). To redispatch it, let’s first use a Sync recipe, enabling a specific repartitioning mode.

Create the Sync recipe

From the Flow, select the bulldozer_small_extract dataset, and click on Sync from the visual recipes section of the Actions bar.

Name the output dataset bulldozer_partitioned.

Click Create Recipe, accepting the default storage location.

The name of the output dataset might seem strange at this point because the dataset is still unpartitioned. Since the project doesn’t contain any partitioning yet, Dataiku can’t suggest an existing partitioning scheme. We’ll fix that shortly!

Partition the output dataset by year

Let’s partition the yet-to-be-built output dataset.

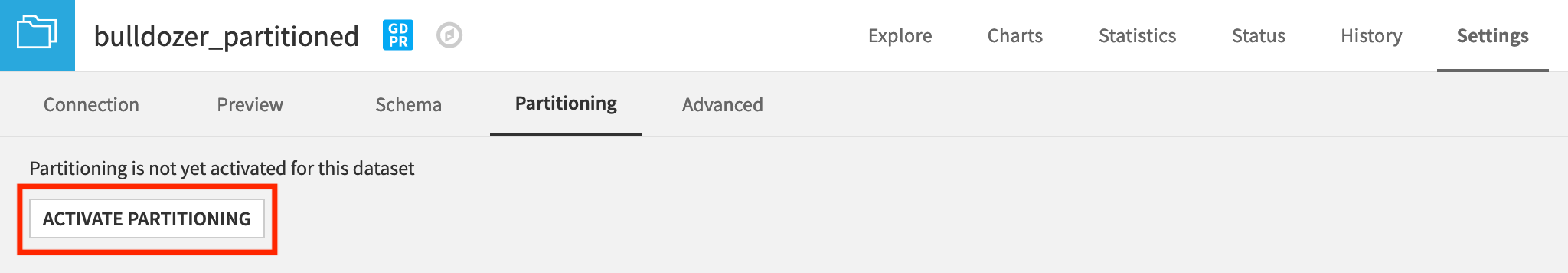

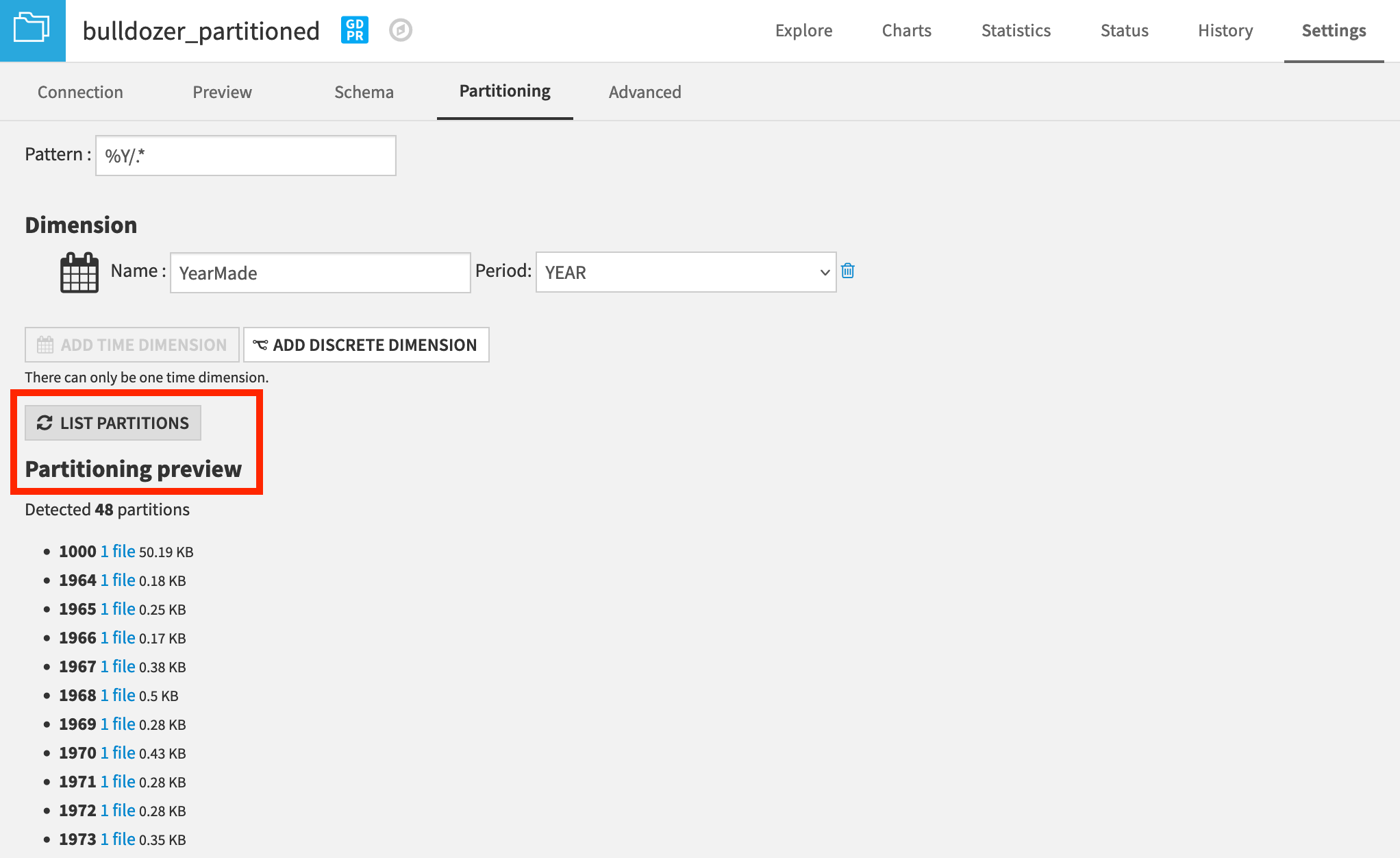

Navigate to the Settings tab of the bulldozer_partitioned dataset.

In the Partitioning subtab, click Activate Partitioning.

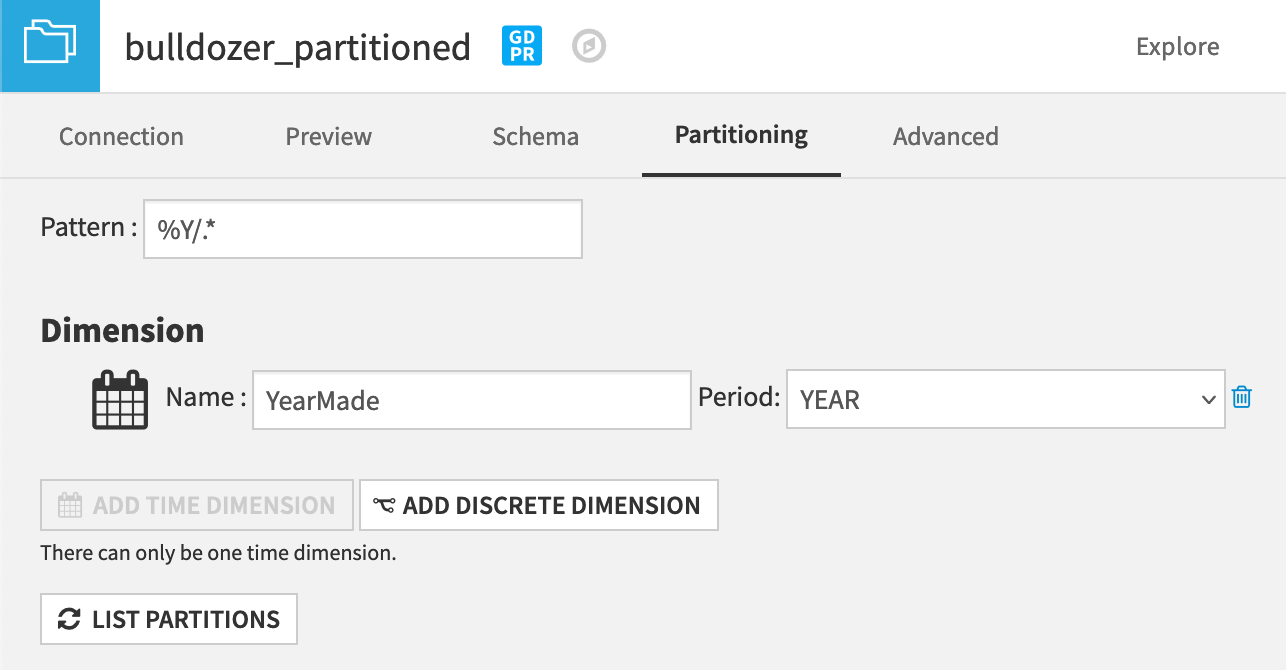

Let’s add a time-based (year) dimension.

Click Add Time Dimension.

Provide YearMade as the dimension name. YEAR should already be selected as the period.

Click on the Click to insert in pattern link to automatically prepare the file paths pattern.

Save the settings, and return to the parent Sync recipe.

Warning

The name of the partitioning dimension MUST match the name of the column in the source dataset. In the source dataset, the column is called YearMade, so that’s how we name the partitioning dimension.

Note

The pattern here was %Y/.*. You can learn more about defining patterns for file-based partitioned datasets in the reference documentation.
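As a rough illustration of how such a pattern relates file paths to partitions, the sketch below converts a pattern into a regular expression and extracts a partition identifier from a path. It's an approximation under stated assumptions, not Dataiku's actual matching logic: it assumes `%Y`, `%M`, and `%D` stand for a four-digit year, a two-digit month, and a two-digit day, and the helper names and file paths are hypothetical.

```python
import re

# Assumed meaning of the time specifiers (see the reference documentation
# for the authoritative definitions).
SPECIFIERS = {
    "%Y": r"(?P<year>\d{4})",
    "%M": r"(?P<month>\d{2})",
    "%D": r"(?P<day>\d{2})",
}


def pattern_to_regex(pattern):
    """Translate a partitioning pattern such as '%Y/.*' into a compiled regex."""
    regex = pattern
    for specifier, group in SPECIFIERS.items():
        regex = regex.replace(specifier, group)
    return re.compile(regex)


def partition_of(path, pattern):
    """Return the partition identifier encoded in `path`, or None if no match."""
    match = pattern_to_regex(pattern).fullmatch(path)
    if match is None:
        return None
    found = match.groupdict()
    parts = [found.get(name) for name in ("year", "month", "day")]
    return "-".join(part for part in parts if part is not None)


# Hypothetical file paths, relative to the dataset root.
print(partition_of("2003/part-0.csv", "%Y/.*"))
print(partition_of("misc/part-0.csv", "%Y/.*"))
```

With the `%Y/.*` pattern, any file under a four-digit year folder belongs to that year's partition, whatever the file itself is called.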

Activate redispatch mode

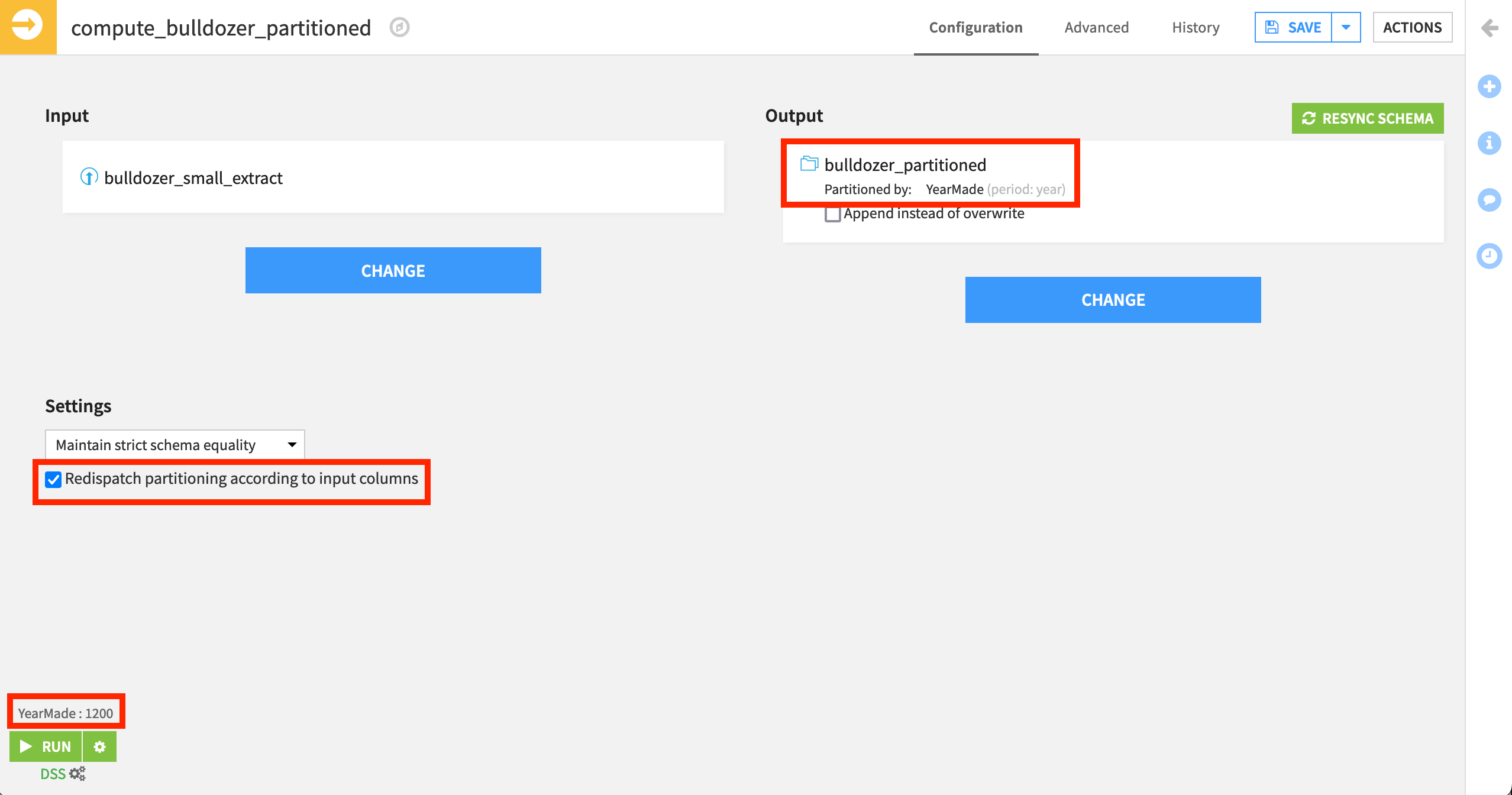

Now that our output dataset is partitioned, a new option has appeared in the Sync recipe: Redispatch partitioning according to input columns.

This is the option that we want: it tells Dataiku to dispatch each row of the input dataset to the partition matching its column value, and that a single build must actually build ALL partitions.

On the Configuration tab of the Sync recipe, check the option to Redispatch partitioning according to input columns.

You’ll notice that the Run button is still grayed out. Even though the recipe will build ALL partitions, you must still select one because that’s the “normal” way of building.

Click on the Click to select partitions link, and enter anything for YearMade (for example: 1200, even though there is no bulldozer where YearMade=1200).

Click Run.

You should see a warning asking you to update the schema. For now, accept the update.

Explore partitions

Once the job is complete, we can explore the output dataset.

Within the Settings tab of the bulldozer_partitioned dataset, navigate to the Partitioning subtab.

Click List partitions.

Dataiku now detects 48 partitions. Each partition contains only the bulldozers of a given year.

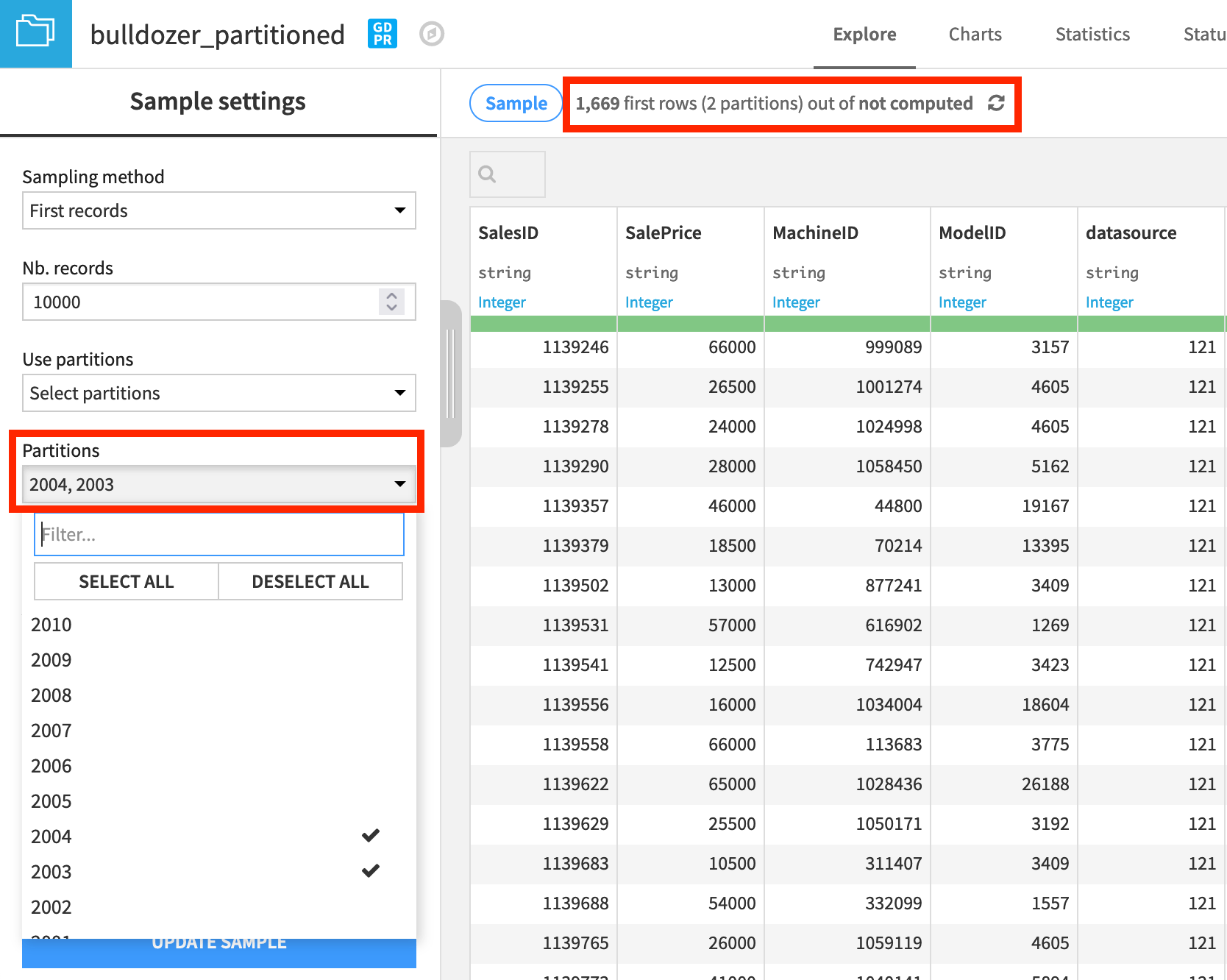

In the Explore tab, click on the left to open the sample configuration.

Under Use partitions, click Select partitions.

Click to retrieve the full list of partitions.

Select 2003 and 2004 for demonstration.

Click Update Sample, and note how the sample size reduces.

Redispatch by day (Prepare recipe)

We have successfully redispatched an unpartitioned and unsegregated dataset, based on the values in one column.

What if we need to redispatch, but not based directly on the values in an existing column? What if we need some preprocessing? The good news is, you can! Just like the Sync recipe, the Prepare recipe allows you to redispatch. Let’s redispatch our dataset by the day on which the sale was made.

Prepare the partition identifier column

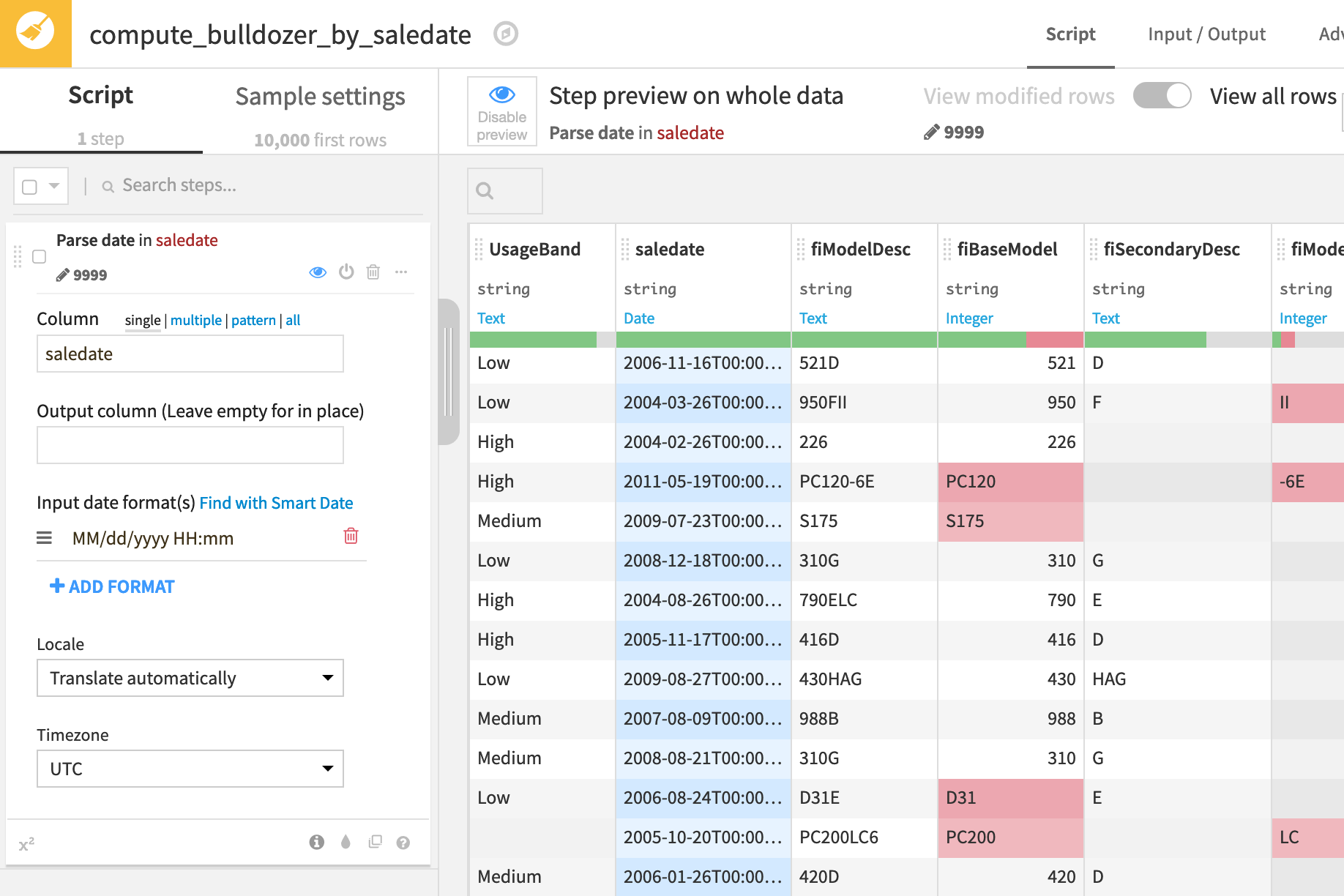

The saledate column contains the date of sale, but it’s not in the format we want. Let’s fix this with a Prepare recipe.

From the bulldozer_small_extract dataset, create a new Prepare recipe.

Name the output dataset bulldozer_by_saledate.

From the saledate column dropdown, add the suggested Parse date processor.

Click Use Date Format to accept the first suggested format.

Leave the output column empty to parse the column in place.

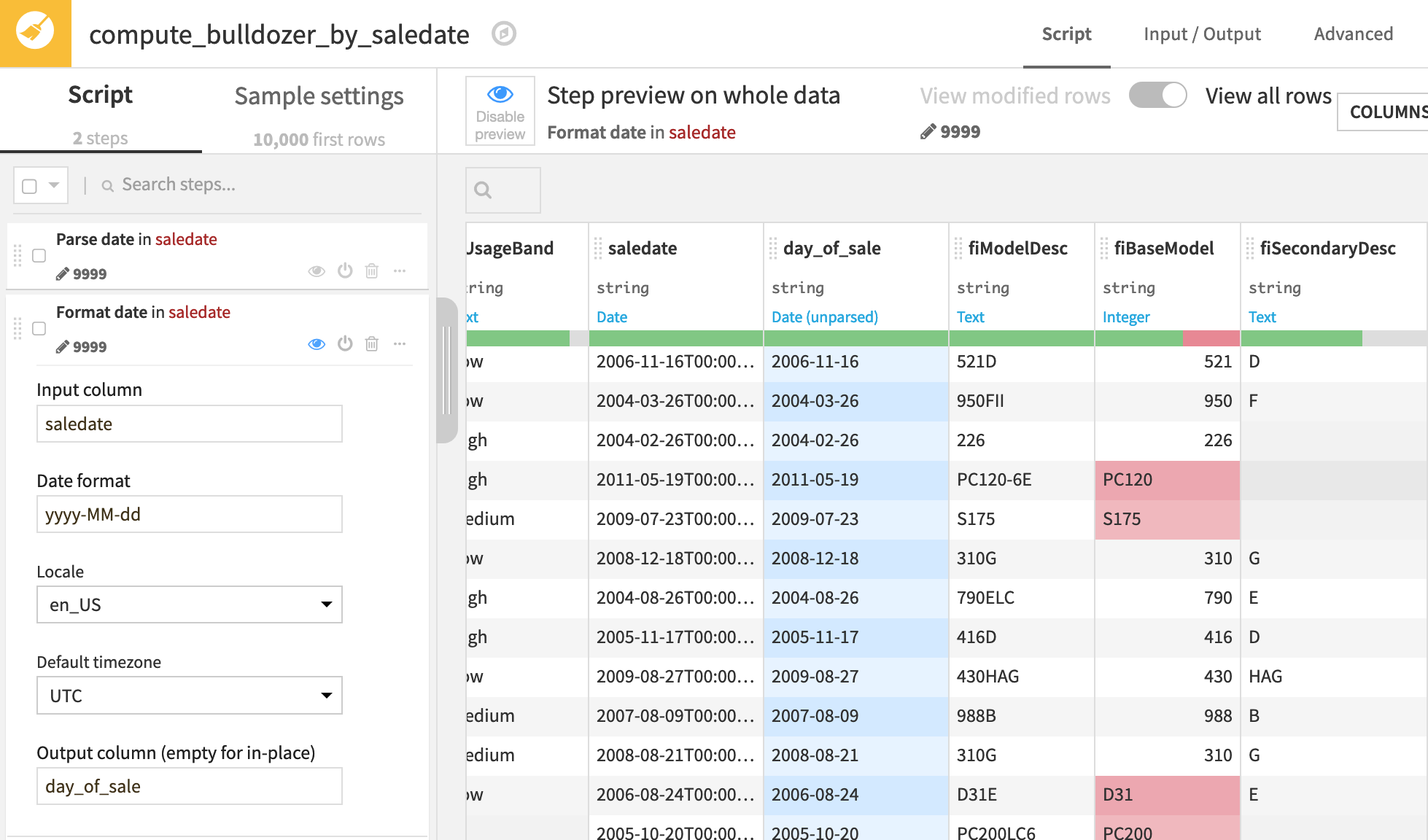

After this step, saledate is now a timestamp column. For our partitioning example, we want a partition identifier in the form yyyy-MM-dd.

Click on the header of the saledate column, and select More actions > Reformat date.

Reduce the date format to yyyy-MM-dd.

Name the output column day_of_sale.

We now have the column we want to use as the partition identifier. This is one advantage of using a Prepare recipe instead of a Sync recipe!
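For readers who think in code, the two Prepare steps (parse the date, then reformat it) amount to something like the sketch below. The input format `%m/%d/%Y %H:%M` and the sample value are assumptions made for illustration; check the format Dataiku suggests for your own saledate column. Note that Python's format codes differ from the yyyy-MM-dd notation used in the Prepare recipe.

```python
from datetime import datetime


def day_of_sale(raw, input_format="%m/%d/%Y %H:%M"):
    """Parse a raw sale date and return it as a yyyy-MM-dd partition identifier.

    `input_format` is an assumed format, not one confirmed by the tutorial.
    """
    parsed = datetime.strptime(raw, input_format)
    # "%Y-%m-%d" is the Python equivalent of the yyyy-MM-dd date format.
    return parsed.strftime("%Y-%m-%d")


# Hypothetical raw value.
print(day_of_sale("11/16/2006 0:00"))  # 2006-11-16
```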

Let’s save our recipe, and partition the output dataset by the new identifier column.

Partition the dataset, activate, and run

Now partition the bulldozer_by_saledate dataset by the day_of_sale column, and adjust the Prepare recipe to redispatch those partitions.

Try this on your own, and refer to the detailed notes below if necessary.

Detailed steps

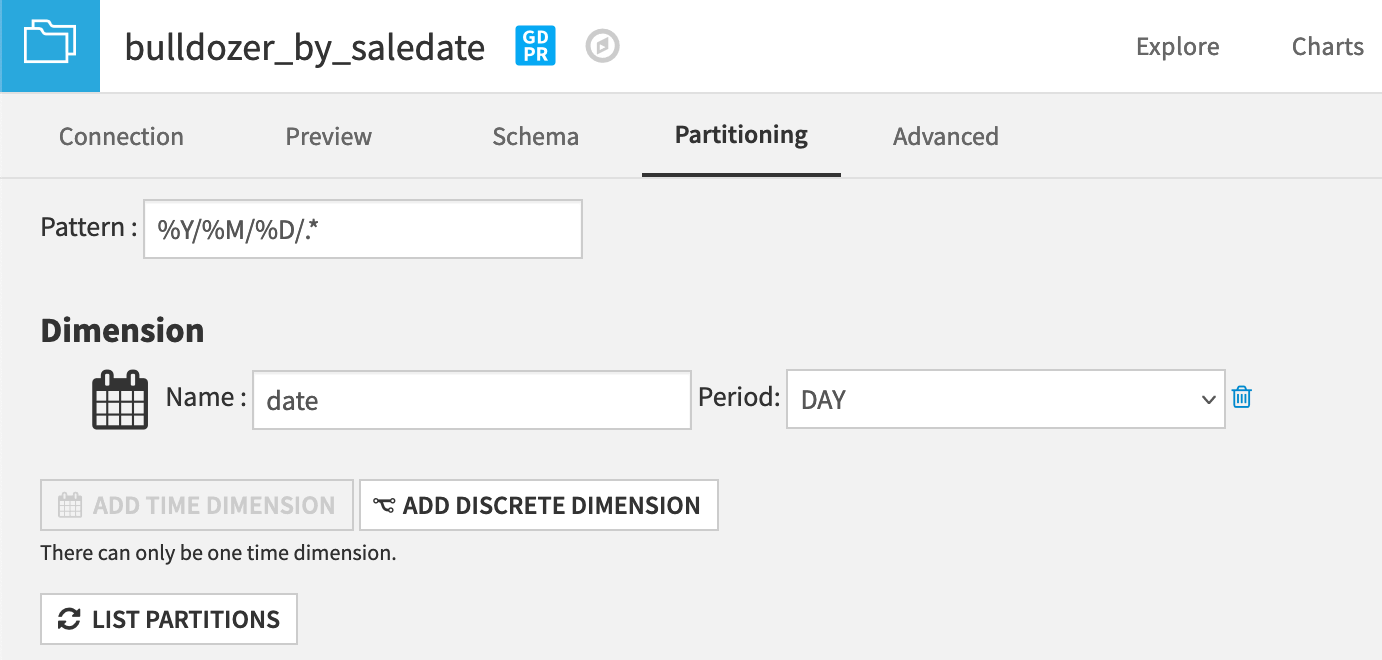

From the Settings tab of the bulldozer_by_saledate dataset, navigate to the Partitioning subtab.

Click Activate Partitioning.

Click Add Time Dimension.

Provide day_of_sale as the name of the partitioning dimension and DAY as the period.

Click to insert the pattern %Y/%M/%D/.*.

Click Save.

Return to the parent Prepare recipe.

In the Advanced tab, click to enable the partition redispatch option.

From the Script tab, run the recipe.

Note

Once again, the actual value that appears for day_of_sale doesn’t matter when redispatching partitions.

After running the Prepare recipe, you’ll see in the Status tab of the output dataset that you now have 588 partitions!
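One way to picture where that count comes from: each distinct day_of_sale value becomes its own partition, and the day pattern gives each partition a folder. The sketch below is only an illustration under assumptions. The helper names and sample values are hypothetical, and the folder layout Dataiku actually writes may differ in its details.

```python
def partition_path(partition_id, pattern="%Y/%M/%D/.*"):
    """Map a day identifier such as '2006-11-16' to a folder prefix."""
    year, month, day = partition_id.split("-")
    # Drop the trailing ".*", which only matches file names inside the folder.
    prefix = pattern[: -len(".*")] if pattern.endswith(".*") else pattern
    return prefix.replace("%Y", year).replace("%M", month).replace("%D", day)


def partition_folders(days, pattern="%Y/%M/%D/.*"):
    """Return one folder per distinct day, in sorted order."""
    return sorted({partition_path(day, pattern) for day in days})


# Hypothetical day_of_sale values; duplicates collapse into one partition.
days = ["2006-11-16", "2004-03-26", "2006-11-16"]
folders = partition_folders(days)
print(folders)  # ['2004/03/26/', '2006/11/16/']
```

The number of partitions therefore equals the number of distinct sale days in the data, not the number of rows or input files.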

Recap

We’ve demonstrated two methods for using the partition redispatch feature to create a partitioned dataset from a non-partitioned input.

By using a Prepare recipe rather than a Sync recipe, we’ve been able to create our partitioning dimension column, rather than being restricted to the columns already present in the dataset.

Redispatch partitioning is a powerful feature that allows you to deal with datasets that haven’t been designed in a way that makes them partitionable, and transform them into partitioned datasets.

Redispatch partitioning is also available on non-file sources and targets.

The redispatch recipe is automatically rerun each time you use a Recursive smart build, if the input data has changed (provided that the input data is files-based).