Concept | Labeling recipe

The Labeling recipe provides a structured, collaborative workflow to label tabular, text, or image data for use in training a machine learning model. For generative AI models, the recipe can also keep humans in the loop by having them review the model’s responses for accuracy.

See also

For more information on labeling, see the Labeling article in the reference documentation.

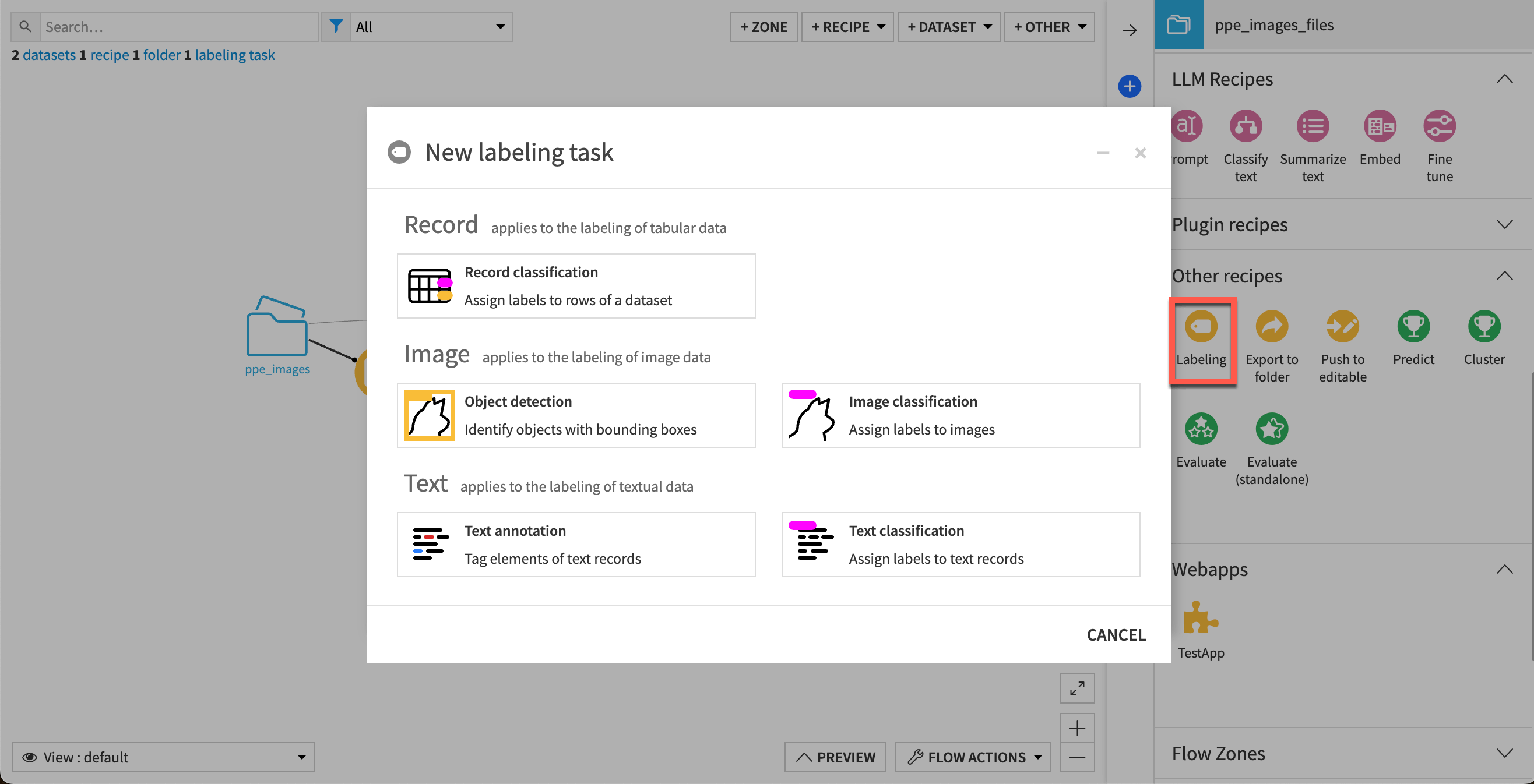

The Labeling recipe supports five types of labeling tasks:

Object detection: Identify objects with bounding boxes.

Image classification: Assign labels to images.

Text annotation: Tag elements of text records.

Text classification: Assign labels to text records.

Record classification: Assign labels to rows of a dataset.

Though the annotation interface differs depending on the type of task, the general process is the same:

A project manager sets up the labeling task.

Annotators create labels for the images, texts, or records.

Reviewers resolve conflicts and validate labels.

The project manager can track status and performance.
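The steps above can be pictured as a small state machine for each document. The sketch below is a hypothetical simplification, not the recipe's internal model: the status names and the actions `annotate`, `validate`, and `send_back` are invented for illustration, and several annotators working on one document are collapsed into a single `annotate` step.

```python
from enum import Enum


class DocStatus(Enum):
    """Hypothetical lifecycle states of one document in a labeling task."""
    UNLABELED = "unlabeled"  # waiting for annotators
    LABELED = "labeled"      # annotated, waiting for a reviewer
    VALIDATED = "validated"  # accepted (or corrected) by a reviewer


# Allowed (state, action) -> next state transitions; names are illustrative.
TRANSITIONS = {
    (DocStatus.UNLABELED, "annotate"): DocStatus.LABELED,
    (DocStatus.LABELED, "validate"): DocStatus.VALIDATED,
    (DocStatus.LABELED, "send_back"): DocStatus.UNLABELED,  # reviewer requests relabeling
}


def advance(status: DocStatus, action: str) -> DocStatus:
    """Return the next status, raising ValueError for a disallowed action."""
    try:
        return TRANSITIONS[(status, action)]
    except KeyError:
        raise ValueError(f"cannot {action!r} a {status.value} document") from None


# Usage: a reviewer sends a document back, so it must be annotated again.
status = advance(DocStatus.UNLABELED, "annotate")
status = advance(status, "send_back")
print(status.name)  # UNLABELED
```

Modeling "send back" as a return to the unlabeled state makes it explicit that a rejected document re-enters the annotation queue rather than being edited in place by the reviewer.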

The labeled output dataset can then be used, for instance, to train a machine learning model.

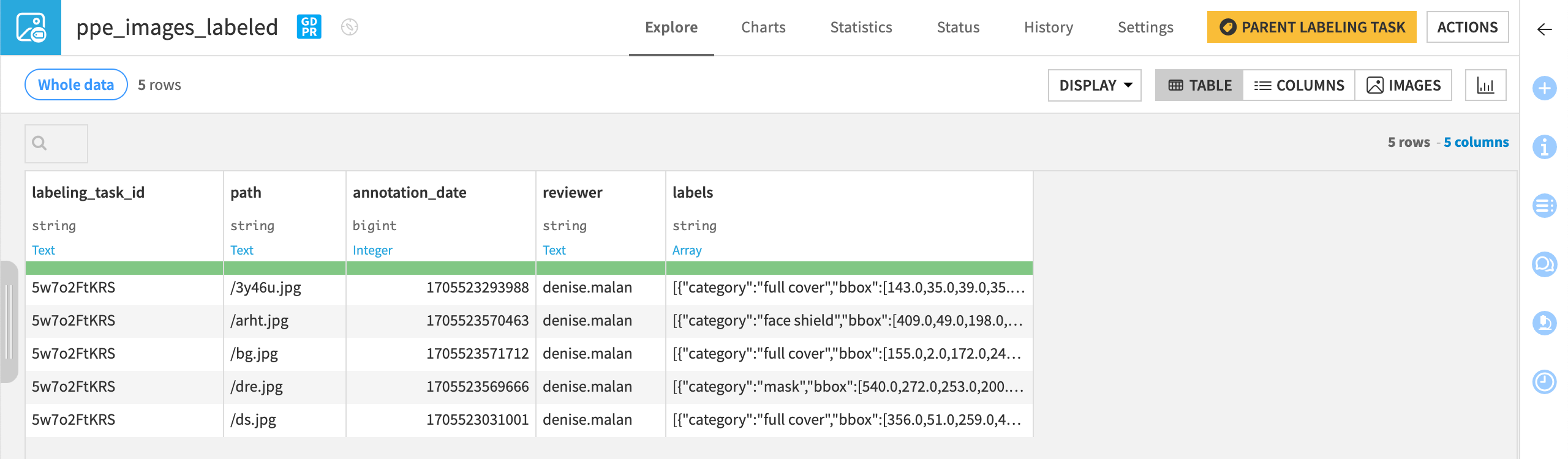

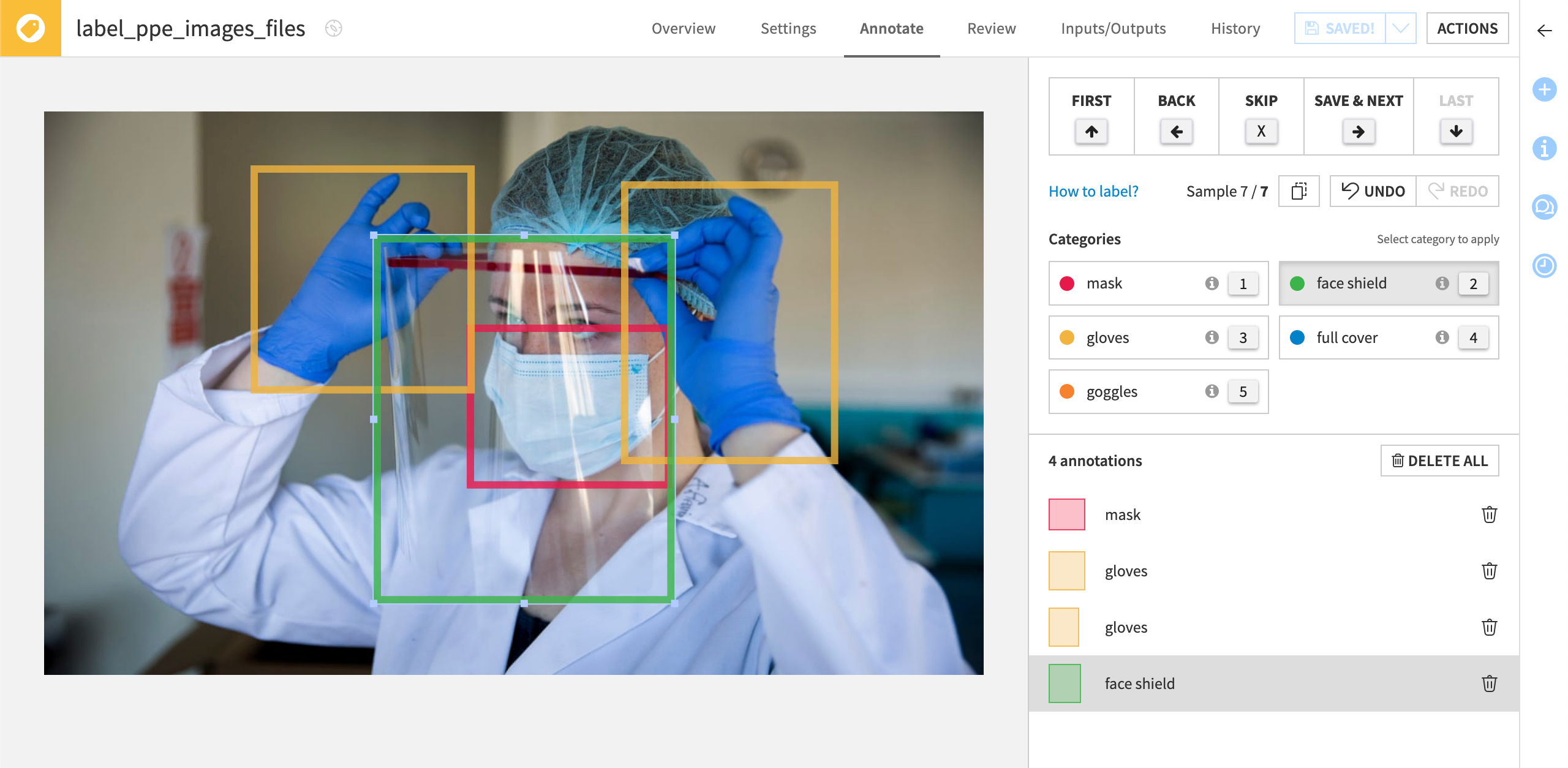

For example, a health provider might want to train a model to detect whether employees in images are wearing the proper personal protective equipment (PPE), such as masks, gloves, or suits. In this case, the recipe input is a dataset pointing to a managed folder that contains the unlabeled images.

Setting up a labeling task

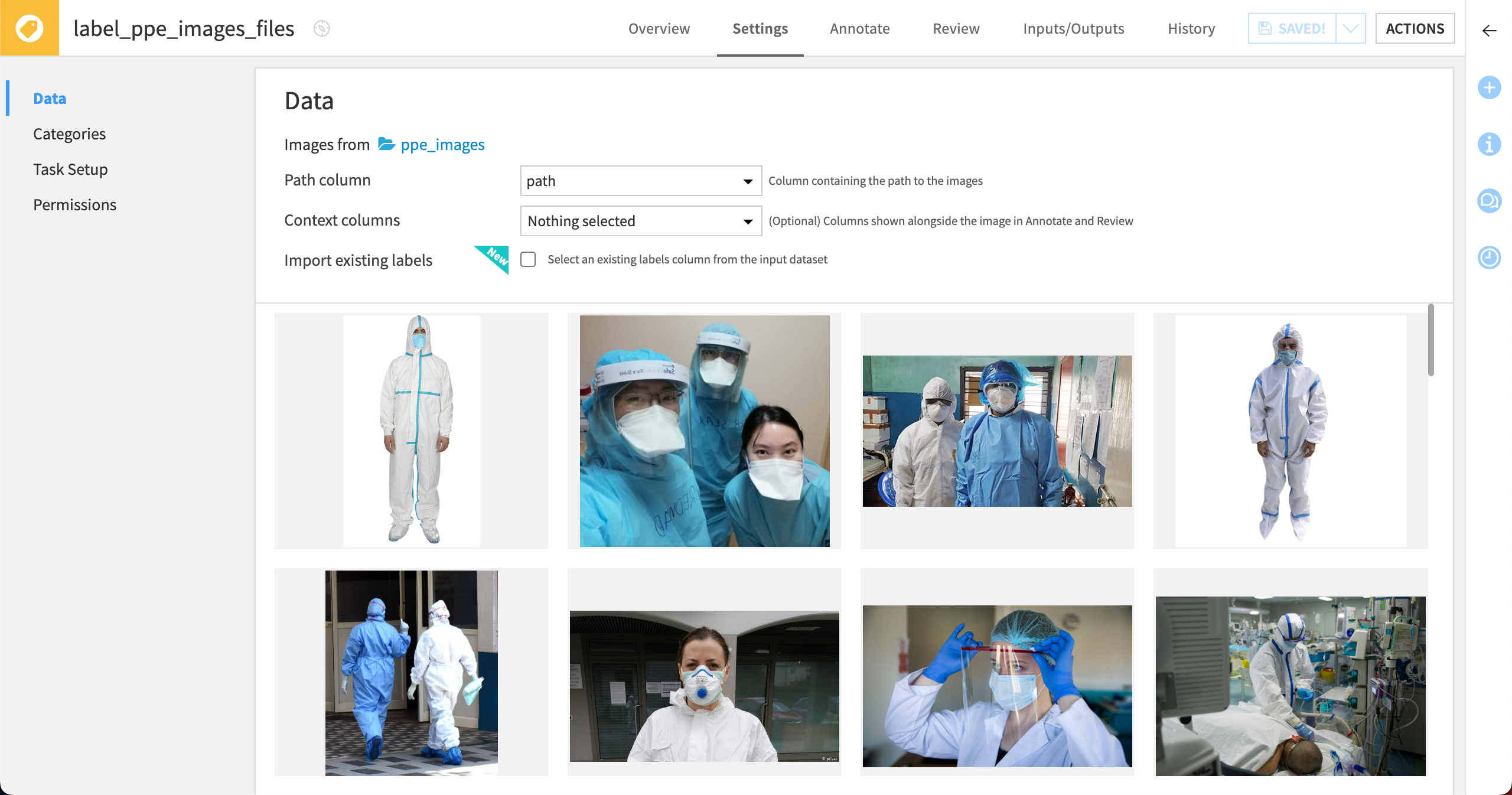

When creating a Labeling recipe, the project manager first selects the type of labeling task, in this case object detection. Then, in the Settings tab, the manager sets up the task and the instructions for labelers. In the Data panel, the manager confirms that the recipe input is the dataset pointing to the managed folder of unlabeled images.

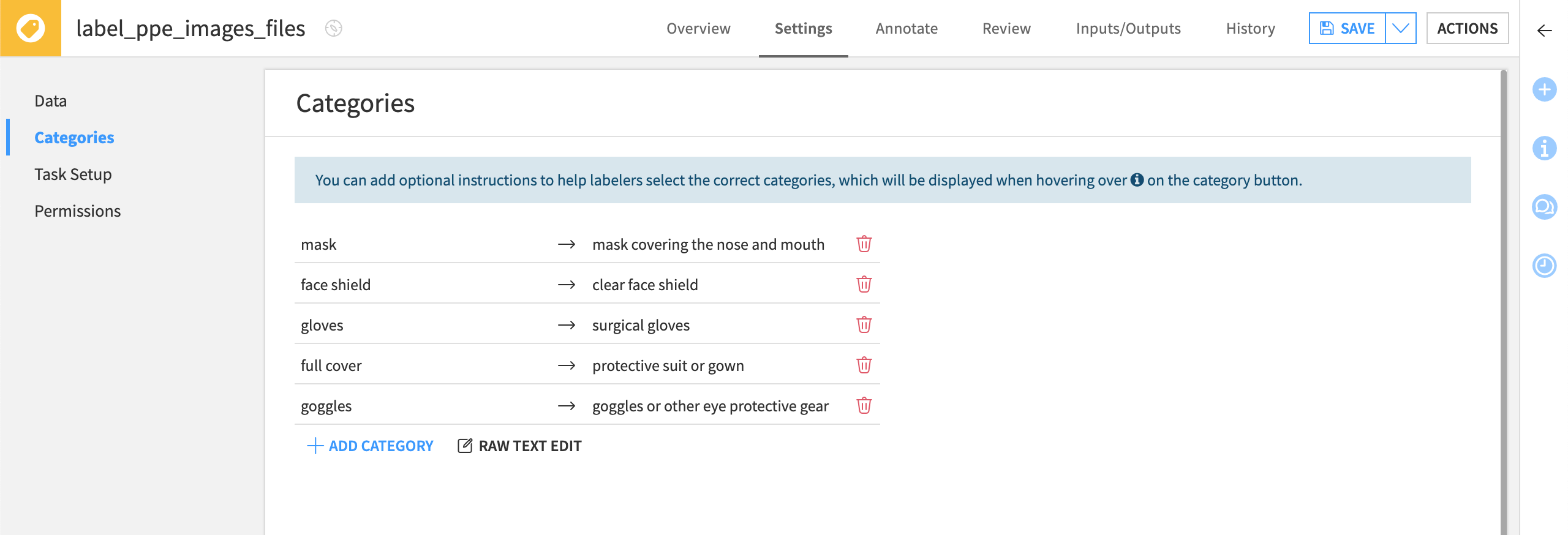

In the Categories panel, the manager creates the desired labels and optional instructions to help the labelers choose the correct categories.

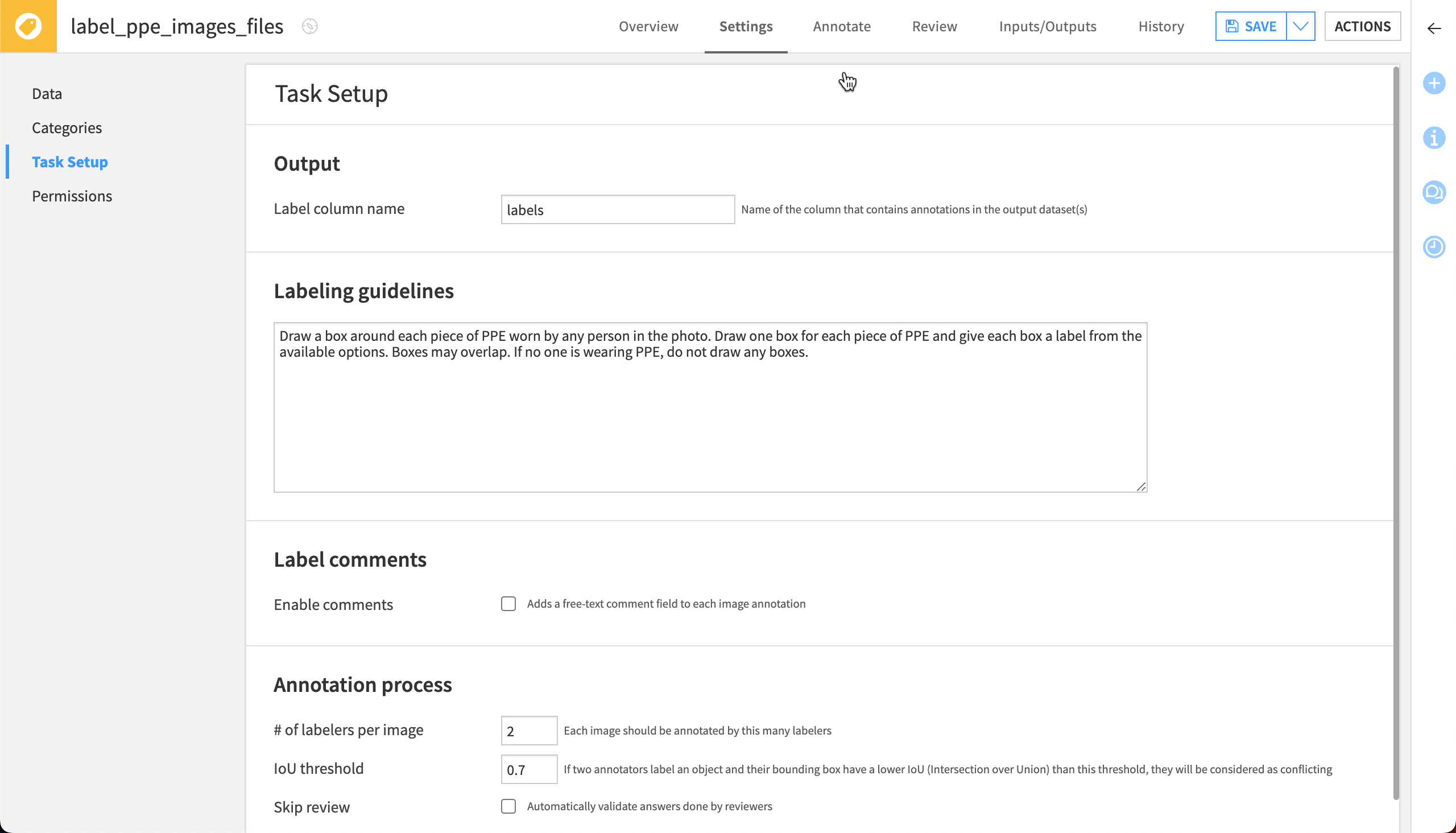

In the Task Setup panel, the manager can write overall guidelines for the annotators, choose the number of labelers for each image, enable comments, and adjust other settings.
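Choosing several labelers per image means each document is annotated independently by that many people, which is what later makes disagreements visible to reviewers. How the recipe actually distributes documents is not described here; the following is a hypothetical round-robin sketch, with `assign_labelers` and its parameters invented for illustration.

```python
def assign_labelers(doc_ids: list[str], labelers: list[str], per_doc: int) -> dict[str, list[str]]:
    """Hypothetical round-robin assignment of `per_doc` distinct labelers to each document."""
    if not 0 < per_doc <= len(labelers):
        raise ValueError("per_doc must be between 1 and the number of labelers")
    assignments: dict[str, list[str]] = {}
    for n, doc in enumerate(doc_ids):
        # Take per_doc consecutive labelers, starting one position later for each
        # document; indices wrap around and stay distinct because per_doc <= len(labelers).
        assignments[doc] = [labelers[(n + i) % len(labelers)] for i in range(per_doc)]
    return assignments


print(assign_labelers(["img1", "img2", "img3"], ["ana", "ben", "chloe"], 2))
# {'img1': ['ana', 'ben'], 'img2': ['ben', 'chloe'], 'img3': ['chloe', 'ana']}
```

Shifting the starting labeler for each document spreads the workload evenly, so no single annotator ends up paired with the same colleague on every image.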

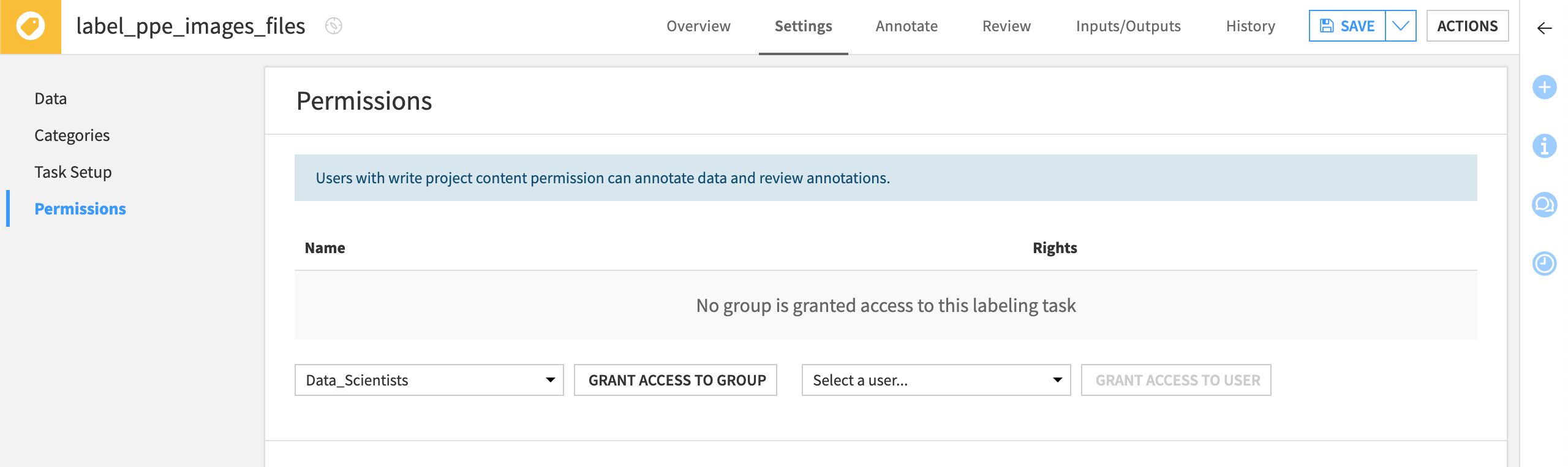

In Permissions, the manager can grant individual users or groups of users access to the task. Annotate and Review permissions are granted per task, and users who hold either one must also have at least the Read dashboards permission on the parent project.
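This rule combines a per-task grant with a project-level minimum. A minimal sketch of that check follows, assuming a hypothetical `User` record; the permission strings are illustrative, and `MIN_PROJECT_PERMISSIONS` is a hypothetical set that you would extend with any broader project permissions that also satisfy "at least Read dashboards".

```python
from dataclasses import dataclass, field

# Hypothetical: project permissions that meet the "at least Read dashboards" bar.
# Extend this set with any broader project permissions that imply it.
MIN_PROJECT_PERMISSIONS = {"read_dashboards"}


@dataclass
class User:
    """Illustrative record of a user's grants; not a real API object."""
    project_permissions: set[str] = field(default_factory=set)
    # task id -> subset of {"annotate", "review"}
    task_permissions: dict[str, set[str]] = field(default_factory=dict)


def can_access_task(user: User, task_id: str, action: str) -> bool:
    """True only if the user holds the task-level grant AND the project-level minimum."""
    if action not in {"annotate", "review"}:
        raise ValueError(f"unknown action: {action!r}")
    has_task_grant = action in user.task_permissions.get(task_id, set())
    has_project_minimum = bool(user.project_permissions & MIN_PROJECT_PERMISSIONS)
    return has_task_grant and has_project_minimum


annotator = User(project_permissions={"read_dashboards"}, task_permissions={"ppe": {"annotate"}})
print(can_access_task(annotator, "ppe", "annotate"))  # True
print(can_access_task(annotator, "ppe", "review"))    # False: no review grant on this task
```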

Note

See the reference documentation for more information about permissions on labeling tasks.

Annotating data

The assigned labelers can then annotate the image files, texts, or records in the Annotate tab of the recipe. The Annotate interface shows the overall instructions written by the manager, along with the category definitions the manager set up in the Categories panel.

If the project manager has enabled comments, labelers can also add a free-text comment to each document. This lets labelers flag uncertainty or pass other important information to the reviewers. Reviewers can read comments in the Review tab and in the output dataset.

Reviewing labels

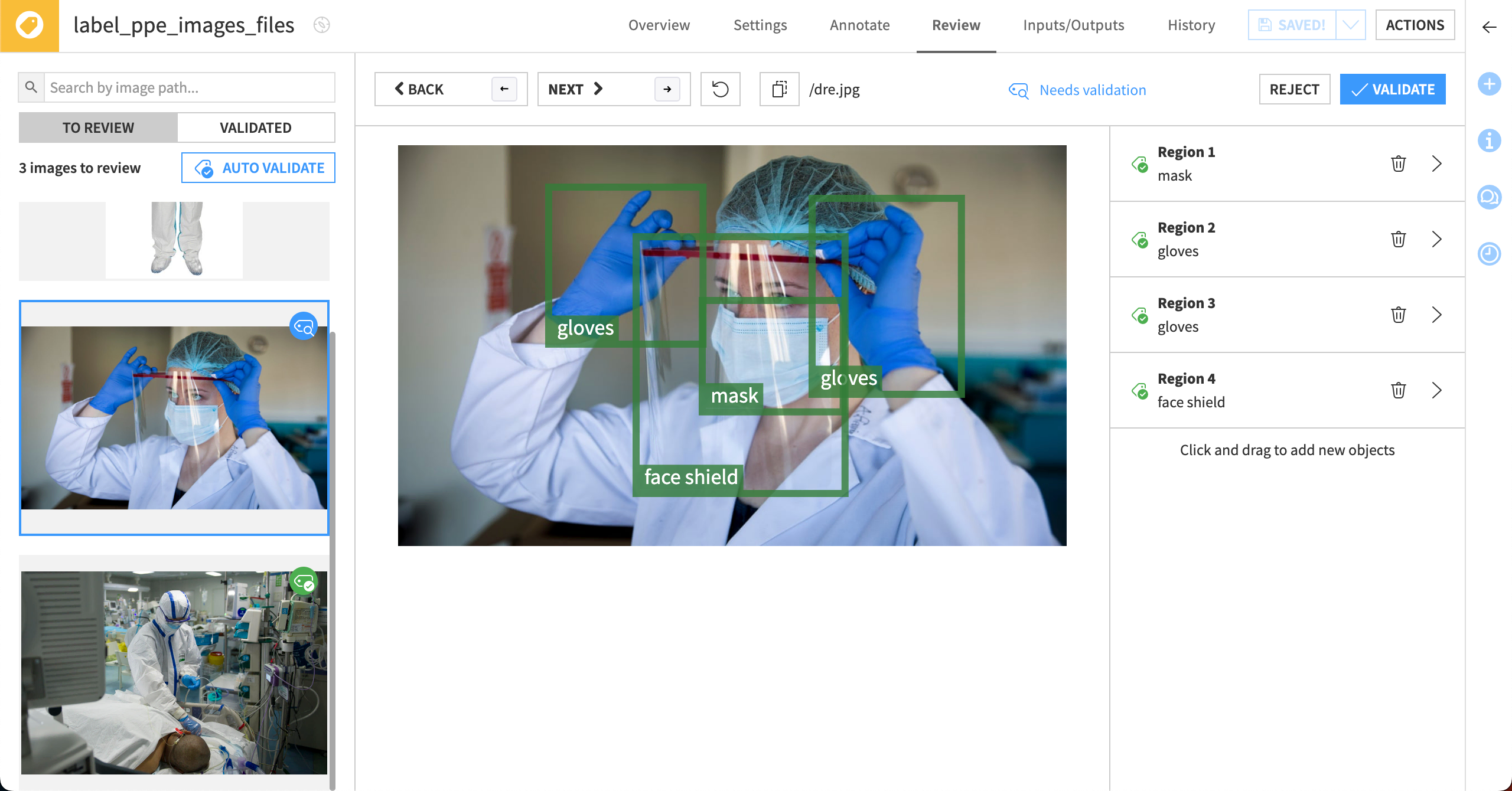

After documents have been labeled, they’re available for checking in the Review tab.

The reviewer can see conflicts when annotators don’t assign the same class, bounding box, or annotation (depending on the type of task). The reviewer can also:

Resolve conflicts

Validate labels

Create their own annotations if needed

Send a document back to be labeled again
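Conflicts can be thought of as disagreement checks between annotators. The sketch below shows two plausible rules, assuming plain class labels for classification and `(x_min, y_min, x_max, y_max)` boxes for object detection. The intersection-over-union (IoU) threshold of 0.5, and the assumption that boxes from different annotators have already been paired up by object, are illustrative choices rather than the recipe's documented behavior.

```python
# Assumed box format: (x_min, y_min, x_max, y_max) in pixels.
Box = tuple[float, float, float, float]


def classification_conflict(labels_by_annotator: dict[str, str]) -> bool:
    """Conflict when the annotators of one document did not all choose the same class."""
    return len(set(labels_by_annotator.values())) > 1


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes (0.0 when they do not overlap)."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def box_conflict(a: tuple[str, Box], b: tuple[str, Box], threshold: float = 0.5) -> bool:
    """Two annotations of the same object conflict if the classes differ or the boxes overlap too little."""
    (class_a, box_a), (class_b, box_b) = a, b
    return class_a != class_b or iou(box_a, box_b) < threshold


print(classification_conflict({"ana": "mask", "ben": "gloves"}))       # True
print(round(iou((0, 0, 2, 2), (1, 1, 3, 3)), 3))                       # 0.143
print(box_conflict(("mask", (0, 0, 2, 2)), ("mask", (0, 0, 2, 2.2))))  # False
```

Comparing boxes by overlap rather than exact coordinates tolerates the few pixels of drift that two careful annotators will almost always produce.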

Progress and performance overview

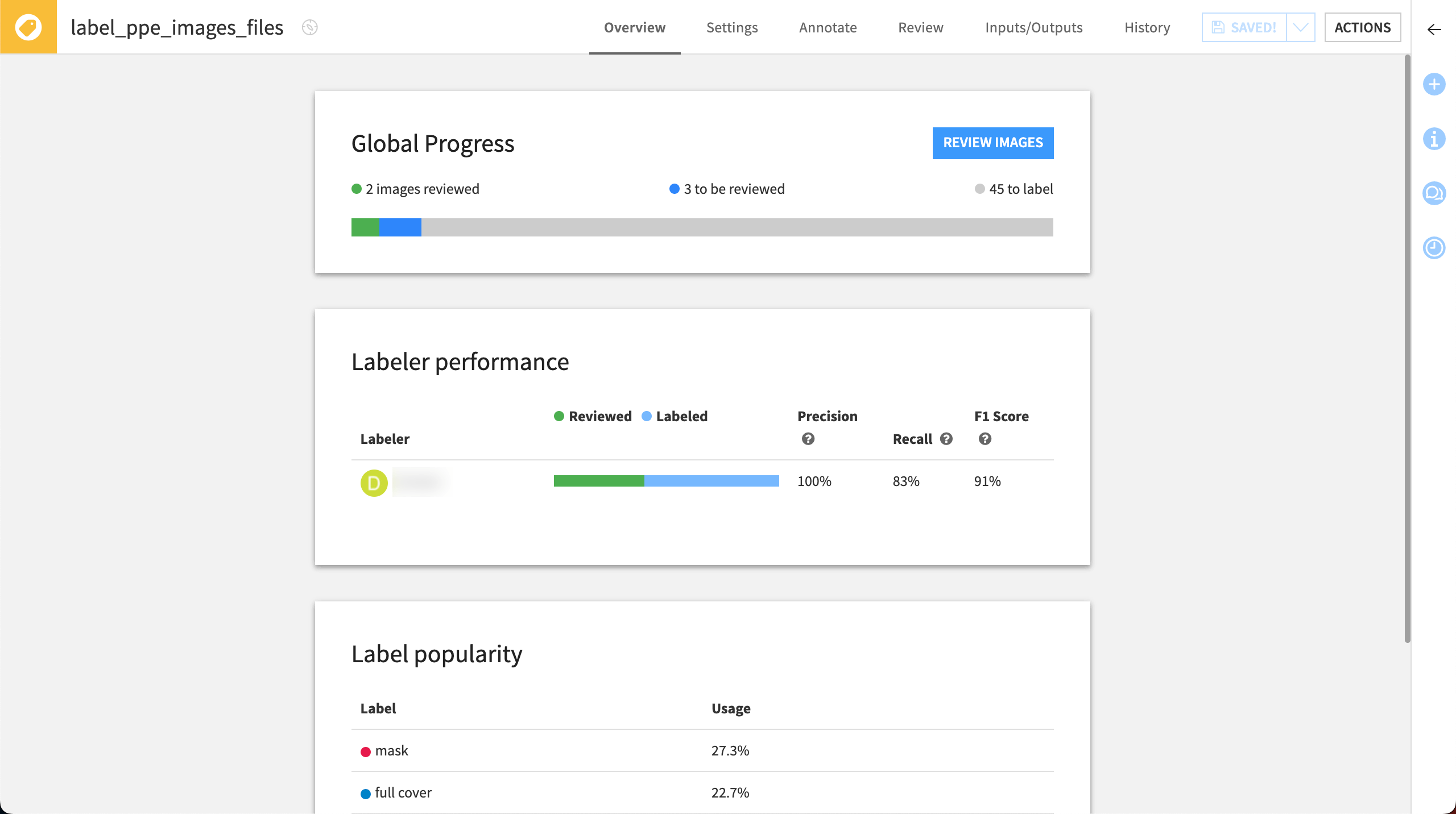

The Overview screen is available for all labeling types. It lets the project manager follow the project through indicators showing how many documents have been labeled and reviewed, the performance of each labeler, and how often each label is used.
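Indicators like these can be derived from the raw annotations. In the hypothetical sketch below, labeler "performance" is approximated as agreement with the reviewer's final label on reviewed documents; the `Annotation` record shape and this metric are assumptions, and the sketch covers single-label (classification) tasks only.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Annotation:
    """One annotator's class label on one document (hypothetical record shape)."""
    doc_id: str
    annotator: str
    label: str


def overview(annotations: list[Annotation], final_labels: dict[str, str], total_docs: int) -> dict:
    """Progress counts plus label frequencies; `final_labels` maps reviewed doc ids to their final class."""
    return {
        "labeled": len({a.doc_id for a in annotations}),
        "reviewed": len(final_labels),
        "total": total_docs,
        "label_counts": Counter(a.label for a in annotations),
    }


def agreement_rate(annotations: list[Annotation], final_labels: dict[str, str]) -> dict[str, float]:
    """Share of each annotator's labels on reviewed documents that match the final label."""
    hits = Counter()
    totals = Counter()
    for a in annotations:
        if a.doc_id in final_labels:  # unreviewed documents have no reference label yet
            totals[a.annotator] += 1
            hits[a.annotator] += int(a.label == final_labels[a.doc_id])
    return {name: hits[name] / totals[name] for name in totals}


anns = [
    Annotation("d1", "ana", "mask"), Annotation("d1", "ben", "mask"),
    Annotation("d2", "ana", "gloves"), Annotation("d2", "ben", "suit"),
    Annotation("d3", "ana", "mask"),
]
final = {"d1": "mask", "d2": "gloves"}
print(overview(anns, final, total_docs=4)["label_counts"].most_common(1))  # [('mask', 3)]
print(agreement_rate(anns, final))  # {'ana': 1.0, 'ben': 0.5}
```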

Output

The output dataset is continually updated with all reviewed documents. Labels are written to the column that the manager defined in the Task Setup panel.
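Downstream, a training script typically needs only document identifiers and their labels. A minimal sketch, assuming the output has been exported to CSV with one class name per row (as in a classification task), and that the document and label columns are named `path` and `label`; both names are hypothetical, so substitute the ones configured for your task.

```python
import csv
import io
from collections import Counter

# Hypothetical column names; use the ones configured for your task.
ID_COLUMN = "path"
LABEL_COLUMN = "label"


def training_pairs(rows, id_column: str = ID_COLUMN, label_column: str = LABEL_COLUMN):
    """Yield (document id, label) pairs, skipping rows whose label is empty."""
    for row in rows:
        label = (row.get(label_column) or "").strip()
        if label:
            yield row[id_column], label


# A tiny stand-in for an exported output dataset.
sample = "path,label\nimg1.jpg,mask\nimg2.jpg,\nimg3.jpg,gloves\n"
pairs = list(training_pairs(csv.DictReader(io.StringIO(sample))))
print(pairs)  # [('img1.jpg', 'mask'), ('img3.jpg', 'gloves')]
print(Counter(label for _, label in pairs))  # class balance check before training
```

Skipping empty labels is a defensive choice: since the dataset is refreshed continually, a consumer that tolerates incomplete rows can safely be re-run as more documents are reviewed.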