Concept | Partitioned models#

Watch the video

In Dataiku, you can take a partitioned dataset and train a prediction model on each partition.

The motivation for partitioned models#

Partitioned or stratified models may result in better predictions than a model trained on the whole dataset.

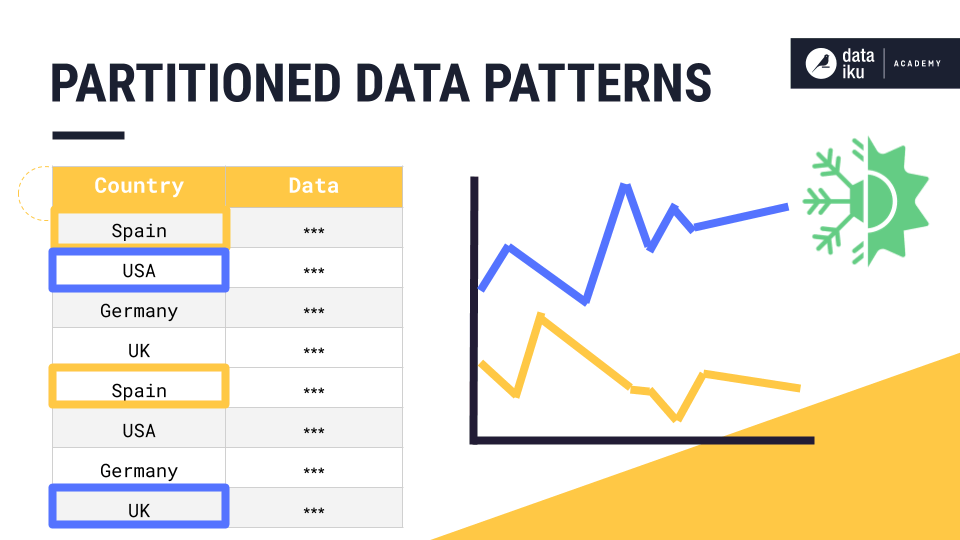

This is because subgroups related to a dataset’s partitions can sometimes have dissimilar behaviors. Therefore, they draw different patterns over the features.

Using a country as an example of a subgroup, customers in different countries could have different purchasing patterns impacting sales predictions. This could be due to differences in characteristics such as seasons.

Therefore, partitioning data by country and training a machine learning model for each partition could result in a higher-performing model. If you trained the model using the whole dataset instead of using partitions, the model might not be able to capture the nuances for each country or subgroup.

Use case#

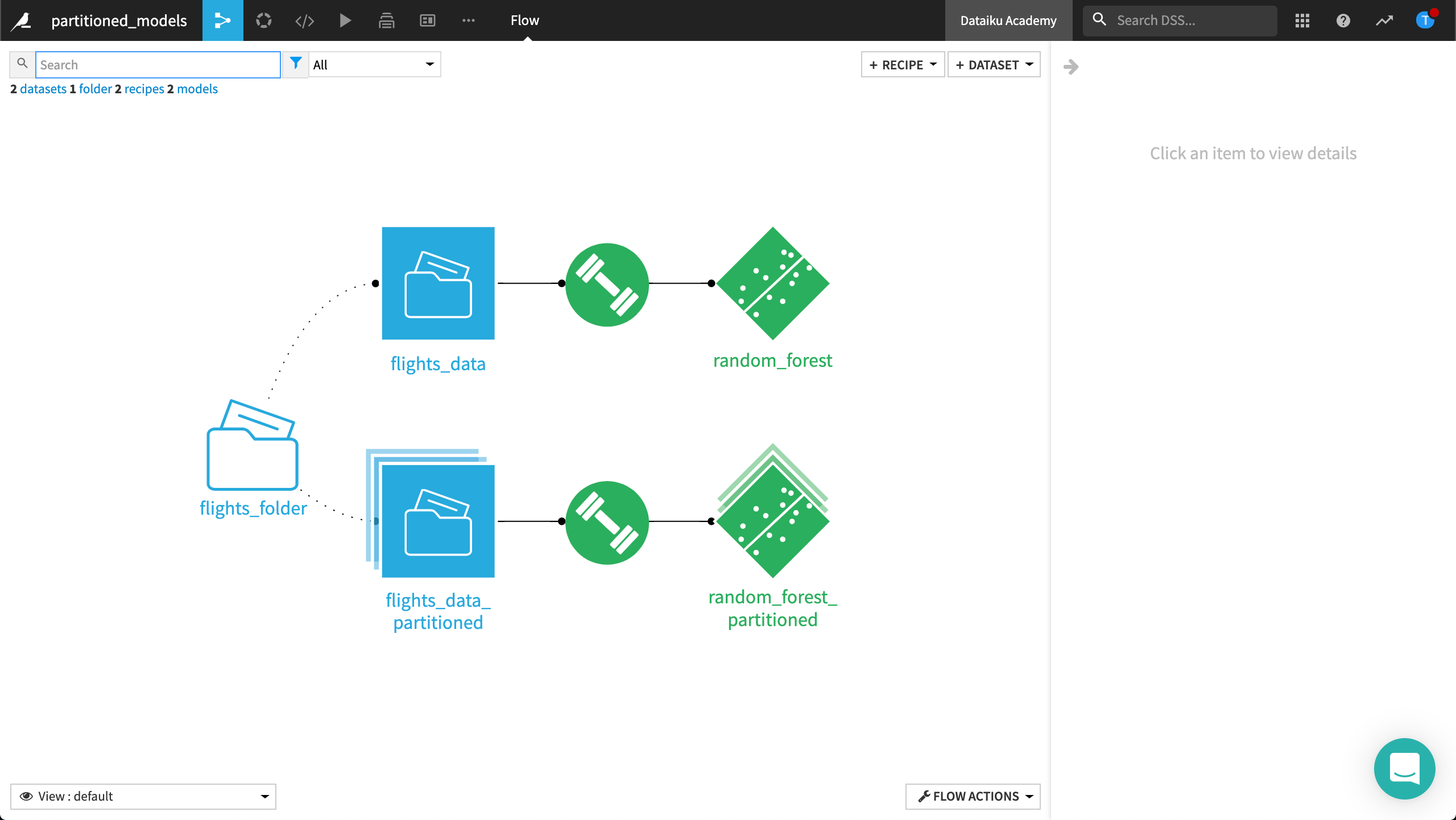

Let’s test this hypothesis in Dataiku. In this example, we’ve trained and deployed two machine learning models to predict flight arrival delay time. The flight arrival dataset has two partitions: Florida and California.

The saved model Random_forest has been trained over the whole data.

The saved model random_forest_partitioned has been trained over the partitioned data.

Let’s compare the difference between the two deployed models and observe the results.

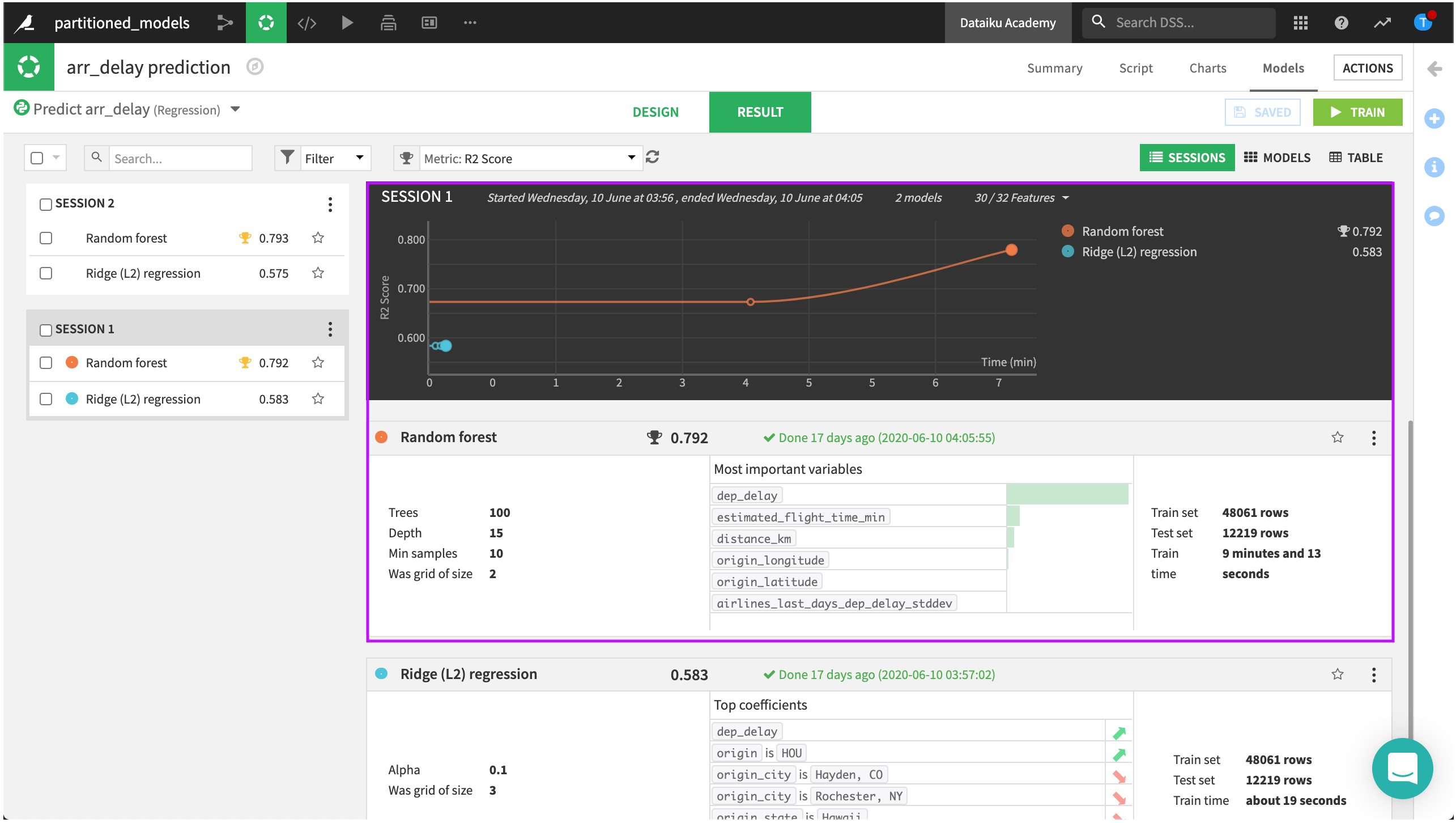

Look at the non-partitioned model first. In the performance summary, it looks like the best model is the random forest. Its R2 score is pretty good.

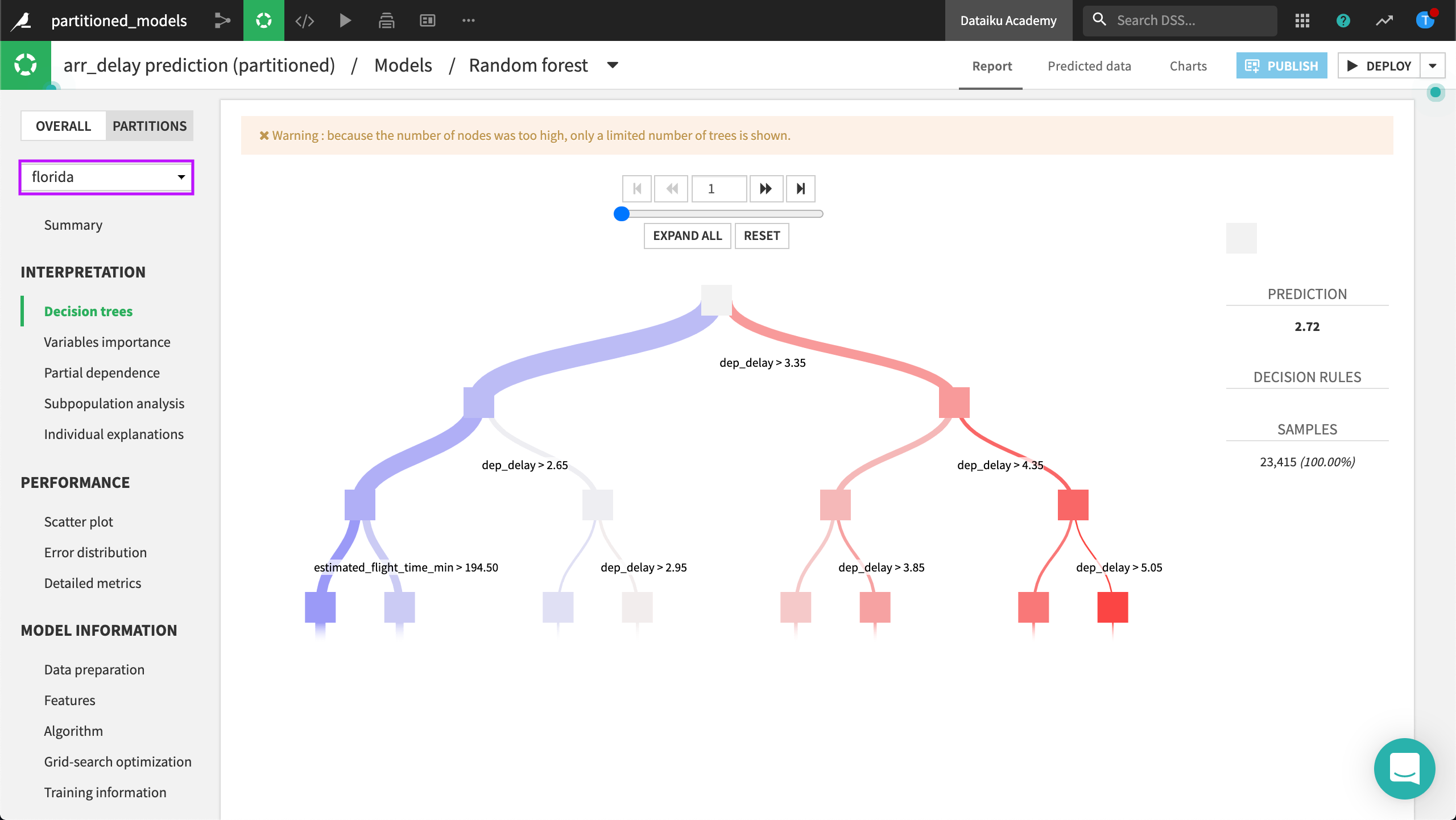

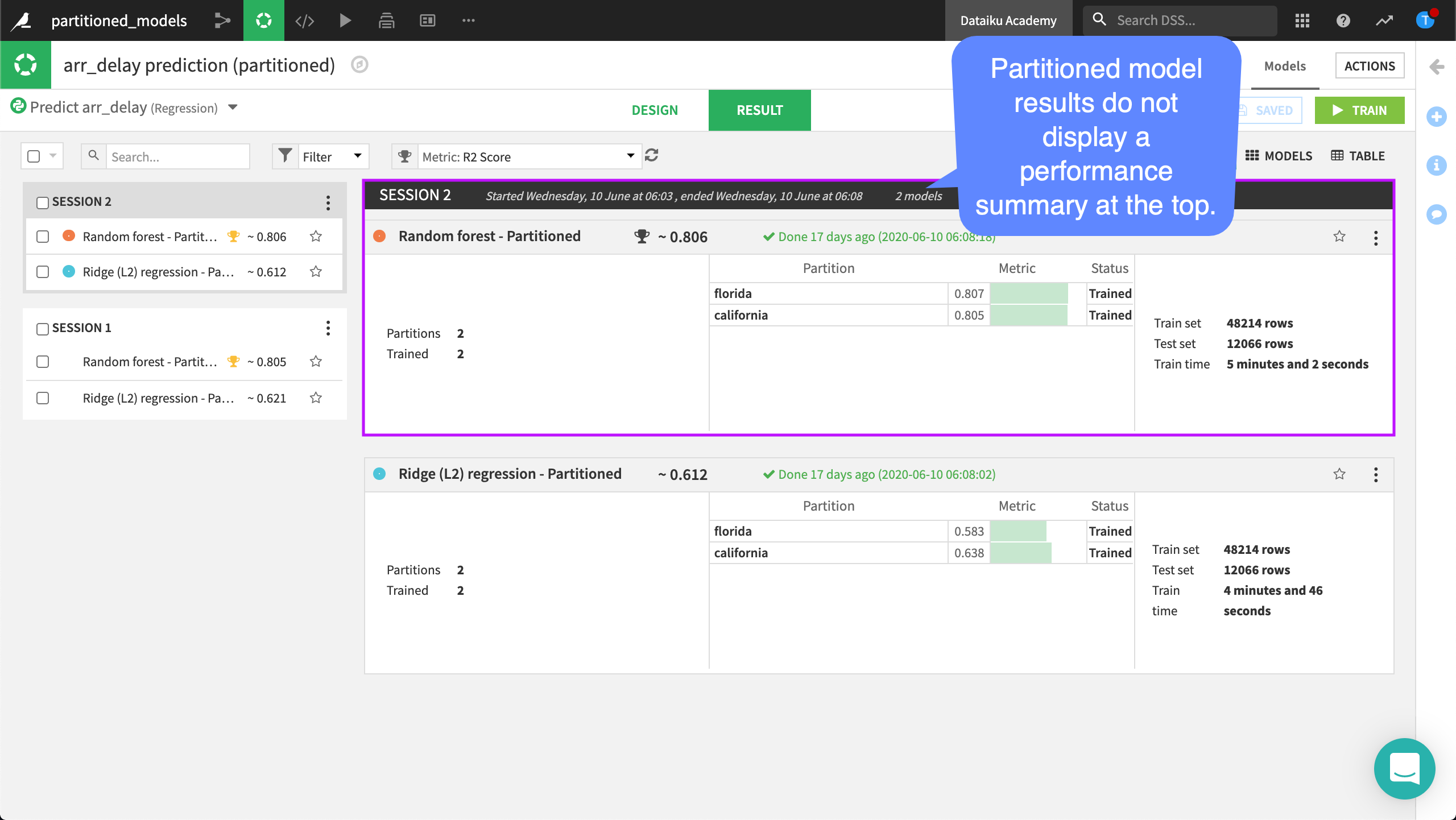

Let’s compare this with the results of the partitioned model. Once again, the best model is the random forest.

Notice that Dataiku doesn’t display the performance summary at the top. This is expected. We have trained not only one model, but as many models as partitions in the dataset times the number of algorithms. The summary for all models would not be readable.

Furthermore, Dataiku displays the R2 score as an approximation. This is because it’s the summation of the results of two separate models: one trained on the Florida partition, and one trained on the California partition. It’s good to see that the overall score of the partitioned model exceeded that of the non-partitioned model.

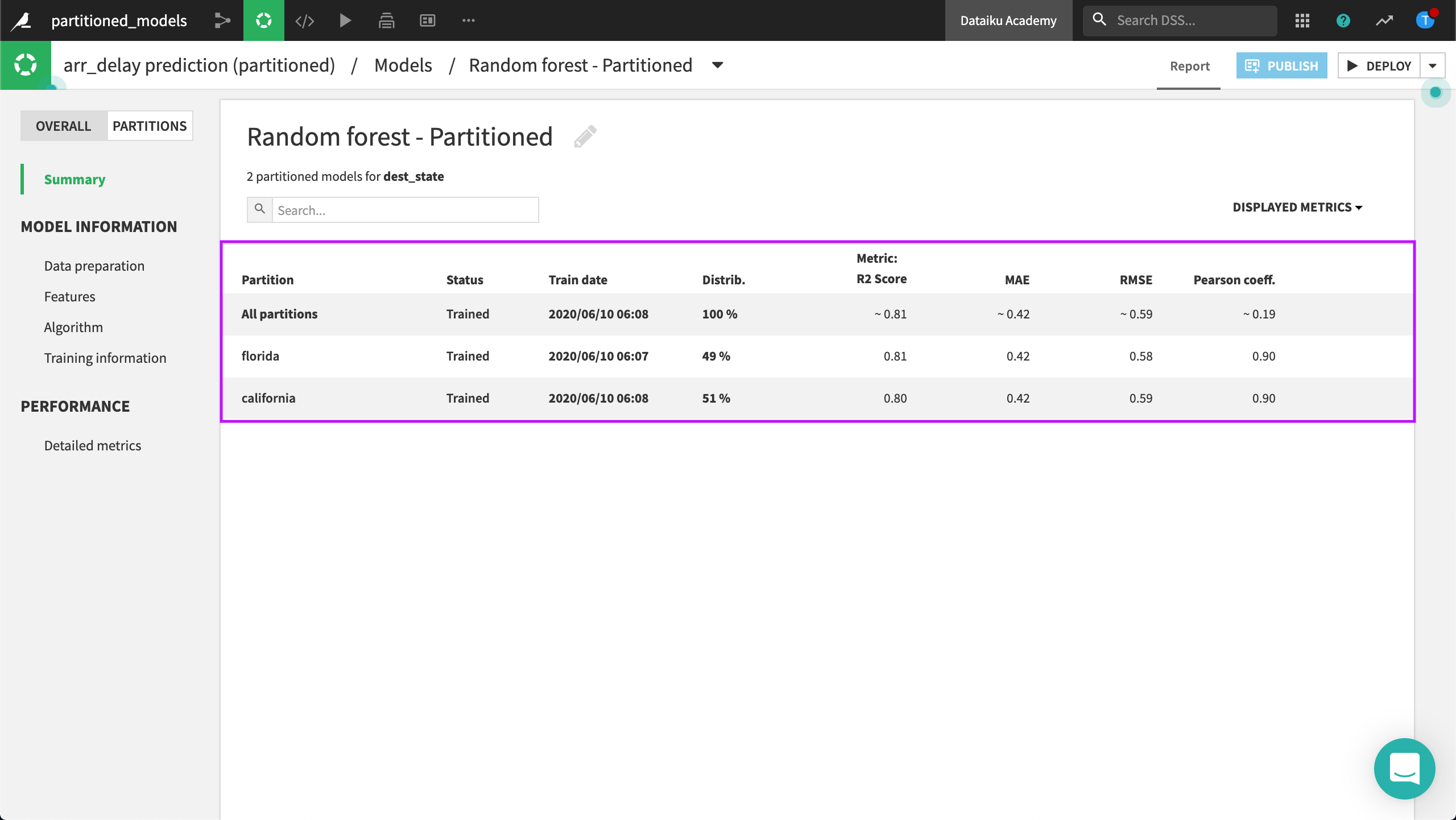

To compare the overall results with the results of the partitions, you can review the Summary page of the random forest model.

You can also examine results by partition in a partitioned model. This is more detailed than the overall results because a partitioned model is an expert of only its learning partition.