Tutorial | Deep learning for time series#

Get started#

As an alternative to traditional time series models like ARIMA, you can also use deep learning for forecasting.

Objectives#

In this tutorial, you will:

Build a long short-term memory (LSTM) network using Keras code within Dataiku’s visual machine learning.

Deploy it to the Flow as a saved model and apply it to new data.

Prerequisites#

Dataiku 12.0 or later.

A Full Designer user profile.

A code environment with the necessary libraries. When creating a code environment, add the Visual Deep Learning package set corresponding to your hardware.

Some familiarity with deep learning, and in particular, Keras.

Create the project#

From the Dataiku Design homepage, click + New Project.

Select Learning projects.

Search for and select Deep Learning for Time Series.

If needed, change the folder into which the project will be installed, and click Create.

From the project homepage, click Go to Flow (or type g+f).

From the Dataiku Design homepage, click + New Project.

Select DSS tutorials.

Filter by ML Practitioner.

Select Deep Learning for Time Series.

From the project homepage, click Go to Flow (or type g+f).

Note

You can also download the starter project from this website and import it as a zip file.

You’ll next want to build the Flow.

Click Flow Actions at the bottom right of the Flow.

Click Build all.

Keep the default settings and click Build.

Use case summary#

The project contains a dataset of the daily minimum temperatures recorded in Australia over the course of a decade (1981-1990). The only data preparation that has been done is to parse the dates from a string into a date format.

Tip

In the Charts tab of the temperatures_prepared dataset, you’ll find a line chart of the temperature by date. It reveals that the data is quite noisy. Therefore our model will probably only learn the general trends.

Prepare the data#

The next step is to create windows of input values. We’re going to feed the LSTM with windows of 30 temperature values, and expect it to predict the 31st.

Create windows with a Python recipe#

We can do this with a Python code recipe that serializes the window values in string format. The resulting dataset should have three columns:

The date of the target measurement.

A vector of 30 values of input measured temperatures.

The target temperature.
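As a minimal sketch of the windowing idea, the snippet below builds input/target pairs from a short toy list, using a window of 3 instead of 30 so the result is easy to read. The function name `make_windows` and the sample values are illustrative assumptions, not part of the project.

```python
def make_windows(values, window_size):
    """Return (inputs, target) pairs: each target directly follows its inputs."""
    pairs = []
    for i in range(len(values) - window_size):
        window = values[i:i + window_size]   # the input measurements
        target = values[i + window_size]     # the value to predict
        pairs.append((window, target))
    return pairs


# Made-up temperatures; the tutorial uses a window of 30 on the real data
temps = [20.7, 17.9, 18.8, 14.6, 15.8]
pairs = make_windows(temps, window_size=3)
for window, target in pairs:
    print(window, "->", target)
```

Each consecutive window shifts by one day, so neighboring rows share all but one of their input values.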

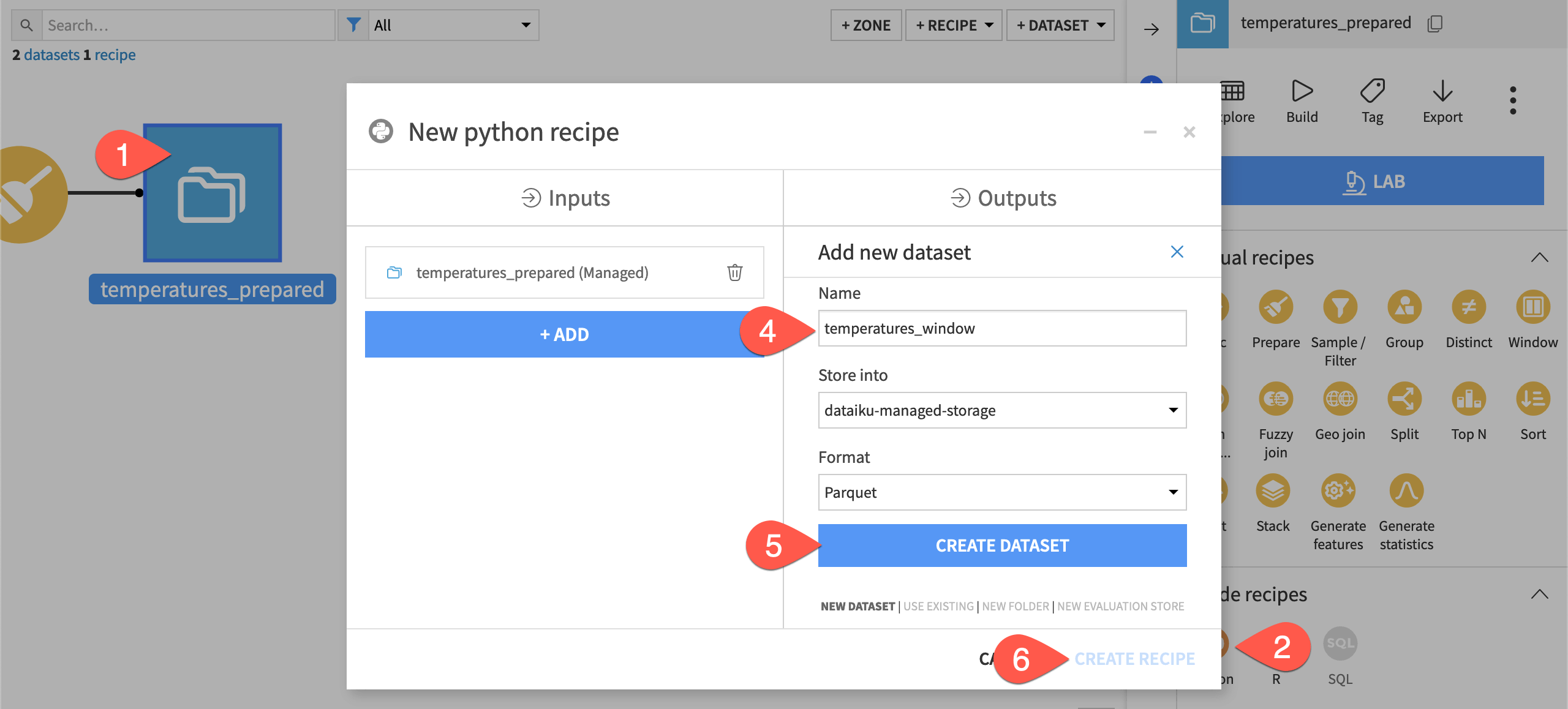

From the Flow, select the temperatures_prepared dataset.

In the Actions tab of the right panel, select the Python recipe.

Under Outputs, click + Add.

Name the output dataset temperatures_window.

Click Create Dataset.

Click Create Recipe.

Replace the starter code with the code below.

```python
import dataiku
import pandas as pd, numpy as np
from dataiku import pandasutils as pdu

# Read recipe inputs
generated_series = dataiku.Dataset("temperatures_prepared")
df_data = generated_series.get_dataframe()

steps = []
x = []
y = []

## Set the number of historical data points to use to predict future records
window_size = 30

## Create windows of input values
# Stopping at len(df_data) - window_size lets the last row serve as a target too
for i in range(len(df_data) - window_size):
    # window_size inputs followed by one target value
    subdf = df_data.iloc[i:i + window_size + 1]
    values = subdf['Temperature'].values.tolist()
    step = subdf['Date'].values.tolist()[-1]

    x.append(str(values[:-1]))  # serialize the inputs as a string
    steps.append(step)          # date of the target measurement
    y.append(values[-1])        # target temperature

df_win = pd.DataFrame.from_dict({'date': steps, 'inputs': x, 'target': y})

# Write recipe outputs
series_window = dataiku.Dataset("temperatures_window")
series_window.write_with_schema(df_win)
```

Click Run (or type @+r+u+n) to execute the recipe, and then explore the output dataset.

Create a Split recipe#

Next, we can divide the dataset into training and testing sets. For convenience, we’ll use a visual recipe.

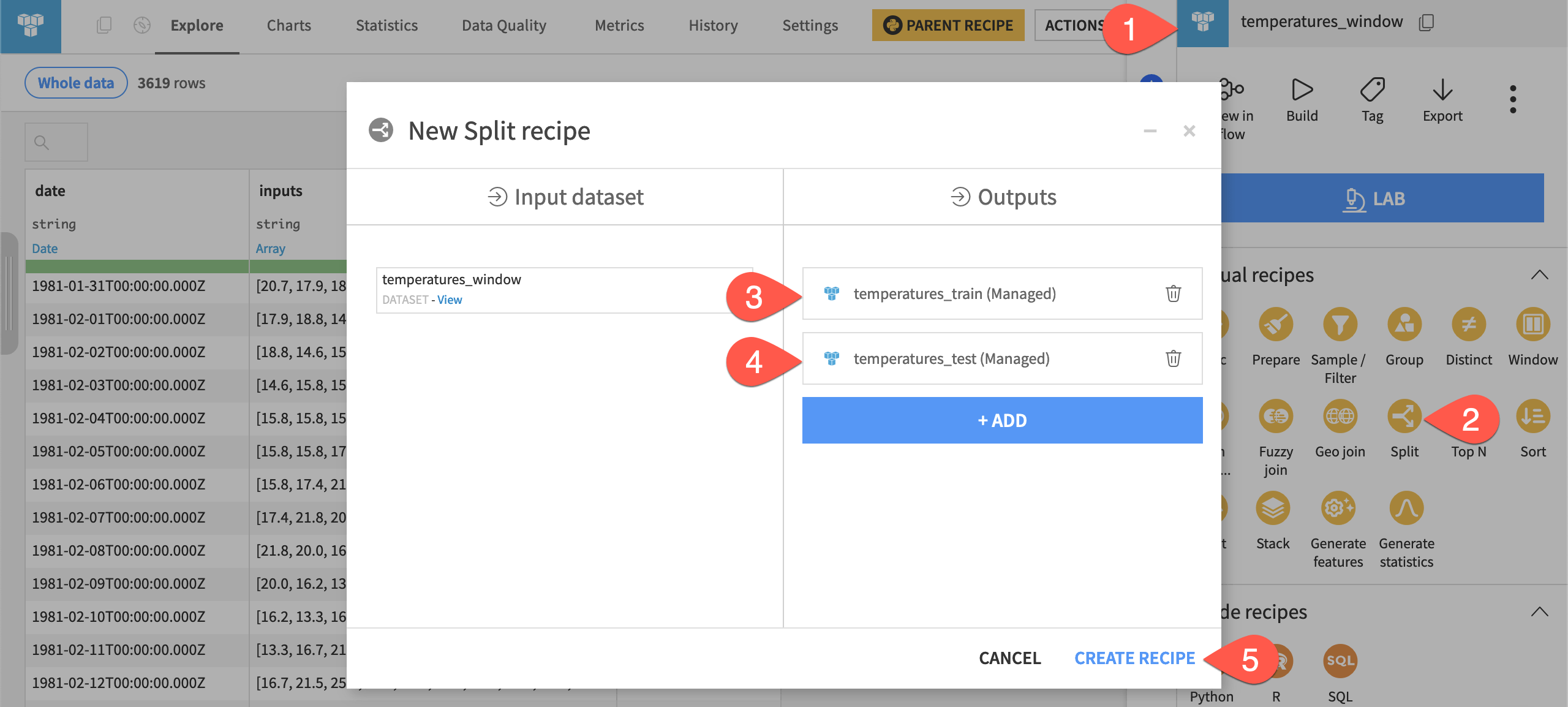

If not already open, select the temperatures_window dataset.

In the Actions tab, select Split from the menu of visual recipes.

Click + Add, name the output temperatures_train, and click Create Dataset.

Click + Add again, name the second output temperatures_test, and click Create Dataset.

Once you have defined both output datasets, click Create Recipe.

Configure the Split recipe#

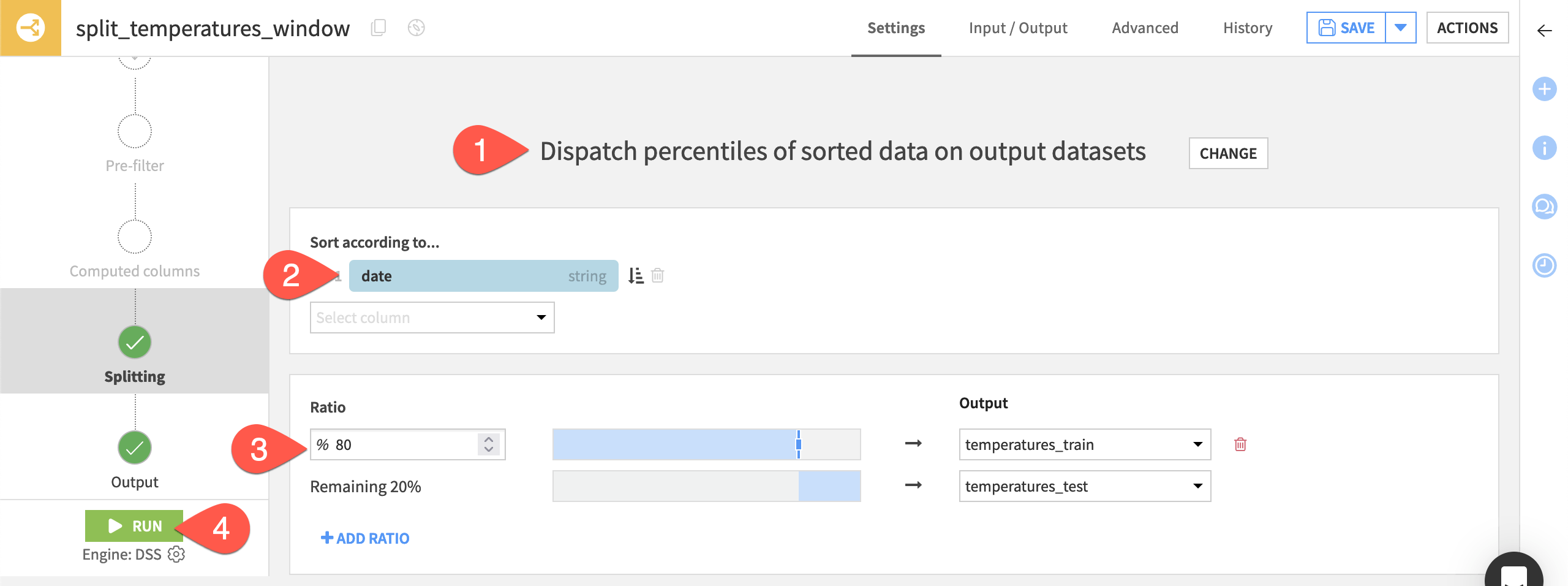

The model will be trained on the first eight years of data and then tested on the final two years of data.

On the recipe’s Settings tab, select Dispatch percentiles of sorted data on output datasets.

Select date as the sort order column.

Assign 80% to the temperatures_train dataset, leaving 20% for the temperatures_test dataset.

Click Run to execute the recipe.
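To illustrate why the split is done on sorted data, here is a rough sketch of a chronological 80/20 split in plain Python. It only approximates the idea behind the recipe setting; the function name `chronological_split` and the toy rows are assumptions, and the recipe's exact handling of the boundary row isn't specified here.

```python
from datetime import date, timedelta


def chronological_split(rows, train_fraction=0.8):
    """Sort rows by date, then cut once so every test row is later than every train row."""
    ordered = sorted(rows, key=lambda row: row["date"])
    cut = int(len(ordered) * train_fraction)
    return ordered[:cut], ordered[cut:]


# Ten made-up daily rows, deliberately out of order
start = date(1981, 1, 1)
rows = [
    {"date": start + timedelta(days=d), "target": 10.0 + d}
    for d in (3, 0, 7, 1, 9, 2, 5, 8, 4, 6)
]

train, test = chronological_split(rows)
print(len(train), "training rows,", len(test), "test rows")
print("last train date:", train[-1]["date"], "| first test date:", test[0]["date"])
```

Unlike a random split, this keeps future observations out of the training set, which matters when the goal is to forecast values the model hasn't seen yet.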

Train a deep learning model#

Once we have training and testing datasets, we can proceed to create the model.

Create a deep learning task#

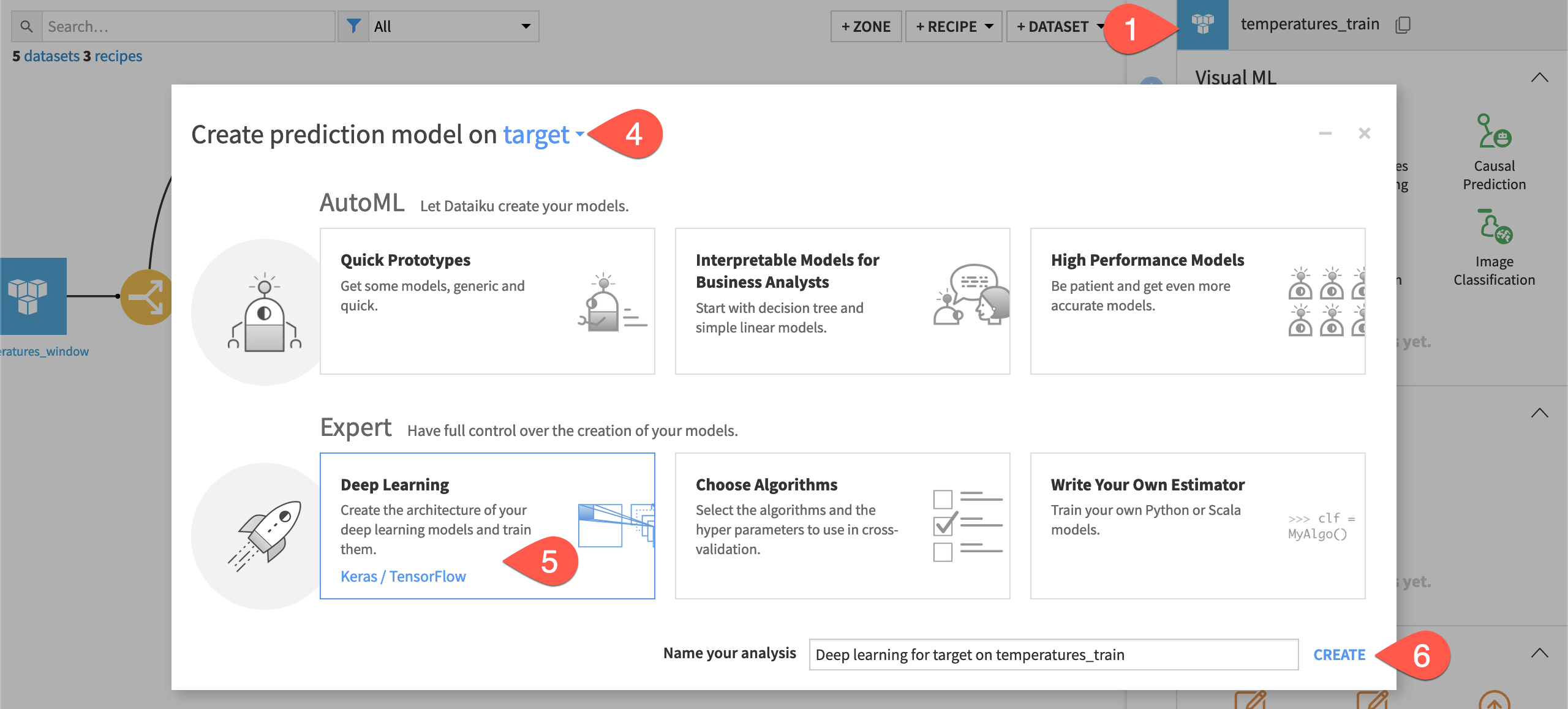

We start by creating a prediction task in the Lab, just like any other AutoML model.

From the Flow, select the temperatures_train dataset.

From the Actions tab of the right side panel, click Lab.

Select AutoML Prediction from the menu of visual ML tasks.

Select target as the feature on which to create the prediction model.

In the Expert section, select Deep Learning.

Click Create.

Handle features with a custom processor#

After creating the task, you’ll encounter an error that no input features have been selected.

Navigate to the Features handling panel.

Confirm both columns date and inputs have been rejected for being unique IDs.

We need a custom processor that unserializes the input string to a vector, and then normalizes the temperature values to be between 0 and 1.

From the top navigation bar, go to the Code menu > Libraries (or type g+l). If prompted, it’s safe to leave the page without saving.

Click Add > Create file.

Name it python/windowprocessor.py, and click Create.

Copy-paste the code below.

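```python
import ast

import numpy as np


class windowProcessor:

    def __init__(self, window_size):
        self.window_size = window_size

    def _convert(self, x):
        # Parse each serialized window, e.g. "[20.7, 17.9, ...]", into one row of floats
        m = np.empty((len(x), self.window_size))
        for i, serialized in enumerate(x):
            # ast.literal_eval parses the list literal without executing arbitrary code
            m[i, :] = np.array(ast.literal_eval(serialized))
        return m

    def fit(self, x):
        # Learn the global minimum and maximum from the training data
        m = self._convert(x)
        self.min_value, self.max_value = m.min(), m.max()

    def transform(self, x):
        # Rescale with the range learned in fit()
        m = self._convert(x)
        return (m - self.min_value) / (self.max_value - self.min_value)
```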

Click Save All.

Important

The windowprocessor.py file implements the following methods:

| Method | Purpose |
|---|---|
| fit() | Computes the maximum and minimum values of the dataset. |
| transform() | Normalizes the values to be between 0 and 1. |
| _convert() | Transforms the data from an array of strings to a 2-dimensional array of floats. |
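To make the fit/transform contract concrete, here is a small self-contained sketch that runs the same logic outside Dataiku. `ToyWindowProcessor` is a local stand-in written for this example (not the project file), and the strings are made-up windows of 3 values rather than 30.

```python
import ast

import numpy as np


class ToyWindowProcessor:
    """Stand-in for the processor: parse string windows, then min-max scale them."""

    def __init__(self, window_size):
        self.window_size = window_size

    def _convert(self, x):
        # One row per serialized window, one column per time step
        rows = [ast.literal_eval(s) for s in x]
        return np.array(rows, dtype=float).reshape(len(rows), self.window_size)

    def fit(self, x):
        m = self._convert(x)
        self.min_value, self.max_value = m.min(), m.max()

    def transform(self, x):
        m = self._convert(x)
        return (m - self.min_value) / (self.max_value - self.min_value)


train_windows = ["[10.0, 12.0, 14.0]", "[12.0, 14.0, 20.0]"]
new_windows = ["[15.0, 10.0, 20.0]"]

processor = ToyWindowProcessor(window_size=3)
processor.fit(train_windows)                 # learns min=10.0 and max=20.0
scaled = processor.transform(new_windows)    # reuses the training range
print(scaled.shape, scaled)
```

Because the range is learned once in `fit()`, any later value outside the training minimum and maximum would be scaled outside the 0 to 1 interval.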

Use the custom preprocessing file#

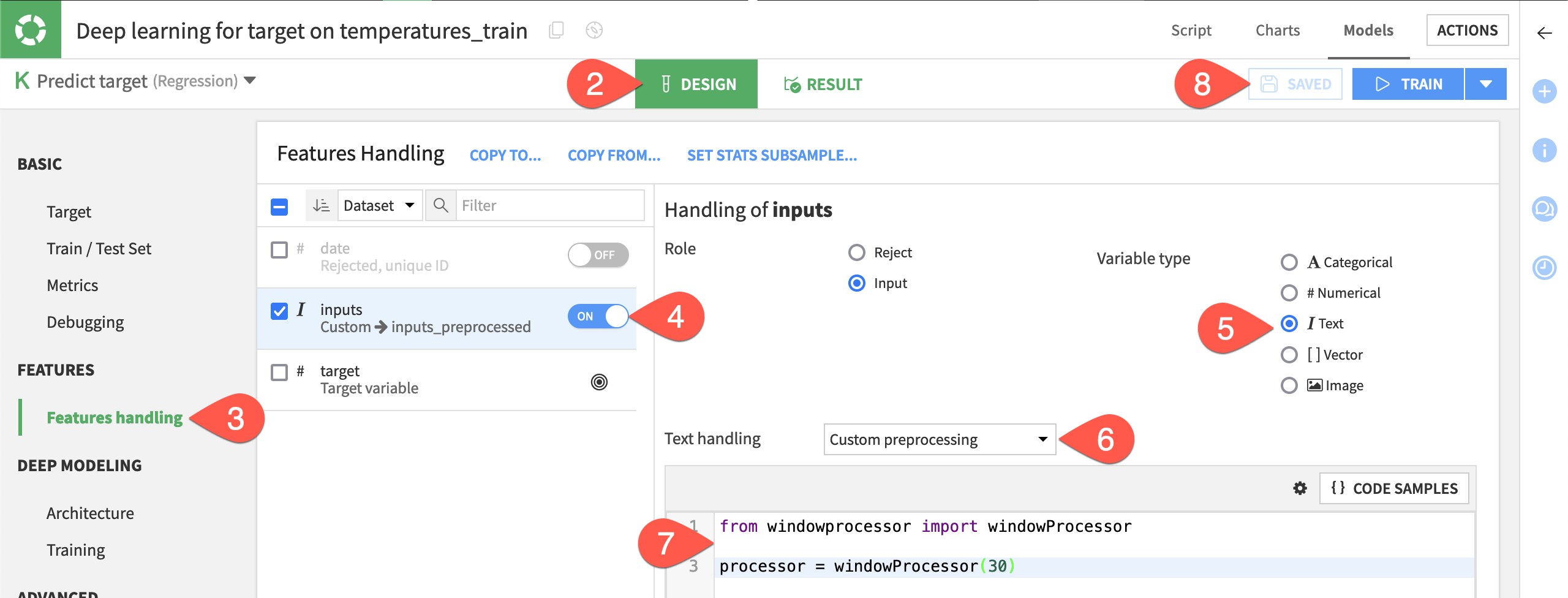

In the model’s design, we must specify to use the custom processor and tell it that our window has 30 values.

Return to the visual analysis Deep learning for target on temperatures_train.

Go to the Design tab.

Navigate to the Features handling panel.

Turn ON the inputs feature.

Select Text as the variable type.

For text handling, select Custom preprocessing.

Replace the starter code with the snippet below.

```python
from windowprocessor import windowProcessor

processor = windowProcessor(30)
```

Click Save.

Important

This custom features handling creates a new input to the deep learning model called inputs_preprocessed. We’ll use that in the specification of the deep learning architecture.

Create the deep learning architecture#

Finally, we need to import the LSTM and Reshape layers to specify our architecture. We then have to create our network architecture in the build_model() function. We’ll make no changes to the compile_model() function or the Training panel.

Still in the Design tab, navigate to the Architecture panel.

Replace the first line of code with the following:

```python
from keras.layers import Input, Dense, LSTM, Reshape
```

Replace the build_model() function with the following:

```python
def build_model(input_shapes, n_classes=None):

    # This input will receive all the preprocessed features
    window_size = 30
    input_main = Input(shape=(window_size,), name="inputs_preprocessed")

    x = Reshape((window_size, 1))(input_main)
    x = LSTM(100, return_sequences=True)(x)
    x = LSTM(100, return_sequences=False)(x)

    predictions = Dense(1)(x)

    # The 'inputs' parameter of your model must contain the
    # full list of inputs used in the architecture
    model = Model(inputs=[input_main], outputs=predictions)

    return model
```

Check the Runtime environment panel to ensure you have a code environment that supports visual deep learning.

Click Train.

Click Train again to confirm.

Important

There are three hidden layers. First is a Reshape layer, to convert from a shape of (batch_size, window_size) to (batch_size, window_size, dimension).

Since we only have one input variable at each time step, the dimension is 1. After the reshaping, we can stack two layers of LSTM. The output layer is a fully connected layer, Dense, with one output neuron. By default, its activation function is linear, which is appropriate for a regression problem.
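The size of this layer stack can be estimated with simple arithmetic. The sketch below counts trainable parameters, assuming the standard LSTM formulation (four gates, each with input weights, recurrent weights, and one bias vector) and a bias on the Dense layer. The helper names are illustrative, and the counts Keras reports may differ if a different LSTM implementation or configuration is in use.

```python
def lstm_params(input_dim, units):
    """Four gates, each with input weights, recurrent weights, and a bias vector."""
    return 4 * (units * (input_dim + units) + units)


def dense_params(input_dim, units):
    """One weight per input-output pair, plus one bias per output."""
    return input_dim * units + units


layers = [
    ("Reshape to (batch_size, 30, 1)", 0),                      # no weights, only a shape change
    ("LSTM(100, return_sequences=True)", lstm_params(1, 100)),  # sees 1 value per time step
    ("LSTM(100, return_sequences=False)", lstm_params(100, 100)),
    ("Dense(1)", dense_params(100, 1)),
]

total = sum(count for _, count in layers)
for name, count in layers:
    print(f"{name:<36}{count:>8}")
print(f"{'Total':<36}{total:>8}")
```

The second LSTM layer is the largest because its input at each time step is the 100-dimensional output of the first one, rather than a single temperature value.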

Deploy and apply a deep learning model#

Although this is a deep learning model, the process for using it is just like that of any other visual model.

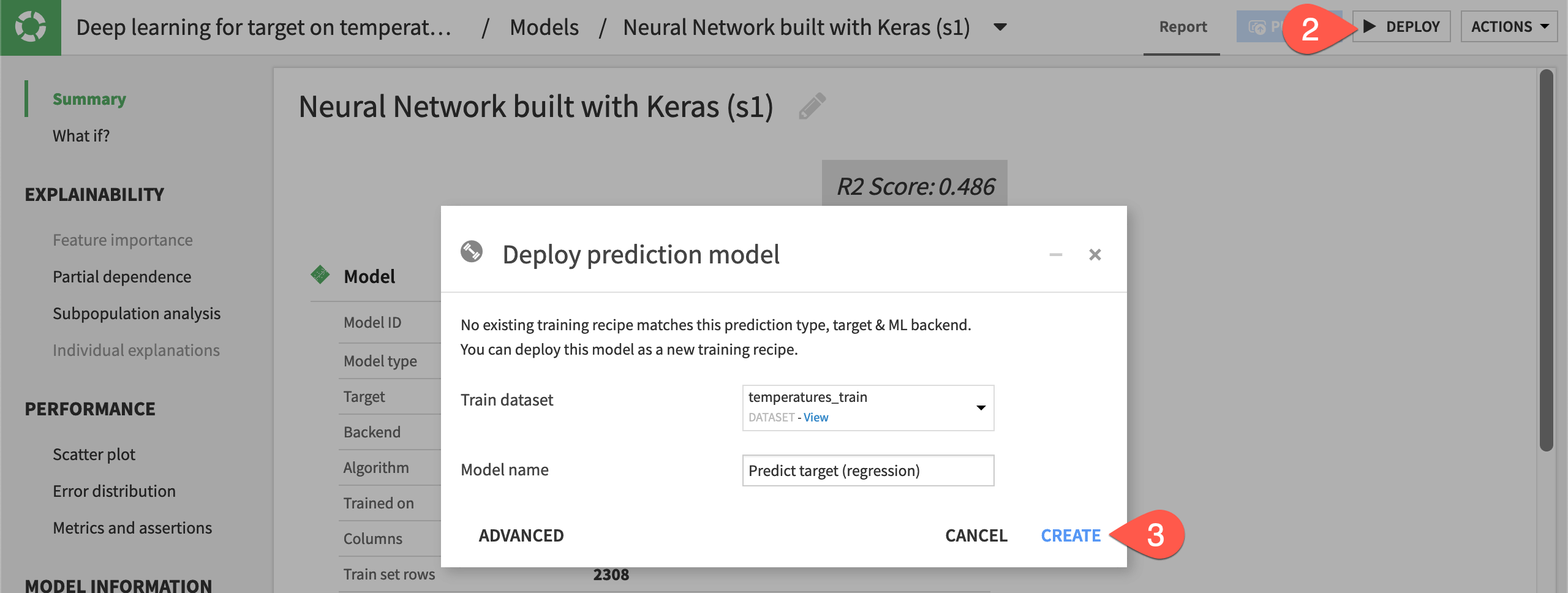

Deploy the model#

We first deploy it from the Lab to the Flow as a saved model.

From the Result tab, open the model report.

Click Deploy.

Click Create.

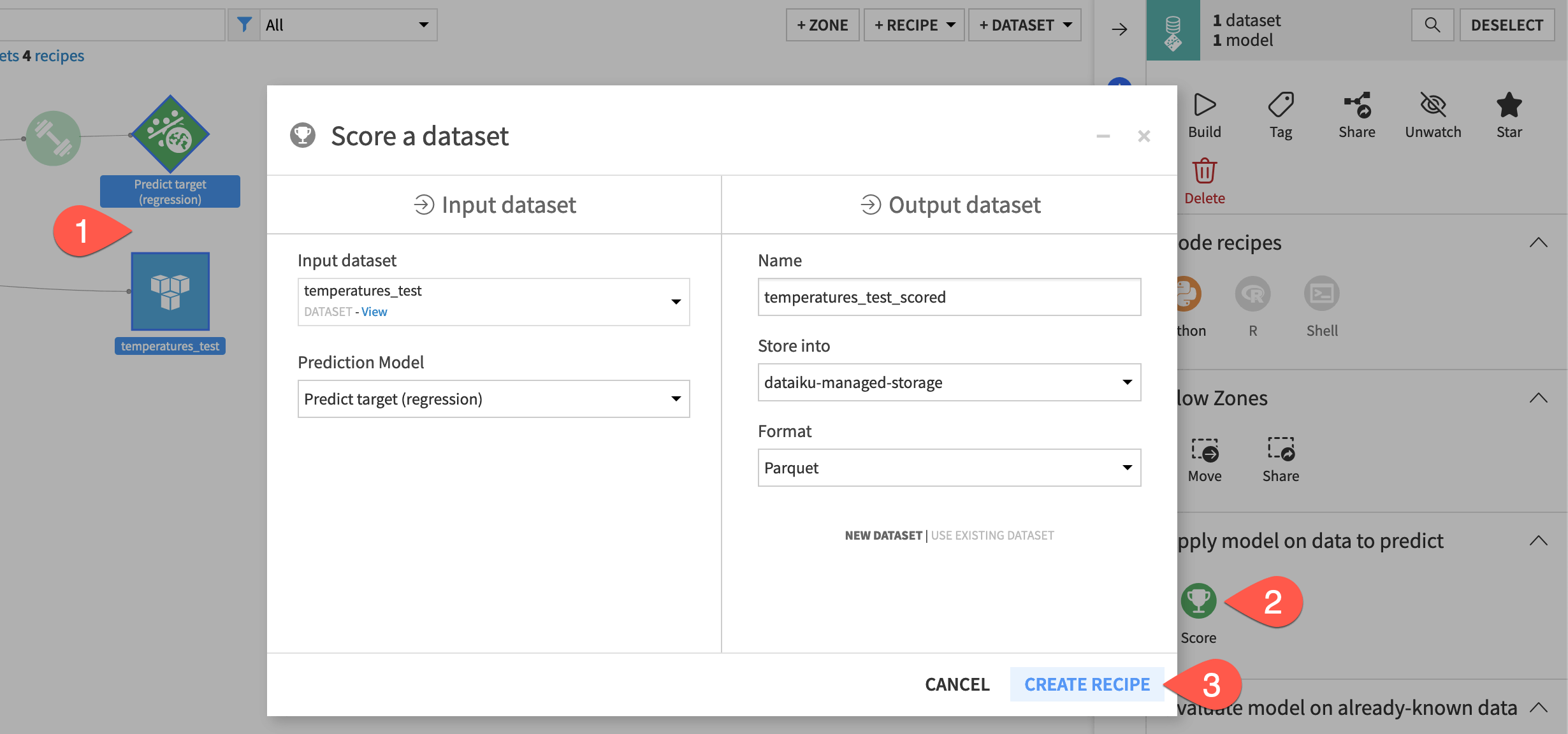

Apply the model to data#

Let’s now use a Score recipe to apply the model to new data.

From the Flow, select the temperatures_test dataset and the saved model.

From the Actions panel, select the Score recipe.

Click Create Recipe.

Click Run, and then open the output dataset.

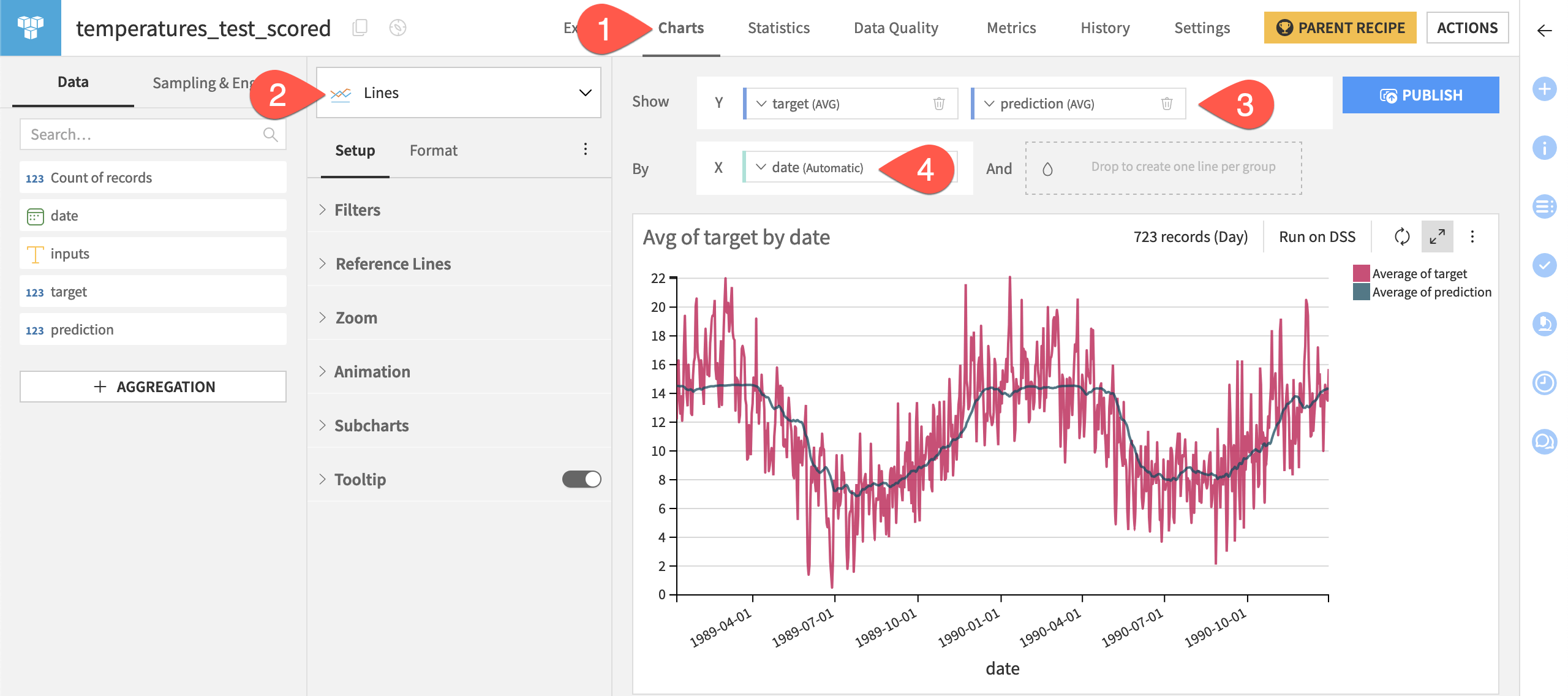

Check the results with a chart#

Lastly, we can check the results with a chart.

From the output dataset of the Score recipe (the scored version of temperatures_test, which contains the prediction column), navigate to the Charts tab.

From the chart picker, select Lines.

Drag target and prediction to the Y axis.

Drag date to the X axis.

Tip

The line chart shows that the model managed to pick up the general trend. It doesn’t perfectly fit the curve because it’s generalizing. The minimum temperature in a country as vast as Australia can fluctuate a lot in a pseudo-random fashion!
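The chart gives a visual check. As a complement, the gap between the target and prediction columns can be summarized with error metrics. The sketch below computes the mean absolute error and root mean squared error on made-up values; the function names and numbers are illustrative assumptions, not results from the tutorial's model.

```python
import math


def mean_absolute_error(actual, predicted):
    """Average size of the errors, in the same unit as the temperatures."""
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


def root_mean_squared_error(actual, predicted):
    """Like MAE, but penalizes large misses more heavily."""
    return math.sqrt(sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual))


# Made-up values standing in for the target and prediction columns
target = [10.0, 12.0, 11.0, 15.0]
prediction = [11.0, 11.0, 11.0, 13.0]

mae = mean_absolute_error(target, prediction)
rmse = root_mean_squared_error(target, prediction)
print(f"MAE: {mae:.3f}  RMSE: {rmse:.3f}")
```

An RMSE noticeably larger than the MAE suggests a few large misses, which is plausible on noisy data where the model mostly tracks the general trend.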

Next steps#

Congratulations! You built a deep learning model on time series data with Dataiku’s visual ML tool. You then deployed it to the Flow and used it for scoring like any other visual model.

Next, you might want to build a deep learning model on structured data by following Tutorial | Deep learning within visual ML.

See also

See the reference documentation on Deep Learning to learn more.