Visitor analysis#

Now let’s attempt to segment visitors into categories and direct customers to the most appropriate channel. Our visitor analysis has the following high-level steps:

Group visits by unique visitors

Segment visitors using a clustering model

Join the cluster labels with customer data

Send the segmented data to appropriate channels for further engagement

Grouping visitors#

Using a similar technique as for referrers, we will now examine the behavior of website visitors across time (sessions).

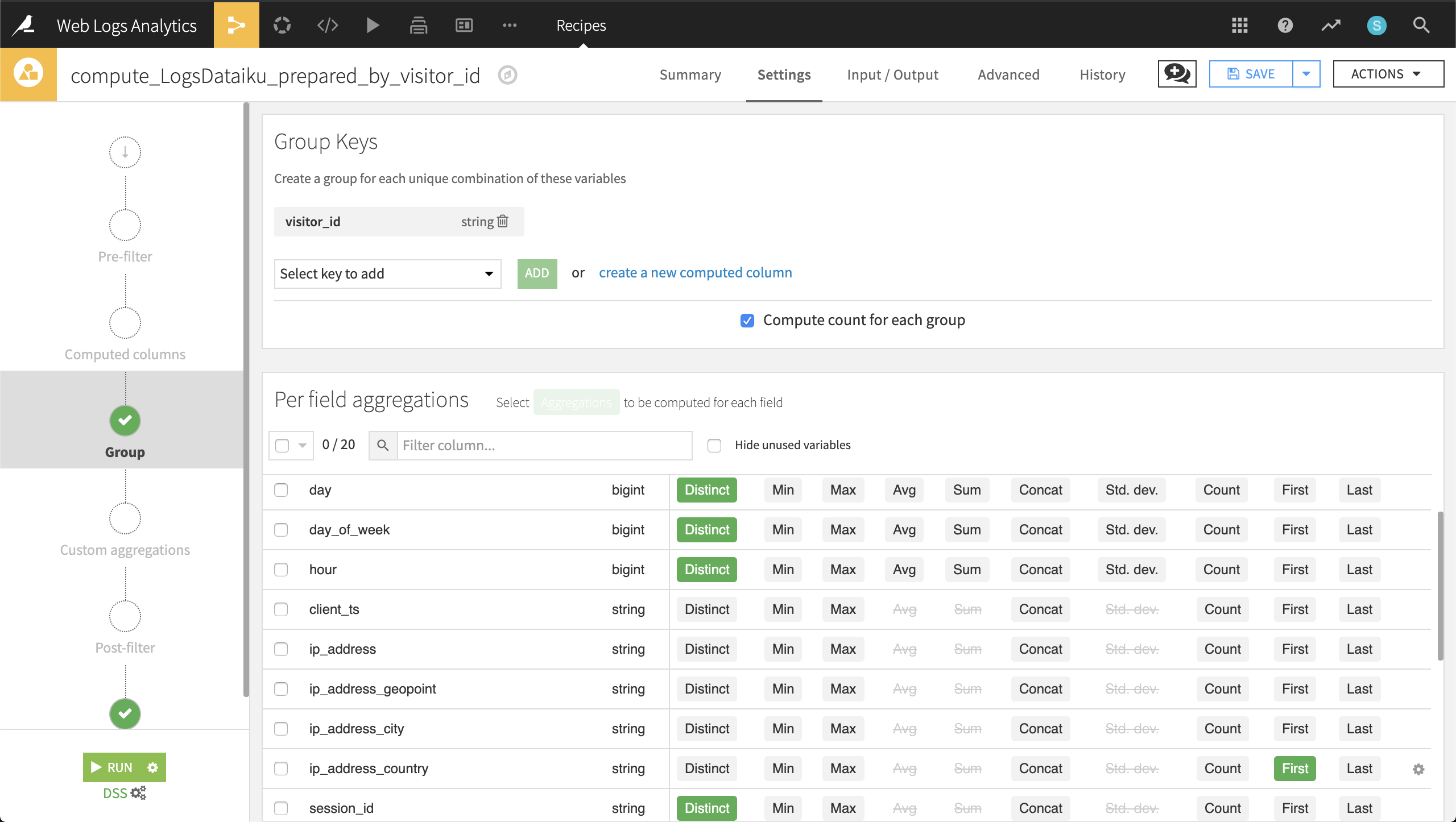

Returning to the LogsDataiku_prepared dataset, create a new Group recipe with visitor_id as the column to group by. Keep the default output name,

LogsDataiku_prepared_by_visitor_id.At the Group step, keep Compute count for each group selected, and add the following per-field aggregations:

For day, day_of_week, hour, session_id, and url: Distinct

For ip_address_country and user_agent_type: First

For blog, applications, home, company, products, and data-science: Sum

Run the recipe, updating the schema to 15 columns.

Now for each unique visitor, we know information such as their IP address, their number of visits, the specific pages they visited, and their device (browser vs. mobile).

Clustering web visitors#

Let’s use this data to cluster visitors into certain categories that may help our colleagues in Marketing and Sales to be more targeted in their outreach efforts.

From the output dataset grouped by visitor_id, go into the Lab and create a Quick Model under Visual analysis.

Then choose a Clustering task and, in a Quick model style, a K-Means clustering model. Keep the default analysis name.

Click Train to train the first model. You don’t need to provide a session name or description.

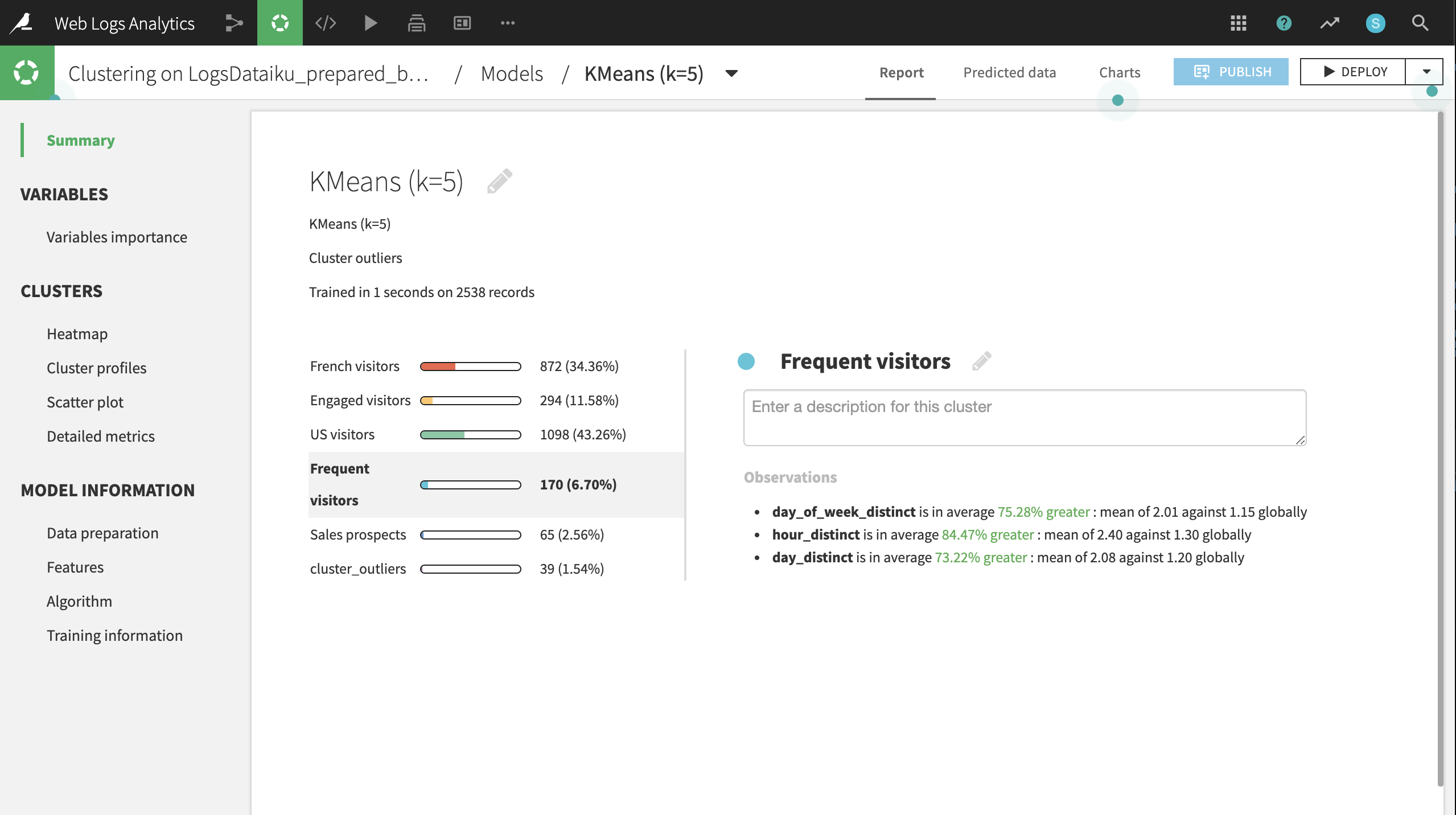

Dataiku will recognize the data types and use Machine Learning best practices when training a default clustering model. Expect the final cluster profiles to vary because of sampling effects, but there will be five primary clusters, plus a sixth cluster containing observations that don’t fit any of the primary clusters.

Clicking on the model name, here KMeans (k=5), brings up the Summary tab, where you can find a wide array of model metrics and information. In the Summary tab, click the pencil icon next to each default cluster name to rename the clusters according to the suggestions below:

Cluster |

Larger-than-usual numbers of: |

|---|---|

US visitors |

Americans |

French visitors |

French |

Frequent visitors |

Distinct visits |

Engaged visitors |

Distinct URLs visited |

Sales prospects |

Visits to the products page |

From the button at the top right, Deploy the model to the Flow as a retrainable model.

Apply it to the LogsDataiku_prepared_by_visitor_id dataset. Keep the default model name.

Selecting the model from the Flow, initiate an Apply recipe. Choose LogsDataiku_prepared_by_visitor_id as the input.

Name the output dataset

LogsDataiku_segmented.Create and run the recipe.

Now, one column, cluster_labels, has been added to the output LogsDataiku_segmented.

Joining the clusters and customer data#

To map these segments to known customers, we’ll need our customer data.

If not already having done so, create a new UploadedFiles dataset from the customer data (CRM.csv.gz) and name it

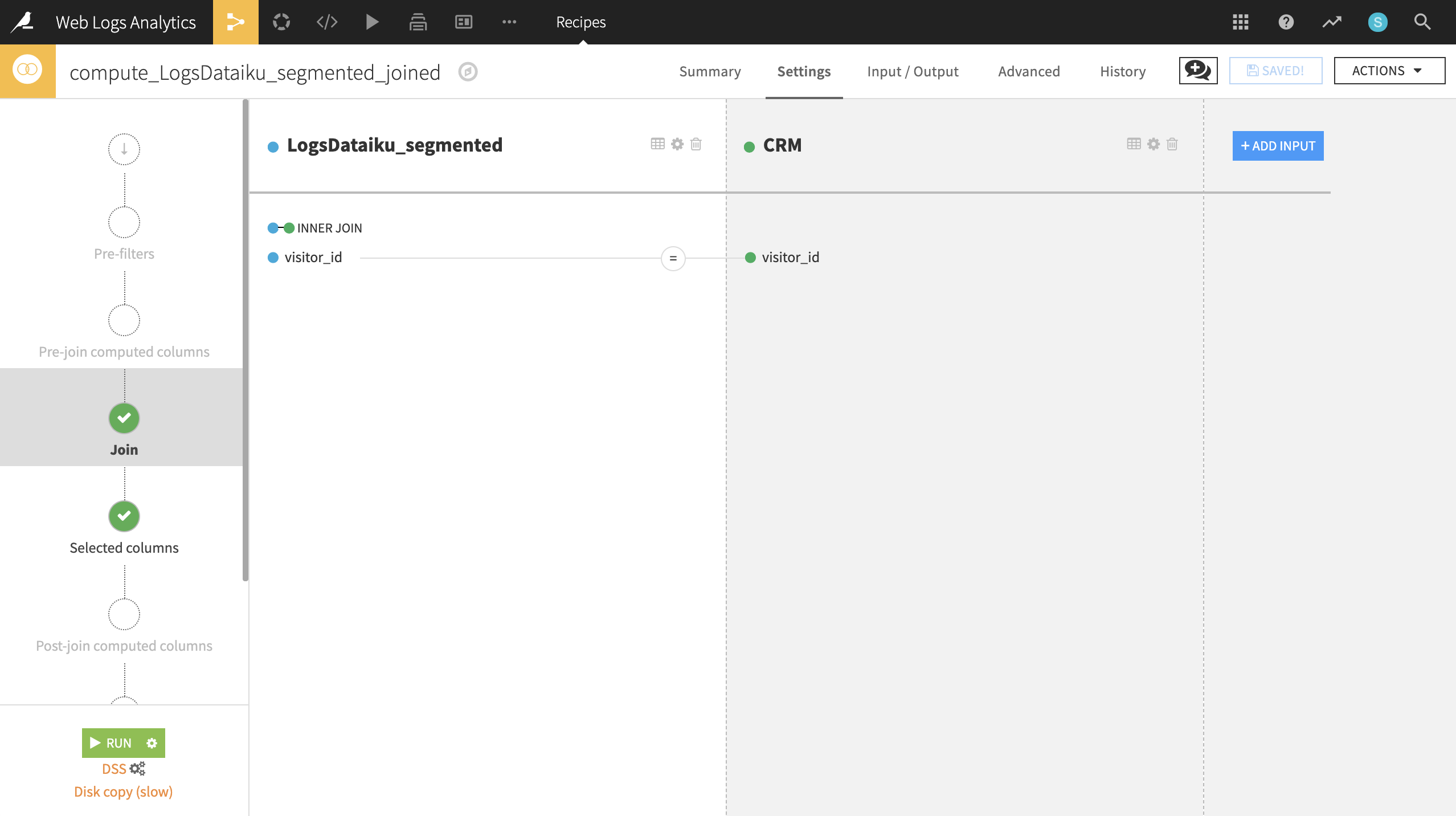

CRM.From LogsDataiku_segmented, initiate a Join recipe, adding CRM as the second input. Keep the default output, LogsDataiku_segmented_joined.

In the Join step, visitor_id should be automatically recognized as the join key. Change the type of join to an Inner Join to keep only records where a customer can be successfully matched with website visits.

After completing the join, expect an output of 5602 rows and 35 columns.

Customizing and activating segments#

Now we want to feed these customers into the right channel: Marketing, Prospective Sales, or Sales.

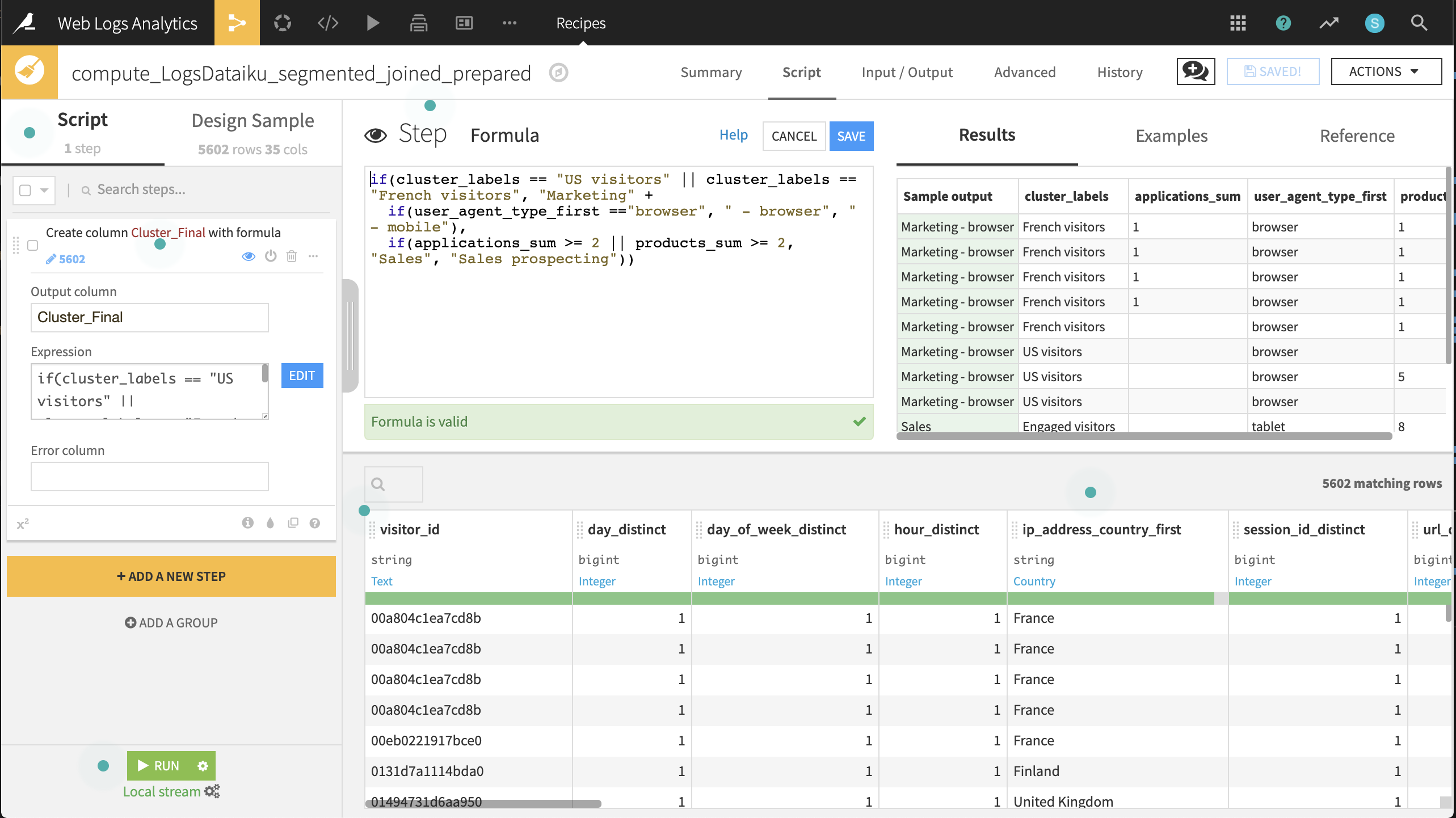

Create a Prepare recipe from the LogsDataiku_segmented_joined dataset. Keep the default output name.

Add a new step with the Formula processor creating a new column, Cluster_Final, using the expression defined below:

if(cluster_labels == "US visitors" || cluster_labels == "French visitors", "Marketing" + if(user_agent_type_first =="browser", " - browser", " - mobile"), if(applications_sum >= 2 || products_sum >= 2, "Sales", "Sales prospecting"))

Run the recipe, updating the schema to 36 columns.

Now let’s split the dataset on this newly created variable so we can ship the smaller subsets to the right teams.

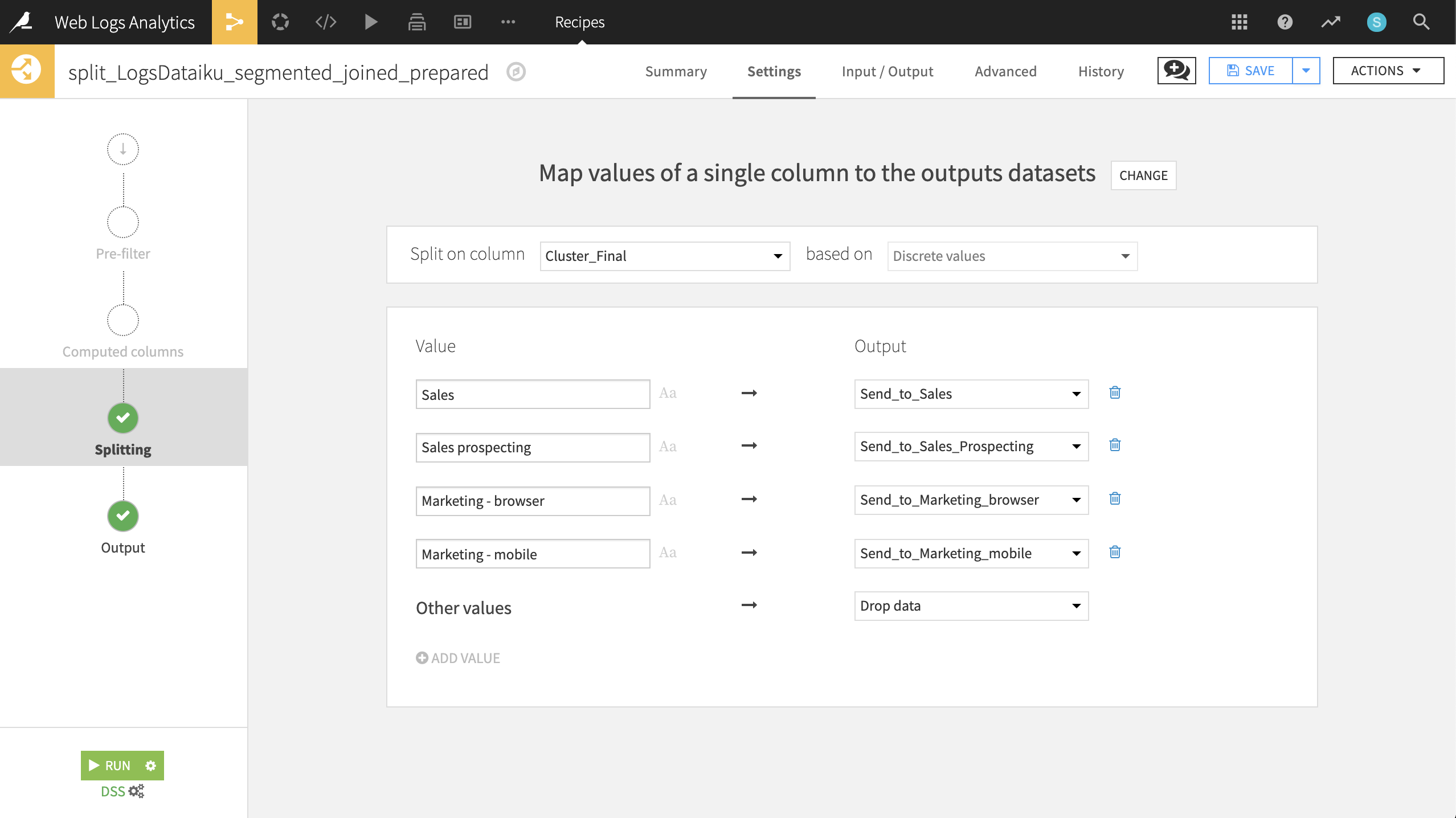

From the output dataset, LogsDataiku_segmented_joined_prepared, create a Split recipe that sends each of the four values of Cluster_Final to individual datasets. Use the names:

Send_to_SalesSend_to_Sales_ProspectingSend_to_Marketing_browserSend_to_Marketing_mobile

Choose Map values of a single column as the Splitting method.

Choose Cluster_Final as the column on which to split.

Add the values and outputs according to the screenshot below.

Run the recipe.

These newly generated datasets are immediately usable by customer-facing team members to send targeted emails!