Concept | Feature generation & reduction

After processing the features, we can revisit the design of our model session and look at two ways to refine our feature set: feature generation and feature reduction.

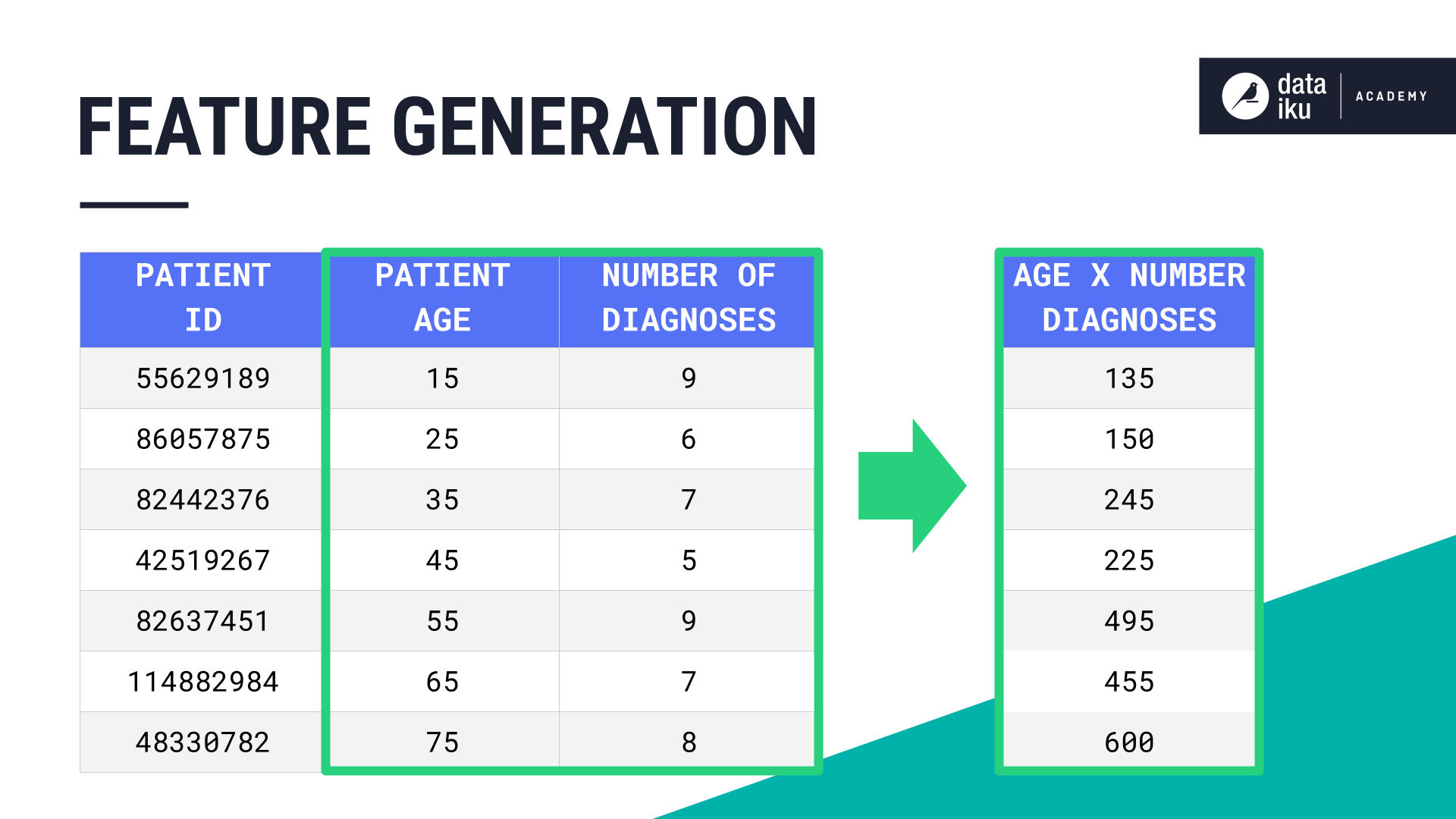

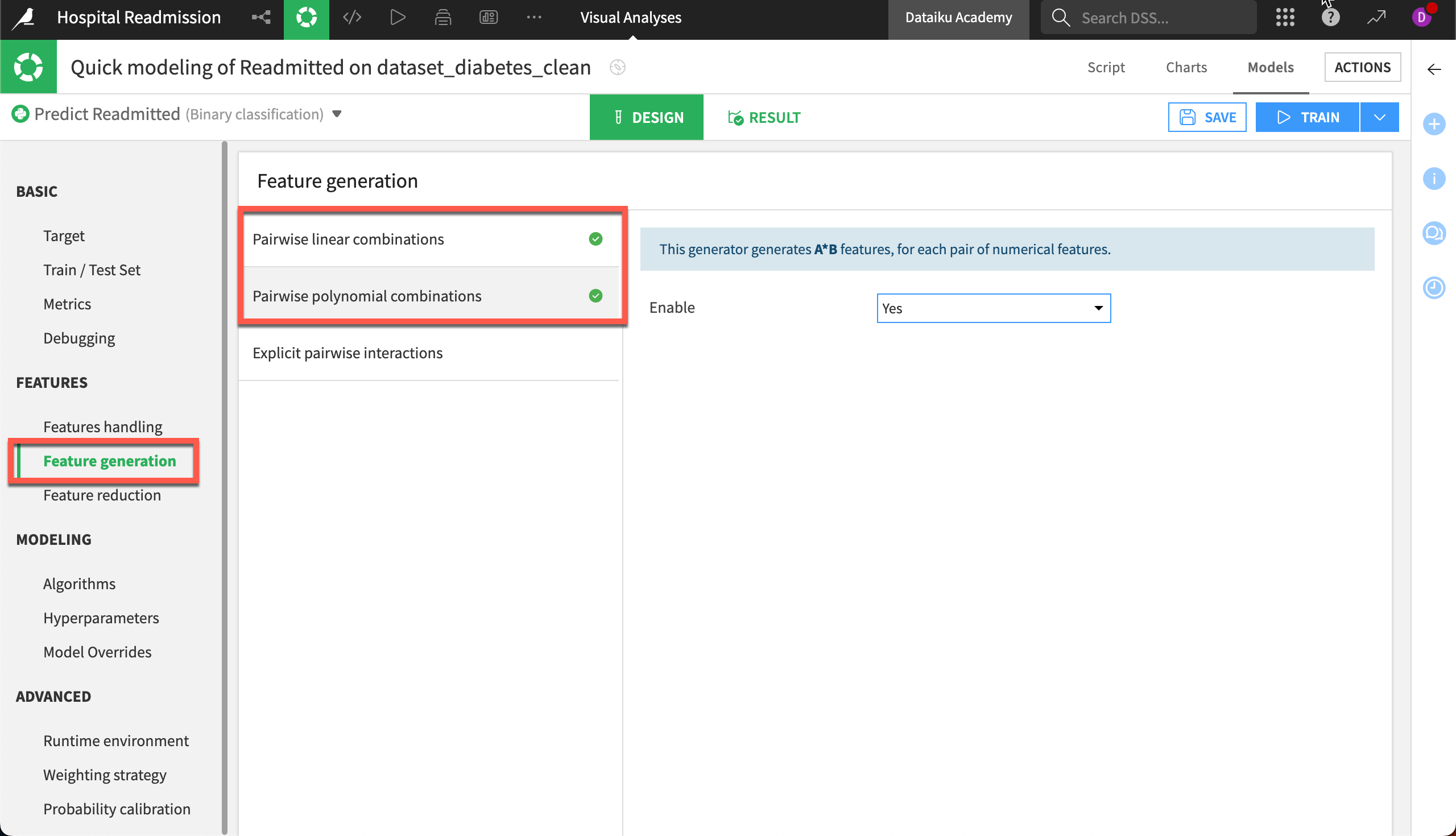

Feature generation

Feature generation is the process of constructing new features from existing ones. The goal of feature generation is to derive new combinations and representations of our data that might be useful to the machine learning model.

For example, by generating polynomial features, we may uncover new relationships between the features and the target that improve the model's performance.
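To illustrate why a generated feature can help, here is a minimal Python sketch on hypothetical toy data (it is not how Dataiku computes anything internally). The target is the product of two features, so neither raw feature is linearly correlated with it, while the generated product feature is perfectly correlated.

```python
import math
from itertools import product


def pearson(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


# Hypothetical toy data: every combination of x1, x2 in {-2, -1, 1, 2},
# with a target that depends only on their product.
grid = list(product([-2, -1, 1, 2], repeat=2))
x1 = [a for a, _ in grid]
x2 = [b for _, b in grid]
y = [a * b for a, b in grid]

# Generated (polynomial) feature: the pairwise product x1 * x2
x1_times_x2 = [a * b for a, b in zip(x1, x2)]

print(round(pearson(x1, y), 6))           # 0.0
print(round(pearson(x2, y), 6))           # 0.0
print(round(pearson(x1_times_x2, y), 6))  # 1.0
```

A linear model fed only `x1` and `x2` would find nothing useful here; the interaction term exposes the relationship directly.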

Dataiku can compute interactions between features, such as pairwise linear combinations, which compute the sum and difference of two numerical features, and pairwise polynomial combinations, which multiply two numerical features. Dataiku builds pairwise polynomial interactions between all pairs of numerical features, so the number of new columns grows quickly: n numerical features yield n(n-1)/2 pairs. To reduce the resulting number of new columns, we can specify only the pairwise interactions we're interested in.
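The sketch below shows what these combinations compute for a single record, assuming the record is a plain Python dict. The function name `pairwise_interactions`, the column names, and the `a+b` / `a-b` / `a*b` naming scheme are hypothetical illustrations, not Dataiku's own column names or API.

```python
from itertools import combinations


def pairwise_interactions(row, numeric_cols, pairs=None):
    """Build pairwise linear (sum, difference) and polynomial (product) features.

    row: mapping of column name -> numeric value (one record)
    numeric_cols: the numerical columns to combine when no pairs are given
    pairs: optional explicit list of (a, b) pairs; defaults to all pairs
    """
    if pairs is None:
        pairs = list(combinations(numeric_cols, 2))
    new_features = {}
    for a, b in pairs:
        new_features[f"{a}+{b}"] = row[a] + row[b]  # linear: sum
        new_features[f"{a}-{b}"] = row[a] - row[b]  # linear: difference
        new_features[f"{a}*{b}"] = row[a] * row[b]  # polynomial: product
    return new_features


# Hypothetical record and numerical columns
row = {"age": 40, "income": 3.5, "tenure": 6}
cols = ["age", "income", "tenure"]

all_pairs = pairwise_interactions(row, cols)
print(len(all_pairs))  # 3 pairs x 3 combinations = 9 new columns

# Restricting to the one interaction we care about keeps the output small
print(pairwise_interactions(row, cols, pairs=[("age", "income")]))
# {'age+income': 43.5, 'age-income': 36.5, 'age*income': 140.0}
```

The growth is quadratic: with 50 numerical columns there are 50 × 49 / 2 = 1,225 pairs, which in this sketch means 3,675 new columns. Listing explicit pairs avoids that blow-up.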

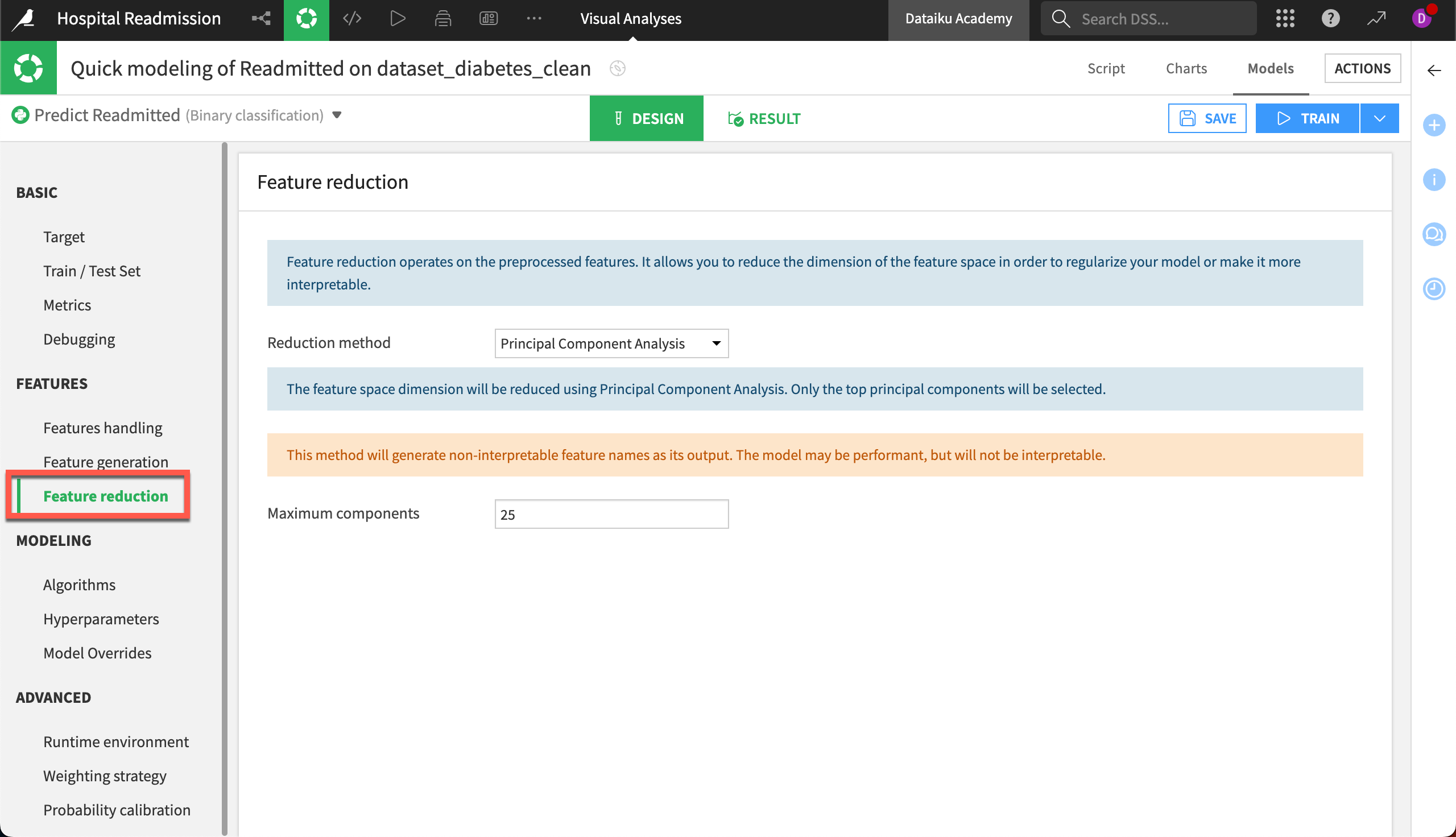

Feature reduction

Feature reduction is the process of reducing the dimension of the feature space. Its goal is to reduce the number of features our model has to ingest while retaining as much important information as possible.

The following feature reduction techniques are available:

The Correlation with target technique selects only the features most correlated with the target.
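A minimal sketch of correlation-based selection, assuming features are ranked by the absolute value of their Pearson correlation with the target and the top `k` are kept. Both the absolute-value ranking and the parameter `k` are assumptions of this sketch; Dataiku's exact criterion may differ. The column names are hypothetical.

```python
import math


def pearson(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def select_by_correlation(columns, target, k):
    """Keep the k features with the largest |correlation| with the target."""
    scores = {name: abs(pearson(vals, target)) for name, vals in columns.items()}
    return sorted(scores, key=scores.get, reverse=True)[:k]


# Hypothetical toy data
target = [1, 2, 3, 4, 5]
columns = {
    "x1": [2, 4, 6, 8, 10],  # perfectly positively correlated (r = 1.0)
    "x2": [5, 3, 4, 2, 1],   # strongly negatively correlated (r = -0.9)
    "x3": [1, 3, 2, 3, 1],   # uncorrelated (r = 0.0)
}
print(select_by_correlation(columns, target, k=2))  # ['x1', 'x2']
```

Ranking on the absolute value keeps strongly negative relationships such as `x2`, which are just as informative as positive ones.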

With Principal Component Analysis (PCA), the features are projected onto principal components, and only the top components (those explaining the most variance) are selected.
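Unlike the other techniques, PCA does not keep a subset of the original columns: it replaces them with new columns that are linear combinations of the originals. Here is a minimal NumPy sketch via singular value decomposition, assuming the data only needs centring (whether to also rescale features first is a separate choice). The function name `pca_reduce` and the toy data are hypothetical.

```python
import numpy as np


def pca_reduce(X, n_components):
    """Project X onto its top principal components.

    Returns the projected data and the fraction of total variance
    explained by each kept component.
    """
    X = np.asarray(X, dtype=float)
    X_centered = X - X.mean(axis=0)
    # Rows of Vt are the principal directions; NumPy returns the singular
    # values s in decreasing order, so the first rows explain the most variance.
    _, s, Vt = np.linalg.svd(X_centered, full_matrices=False)
    components = Vt[:n_components]
    explained_ratio = s[:n_components] ** 2 / np.sum(s ** 2)
    return X_centered @ components.T, explained_ratio


# Hypothetical toy data: the third column is the sum of the first two,
# so two components capture all of the variance.
X = [[1, 2, 3], [2, 1, 3], [3, 5, 8], [4, 3, 7], [5, 4, 9]]
Z, ratio = pca_reduce(X, n_components=2)
print(Z.shape)                       # (5, 2)
print(round(float(ratio.sum()), 6))  # 1.0
```

The `n_components` argument plays the role of the "number of principal components to keep" setting.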

The Tree-based technique trains a Random Forest model to predict the target, and only the features with the highest importance in that model are selected.

With the LASSO regression technique, Dataiku trains a LASSO model to predict the target, and only the features with non-zero coefficients are selected.
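The reason LASSO doubles as a feature selector is its L1 penalty, which drives the coefficients of weak features to exactly zero. Below is a minimal NumPy sketch using cyclic coordinate descent, assuming standardised features, a centred target, no intercept, and no constant columns. The penalty strength `alpha`, the helper names, and the toy data are hypothetical; Dataiku's own LASSO settings may differ.

```python
import numpy as np


def soft_threshold(z, t):
    """Shrink z towards zero by t, returning exactly zero when |z| <= t."""
    return np.sign(z) * max(abs(z) - t, 0.0)


def select_by_lasso(columns, target, alpha, n_iter=100):
    """Return the names of features whose LASSO coefficient is non-zero.

    Minimises (1 / (2n)) * ||y - Xw||^2 + alpha * ||w||_1 by coordinate descent.
    """
    names = list(columns)
    X = np.column_stack([np.asarray(columns[name], dtype=float) for name in names])
    X = (X - X.mean(axis=0)) / X.std(axis=0)  # each column: mean 0, variance 1
    y = np.asarray(target, dtype=float)
    y = y - y.mean()
    n_samples, n_features = X.shape
    w = np.zeros(n_features)
    for _ in range(n_iter):
        for j in range(n_features):
            # Residual with feature j's current contribution added back in
            partial_residual = y - X @ w + X[:, j] * w[j]
            rho = X[:, j] @ partial_residual / n_samples
            # Standardised columns have X[:, j] @ X[:, j] / n_samples == 1
            # (up to rounding), so no extra division is needed here.
            w[j] = soft_threshold(rho, alpha)
    return [name for name, coef in zip(names, w) if coef != 0.0]


# Hypothetical toy data: strong dependence on "a", weak on "b", none on "c"
columns = {
    "a": [1, 2, 3, 4, 5, 6, 7, 8],
    "b": [1, 1, -1, -1, -1, -1, 1, 1],
    "c": [1, -1, -1, 1, 1, -1, -1, 1],
}
target = [2 * a + 0.1 * b for a, b in zip(columns["a"], columns["b"])]
print(select_by_lasso(columns, target, alpha=0.5))   # ['a']
print(select_by_lasso(columns, target, alpha=0.05))  # ['a', 'b']
```

A larger `alpha` zeroes out more coefficients, so the penalty strength directly controls how many features survive.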

We can also set the parameters of the chosen feature reduction technique. For example, for PCA we can choose how many principal components to keep.