Concept | Interpretability

A key aspect of responsible model experimentation is being able to understand or interpret the way in which a model has determined its predictions.

Interpreting a model means understanding the general mechanics of association between the input variables and the outcome. (Note: This isn’t the same as causal inference; an association doesn’t show that a variable causes the outcome.) This interpretation happens at the global model level, providing context on how the model makes predictions overall. It can be applied to even the most “black box” of models.
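One common way to get a global view of an opaque model is permutation importance: shuffle one feature at a time and measure how much the prediction error grows. The sketch below is only an illustration of that idea, not a method prescribed by this article. The `black_box_predict` function, the synthetic data, and all parameter values are hypothetical.

```python
import random

def black_box_predict(row):
    # Hypothetical opaque model: we only call it and never inspect it.
    # It happens to use features 0 and 1 and to ignore feature 2.
    return 3.0 * row[0] + row[1] ** 2

def mean_squared_error(rows, targets, predict):
    return sum((predict(r) - t) ** 2 for r, t in zip(rows, targets)) / len(rows)

def permutation_importance(rows, targets, predict, n_repeats=20, seed=0):
    """Average increase in error when each feature column is shuffled."""
    rng = random.Random(seed)
    baseline = mean_squared_error(rows, targets, predict)
    importances = []
    for j in range(len(rows[0])):
        increases = []
        for _ in range(n_repeats):
            # Shuffle column j only, which breaks its link to the target.
            column = [r[j] for r in rows]
            rng.shuffle(column)
            shuffled = [r[:j] + [v] + r[j + 1:] for r, v in zip(rows, column)]
            increases.append(mean_squared_error(shuffled, targets, predict) - baseline)
        importances.append(sum(increases) / n_repeats)
    return importances

# Synthetic data (assumption): three features drawn uniformly from [-1, 1].
data_rng = random.Random(42)
rows = [[data_rng.uniform(-1, 1) for _ in range(3)] for _ in range(200)]
targets = [black_box_predict(r) for r in rows]

importances = permutation_importance(rows, targets, black_box_predict)
for j, score in enumerate(importances):
    print(f"feature {j}: importance {score:.3f}")
```

A larger score means the model leans more heavily on that feature overall. The scores describe association within this data, not causation, and they say nothing about why any single record received its prediction.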

Model interpretability is often discussed in relation to black box models, that is, models that don’t provide much insight into the way features and distributions affect final predictions.

There has been a recent push toward using what some call inherently interpretable models, which provide either variable importance or coefficients that describe how much each feature influences outcomes at a global level. Interpretable models include linear and logistic regression, other generalized linear models (GLMs), decision trees (including random forest models), and naive Bayes classifiers.
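To make the idea of coefficients as a global description concrete, here is a minimal sketch that reads the coefficients of a logistic regression as odds ratios. The feature names and coefficient values are hypothetical and are not taken from any real model.

```python
import math

# Hypothetical fitted coefficients: change in log-odds per one-unit increase.
coefficients = {
    "age": 0.04,
    "num_prior_purchases": 0.70,
    "days_since_last_visit": -0.10,
}

def odds_ratios(coefs):
    """exp(coefficient) is the multiplicative change in the odds per unit increase."""
    return {name: math.exp(beta) for name, beta in coefs.items()}

def rank_by_magnitude(coefs):
    """Order features by absolute coefficient size, largest first."""
    return sorted(coefs, key=lambda name: abs(coefs[name]), reverse=True)

ratios = odds_ratios(coefficients)
for name in rank_by_magnitude(coefficients):
    print(f"{name}: coefficient {coefficients[name]:+.2f}, odds ratio {ratios[name]:.2f}")
```

An odds ratio above 1 means the feature pushes predictions toward the positive class, and a ratio below 1 pushes them away from it. Ranking by magnitude is only meaningful when the features are on comparable scales, for example after standardization.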

Don’t be fooled! Interpretability is distinct from the notion of explainability.

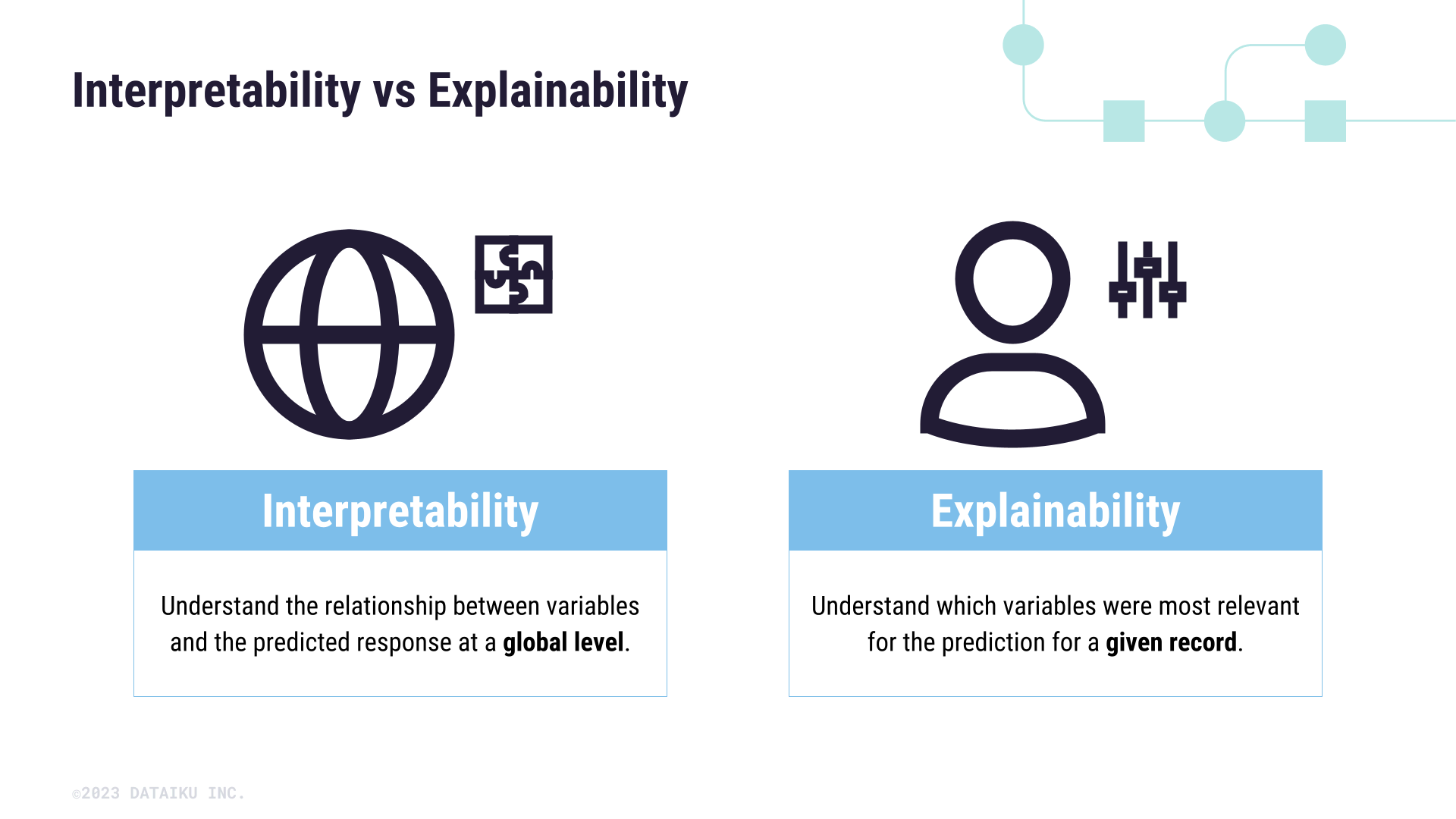

Interpretability is understanding the relationship between variables and the predicted response at a global level.

Explainability is understanding which variables were most relevant for the prediction for a given record.

In other words, explainability encompasses knowing why a model made a specific prediction on a record.
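The difference between the two views can be sketched with a simple linear model. The weights are the same for every record (the global, interpretability view), while the contribution of each feature depends on the values of one specific record (the local, explainability view). The model, feature names, and values below are hypothetical, and measuring contributions against a baseline record is just one simple convention.

```python
# Hypothetical linear model: prediction = intercept + sum(weight * value).
intercept = 10.0
weights = {"sqft": 0.05, "bedrooms": 2.0, "age_years": -0.3}

def predict(record):
    return intercept + sum(weights[f] * record[f] for f in weights)

def global_view():
    """Interpretability: one description that holds for every record."""
    return dict(weights)

def local_contributions(record, baseline):
    """Explainability: how much each feature moved this record's prediction
    away from the prediction for a baseline record (for example, dataset averages)."""
    return {f: weights[f] * (record[f] - baseline[f]) for f in weights}

baseline = {"sqft": 1500, "bedrooms": 3, "age_years": 20}  # assumed reference record
record = {"sqft": 2000, "bedrooms": 2, "age_years": 5}     # the record to explain

contributions = local_contributions(record, baseline)
top_feature = max(contributions, key=lambda f: abs(contributions[f]))

print("Global weights:", global_view())
print("Contributions for this record:", contributions)
print("Most relevant feature for this record:", top_feature)
```

For a linear model, the contributions add up to the gap between the record's prediction and the baseline prediction. A different record could have a different most relevant feature even though the global weights never change.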

Both are useful, and necessary, in the scope of Responsible AI. However, they come into play at different stages of the AI lifecycle.