Concept | Time series forecasting

Time series forecasting is a type of predictive modeling that you can perform natively in Dataiku.

Time series data can serve a variety of objectives, but many use cases fall under the category of forecasting. Time series forecasting can be used to predict weather, disease transmission, traffic patterns, real estate costs, and much more. In this article, we'll learn about forecasting models through a weather prediction use case.

Use case

Let's say we want to predict the 7-day moving average temperature for a few different cities. Let's also assume that we already have a dataset with daily temperatures from the past two years.

After calculating the moving average, we can start building a forecasting model. There are some key decisions we need to make in the model building process, so let’s learn a bit about model design.

Note

In this case, the moving average serves to smooth out the data and reduce noise and the influence of outliers. You can learn more about preparing a dataset with a moving average in Concept | Time series windowing. For more general information about time series datasets, visit Time Series.
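As a rough, code-based illustration of that preparation step (not the visual method described in Concept | Time series windowing), the pandas sketch below computes a trailing 7-day moving average separately for each city. The column names (`city`, `date`, `temperature`, `temp_ma7`) and the temperature values are made up for the example.

```python
import pandas as pd

# Illustrative data only: 10 days of made-up daily temperatures for two cities.
dates = pd.date_range("2024-01-01", periods=10, freq="D")
df = pd.DataFrame({
    "city": ["Paris"] * 10 + ["Oslo"] * 10,
    "date": list(dates) * 2,
    "temperature": [float(t) for t in range(10)] + [float(t) for t in range(10, 20)],
})

# Rolling windows depend on row order, so sort by city and date first.
df = df.sort_values(["city", "date"]).reset_index(drop=True)

# Trailing 7-row mean computed within each city. The first 6 rows of each
# city are NaN because a full 7-day window isn't available yet.
df["temp_ma7"] = (
    df.groupby("city")["temperature"]
      .transform(lambda s: s.rolling(window=7).mean())
)
print(df.tail(3))
```

A 7-row window equals 7 days only if each city has exactly one row per day with no missing dates. Gaps in the data would need to be filled or handled separately.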

Important parameters

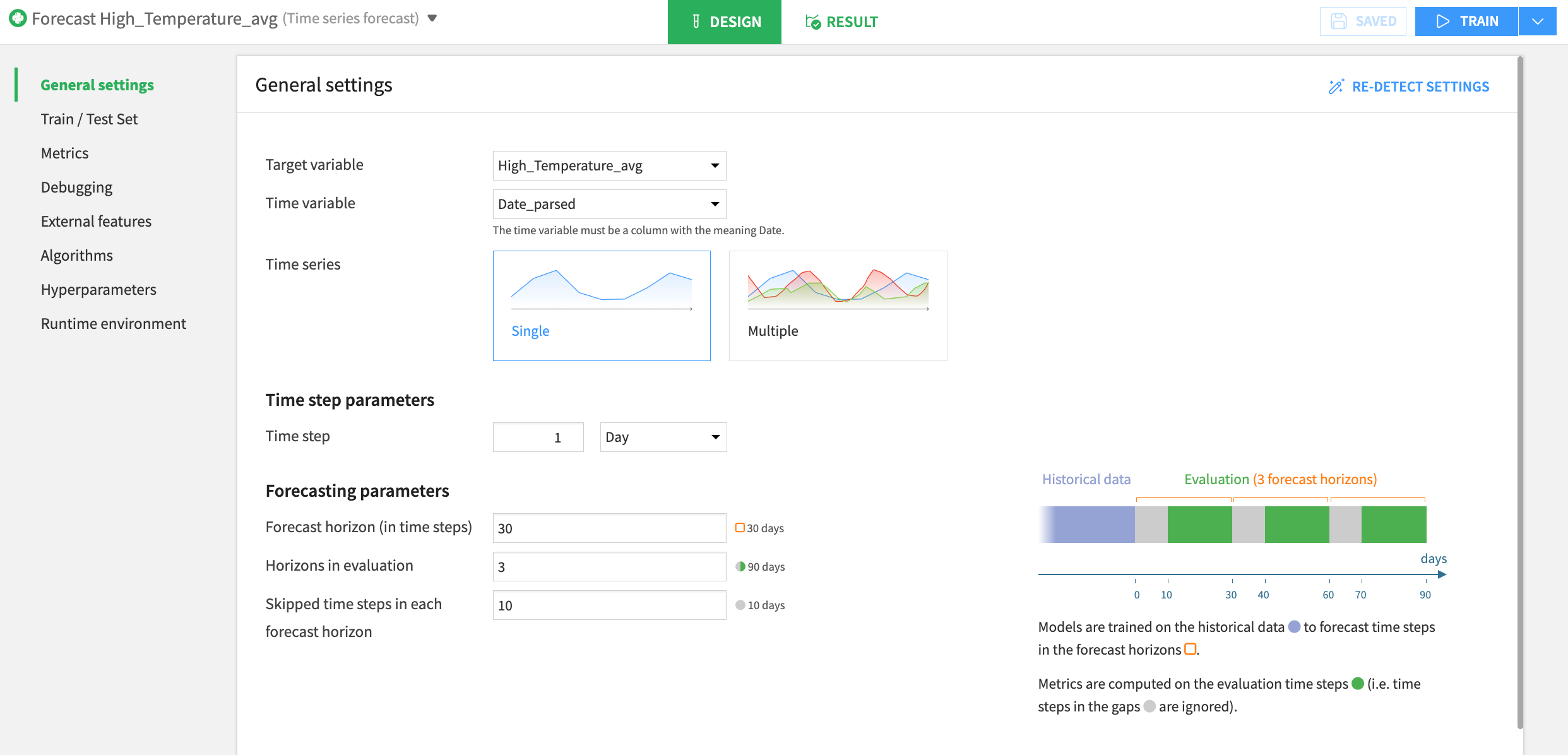

Before training a time series forecasting model in Dataiku, there are a few model parameters to configure. Let’s review an example using our weather forecasting use case.

| Parameter | Description |
|---|---|
| Time step | The time step defines the interval between consecutive observations in the chronological data sequence. In our case, we have daily temperatures, so we want the time step to be set to one day. Another option would be to choose a weekly time step if we only wanted to predict one average per week. |
| Forecast horizon | The forecast horizon determines the length of the forecast: the future time period for which the model will generate predictions. Short-term forecasts are often more accurate than long-term forecasts, so we have to keep that in mind when choosing our forecast horizon. In this case, since we have a good amount of historical data, we can set our forecast horizon to 30 time steps. With a daily time step, this means the model will generate predictions for the next 30 days, or approximately one month. A good guideline is that each time series should contain at least two horizons' worth of data. So, if the horizon is 30 days, we should have at least 60 days of training data. |
| Horizons in evaluation | We also have to decide how much of our data to use for evaluating the model, expressed as a number of forecast horizons. One could argue that since weekly temperature patterns are fairly consistent, our model should remain accurate for at least 30 days, or one forecast horizon. However, we might want to account for longer-term changes, such as global warming. In this case, we might decide to check whether our model still performs well over 90 days. This would put the number of horizons in evaluation at 3 (3 × 30 = 90 days). Note that the date of your last horizon can't be past the last date in your time series data. |
| Skipped time steps in each forecast horizon | This value determines the number of time steps within each horizon to skip during model evaluation. In this case, we might not need to evaluate the model on every single time step of the 90-day evaluation period. |
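To make the arithmetic in the table concrete, here is a small Python sketch that checks a set of choices against the guidelines above. For illustration, it assumes that the evaluation period is the last `horizon × horizons_in_evaluation` time steps of each series and that everything before it is available for training. It also assumes that skipping a full horizon or more makes no sense. `check_settings` is a hypothetical helper, not part of Dataiku.

```python
def check_settings(n_observations, horizon, horizons_in_evaluation, skipped_steps=0):
    """Return a list of problems with the chosen settings (empty list = OK).

    Hypothetical helper. Assumes the evaluation period is the last
    horizon * horizons_in_evaluation time steps of the series.
    """
    problems = []
    eval_steps = horizon * horizons_in_evaluation
    train_steps = n_observations - eval_steps

    # The last evaluated horizon can't extend past the end of the data.
    if eval_steps > n_observations:
        problems.append("evaluation period extends past the available data")
    # Rule of thumb from the table: at least two horizons of training data.
    if train_steps < 2 * horizon:
        problems.append("less than two horizons of training data")
    # Assumption: at least one step per horizon must remain to be evaluated.
    if not 0 <= skipped_steps < horizon:
        problems.append("skipped time steps must be smaller than the horizon")
    return problems


# Two years of daily data, a 30-day horizon, and 3 horizons in evaluation.
print(check_settings(730, horizon=30, horizons_in_evaluation=3))  # []
```

With about 730 daily observations, the evaluation period covers the last 90 days and 640 days remain for training, comfortably above the 60-day guideline.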

Algorithms

Another thing to consider before training a model is which algorithm to use.

Dataiku lets you train using a few different algorithms at a time so you can compare results. You can learn about the different algorithms available for time series forecasting in the reference documentation.

When choosing an algorithm for your forecasting, it’s important to consider model complexity and interpretability as well as training times. Deep learning models and statistical models such as ARIMA and Prophet will take longer to train than simple seasonality models.
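To see why simple seasonality models are so cheap to train, consider a seasonal naive forecast, one common baseline of this kind: each future value repeats the value observed one season earlier. The sketch below is a generic Python illustration, not Dataiku's implementation. The `season_length` parameter (for example, 7 for a weekly pattern in daily data) is our own assumption.

```python
def seasonal_naive_forecast(history, season_length, horizon):
    """Forecast by repeating the last observed season (generic sketch)."""
    if len(history) < season_length:
        raise ValueError("need at least one full season of history")
    last_season = history[-season_length:]
    # Forecast step h (0-based) reuses the value at the same position
    # in the most recent season, cycling if the horizon is longer.
    return [last_season[h % season_length] for h in range(horizon)]


print(seasonal_naive_forecast(list(range(1, 11)), season_length=7, horizon=10))
# [4, 5, 6, 7, 8, 9, 10, 4, 5, 6]
```

This model has no fitting step at all. By contrast, ARIMA or Prophet must estimate parameters from the history before producing a forecast.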

Features

The algorithm you choose will also depend on whether you have additional external features that might be informative for your forecasts. In our example, the moving average temperature might depend on precipitation or humidity levels, so we can include those features as additional predictors in our model. From the target time series itself, we can also derive new features, such as multiple lagged features (lag=1, lag=2, etc.) or running averages over a certain period of time. Running averages help reduce noise in the predictors and often make forecasts more accurate.
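The lagged and running-average features described above can be sketched with pandas `shift` and `rolling`. The series values and column names are illustrative assumptions. Note the `shift(1)` before the rolling mean: it restricts each row's average to past values, so the feature doesn't leak the value the model is supposed to predict.

```python
import pandas as pd

# Illustrative target series (e.g. one city's 7-day moving average temperature).
temps = pd.Series([10.0, 12.0, 11.0, 13.0, 15.0, 14.0], name="temp_ma7")

features = pd.DataFrame({
    "target": temps,
    "lag_1": temps.shift(1),  # value one time step earlier
    "lag_2": temps.shift(2),  # value two time steps earlier
    # Shift first so the 3-step average only uses strictly past values.
    "rolling_mean_3": temps.shift(1).rolling(window=3).mean(),
})
print(features)
```

The first rows contain NaN values because not enough history exists yet. With several cities, the same operations would be applied within each city's series.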

You can apply similar preprocessing steps for your time series features as you would for usual regression or classification problems in the Lab.

If you use external features in your model, you will need to provide their future values before running your forecast. In other words, to forecast the average temperature for the next 30 days (our forecast horizon), we will need to provide 30 future humidity values if we decide to use humidity as a predictor in our model.
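As a sketch of what providing future values means in practice, the hypothetical helper below pairs each future time step in the horizon with a humidity value. It refuses to proceed if the number of values doesn't match the horizon. It assumes a daily time step and a `date`/`humidity` column layout chosen for the example. It is not Dataiku's scoring API.

```python
import pandas as pd


def build_future_frame(last_date, horizon, future_humidity):
    """Hypothetical helper: one humidity value per future daily time step."""
    if len(future_humidity) != horizon:
        raise ValueError(
            f"expected {horizon} future humidity values, got {len(future_humidity)}"
        )
    # Future dates start the day after the last observed date.
    future_dates = pd.date_range(
        start=pd.Timestamp(last_date) + pd.Timedelta(days=1),
        periods=horizon,
        freq="D",
    )
    return pd.DataFrame({"date": future_dates, "humidity": future_humidity})


frame = build_future_frame("2024-12-31", 30, [0.6] * 30)
print(frame.head(3))
```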

Note

Some of the simple models in Dataiku can’t incorporate external features.

Cross-validation

We can also enable cross-validation during training to evaluate model performance more accurately. How does this work?

In a typical regression or classification problem, K-fold cross-validation randomly splits the data into K folds. At each iteration, one fold is used as test data and the remaining folds are used for training. With time series, a random split would let the model train on future values and be tested on past ones, so the folds must respect chronological order.

Instead of splitting the data just once into training and testing sets, K-fold cross-validation repeats the split K times. In each fold:

We train the model on all the data before a cutoff point.

Then, we test the model's accuracy by measuring how well it predicts the next chunk of data after the cutoff, one forecast horizon long.

We slide the cutoff forward by one chunk: the chunk we just tested on becomes part of the training data, and the following chunk becomes the new test data. In the final fold, the model is trained on all the data except the last chunk.

Cross-validation is especially useful when you have limited data. For our temperature forecast, one could argue that with two years of daily data, there's probably no need to invest extra time in cross-validation.
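The fold procedure described above can be sketched as an expanding-window split over time-ordered indices. This is a generic Python illustration under our own assumptions: each test chunk is one horizon long, and the folds are laid out so that the last fold tests on the final chunk. Dataiku's K-fold cross-test may differ in its details, which the reference documentation covers.

```python
def expanding_window_folds(n_observations, horizon, n_folds):
    """Return (train_indices, test_indices) pairs for time-ordered folds.

    Generic expanding-window sketch: each fold trains on everything before
    its cutoff and tests on the next `horizon` steps. The cutoff moves
    forward by one horizon per fold, so earlier test chunks join training.
    """
    first_cutoff = n_observations - n_folds * horizon
    if first_cutoff <= 0:
        raise ValueError("not enough data for this many folds")
    folds = []
    for k in range(n_folds):
        cutoff = first_cutoff + k * horizon
        train = list(range(0, cutoff))
        test = list(range(cutoff, cutoff + horizon))
        folds.append((train, test))
    return folds


for train, test in expanding_window_folds(10, horizon=2, n_folds=3):
    print(len(train), test)
```

With 10 observations, a horizon of 2, and 3 folds, the test chunks are indices 4 to 5, 6 to 7, and 8 to 9, while the training sets grow from 4 to 8 observations. The test chunks never overlap, but each training set contains the previous ones.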

Note

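Splits are independent in the sense that each fold's test chunk doesn't overlap with the test chunks of the other folds. The training sets do overlap, since each fold's training data includes the earlier chunks.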

Evaluation episodes are distinct from folds. They are separate periods used to assess the final model's forecasting performance, not the folds used in cross-validation. So while K-fold cross-validation helps estimate the model's overall performance, it doesn't directly tell you how well the model forecasts specific future periods.

See also

A more in-depth explanation of the K-fold cross-test method is available in the reference documentation.

Next steps

Now that you know a little more about building and training a forecasting model, try it yourself in Tutorial | Time series forecasting (visual ML)!