Concept | Responsible AI

Today, when you hear the phrase Responsible AI, what comes to mind? Do you think of big concepts like ethics or trust? Maybe your thoughts go to the number of regulations that are rolling out globally. You might also think about the public AI failures that are constantly coming to light.

In the past decade, a growing number of investigations and reports have revealed dangerous flaws in AI pipelines, whether caused by bias, poor security practices, or general misuse of AI. At the same time, compliance with new regulations on building and deploying AI means that companies must manage their AI pipelines more effectively, more efficiently, and to a specified standard, especially at scale.

Knowing that unintended consequences of AI occur every day, how do we make sure the AI we’re building aligns with our intentions? To accomplish this, we’ll need to use Responsible AI.

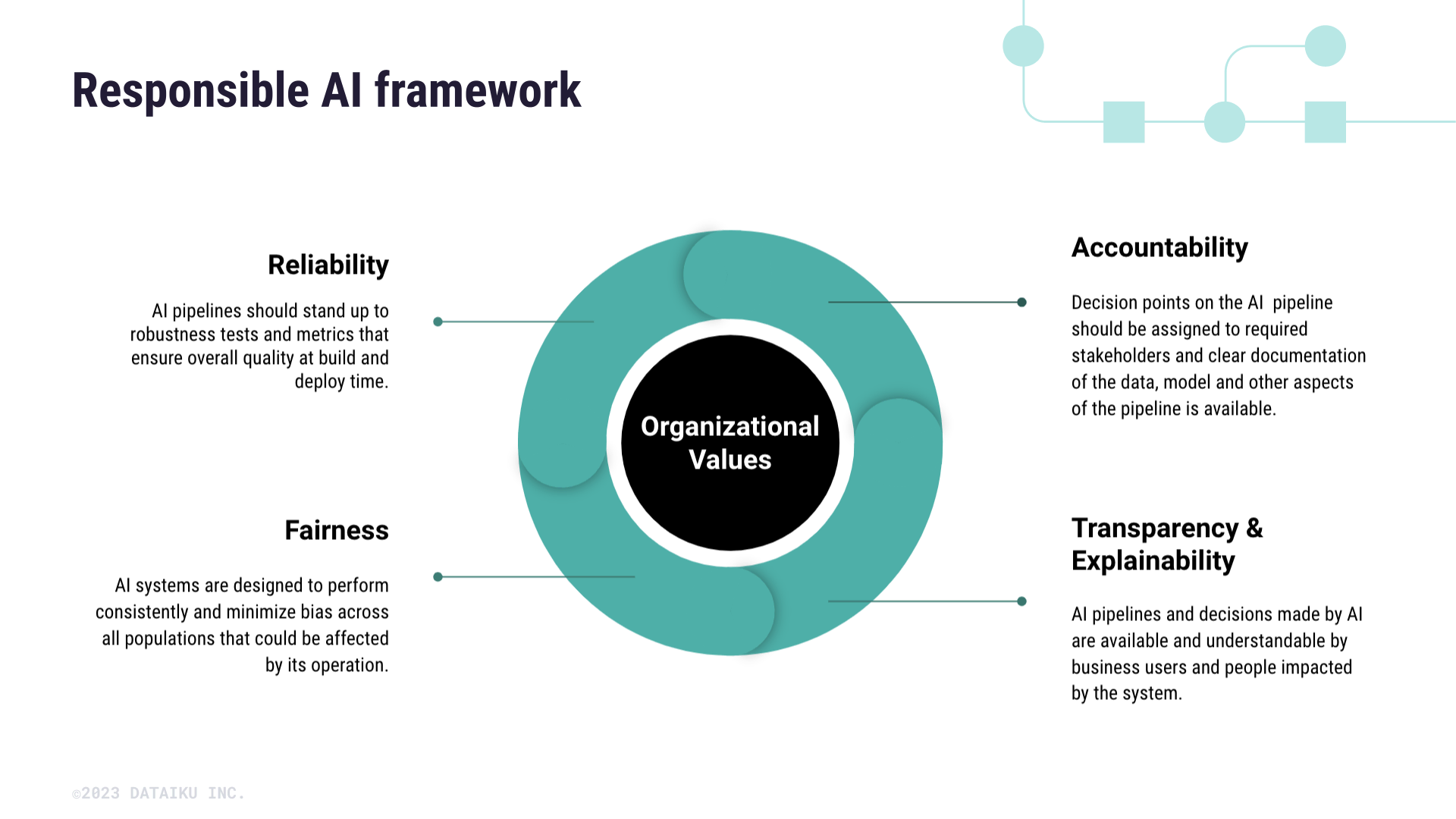

Responsible AI (RAI) is a framework that aligns AI with an organization’s values by proactively building systems that are reliable, accountable, fair, transparent, and explainable.

Why Responsible AI matters

AI technologies allow us to explore new possibilities and pursue increasingly ambitious goals. Yet we must be wary of the unintended outcomes that can arise along the way.

Some real-world outcomes of flawed AI include:

Assigning different credit limits for women and men with otherwise similar profiles.

Defining disparate medical risks based on race in healthcare settings.

Filtering out qualified candidates because of certain keywords during resume screening.

You might be asking yourself: Why does this matter to the average data practitioner? Big corporations often make mistakes, and it can seem that we, as individual AI builders or end users, have no influence over these problems. However, the moment you make decisions when assessing data or the outputs of a model is exactly when Responsible AI comes into play.

It’s easy to think of this as a simple top-down issue, where someone at the top has oversight and thus absolves everyone else of the responsibility to make sure AI is used in a fair and transparent way. In reality, each of our decisions as data users affects overall outcomes, and our individual actions have tangible effects on the world around us.

Preventing and managing unintended consequences of AI requires everyone involved in the pipeline to be aware of the potential risks and dangers of irresponsibly built AI.

Next steps

We’ll explore this topic further in Concept | Dangers of irresponsible AI.