Concept | Introduction to natural language processing (NLP)#

Watch the video

The goal of NLP#

Although human language is not a recent invention, humans have only recently developed sophisticated methods to process it. This has given rise to the field of computer science called natural language processing, or NLP.

Note

The goal of NLP is to automatically process, analyze, interpret, and generate speech and text.

As more data that depicts human language has become available, the field of natural language processing within the machine learning ecosystem has grown.

NLP use cases can be reduced to more than one type of machine learning task:

At times, it might be a simple classification task. Is this email spam or not? Is the customer’s review positive or negative?

It might resemble an unsupervised clustering problem. What are the main topics within this corpus of documents?

It could be a complex prediction task. What’s the next word the user is going to type?

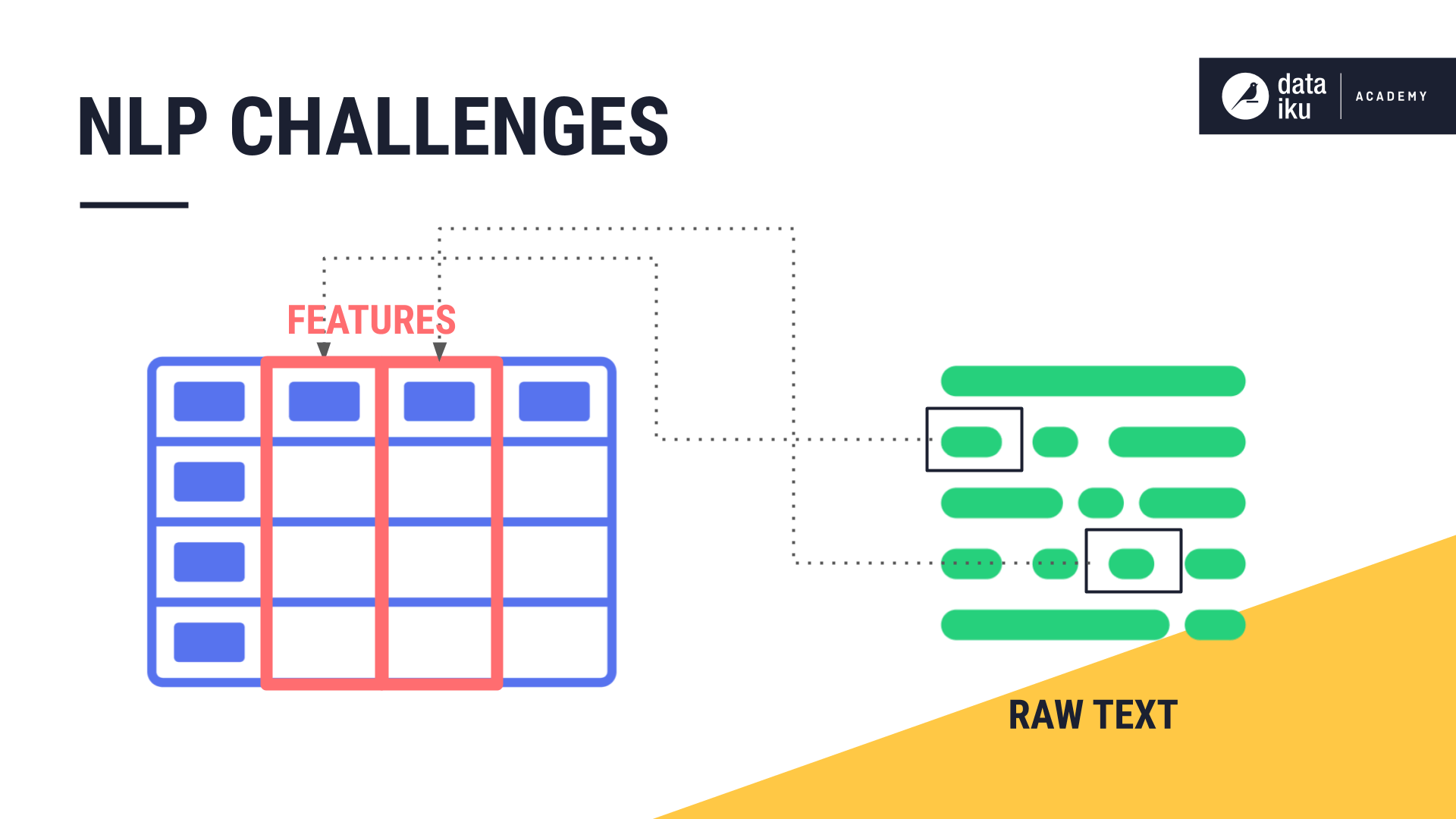

NLP is one of the most challenging domains of machine learning. Human language is extraordinarily complex. Consider that the same sequence of words can have the exact opposite meaning if spoken sarcastically. How can a computer understand this subtlety? Human language data doesn’t resemble the orderly rows and columns you might find in a time series, for example. Instead, human language is unstructured and messy.

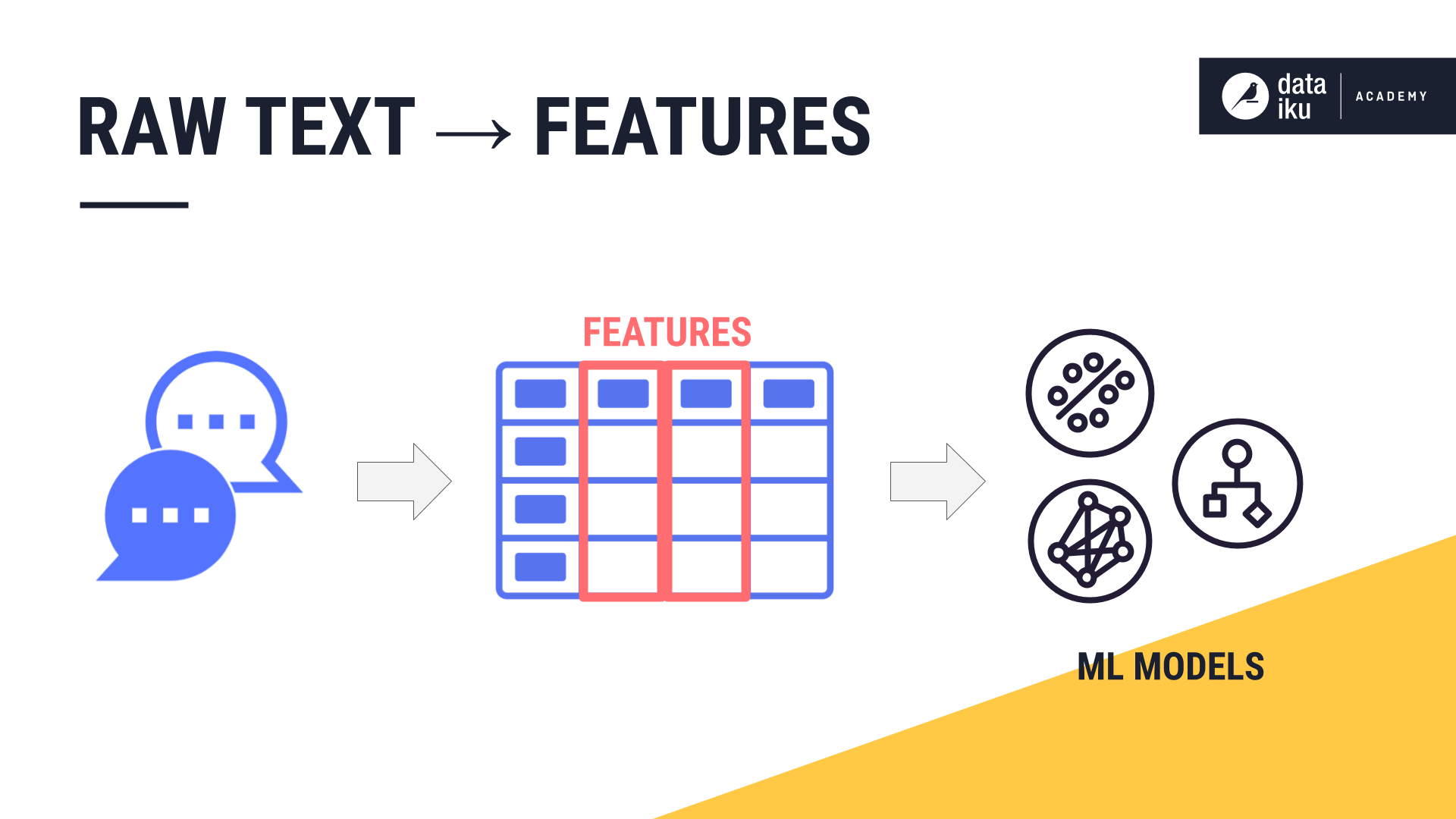

The features in the training data make machine learning possible. The challenge of NLP is turning raw text into features that a machine learning algorithm can process and search for patterns.

The bag of words approach#

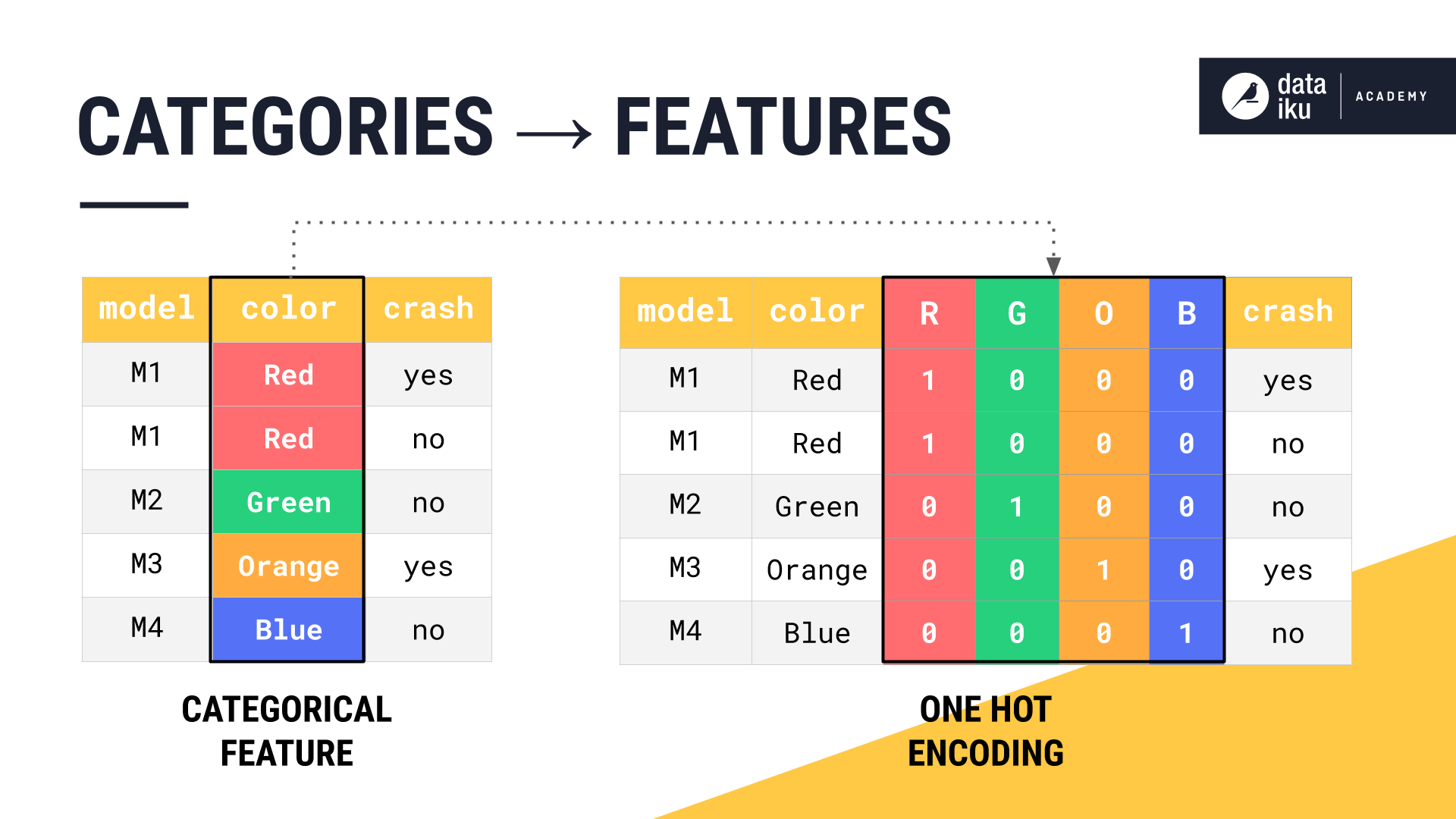

Recall how Dataiku handles categorical features, such as color. That is a similar problem of turning text into a numeric format that an algorithm can understand. Strategies like one-hot encoding allow for numerical representation of categorical classes. With natural language, the approach is similar.

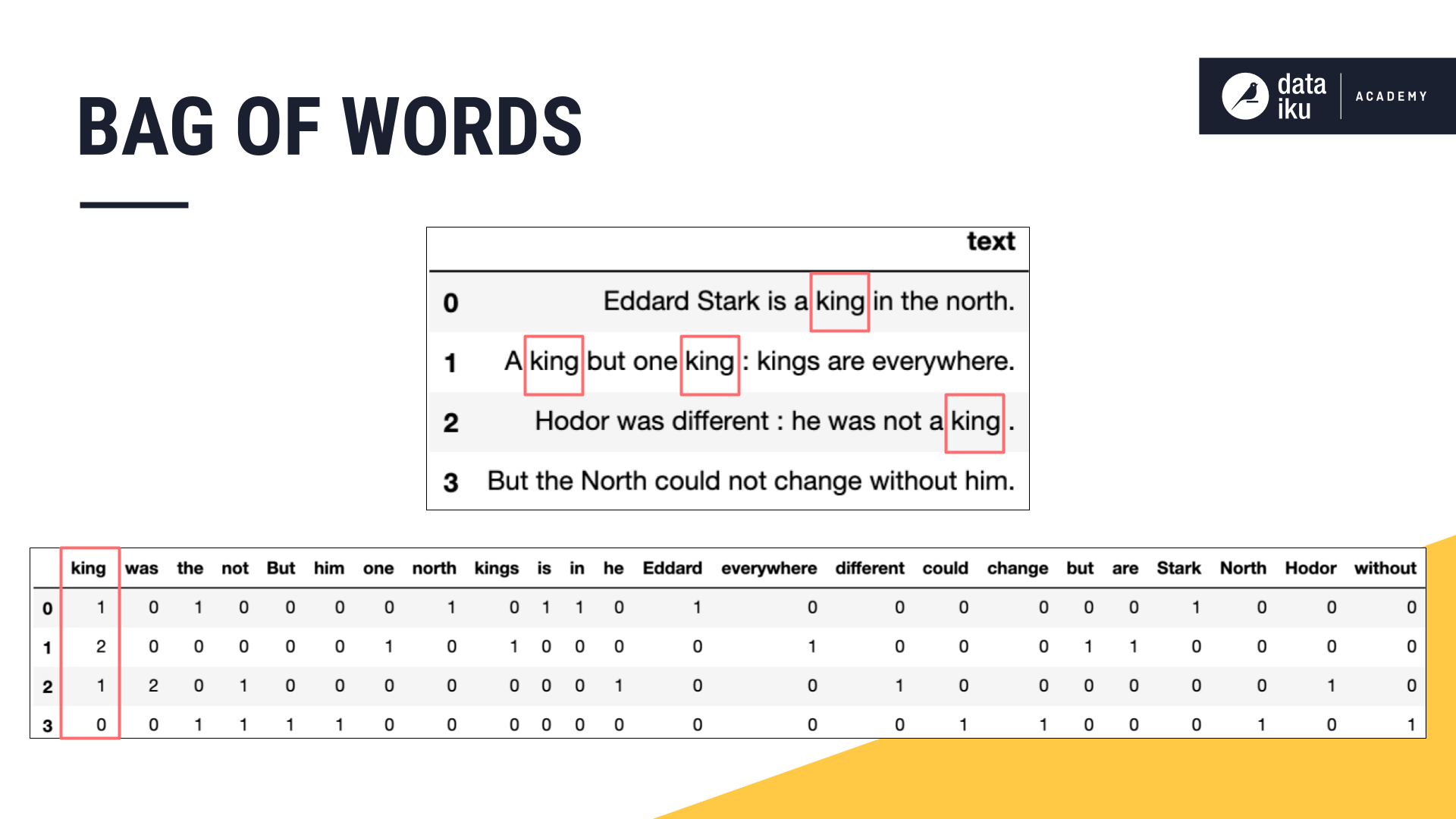

You can consider natural language as a collection of categorical features, where each word is a category of its own. Once that’s complete, you can start counting words in clever ways to build features. For some use cases, treating the text as a bag of words, and just counting the words in the bag, may be all that’s needed.

In this example, the word “king,” appears once in the first sentence, twice in the second, once in the third, and none in the fourth.

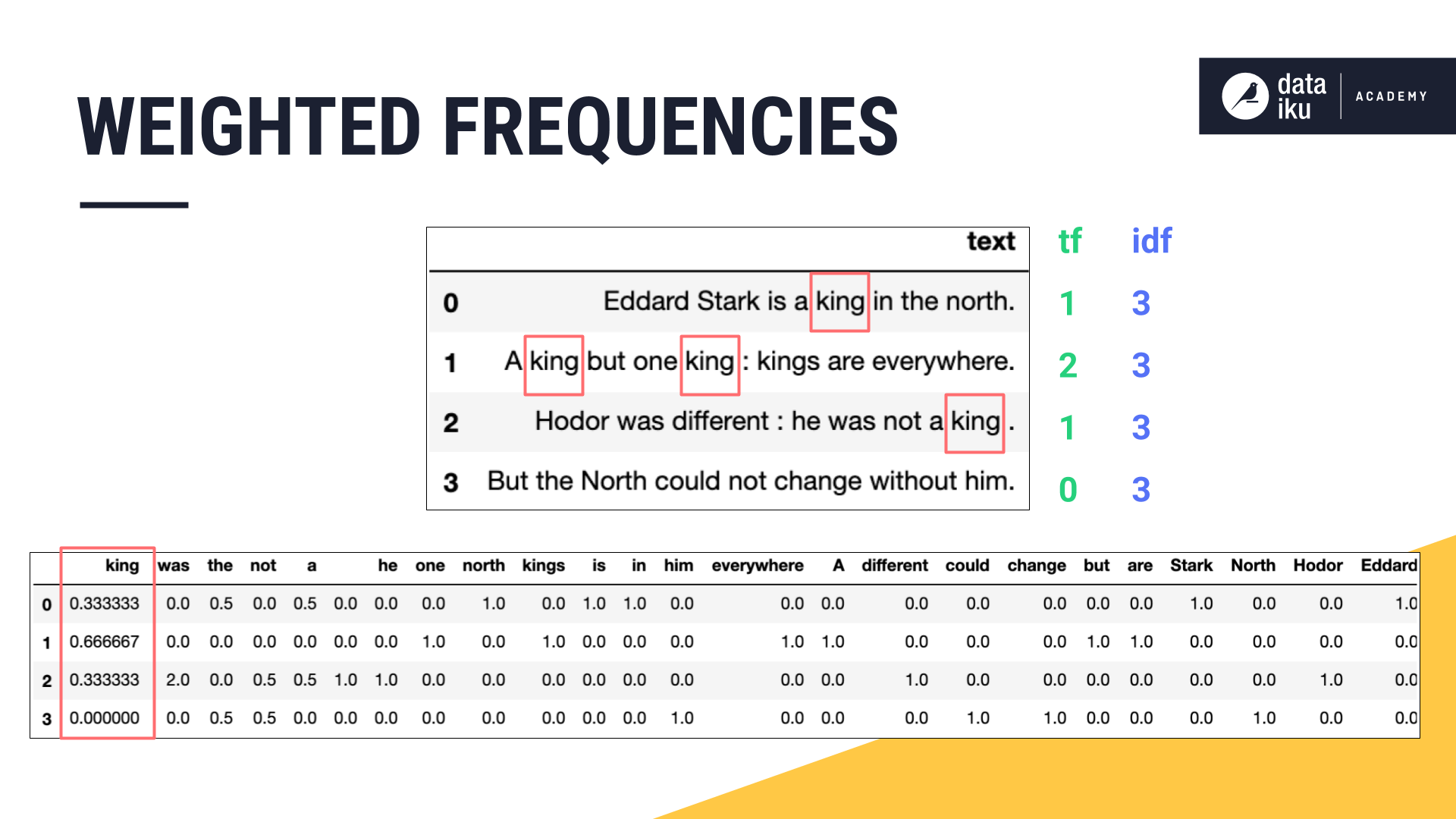

Other cases may see better performance if features represent not just the frequency of a word, but that frequency weighted by how common it is for that word to appear. This method is known as term frequency - inverse document frequency, or TF-IDF.

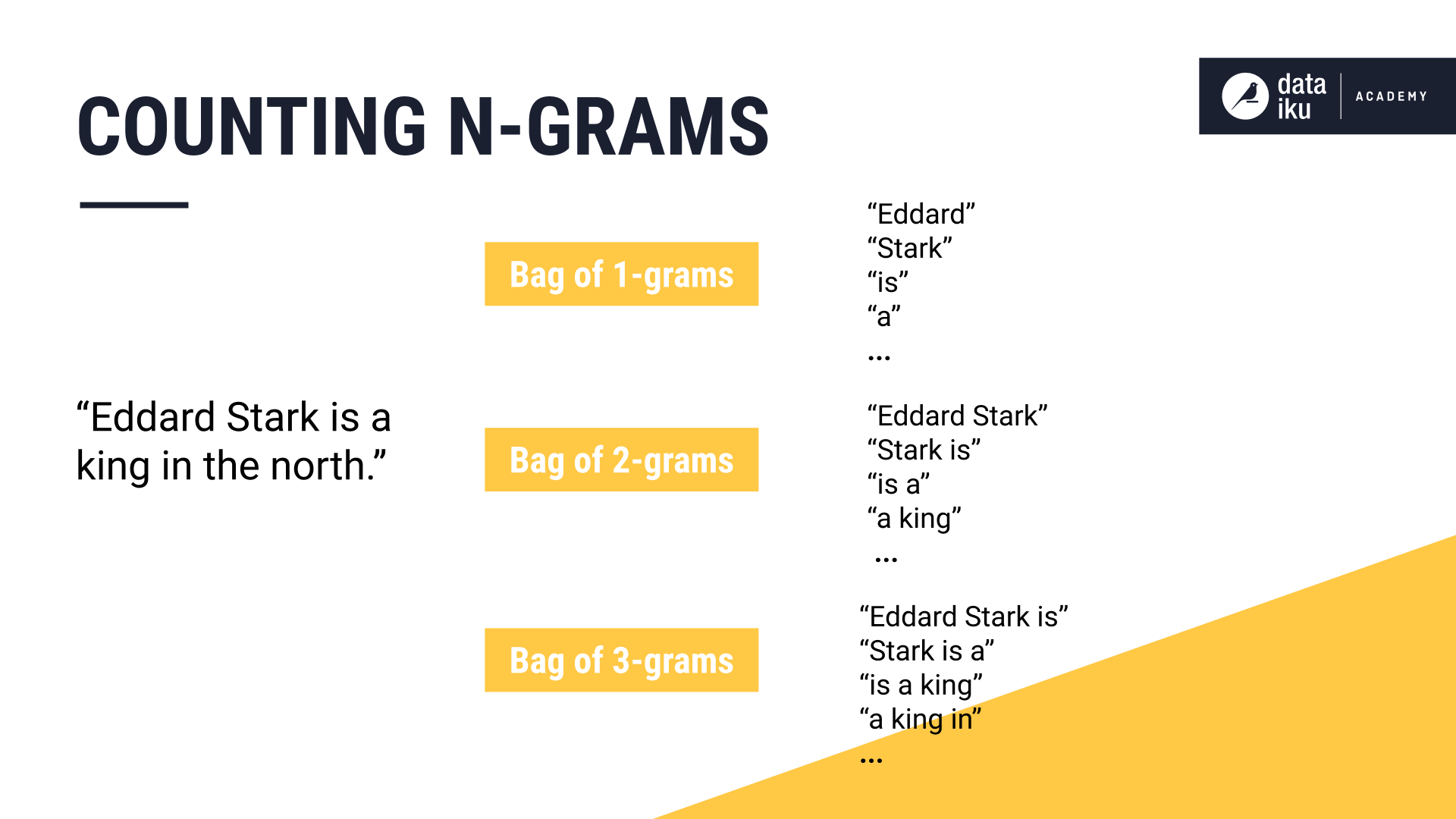

In other cases, you may want to not only count individual words, but also group every two, or even three words as a single unit.

With these techniques, the underlying goal is the same: develop ways to transform raw text into numeric features that can be understood by machine learning algorithms.

Next steps#

For each of these approaches, you can probably already anticipate problems you’ll face.

Forge ahead to see how to deal with them in Dataiku!