Concept | Algorithm and hyperparameter selection#

As part of the model design process, you’ll need to select different machine learning algorithms, understand model hyperparameters, and choose a search strategy for optimizing hyperparameters.

We’ll teach you how to do this by focusing on the Modeling section of visual ML design in Dataiku.

Algorithms#

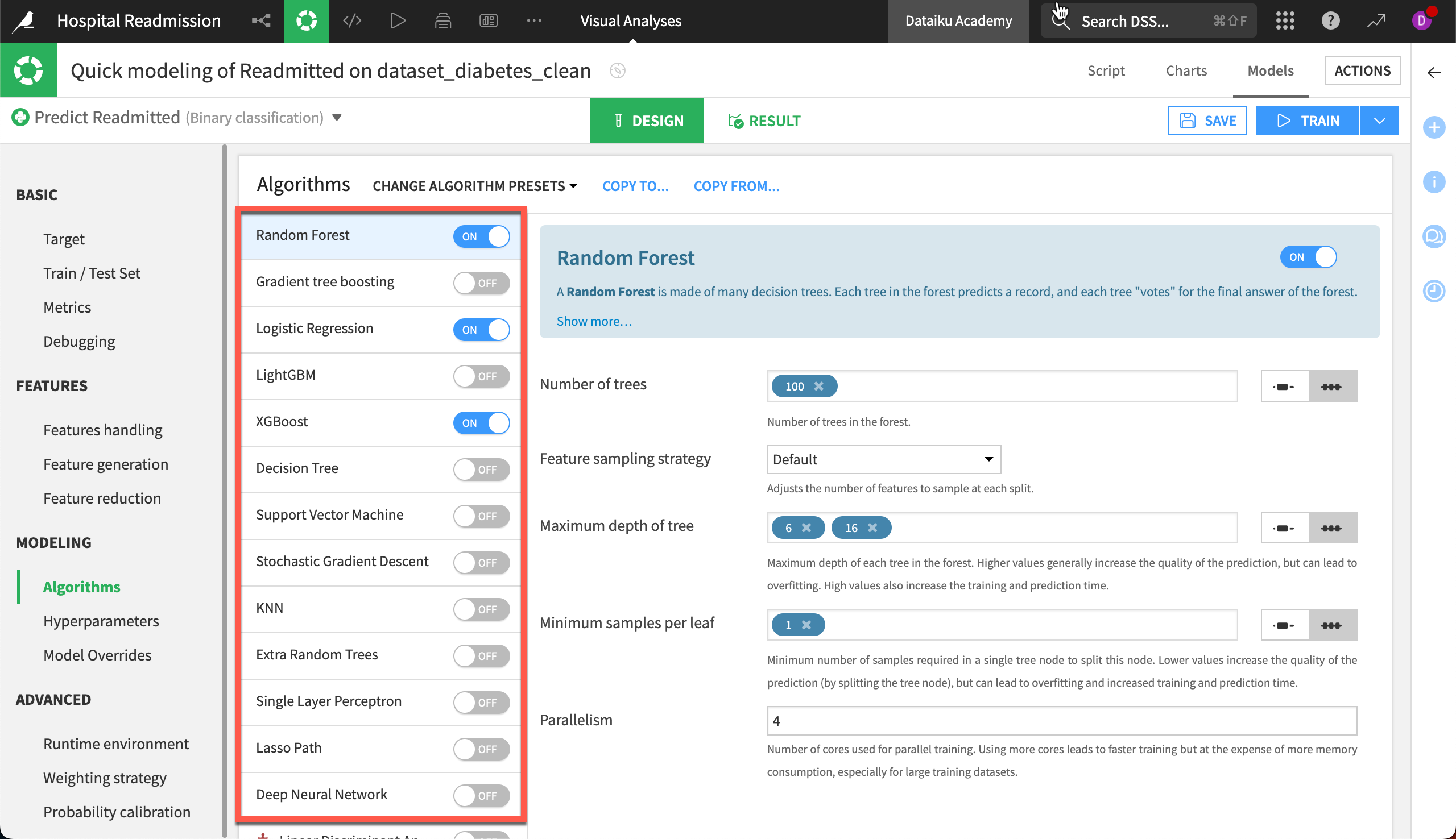

Dataiku natively supports several algorithms for training models. The available algorithms depend on the machine learning task: clustering or prediction (classification or regression).

We can also choose to use our own machine learning algorithm by adding a custom Python model.

Hyperparameters#

For each of the available algorithms, there is a set of hyperparameters you can optimize to improve model performance. Hyperparameters are values that you specify explicitly to control the machine learning process.

Hyperparameters worth examining include the maximum tree depth for tree-based models, the learning rate for models that learn and converge iteratively, and regularization parameters.

Finding the right hyperparameters for a use case often means balancing model performance, model complexity, and the ability to generalize well to new data. In machine learning, this balance is known as the bias-variance tradeoff. Note that the hyperparameters available also depend on the engine used to train the model.

Engines#

Visual machine learning in Dataiku supports several machine learning engines. Most algorithms are based on the scikit-learn or XGBoost libraries and use in-memory processing. In-memory training is usually preferred because it is faster and offers a wider range of options and algorithms.

Optimization strategies#

Now that you are familiar with hyperparameters, you’ll want to know how to optimize them.

This is no easy task. There can be millions of possible hyperparameter combinations, and any of them might improve your model. Therefore, after listing the hyperparameter values to explore, it’s important to use a search strategy that evaluates the combinations for you.
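To see how quickly the number of combinations grows, here is a minimal Python sketch. The search space and its hyperparameter names are hypothetical and not taken from Dataiku. The function simply multiplies the number of candidate values listed for each hyperparameter.

```python
from math import prod

# Hypothetical search space: each hyperparameter maps to the values to try.
# The names and values are illustrative assumptions, not Dataiku settings.
search_space = {
    "max_depth": [3, 5, 8, 12],
    "learning_rate": [0.01, 0.05, 0.1, 0.3],
    "n_estimators": [100, 200, 500],
    "l2_regularization": [0.0, 0.1, 1.0, 10.0],
}


def count_combinations(space):
    """Number of distinct combinations an exhaustive search would evaluate."""
    return prod(len(values) for values in space.values())


n_combinations = count_combinations(search_space)
print(n_combinations)  # 4 * 4 * 3 * 4 = 192
```

Each additional hyperparameter multiplies the total, so even short value lists add up quickly.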

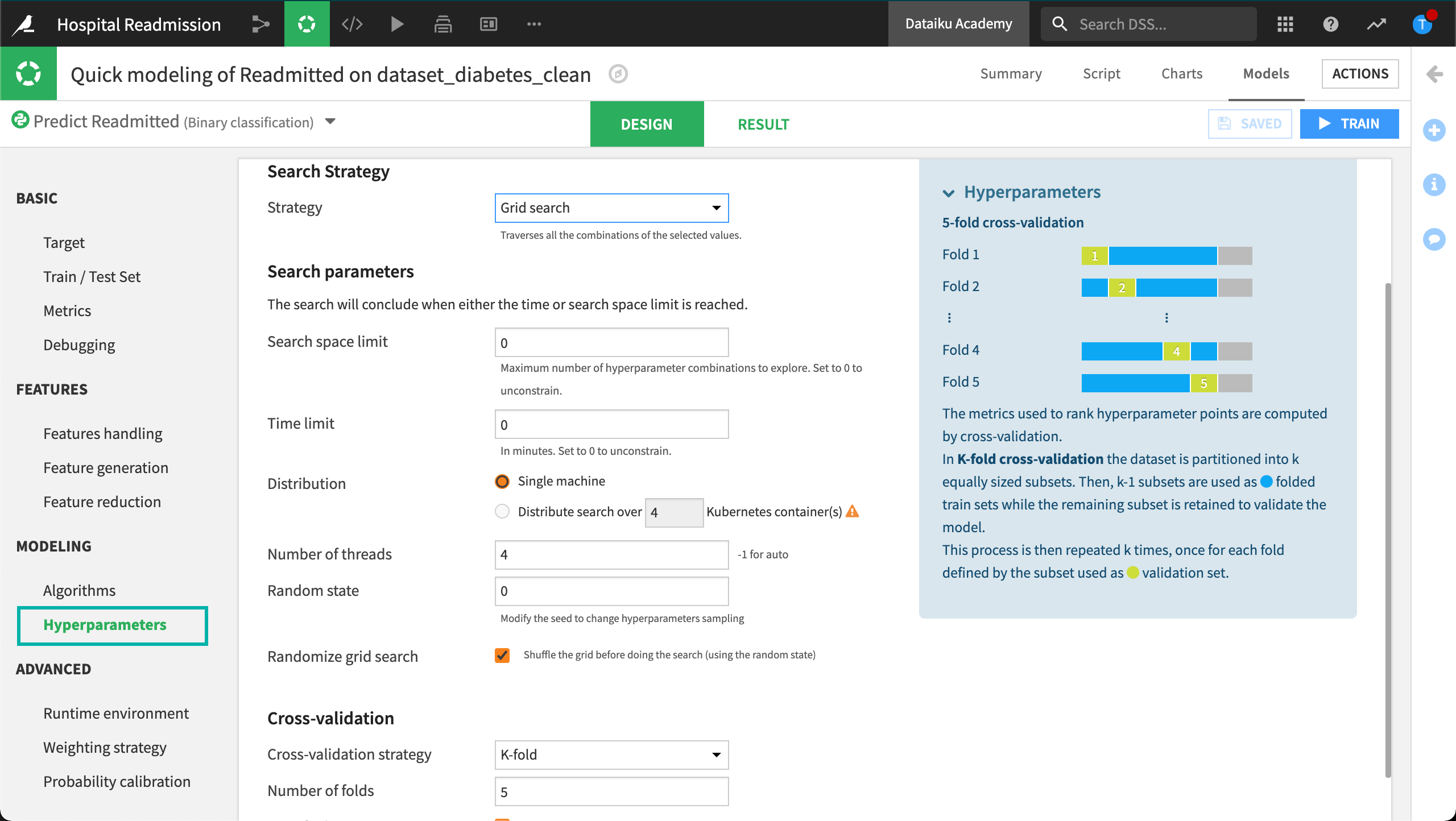

Dataiku provides various search strategies, such as:

Grid search, which evaluates every combination of the hyperparameter values provided.

Random search, which evaluates a random selection of combinations.

Bayesian search, which uses a probabilistic approach to converge toward a good combination.
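The difference between the first two strategies can be sketched in plain Python. This is an illustration under stated assumptions, not Dataiku's implementation. The search space, the `toy_score` function (a stand-in for a real validation metric), and the choice to have random search sample from the listed values are all hypothetical. Bayesian search is omitted because it needs a probabilistic model of the scores.

```python
import itertools
import random

# Hypothetical search space (illustrative values only).
search_space = {
    "max_depth": [3, 5, 8],
    "learning_rate": [0.01, 0.1, 0.3],
}


def toy_score(params):
    """Stand-in for a validation metric (higher is better); purely illustrative."""
    return -abs(params["max_depth"] - 5) - abs(params["learning_rate"] - 0.1)


def grid_candidates(space):
    """Grid search: every combination of the listed values."""
    names = list(space)
    return [dict(zip(names, values)) for values in itertools.product(*space.values())]


def random_candidates(space, n_iter, seed=0):
    """Random search (simplified): a random subset of the grid, without repeats."""
    rng = random.Random(seed)
    grid = grid_candidates(space)
    return rng.sample(grid, min(n_iter, len(grid)))


def best_of(candidates):
    """Return the candidate with the highest toy score."""
    return max(candidates, key=toy_score)


grid = grid_candidates(search_space)           # 3 * 3 = 9 candidates
best_grid = best_of(grid)
sampled = random_candidates(search_space, n_iter=4)
best_random = best_of(sampled)
```

Grid search is guaranteed to find the best listed combination, at the cost of evaluating all of them. Random search evaluates fewer candidates, so it may miss that combination.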

Note

To learn more about search strategies, visit Advanced Models Optimization — Search Strategies.

We can also select our preferred validation strategy for the search:

A simple train/test split.

K-fold cross-validation.

Our own custom cross-validation code.
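As a rough illustration of the first two options, the sketch below generates row indices for a simple split and for K-fold cross-validation. It is a simplified assumption of how such splits work in general. It does not shuffle or stratify the data, and the function names are hypothetical rather than part of any Dataiku API.

```python
def train_test_split_indices(n_samples, test_ratio=0.2):
    """Simple split: the last `test_ratio` share of rows is held out for testing."""
    n_test = max(1, round(n_samples * test_ratio))
    split = n_samples - n_test
    return list(range(split)), list(range(split, n_samples))


def k_fold_indices(n_samples, k):
    """K-fold: yield (train, test) index lists; each row is tested exactly once."""
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and n_samples")
    base, extra = divmod(n_samples, k)
    start = 0
    for fold in range(k):
        # The first `extra` folds take one additional row each.
        size = base + (1 if fold < extra else 0)
        test = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n_samples))
        yield train, test
        start += size


train_idx, test_idx = train_test_split_indices(10)
folds = list(k_fold_indices(10, k=3))
```

With K-fold, each hyperparameter combination is scored K times on different held-out folds. The resulting estimate is more stable than one from a single split, but it takes roughly K times the computation.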

For a more powerful search, check out how to distribute computations across containers.

View the tuning results#

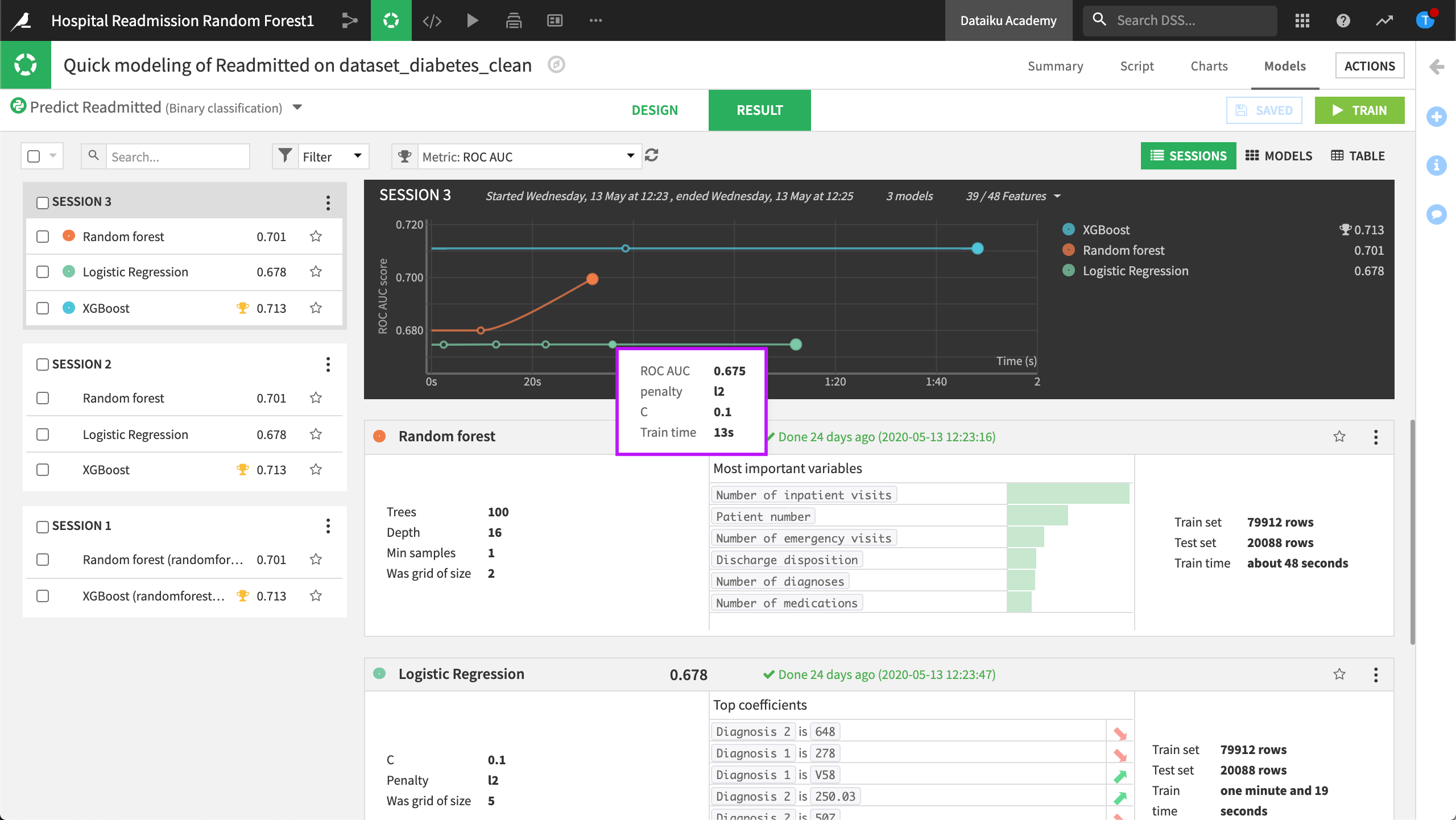

Once you are happy with the settings and have trained the model, you will land on the Results tab, where you can visualize the evolution of your hyperparameter search.

If you hover over any point in the graph, you’ll see the evolution of hyperparameter values that yielded an improvement.

At any time while Dataiku is executing the hyperparameter search, you can choose to interrupt the optimization. Dataiku will then finish what it’s evaluating, and will train and evaluate the model on the best hyperparameters found so far.

Later, you can resume the search, and Dataiku will continue the optimization using only the hyperparameter values that haven’t been tried.
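The interrupt-and-resume behavior can be pictured with a small sketch. It is a hypothetical illustration, not Dataiku's implementation. The `budget` argument stands in for a user interruption, and the search space and scoring function are made up. The key idea is that already-evaluated combinations are recorded and skipped when the search resumes.

```python
import itertools


def resumable_grid_search(space, score_fn, tried=None, budget=None):
    """Evaluate untried combinations, stopping after `budget` new evaluations.

    Returns the best combination found so far and the record of tried ones.
    """
    tried = dict(tried or {})
    names = list(space)
    evaluated = 0
    for values in itertools.product(*space.values()):
        if values in tried:
            continue  # already evaluated in an earlier run
        if budget is not None and evaluated >= budget:
            break  # simulated interruption
        tried[values] = score_fn(dict(zip(names, values)))
        evaluated += 1
    if not tried:
        return None, tried
    best_values = max(tried, key=tried.get)
    return dict(zip(names, best_values)), tried


# Hypothetical search space and scoring function (higher is better).
space = {"max_depth": [3, 5, 8], "learning_rate": [0.01, 0.1]}


def toy_score(params):
    return -abs(params["max_depth"] - 8) - abs(params["learning_rate"] - 0.1)


# First run is interrupted after two evaluations.
best_so_far, tried_first = resumable_grid_search(space, toy_score, budget=2)
# Resuming evaluates only the four remaining combinations.
best_final, tried_all = resumable_grid_search(space, toy_score, tried=tried_first)
```

After the simulated interruption, `best_so_far` holds the best of the two combinations evaluated. The resumed run then completes the grid without repeating them.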