Concept | Flow#

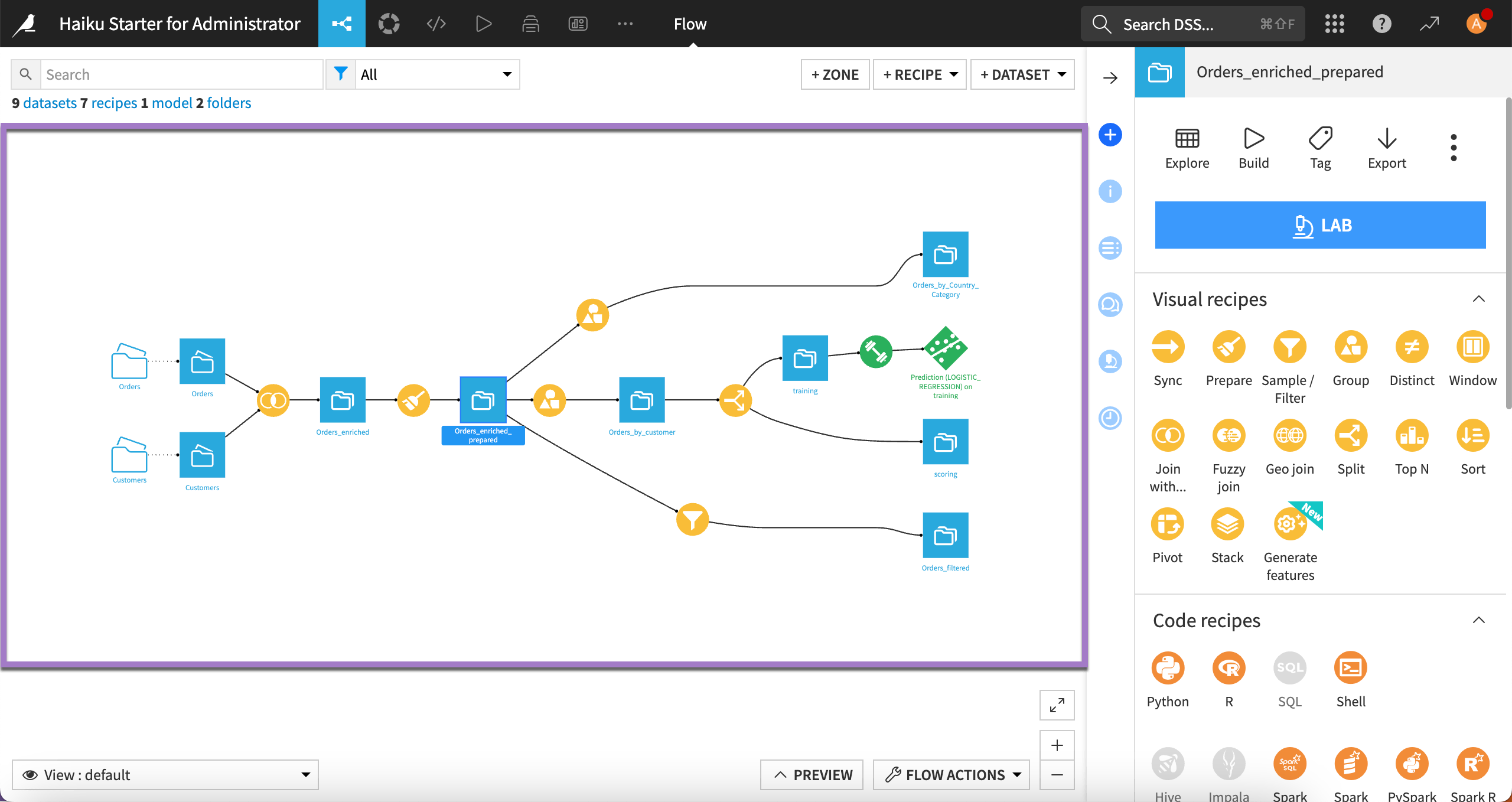

In Dataiku, the Flow is the visual representation of how data, recipes, models, and agents work together to move data through an analytical pipeline.

From the initial data to the final output, the Flow in Dataiku allows you to trace the dependencies among the different items and becomes a visual narrative of your data’s journey.

Improving the Flow readability#

A Flow can grow complex enough that it becomes hard to read.

In such cases, you can use Flow zones, tags, and filters to make it clearer.

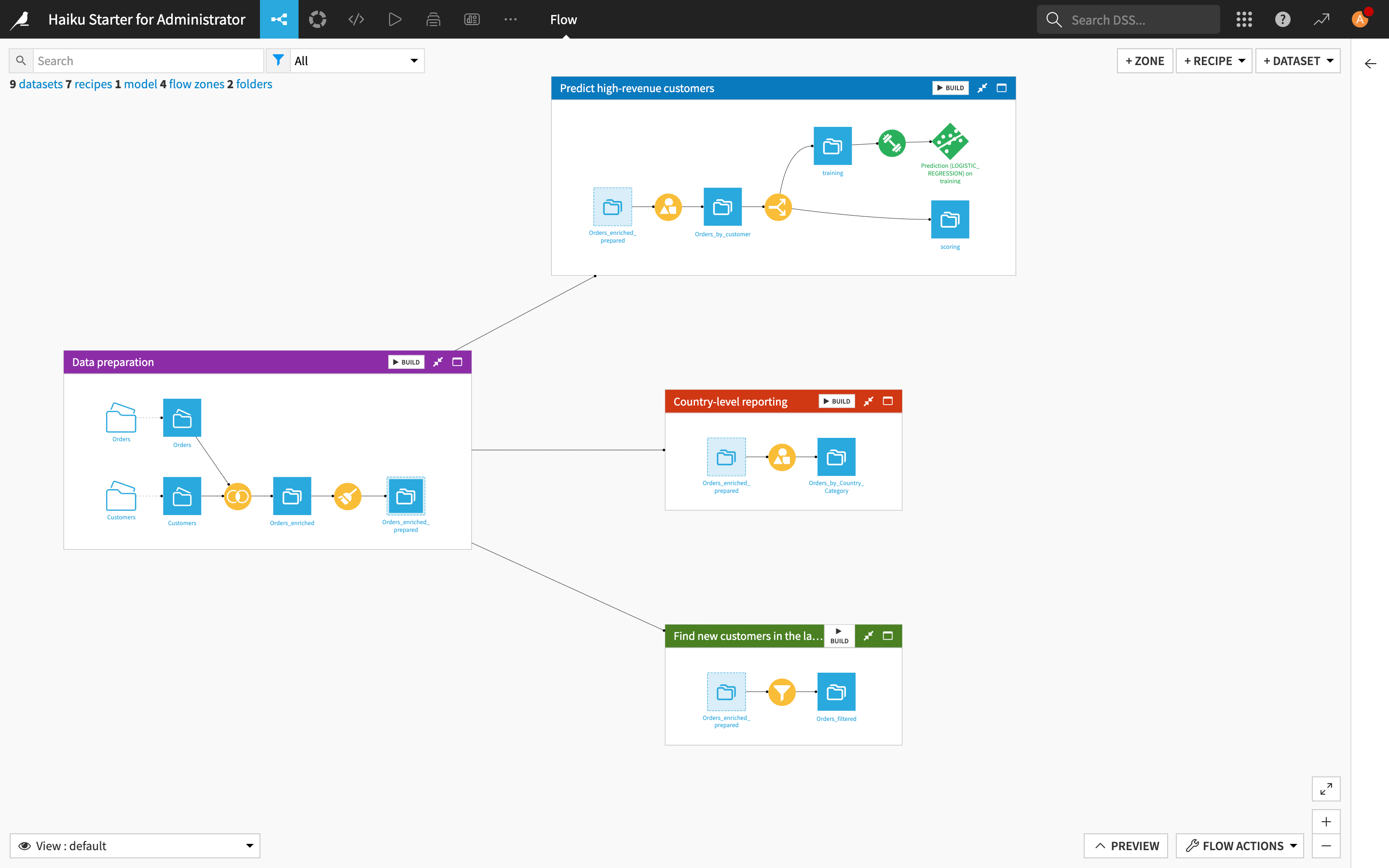

Using Flow zones#

By default, a Flow is displayed in a single zone.

At any time, you can click the + Add Item button at the top right of the Flow to add zones and organize the objects in the Flow.

See also

For hands-on experience with Flow zones, complete the Tutorial | Flow zones.

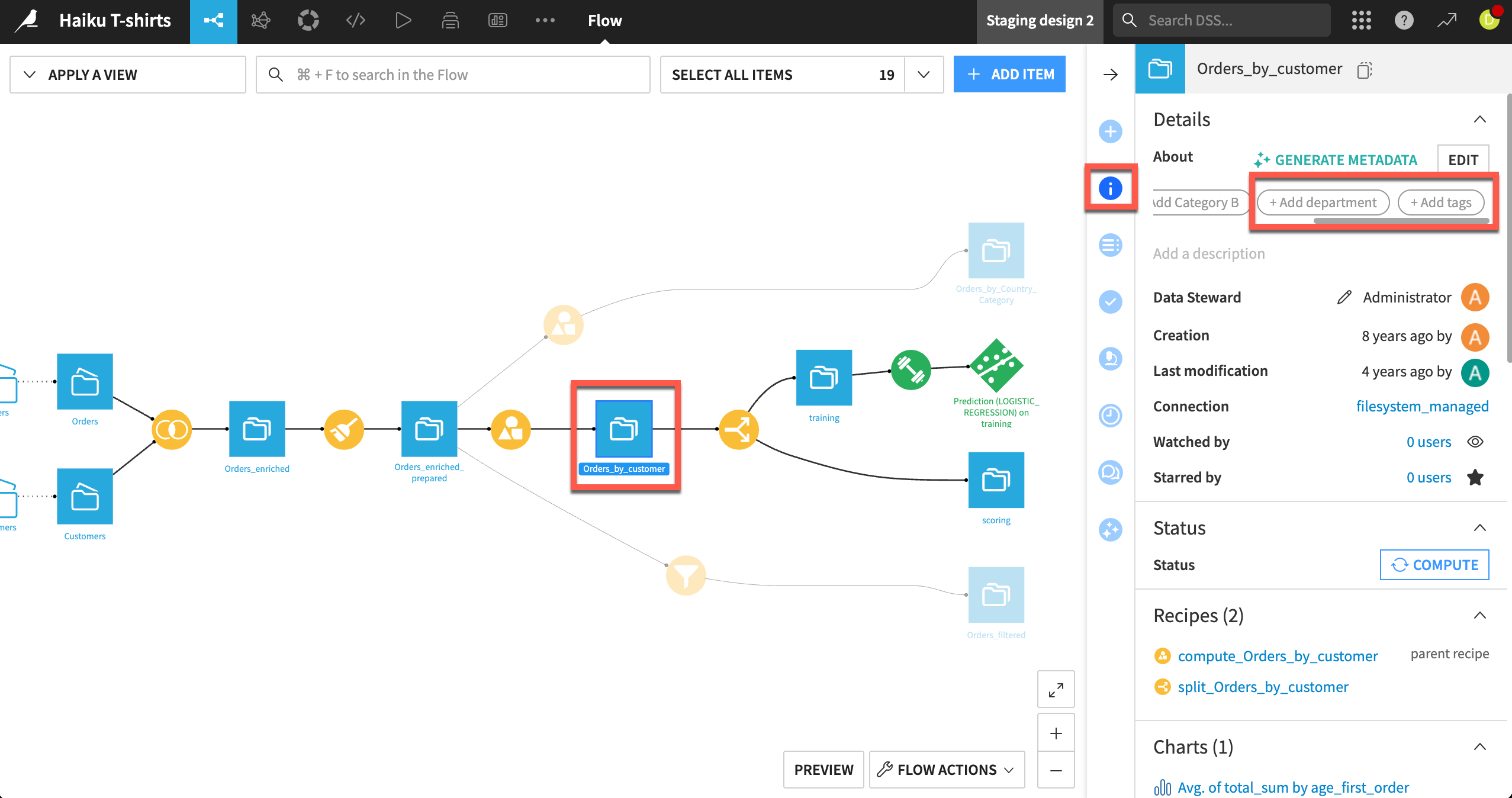

Tagging the Flow items#

When there are too many objects on the screen, you can add tags to the different items of the Flow, and then use these tags to select which parts of the Flow to view.

Tags can be based on attributes such as creator, purpose, status and so on.

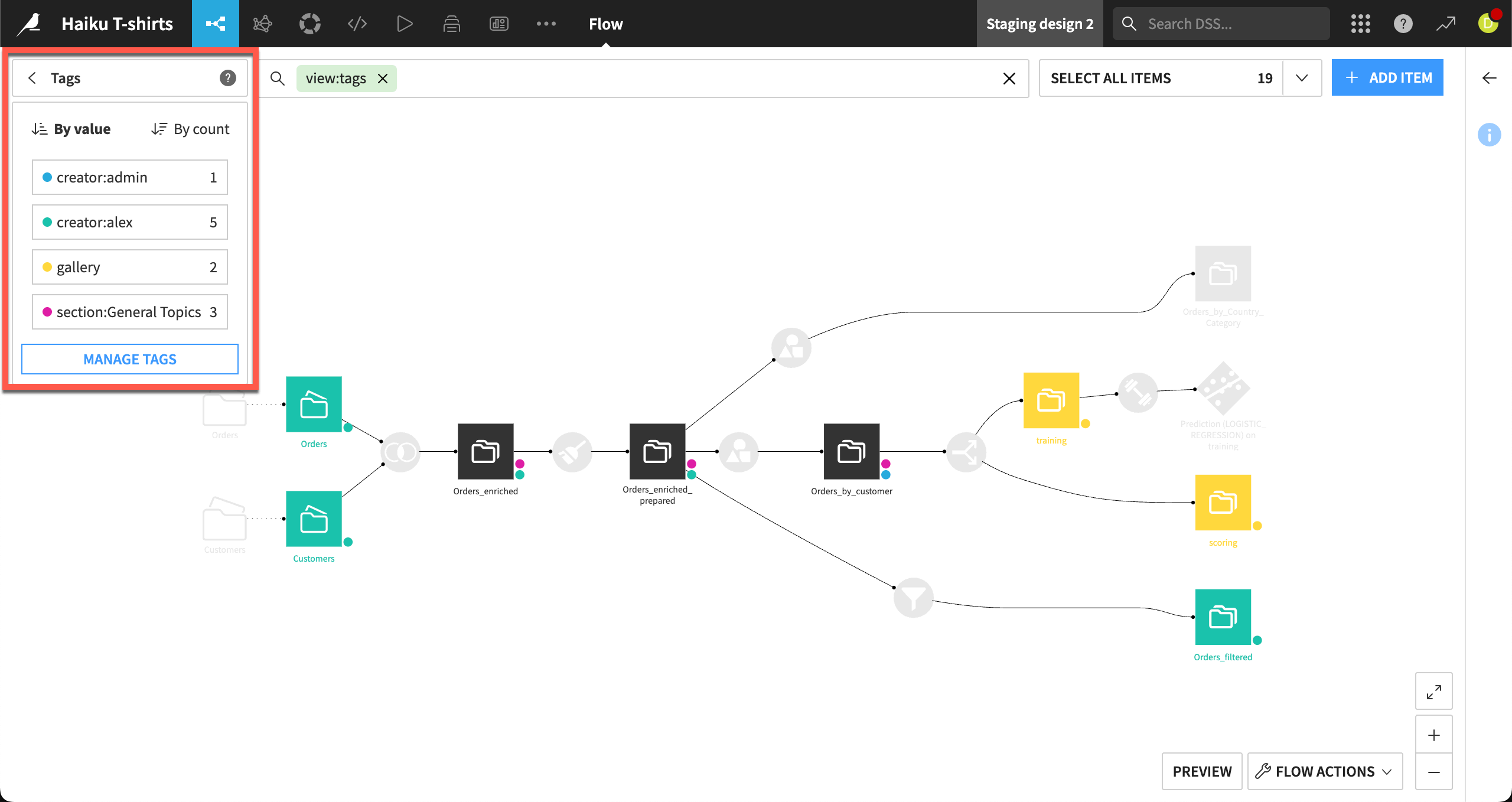

Filtering the Flow#

The Apply a View menu in the top left corner of the Flow lets you filter the Flow based on different elements such as Flow zones, tags, connections, recipe engines, the last modification date, etc.

For example, you can show or hide parts of the Flow by selecting or deselecting some tags to decrease the overall number of objects that appear on the screen.
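As a conceptual illustration of tag-based filtering, here is a minimal Python sketch. It does not use the Dataiku API: the item names, the tags, and the `visible_items` helper are all hypothetical, and the "keep an item if it carries at least one selected tag" rule is an assumption made for the example rather than a description of how Dataiku implements the filter.

```python
# Hypothetical Flow items mapped to their tags (illustrative names only).
FLOW_ITEMS = {
    "orders_raw": {"source"},
    "orders_prepared": {"cleaning", "wip"},
    "orders_joined": {"reporting"},
    "orders_scored": {"reporting", "wip"},
}


def visible_items(items, selected_tags):
    """Return the sorted names of items carrying at least one selected tag.

    Assumption: an item stays visible when any of its tags is selected.
    """
    selected = set(selected_tags)
    return sorted(name for name, tags in items.items() if tags & selected)


if __name__ == "__main__":
    # Selecting only the "reporting" tag hides the other two items.
    print(visible_items(FLOW_ITEMS, ["reporting"]))
```

Selecting fewer tags shrinks the list, which mirrors how deselecting tags reduces the number of objects shown on the screen.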

Building the Flow#

The Flow Actions dropdown at the bottom right of the Flow includes a Build all menu to build the entire Flow.

You can also right-click any item in the Flow and select the Build menu from there.

Keep in mind that the Flow in Dataiku is aware of the relationships and dependencies between datasets in your project. For example, after you change a dataset or recipe, you can choose to rebuild the dependent items upstream or downstream in your Flow so that they reflect the update.
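To make "upstream" and "downstream" concrete, the sketch below models a small Flow as a directed graph and lists the items on either side of a given dataset. It is a simplified illustration, not the Dataiku API: the dataset names, the `EDGES` list, and the `reachable` helper are hypothetical, and recipes are left out so that each edge goes straight from an input dataset to the dataset computed from it.

```python
from collections import defaultdict, deque

# Hypothetical toy Flow: each edge points from an input dataset
# to the dataset that is computed from it (recipes are omitted).
EDGES = [
    ("orders_raw", "orders_prepared"),
    ("customers_raw", "customers_prepared"),
    ("orders_prepared", "orders_joined"),
    ("customers_prepared", "orders_joined"),
    ("orders_joined", "orders_scored"),
]


def reachable(start, edges, direction):
    """Return the set of items upstream or downstream of `start` (excluding it)."""
    if direction not in ("upstream", "downstream"):
        raise ValueError("direction must be 'upstream' or 'downstream'")

    # Build the adjacency list in the direction of travel.
    neighbors = defaultdict(list)
    for src, dst in edges:
        if direction == "downstream":
            neighbors[src].append(dst)
        else:
            neighbors[dst].append(src)

    # Breadth-first traversal from the starting item.
    seen = set()
    queue = deque([start])
    while queue:
        current = queue.popleft()
        for nxt in neighbors[current]:
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return seen


if __name__ == "__main__":
    # Items that depend on orders_prepared.
    print(sorted(reachable("orders_prepared", EDGES, "downstream")))
    # Items that orders_joined depends on.
    print(sorted(reachable("orders_joined", EDGES, "upstream")))
```

In this toy model, a change to `orders_prepared` affects the items returned by the downstream traversal, while building `orders_joined` from scratch relies on every item returned by the upstream traversal.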

For more information, see the Concept | Build modes article.

Next steps#

In this lesson, you learned about the Flow in Dataiku. Continue getting to know the basics of Dataiku by learning about computation engines.

See also

For more information, see:

The Flow article in the reference documentation.

The Flow creation and management article in the Developer Guide.