Inspect a model’s results

See a screencast covering this section’s steps

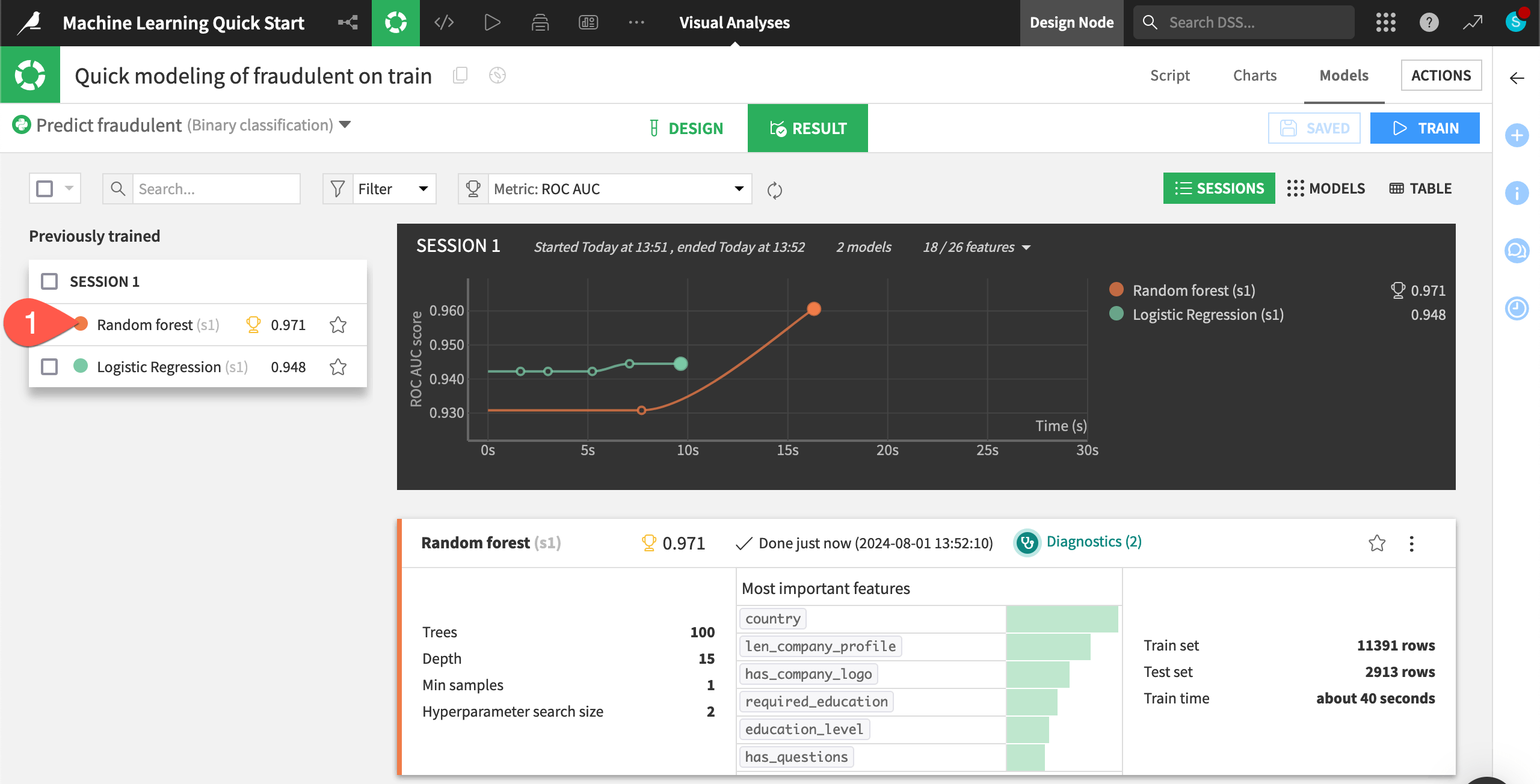

Once your models have finished training, review how well they did.

While in the Result tab, double-click the Random forest model in Session 1 on the left-hand side of the screen to open a detailed model report.

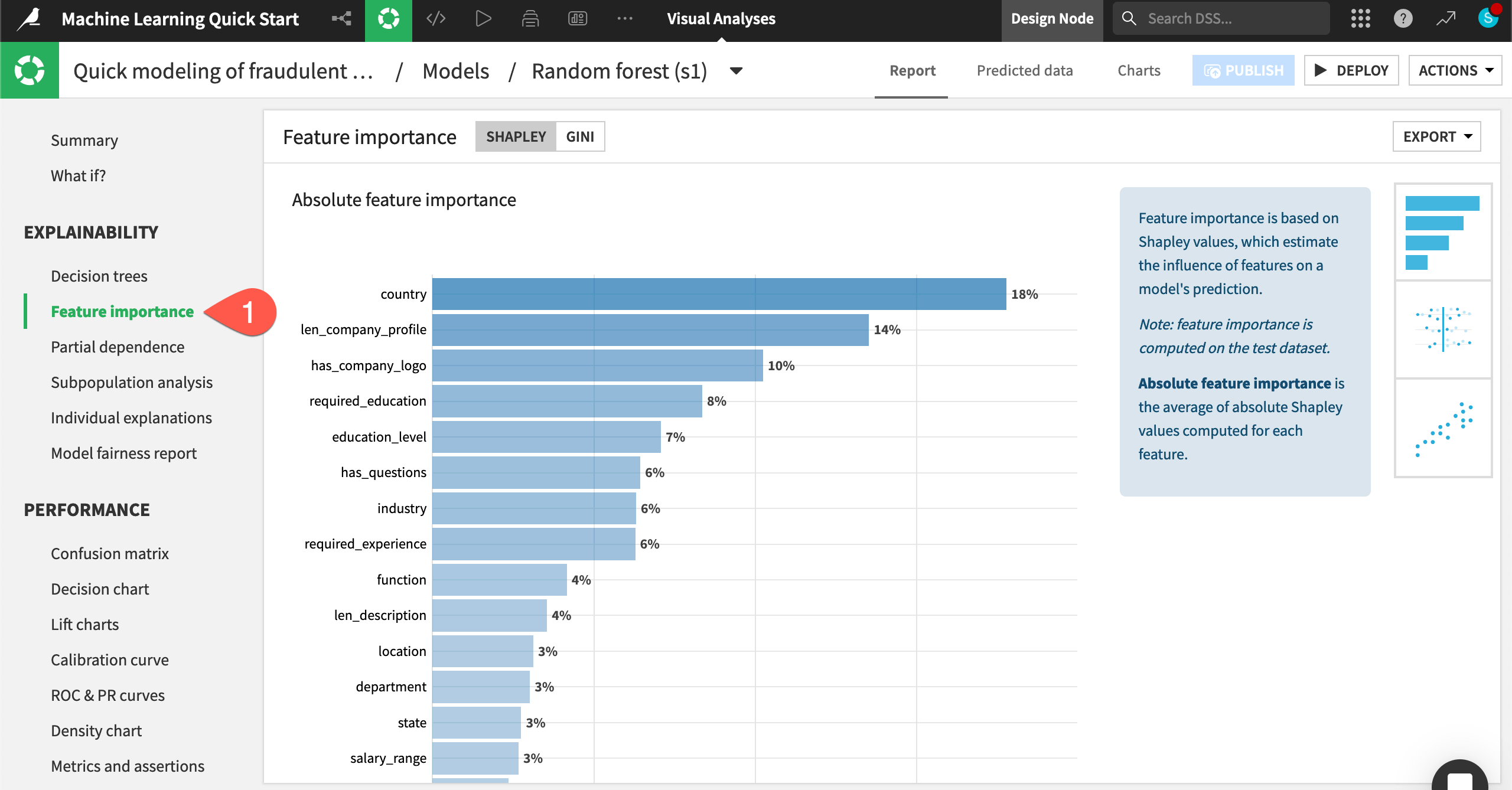

Check model explainability

One important aspect of a model is the ability to understand its predictions. The Explainability section of the report includes many tools for doing so.

In the Explainability section, click Feature importance to see an estimate of each feature’s influence on the predictions.

Note

Because algorithms like random forest involve some randomness, your results might differ slightly from those shown throughout this modeling exercise.

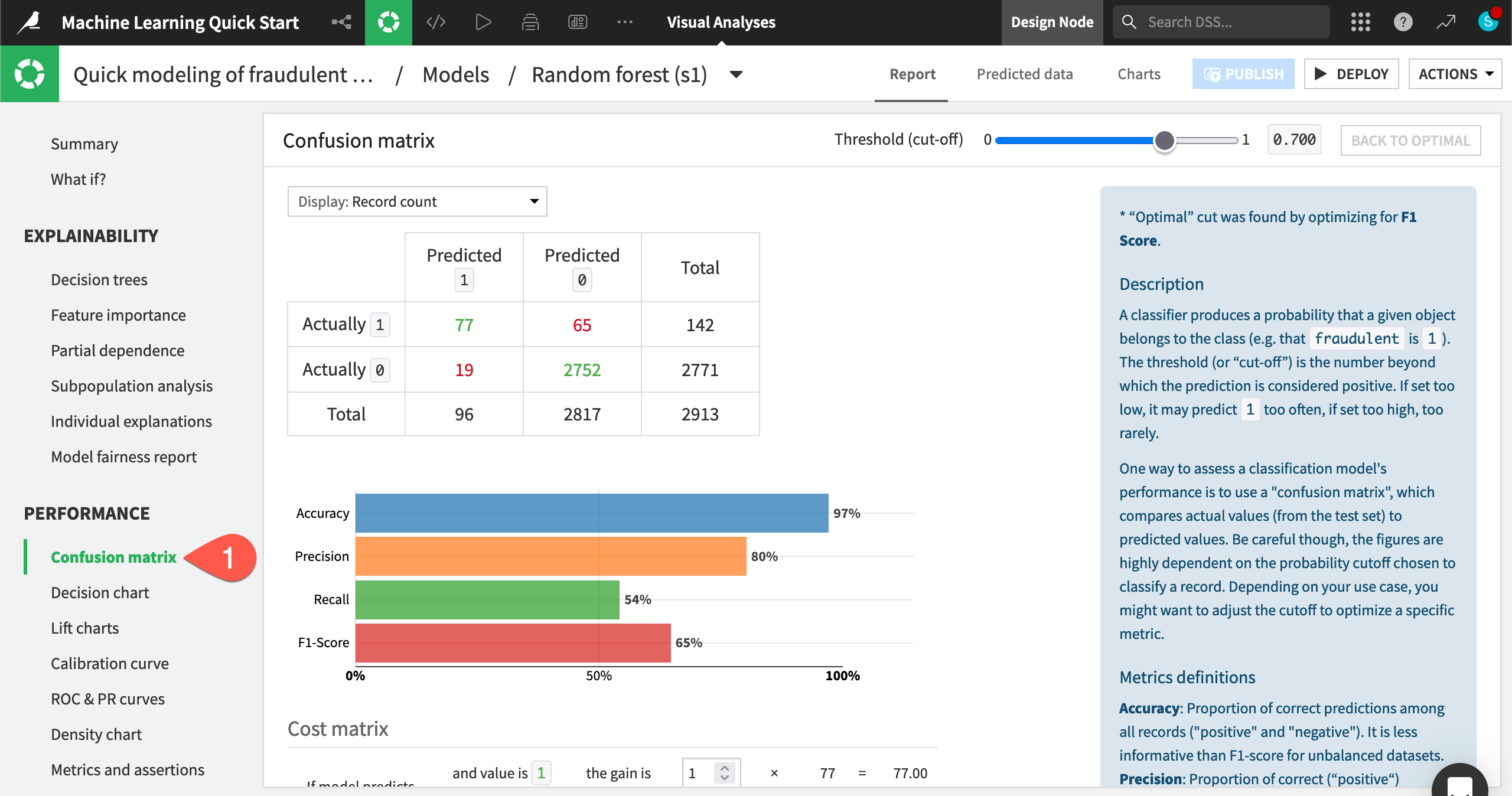
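To build some intuition for what a feature importance chart estimates, here is a minimal sketch of permutation importance: shuffle one feature at a time and measure how much accuracy drops. This is an illustration of the general idea only, not necessarily the method the report uses. The model rule, the feature names (`has_company_logo`, `has_questions`, `telecommuting`), and the data are all hypothetical.

```python
import random

def model(row):
    """Hypothetical stand-in model: flags a posting as fake (1)
    when it has neither a company logo nor screening questions."""
    return 1 if row["has_company_logo"] == 0 and row["has_questions"] == 0 else 0

def accuracy(rows, labels):
    """Share of rows where the model's prediction matches the label."""
    return sum(model(r) == y for r, y in zip(rows, labels)) / len(rows)

def permutation_importance(rows, labels, feature, n_repeats=20, seed=0):
    """Average accuracy drop after shuffling one feature's values across rows."""
    rng = random.Random(seed)  # fixed seed so repeated runs give the same estimate
    baseline = accuracy(rows, labels)
    drops = []
    for _ in range(n_repeats):
        values = [r[feature] for r in rows]
        rng.shuffle(values)
        shuffled = [{**r, feature: v} for r, v in zip(rows, values)]
        drops.append(baseline - accuracy(shuffled, labels))
    return sum(drops) / n_repeats

# Hypothetical toy data; labels are set to the model's own predictions,
# so the baseline accuracy is 1.0 and shuffling can only lower it.
rows = [
    {"has_company_logo": 0, "has_questions": 0, "telecommuting": 1},
    {"has_company_logo": 1, "has_questions": 0, "telecommuting": 0},
    {"has_company_logo": 0, "has_questions": 1, "telecommuting": 1},
    {"has_company_logo": 1, "has_questions": 1, "telecommuting": 0},
    {"has_company_logo": 0, "has_questions": 0, "telecommuting": 0},
    {"has_company_logo": 1, "has_questions": 1, "telecommuting": 1},
]
labels = [model(r) for r in rows]

importance = {f: permutation_importance(rows, labels, f) for f in rows[0]}
for name, score in sorted(importance.items(), key=lambda kv: -kv[1]):
    print(f"{name}: {score:.3f}")
```

Because the stand-in model never reads `telecommuting`, shuffling that feature cannot change any prediction, so its importance is exactly zero. The fixed `seed` also shows why results stay repeatable only when the source of randomness is pinned down.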

Check model performance

You’ll also want to dive deeper into a model’s performance, starting with basic classification metrics such as accuracy, precision, and recall.

In the Performance section, click Confusion matrix to check how well the model classified real and fake job postings.

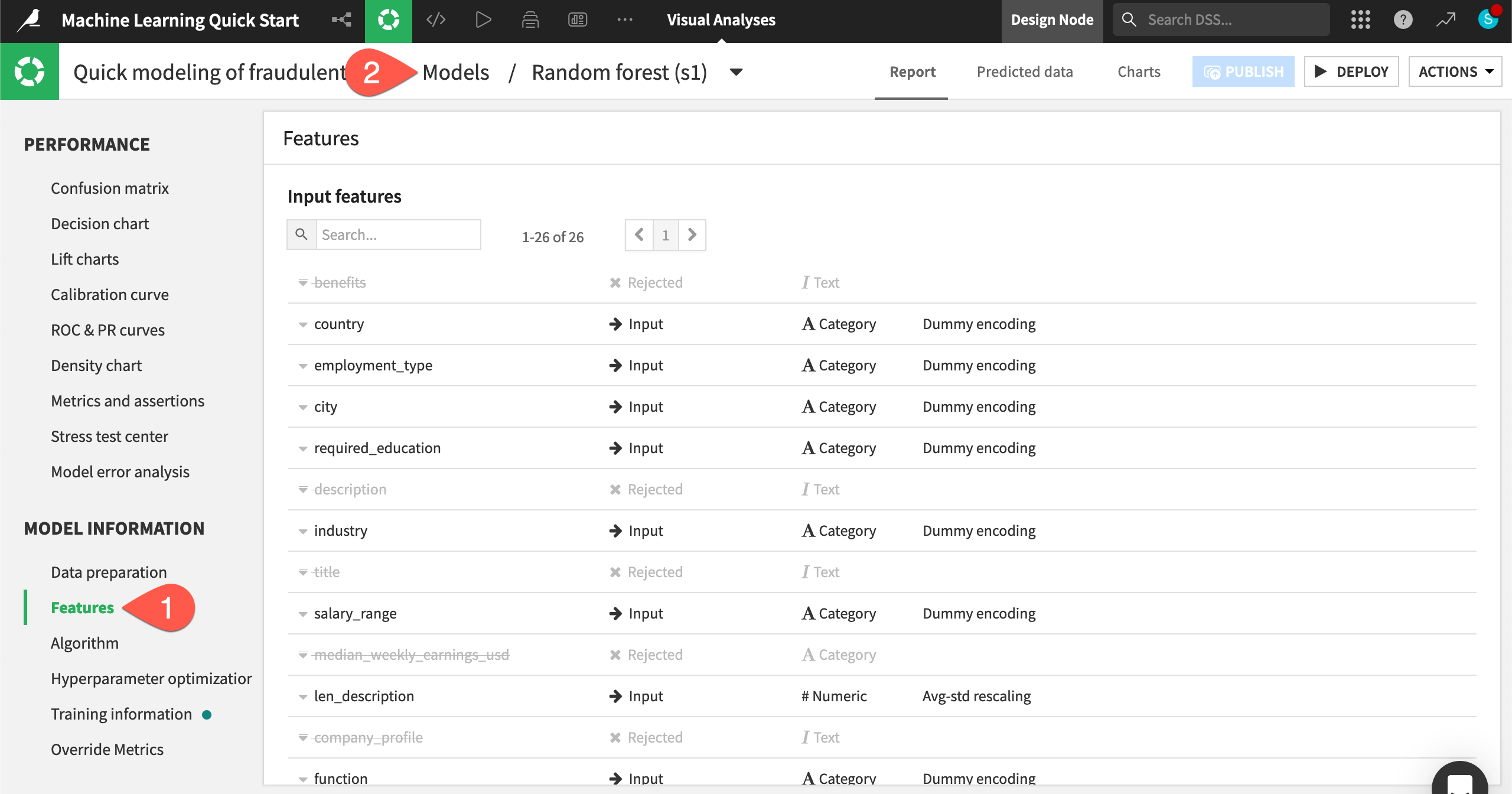
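As a rough illustration of how the numbers in a confusion matrix relate to accuracy, precision, and recall, the sketch below computes all three from a small set of made-up labels. The label encoding (1 for a fake posting, 0 for a real one) and the data are assumptions for the example, not values from this project.

```python
from collections import Counter

def confusion_counts(y_true, y_pred, positive=1):
    """Count true/false positives and negatives for a binary problem."""
    counts = Counter()
    for truth, pred in zip(y_true, y_pred):
        if pred == positive:
            counts["tp" if truth == positive else "fp"] += 1
        else:
            counts["fn" if truth == positive else "tn"] += 1
    return counts

def basic_metrics(counts):
    """Derive accuracy, precision, and recall from the four counts."""
    tp, fp, fn, tn = (counts[k] for k in ("tp", "fp", "fn", "tn"))
    total = tp + fp + fn + tn
    return {
        "accuracy": (tp + tn) / total if total else 0.0,
        "precision": tp / (tp + fp) if (tp + fp) else 0.0,
        "recall": tp / (tp + fn) if (tp + fn) else 0.0,
    }

# Hypothetical labels: 1 = fake posting, 0 = real posting
y_true = [1, 0, 0, 1, 0, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 0, 0, 1, 1, 0, 1, 0]

counts = confusion_counts(y_true, y_pred)
metrics = basic_metrics(counts)
print(dict(counts))  # 3 true positives, 1 false positive, 1 false negative, 5 true negatives
print(metrics)       # accuracy 0.8, precision 0.75, recall 0.75
```

In this toy case, precision answers "of the postings flagged as fake, how many really were?" and recall answers "of the truly fake postings, how many were caught?".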

Check model information

Alongside the results, you’ll also want to know exactly how the model was trained.

In the Model Information section, click Features to check which features the model rejected (such as the text features), which it included, and how it handled them.

When finished, click Models to return to the Result home for the ML task.