Score unlabeled data#

Let’s use this random forest model to generate predictions on the unlabelled scoring dataset. Remember, the goal is to assign the probability of a car’s failure.

From the Result tab of the visual analysis, click on the random forest model (the best performing model)—if not already open.

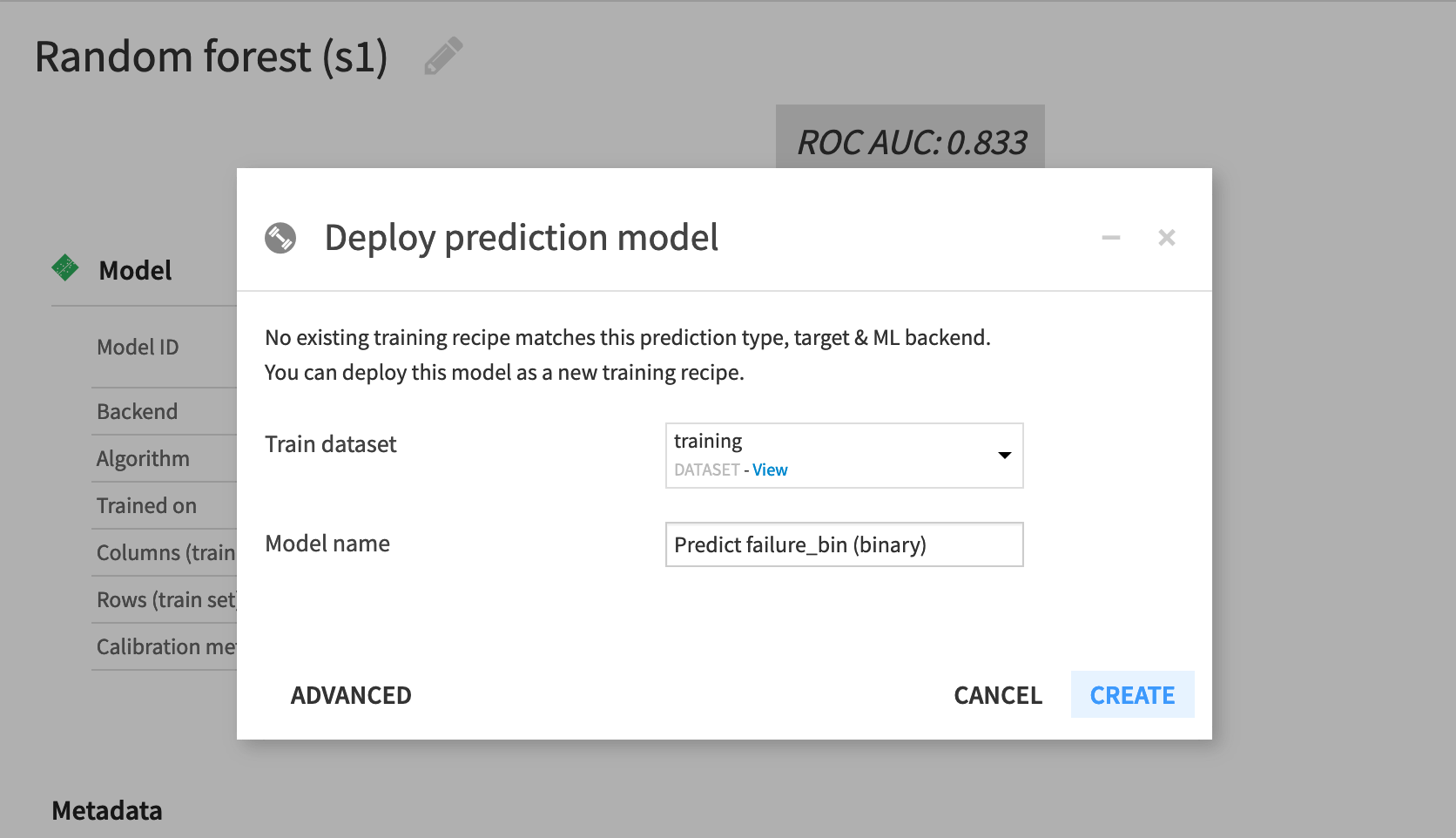

Click the Deploy button near the top right.

training dataset should already be selected as the Train dataset in the “Deploy prediction model” window.

Accept the default model name, and click Create.

Now back in the Flow, you can see the training recipe and model object. Let’s now use the model to score the unlabelled data!

In the Flow, select the model we just created. Initiate a Score recipe from the right panel.

Select scoring as the input dataset.

Predict failure_bin (binary) should already be the prediction model.

Name the output dataset

scored.Create and run the recipe with the default settings.

Note

There are multiple ways to score models in Dataiku. For example, in the Flow, if selecting a model first, you can use a Score recipe (if it’s a prediction model) or an Apply model (if it’s a cluster model) on a dataset of your choice. If selecting a dataset first, you can use a Predict or Cluster recipe with an appropriate model of your choice.

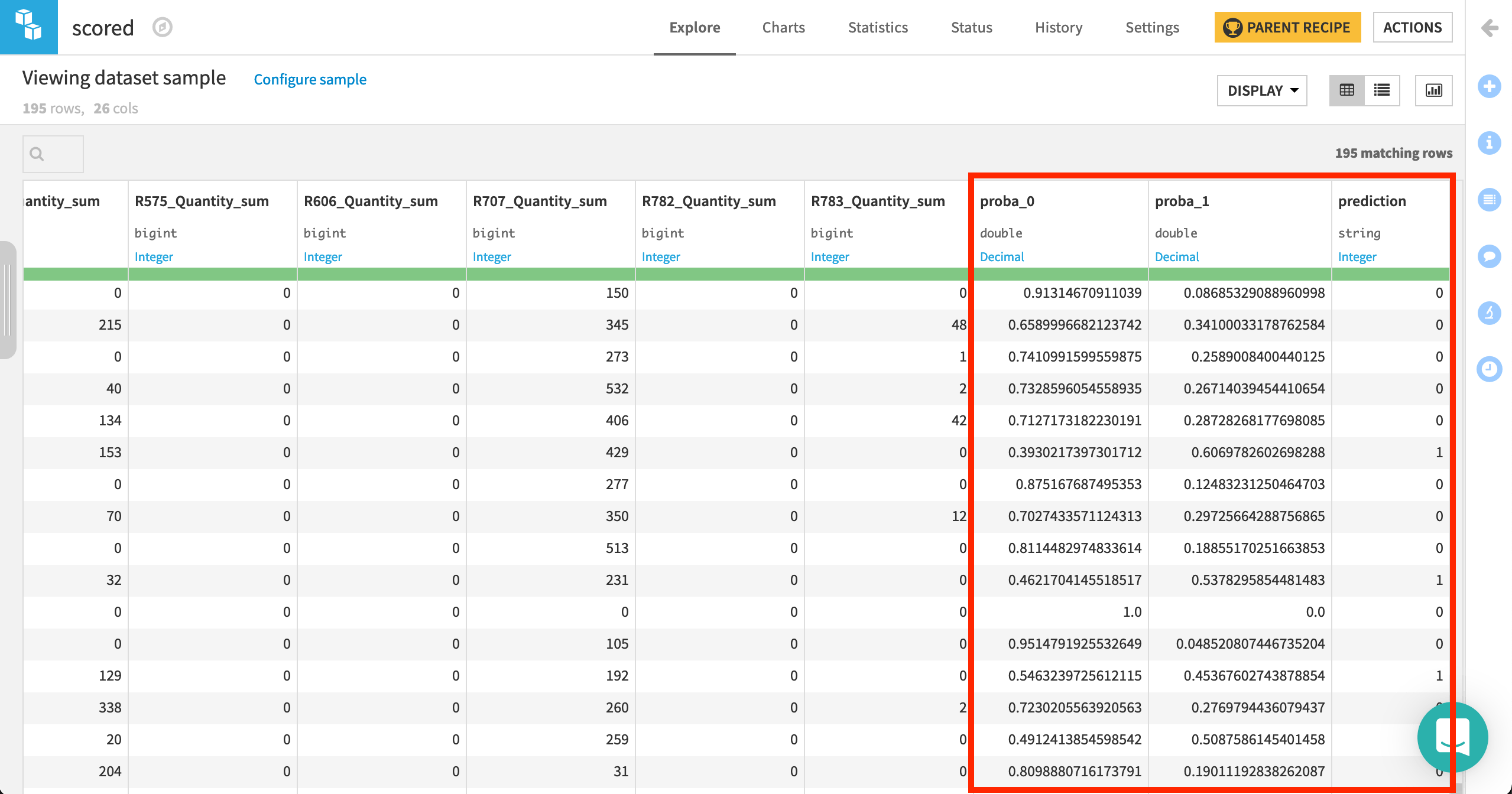

Compare the schema of the scoring and scored datasets. (It’s easiest to do this from the Schema () tab of the right panel in the Flow).

Then, the prepared scoring dataset is passed to the model, where three new columns are added:

proba_1: probability of failure

proba_0: probability of non-failure (1 - proba_1)

prediction: model prediction of failure or not (based on probability threshold)