Concept | Guardrails against risks from Generative AI and LLMs#

When using Large Language Models (LLMs) to benefit from the power of Generative AI (GenAI) for various applications, it’s crucial to understand the potential risks and implement robust guardrails to ensure these technologies are used safely, reliably, and ethically.

Indeed, LLMs, by their nature, can inadvertently generate outputs that may pose risks to both organizations and individuals. These risks include:

A lack of control over access to information. When using LLMs, you need to control where the technology is used and who has access to what.

The potential exposure of sensitive or confidential information, particularly with Retrieval-Augmented Generation (RAG), where you supply your own knowledge to the LLM so that it can give contextually relevant answers.

The generation of incorrect information that may sound plausible (often referred to as hallucinations).

Secure LLM connections#

Safe gateway#

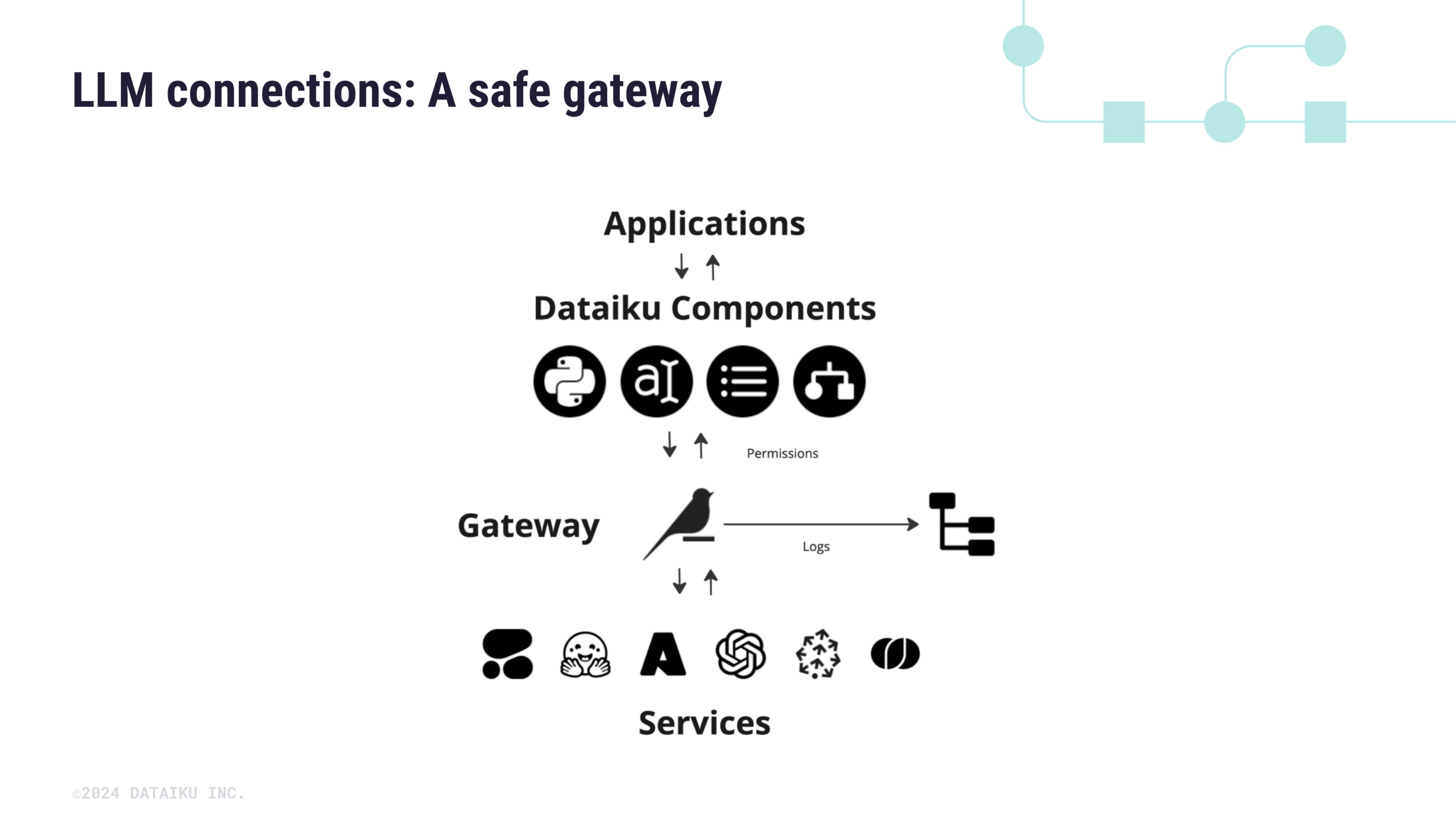

In Dataiku, LLM connections act as a secure API gateway between your applications and the underlying services. The connections sit between the Dataiku components and an API endpoint, transmitting the required requests to the relevant service.

This gateway is set by an administrator and allows you to:

Control the user access to regulate who can access the LLM connections.

Enable security measures such as rate limiting, network settings, and tuning parameters (to define the number of retries, the timeout, and the maximum parallelism).

Apply security policies.
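To make the gateway idea concrete, here is a minimal, self-contained sketch of the three controls listed above: group-based access, rate limiting, and retries. It isn't Dataiku's implementation or API. `GatewayConfig`, `Gateway`, and every parameter name are hypothetical, and the `backend` function stands in for the remote LLM service. Timeout and parallelism handling are left out for brevity.

```python
import time
from dataclasses import dataclass, field
from typing import Callable, List, Set


class AccessDenied(Exception):
    """Raised when the caller belongs to no authorized group."""


class RateLimited(Exception):
    """Raised when the per-minute request budget is exhausted."""


@dataclass
class GatewayConfig:
    # Hypothetical settings; names are illustrative, not Dataiku's.
    allowed_groups: Set[str]
    max_requests_per_minute: int = 60
    max_retries: int = 2


@dataclass
class Gateway:
    config: GatewayConfig
    backend: Callable[[str], str]  # stand-in for the remote LLM service
    _timestamps: List[float] = field(default_factory=list)

    def query(self, user_groups, prompt, now=None):
        now = time.monotonic() if now is None else now

        # 1. Access control: the caller must share a group with the connection.
        if not self.config.allowed_groups & set(user_groups):
            raise AccessDenied("user is not allowed to use this connection")

        # 2. Rate limiting: sliding 60-second window over accepted requests.
        self._timestamps = [t for t in self._timestamps if now - t < 60]
        if len(self._timestamps) >= self.config.max_requests_per_minute:
            raise RateLimited("too many requests in the last minute")
        self._timestamps.append(now)

        # 3. Retries: re-send the request on transient connection errors.
        last_error = None
        for _ in range(self.config.max_retries + 1):
            try:
                return self.backend(prompt)
            except ConnectionError as exc:
                last_error = exc
        raise last_error
```

Because every request goes through `query`, the policy is defined once at the connection rather than in each application.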

Centralized management of access keys, models, and permissions#

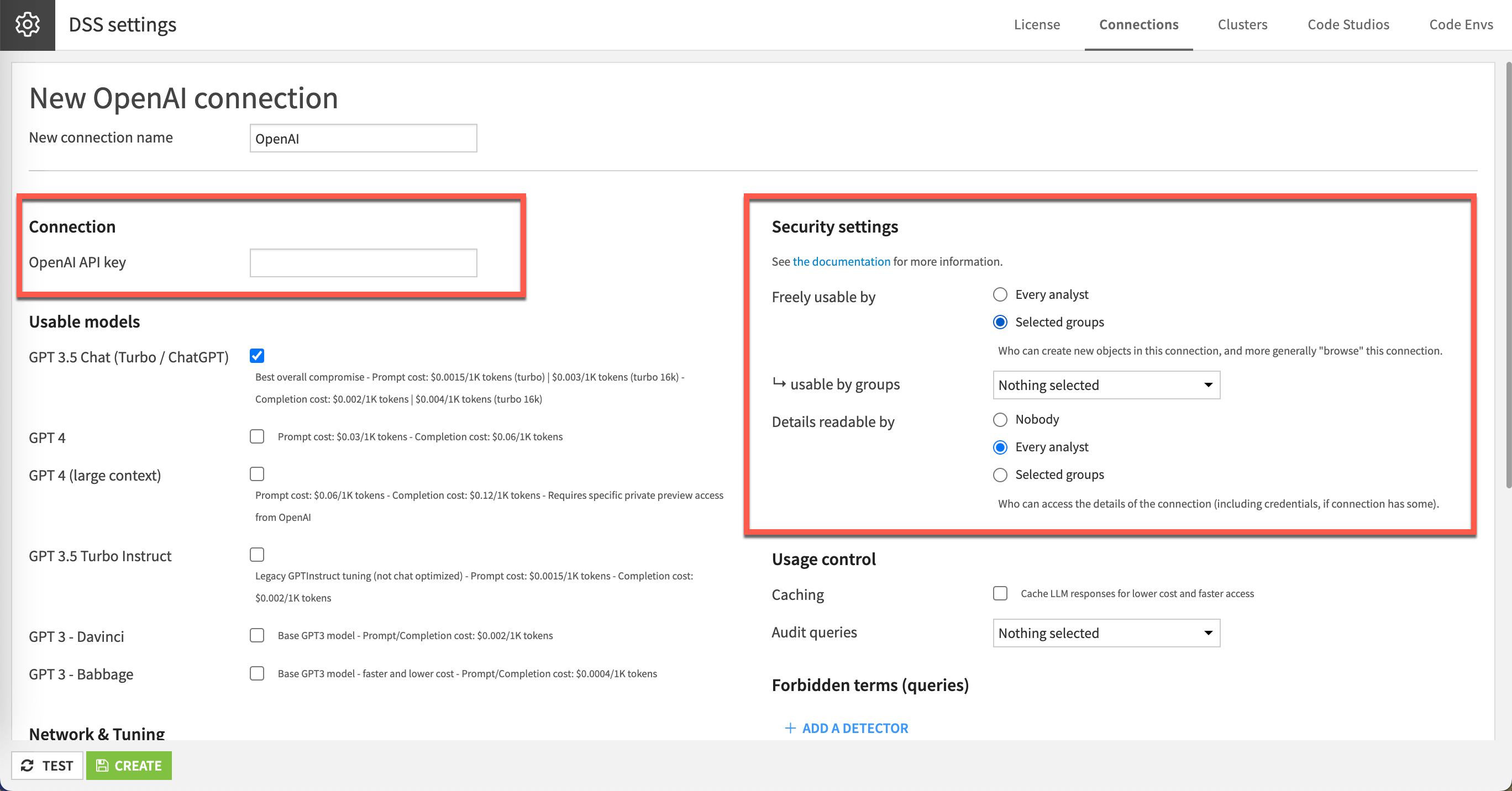

When configuring LLM connections, the administrator must provide the proper credentials, such as an API key for many services, an S3 connection for AWS Bedrock, or a connection to a local Hugging Face model. Then, the administrator can define which models are made available.

This centralizes the management of access keys, ensuring that only approved services are used.

The administrators also configure the security settings at the connection level to define which user groups can use the connection, thus ensuring the underlying services are properly managed.

See also

For more information, see the LLM connections article in the reference documentation.

Content moderation and sensitive data management#

Still at the connection level, the administrators can define a certain number of filters and moderation techniques to avoid toxic interactions and restrict the transmission of sensitive data, particularly when using self-hosted or private LLMs.

In Dataiku, the LLM connections provide three main filters to moderate the content and detect and manage sensitive data:

Toxicity detection

PII (Personally Identifiable Information) detection

Forbidden terms

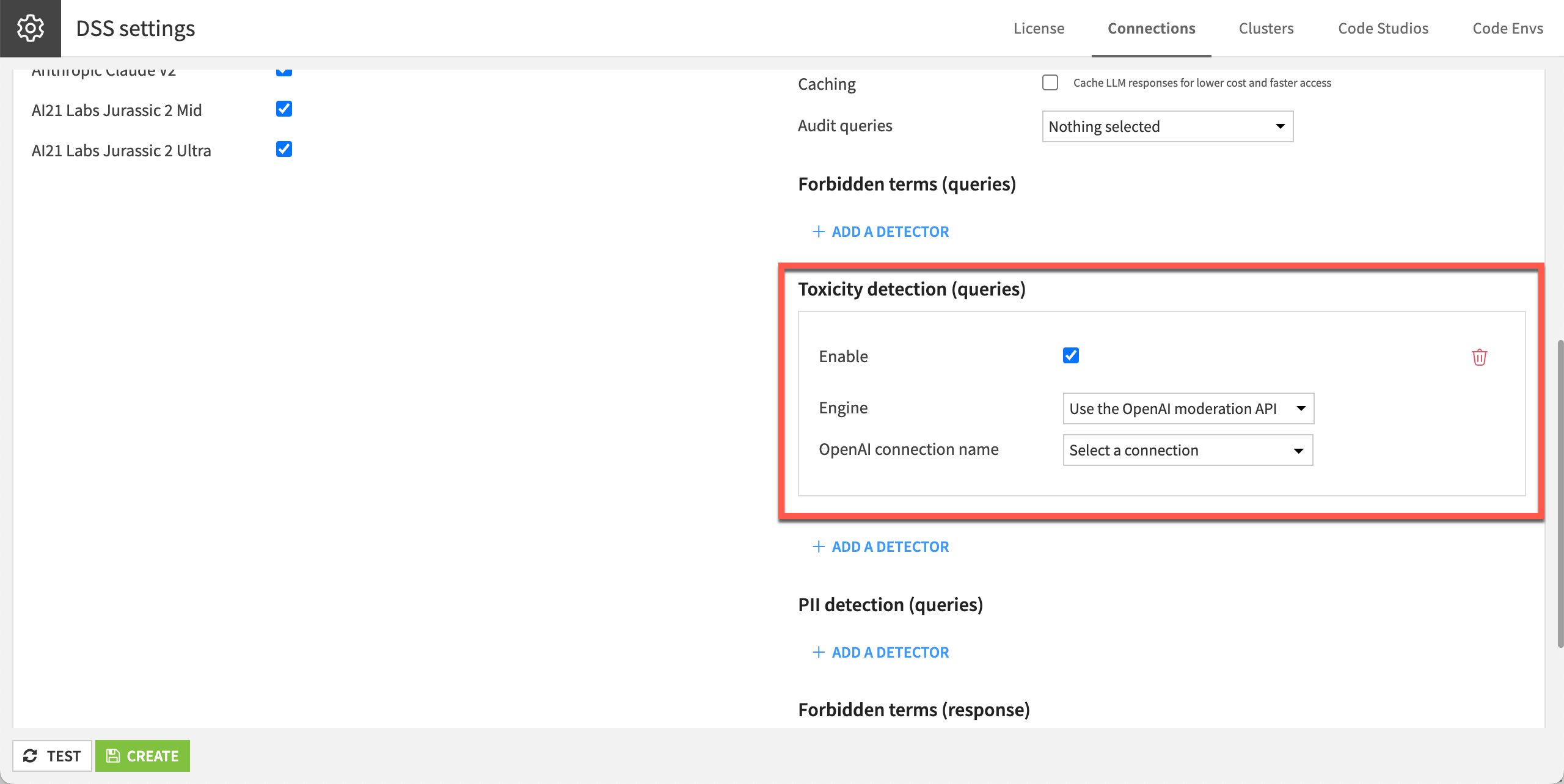

Toxic language detection#

Toxicity detection is key to ensuring that the model’s input or output doesn’t contain inappropriate or offensive content, and to defining appropriate remedies if it does.

To mitigate this risk, the Dataiku administrators can set the LLM connection to moderate the content both for queries and responses, leveraging dedicated content moderation models to filter inputs and outputs.

If toxic content is spotted, whether in a query or a response, the API call fails with an explicit error.
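The behavior described above, where both the query and the response are checked and the call fails explicitly, can be sketched as follows. This is an illustration under stated assumptions, not Dataiku code. `moderated_completion` and `ToxicContentError` are hypothetical names, `llm` is any function that maps a prompt to a response, and `is_toxic` stands in for a dedicated content moderation model.

```python
class ToxicContentError(Exception):
    """Explicit error raised when moderation flags a query or a response."""

    def __init__(self, stage):
        super().__init__(f"Toxic content detected in {stage}")
        self.stage = stage  # "query" or "response"


def moderated_completion(query, llm, is_toxic):
    """Run `llm` on `query`, moderating both the input and the output.

    `llm` and `is_toxic` are caller-supplied callables (assumptions of this
    sketch); a real setup would plug in a moderation model for `is_toxic`.
    """
    # Check the input first, so a toxic query never reaches the LLM.
    if is_toxic(query):
        raise ToxicContentError("query")

    response = llm(query)

    # Check the output before it is returned to the application.
    if is_toxic(response):
        raise ToxicContentError("response")
    return response
```

An application calling through such a wrapper only needs to catch one error type to handle both cases.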

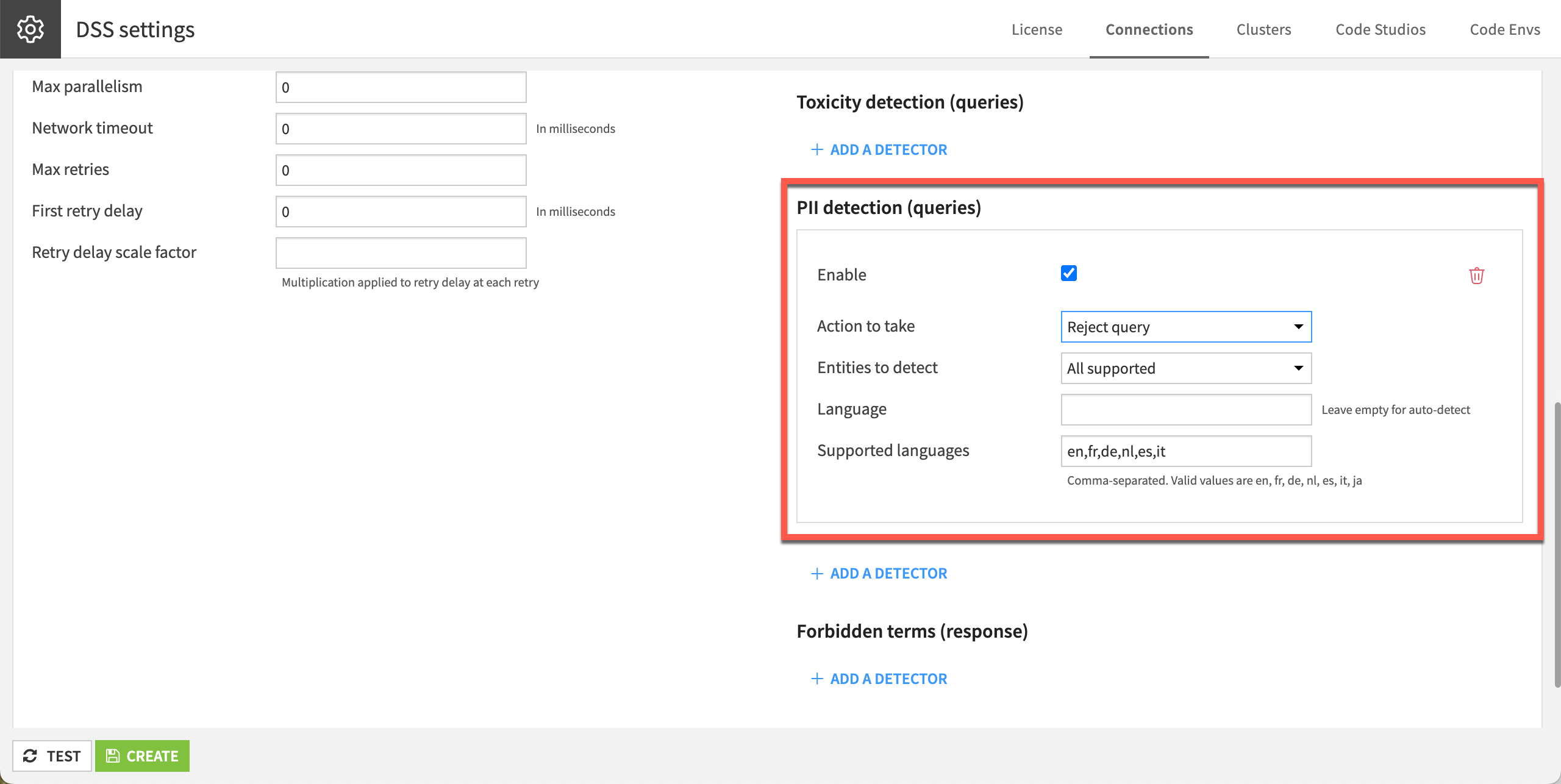

PII detection#

PII detection screens every query and lets you define the action to take when PII is found, such as:

Rejecting the query.

Replacing the PII with a placeholder, etc.

It leverages the Presidio library.
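The two actions listed above (reject the query, or replace the PII with a placeholder) can be illustrated with a small standard-library sketch. It deliberately does not use Presidio. The two regular expressions are simplistic stand-ins for a real PII recognizer, and `apply_pii_policy`, `PIIRejected`, and the action names are hypothetical.

```python
import re

# Simplistic stand-in patterns (assumption): a real detector covers far more
# entity types and uses more than regular expressions.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "PHONE": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
}


class PIIRejected(Exception):
    """Raised when the policy is 'reject' and the query contains PII."""


def apply_pii_policy(query, action="replace"):
    """Return the query to forward to the LLM, or raise if it is rejected."""
    found = [label for label, pattern in PII_PATTERNS.items() if pattern.search(query)]
    if not found:
        return query  # nothing detected: forward the query unchanged

    if action == "reject":
        raise PIIRejected("query contains PII: " + ", ".join(found))
    if action == "replace":
        # Substitute each detected entity with a typed placeholder.
        for label, pattern in PII_PATTERNS.items():
            query = pattern.sub(f"<{label}>", query)
        return query
    raise ValueError(f"unknown action: {action!r}")
```

With the `replace` action, the LLM still receives a usable query, but without the sensitive values.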

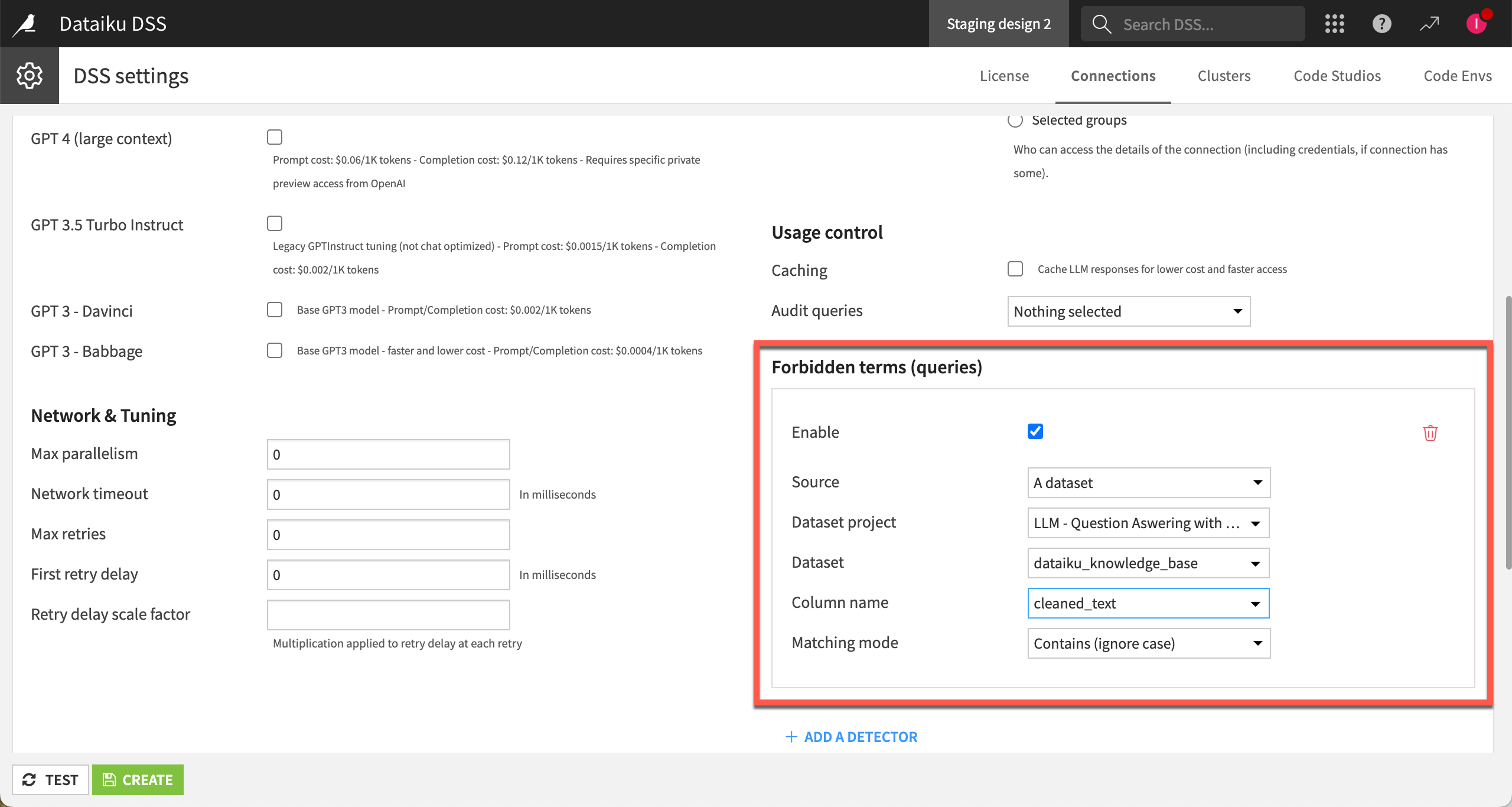

Forbidden terms#

The administrator can add forbidden terms to filter out specific terms from both queries and responses, ensuring that sensitive or prohibited content is excluded from LLM interactions.

The filter defines the source dataset that contains the forbidden terms and a matching mode to enforce the restriction.
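As a rough illustration of how a matching mode changes what gets flagged, the sketch below checks a text against a list of terms in two modes. The mode names `whole_word` and `substring`, the case-insensitive comparison, and the `find_forbidden_terms` function are all assumptions made for this example. The article doesn't specify which modes Dataiku offers, and in practice the terms would be read from the source dataset.

```python
import re


def find_forbidden_terms(text, terms, mode="whole_word"):
    """Return the forbidden terms found in `text` (case-insensitive).

    mode="whole_word": the term must appear as a standalone word or phrase.
    mode="substring":  the term may appear anywhere, even inside another word.
    Both mode names are hypothetical.
    """
    hits = []
    for term in terms:
        if mode == "whole_word":
            pattern = r"\b" + re.escape(term) + r"\b"
        elif mode == "substring":
            pattern = re.escape(term)
        else:
            raise ValueError(f"unknown matching mode: {mode!r}")
        if re.search(pattern, text, flags=re.IGNORECASE):
            hits.append(term)
    return hits


# Example terms, as they might be loaded from a one-column dataset.
terms = ["Project Falcon", "secret"]
text = "The secretary mentioned project falcon."
print(find_forbidden_terms(text, terms, mode="whole_word"))
print(find_forbidden_terms(text, terms, mode="substring"))
```

The stricter mode avoids false positives such as "secret" inside "secretary", while the looser mode catches variants at the cost of more noise.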

Incorrect information#

Mitigating the risk of hallucinations and misinformation is crucial. The quality and reliability of the knowledge banks used in RAG directly impact the accuracy of the model’s outputs.

It’s therefore important to maintain these knowledge banks carefully, ensuring they’re up-to-date and verified for accuracy.

When setting up your knowledge bank in an Embed recipe, you can ask the LLM to return the sources used to generate its responses (see the Print sources option).
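Returning sources lets readers check an answer against the documents it was drawn from. The sketch below shows the general idea only and doesn't reflect how the Print sources option is implemented. `answer_with_sources` and the chunk structure (dictionaries with `text` and `source` keys) are hypothetical.

```python
def answer_with_sources(answer, retrieved_chunks):
    """Append a de-duplicated list of sources to a generated answer.

    `retrieved_chunks` is assumed to be a list of dicts with a "source" key,
    one per knowledge bank chunk that was passed to the LLM.
    """
    sources = []
    for chunk in retrieved_chunks:
        source = chunk["source"]
        if source not in sources:  # keep first occurrence, preserve order
            sources.append(source)

    lines = [answer, "", "Sources:"] + [f"- {s}" for s in sources]
    return "\n".join(lines)


chunks = [
    {"text": "Refunds are accepted within 30 days.", "source": "refund_policy.pdf"},
    {"text": "Refunds require a receipt.", "source": "refund_policy.pdf"},
    {"text": "Contact support to start a refund.", "source": "support_faq.md"},
]
print(answer_with_sources("Refunds are accepted within 30 days with a receipt.", chunks))
```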

Next steps#

For more information on LLM connections and security, see:

LLM connections article in the reference documentation.

Concept | LLM connections article in the Knowledge Base.