Concept | LLM connections#

Dataiku provides connections to a variety of Generative AI services, both hosted and self-hosted, that are required to build Large Language Model (LLM) applications.

The LLM connections can then be leveraged with Dataiku’s:

Agents, to power the agent’s tasks.

LLM visual recipes, such as the Prompt recipe, text processing recipes (summarization, classification), and the Embed recipe for Retrieval-Augmented Generation (RAG).

Python API for usage in code recipes, Code Studios, notebooks, and web applications.
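As an illustration of the Python API route listed above, here is a minimal sketch of sending one prompt through an LLM connection. It assumes the code runs where a Dataiku project handle is available; the helper name `ask_llm` and the LLM identifier are hypothetical placeholders, and the valid identifiers depend on the connections configured on your instance.

```python
def ask_llm(project, llm_id, prompt):
    """Send one prompt through an LLM Mesh connection and return the reply text.

    `project` is expected to be a Dataiku project handle, for example
    dataiku.api_client().get_default_project() inside a notebook or recipe.
    `ask_llm` itself is an illustrative helper, not part of the Dataiku API.
    """
    llm = project.get_llm(llm_id)        # look up the LLM by its identifier
    completion = llm.new_completion()    # start a new completion request
    completion.with_message(prompt)      # add the user message
    response = completion.execute()      # run the query through the LLM Mesh
    if not response.success:
        raise RuntimeError("LLM query failed")
    return response.text


# Inside Dataiku, usage might look like this (the LLM id is a placeholder):
#
#   import dataiku
#   project = dataiku.api_client().get_default_project()
#   print(ask_llm(project, "my-llm-id", "Summarize LLM connections in one sentence."))
```

Passing the project handle in as an argument keeps the helper easy to exercise outside Dataiku with a stand-in object.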

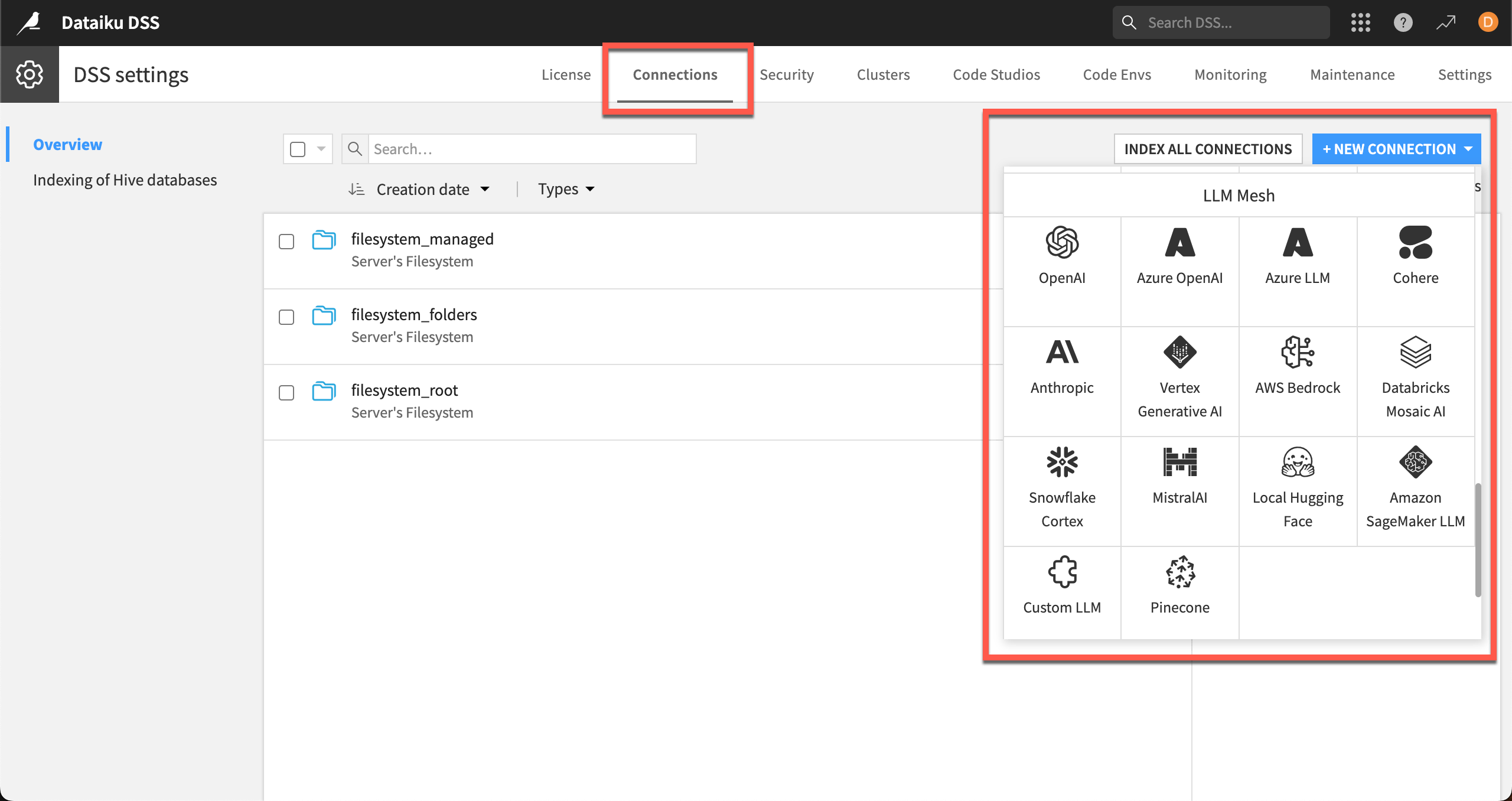

Instance administrators can configure connections to LLMs from the waffle menu > Administration > Connections > + New Connection > LLM Mesh.

Types of connections#

In Dataiku’s LLM Mesh, you can connect to models that are hosted by service providers, hosted locally, or developed locally.

Hosted LLM connections#

Supported connections to hosted Generative AI services include providers such as OpenAI, Hugging Face, and Cohere.

Connection settings vary depending on the requirements of the providers.

Hugging Face local connections#

Users can host, manage, and use open-source Hugging Face models locally with Dataiku’s Hugging Face connection. This connection includes containerized execution and integration with NVIDIA GPUs to simplify model execution. Users can start experimenting with Hugging Face models without downloading the model into the code environment’s resources.

Administrators can configure which Hugging Face models are available for different tasks, and other settings such as containerized execution, in the connection settings.

Custom LLM connections#

Users who have developed their own LLMs with API endpoints can integrate these custom services into Dataiku via the Custom LLM connection. Setting up a Custom LLM connection requires developing a specific plugin using the Java LLM component to map the endpoint’s behavior.

LLM usage#

Monitoring and controlling LLM usage is a major consideration for organizations using LLM services. The Dataiku LLM Mesh provides features for logging and tracing usage.

Usage monitoring#

Tracking LLM cost estimates requires custom development, or is available as a pre-built Solution for Advanced LLM Mesh customers. Dataiku’s Resource Usage Monitoring Solution does not track LLM usage and is not designed to handle LLM logs and costs.

Using cache to reduce costs#

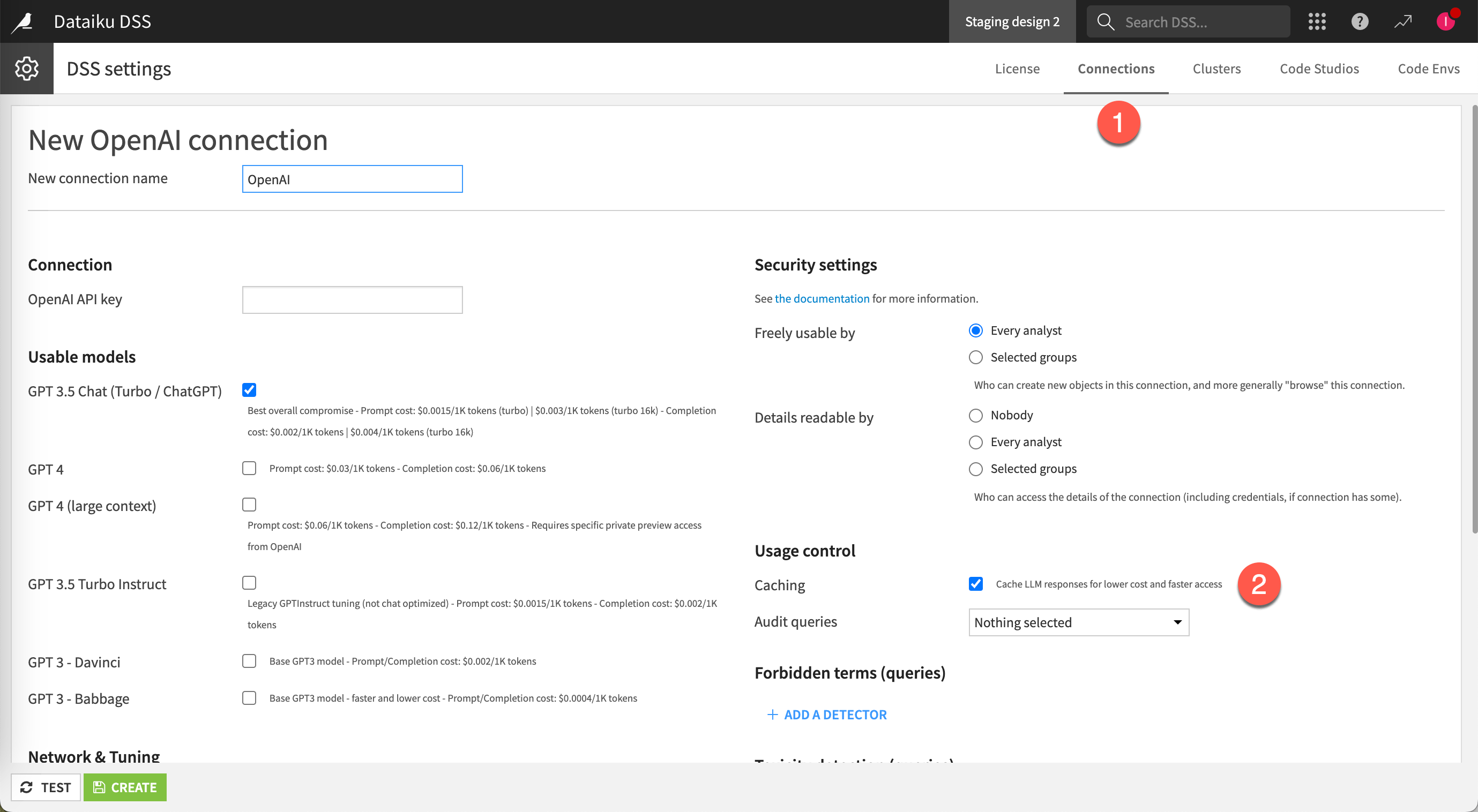

The LLM Mesh provides caching capabilities that an administrator can configure at the LLM connection level. By caching responses to common queries, the LLM Mesh avoids unnecessary re-generation of answers, reducing avoidable costs.

Administrators can enable caching in the LLM connection under Usage control.
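To make the idea of response caching concrete, here is a conceptual sketch of an exact-match cache placed in front of an LLM call. It is not Dataiku's implementation: the class `CachedLLM`, the key construction, and the `query_fn` callable are all assumptions made for illustration. In Dataiku, caching is configured in the connection settings rather than written in code.

```python
import hashlib
import json


class CachedLLM:
    """Wrap a (model, prompt, **settings) -> text callable with an exact-match cache."""

    def __init__(self, query_fn):
        self._query_fn = query_fn   # hypothetical function that calls the LLM
        self._cache = {}            # cache key -> stored answer
        self.hits = 0
        self.misses = 0

    @staticmethod
    def _key(model, prompt, settings):
        # Identical model, prompt, and settings always produce the same key.
        payload = json.dumps(
            {"model": model, "prompt": prompt, "settings": settings},
            sort_keys=True,
        )
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()

    def query(self, model, prompt, **settings):
        key = self._key(model, prompt, settings)
        if key in self._cache:
            self.hits += 1          # answer reused, no new generation cost
            return self._cache[key]
        self.misses += 1
        answer = self._query_fn(model, prompt, **settings)
        self._cache[key] = answer
        return answer
```

In this sketch only a repeated query with the same model, prompt, and settings is served from the cache; any change to one of them triggers a fresh call.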

Auditing LLM connections#

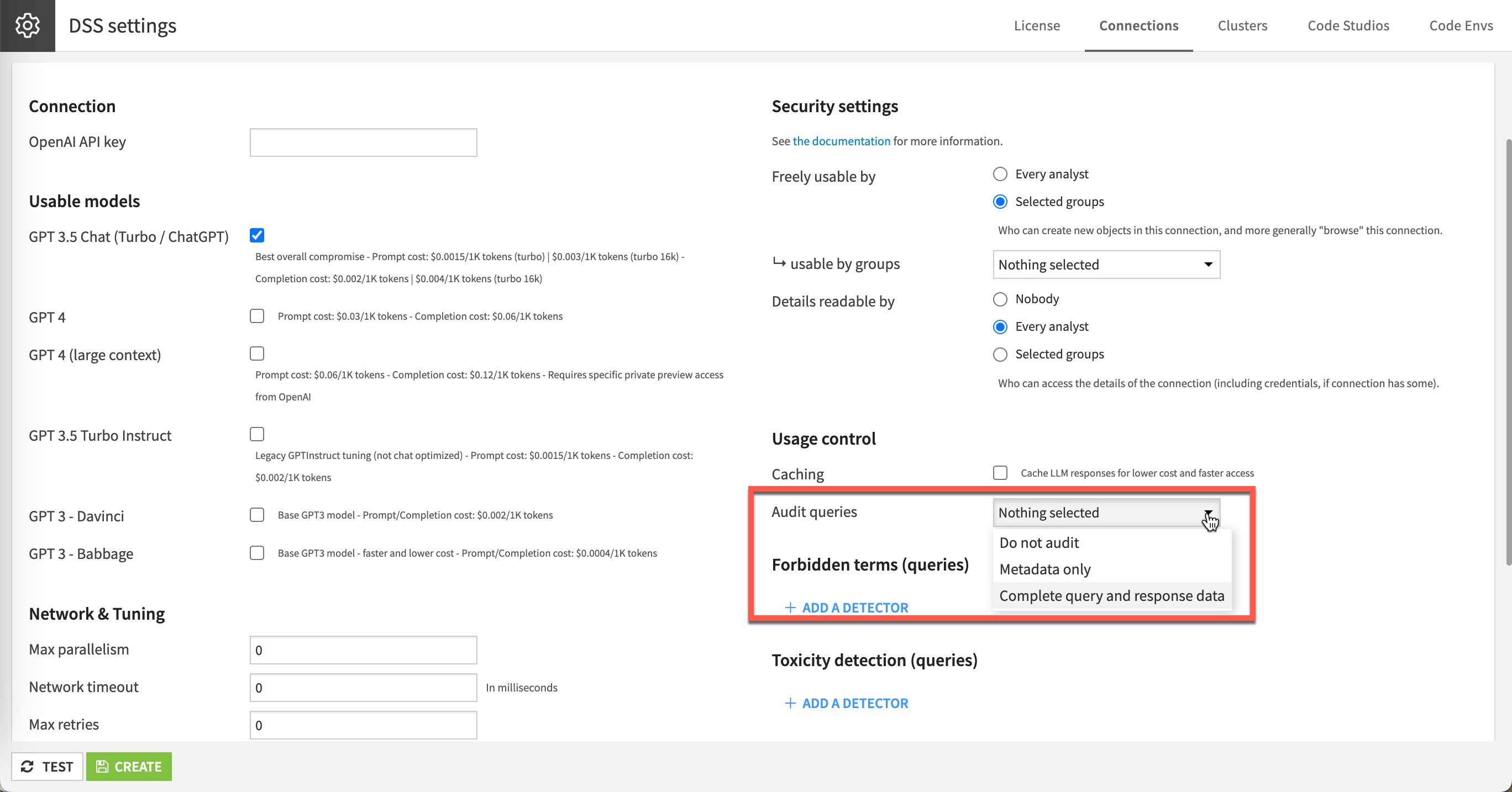

Dataiku administrators can configure the level of auditing and logging to keep a complete record of queries and responses. They can either:

Disable the audit.

Log only the metadata.

Log all the queries/responses.

Logs are then available for download from the administration panel or can be used directly in a Dataiku project for further analysis, for example, to ensure extensive cost control and monitoring. For further information, see the Usage monitoring section above.
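The three auditing levels listed above can be pictured with a small sketch of what each level would retain for a single query. This is a conceptual illustration only: the enum, the function name, and the record fields are hypothetical and do not reflect the actual format of Dataiku's audit logs.

```python
from enum import Enum


class AuditLevel(Enum):
    DISABLED = "disabled"            # audit turned off
    METADATA_ONLY = "metadata_only"  # log only the metadata
    FULL = "full"                    # log all queries and responses


def build_audit_record(level, metadata, query, response):
    """Return the record to store for one LLM call, or None if auditing is off.

    `metadata` is a dict of hypothetical fields (for example user or model id).
    """
    if level is AuditLevel.DISABLED:
        return None
    record = dict(metadata)          # copy so the caller's dict is not modified
    if level is AuditLevel.FULL:
        record["query"] = query
        record["response"] = response
    return record
```

The metadata-only level keeps enough to count and attribute calls without storing prompt or response text, while the full level retains both.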