Concept | Definition, challenges, and principles of MLOps

Putting machine learning models into production is a significant challenge for many organizations. As AI initiatives expand, MLOps becomes the cornerstone of ensuring that deployed models are well maintained, perform as expected, and don’t adversely affect the business.

Let’s define MLOps, see how it compares to DevOps, discuss the challenges of managing the lifecycle of machine learning models at scale, and uncover the main principles behind MLOps.

Definition

Defining MLOps isn’t as straightforward as it may seem. MLOps involves the standardization and streamlining of machine learning lifecycle management. An accurate description broadens the scope of MLOps beyond the deployment and monitoring of models in production to include the entire operationalization puzzle.

While standard software can run in production for years without requiring an update, this situation is far from realistic for a machine learning model. There is an inherent decay in model predictions that requires periodic retraining. Manually updating models quickly becomes tedious and isn’t scalable.

Automation begins with identifying:

- Which metrics need to be monitored?
- At what point or range do these metrics become worrisome?
- What indicators determine whether a new version of a model is outperforming the current version?
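As a rough illustration, the three questions above can be written down as explicit rules. This is a minimal sketch, not a prescribed method: the `MetricRule` class, the AUC metric, the threshold values, and the `min_gain` margin are all hypothetical choices made for the example.

```python
from dataclasses import dataclass


@dataclass
class MetricRule:
    """Hypothetical alert rule: which metric to watch and when it becomes worrisome."""
    name: str
    warn_below: float  # below this value, the metric deserves attention
    fail_below: float  # below this value, action is required


def metric_status(rule: MetricRule, value: float) -> str:
    """Classify a monitored metric value as 'ok', 'warn', or 'fail'."""
    if value < rule.fail_below:
        return "fail"
    if value < rule.warn_below:
        return "warn"
    return "ok"


def challenger_wins(current_score: float, new_score: float,
                    min_gain: float = 0.01) -> bool:
    """Treat the new model version as better only if it wins by a margin."""
    return new_score - current_score >= min_gain


# Assumed example values, for illustration only.
auc_rule = MetricRule(name="auc", warn_below=0.80, fail_below=0.70)
print(metric_status(auc_rule, 0.76))
print(challenger_wins(current_score=0.76, new_score=0.79))
```

Requiring a minimum gain, rather than any improvement at all, is one way to avoid replacing a production model because of noise in the evaluation data.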

These questions highlight the importance of seeing MLOps as a complete puzzle, with pieces coming from designing, building, deploying, monitoring, and governing models.

MLOps vs. DevOps

MLOps isn’t an entirely new approach. Its primary inspiration is DevOps, and practitioners often describe MLOps as “DevOps for ML.” While there are similarities, there are also crucial differences.

The core concept of DevOps is breaking down team silos. This concept should be fundamental to all MLOps initiatives (as it is for DevOps). Another similarity is having a simple, reliable, and automated way of deploying any project.

The most crucial difference between MLOps and DevOps is that managing data is critical for an ML project. Another key differentiator is the need for responsible and ethical AI. This is an essential aspect of MLOps that organizations shouldn’t overlook.

These differences underscore the need for teamwork between DevOps engineers and ML practitioners, bringing back the notion of breaking silos.

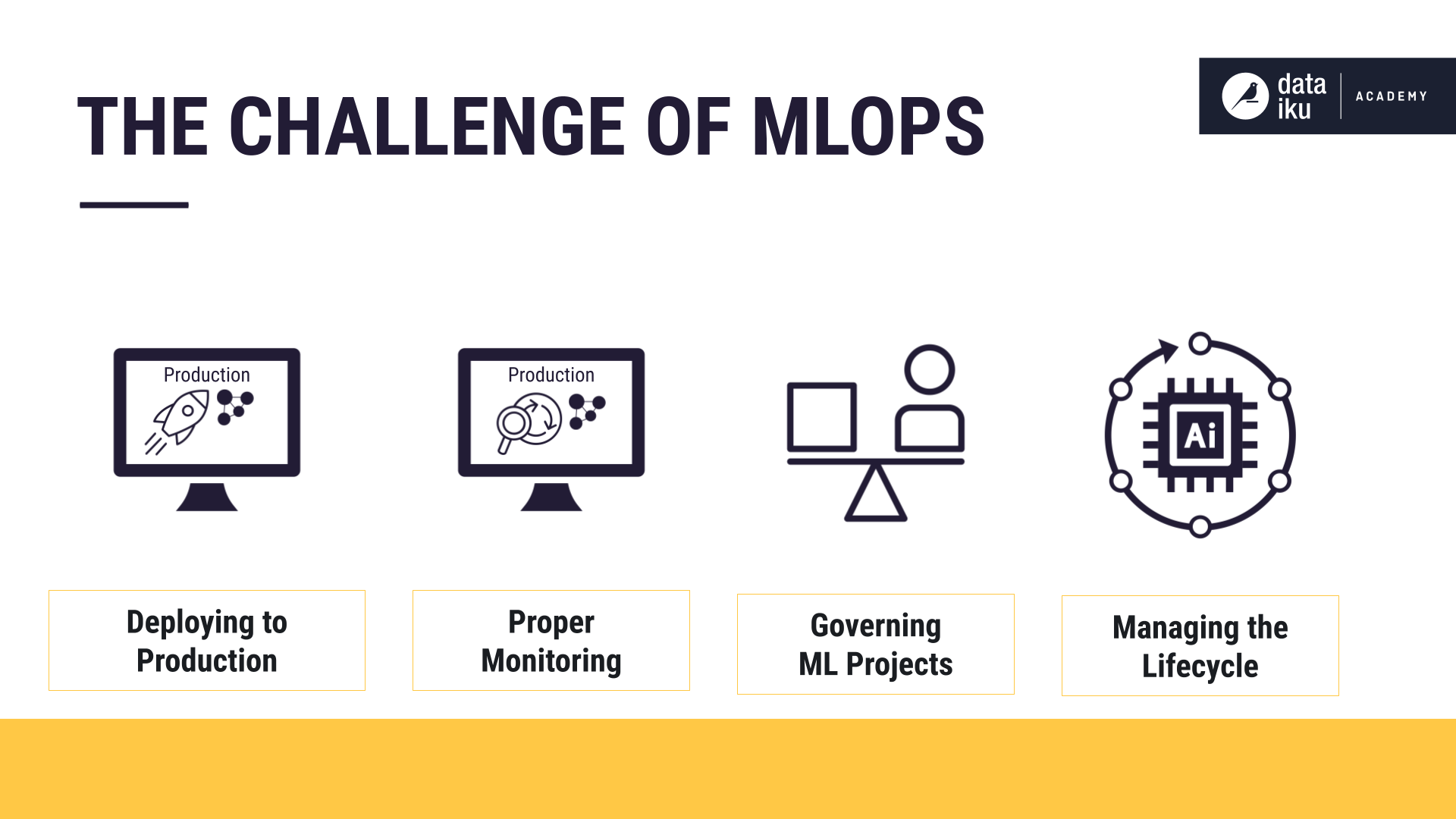

MLOps challenges

Why is the management of ML models at scale so challenging? Let’s discuss four reasons.

Deploying to production

Models need to be put into production to reap their full benefit, but doing so at scale presents new challenges. Existing DevOps and DataOps expertise isn’t enough. There are fundamental challenges with managing machine learning models in production.

Machine learning operationalization requires a mix of skills. The organization will need to identify the various roles and skills necessary for successful ML operationalization. They’ll need to map these skills to the activities that are part of any data science and ML project.

Proper monitoring

Once AI projects are up and running in production, the real work begins. Teams need to periodically update production models based on newer data, detected data drift, or an appropriate schedule. Monitoring involves having the correct metrics defined and the correct tools in place.
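One way to detect data drift on a numeric feature is to compare the data seen in production with the data used at training time. The sketch below uses the population stability index (PSI), which is one common drift measure among several and is not named in this article. The function names, the bin count, and the 0.2 alert threshold (a widely used rule of thumb) are assumptions for illustration.

```python
import math
from typing import Sequence


def psi(reference: Sequence[float], current: Sequence[float],
        n_bins: int = 10, eps: float = 1e-6) -> float:
    """Population stability index between two samples of one numeric feature."""
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / n_bins or 1.0  # fall back to 1.0 for a constant feature

    def bin_shares(values: Sequence[float]) -> list:
        counts = [0] * n_bins
        for v in values:
            idx = int((v - lo) / width)
            # Values outside the reference range go to the first or last bin.
            counts[min(max(idx, 0), n_bins - 1)] += 1
        # Replace empty bins with a tiny share so the logarithm is defined.
        return [max(c / len(values), eps) for c in counts]

    ref, cur = bin_shares(reference), bin_shares(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))


def needs_retraining(psi_value: float, threshold: float = 0.2) -> bool:
    """Flag the model for review when drift exceeds the assumed threshold."""
    return psi_value > threshold


# Synthetic data: the "production" sample is shifted relative to training.
training_sample = [i / 100 for i in range(100)]
production_sample = [x + 0.5 for x in training_sample]
print(needs_retraining(psi(training_sample, production_sample)))
```

In practice, a check like this would run for each monitored feature on a schedule, and a flagged feature would prompt a review or retraining rather than an automatic model swap.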

Governing ML projects

Updating projects manually in production can be challenging and risky. An organization that takes on the challenge of MLOps will have to consider what level of governance is needed.

One consideration might be governing the AI project to ensure it delivers on its responsibility to all stakeholders, including regulatory entities and the public.

Another consideration might be the governance of the data to ensure it’s free of bias and complies with regulations, such as the General Data Protection Regulation (GDPR).

Managing the project lifecycle

The lifecycle of ML projects requires a supportive architecture to move project files between design, test, and production environments. Data scientists need to see all deployed files, and data engineers need to know when the project requires testing and rollout.

Furthermore, there are many dependencies. Both data and business needs are constantly changing. Ensuring that data and models continue to meet the original goal over time is mandatory.

Organizations can adopt MLOps practices to help overcome these challenges. With MLOps, teams can understand the models and model dependencies even while decision automation is happening.

Governing principles of MLOps

Now that we know the definition of MLOps and some of the challenges organizations face, let’s uncover the governing principles behind MLOps.

Compatible with deployment

When we haven’t prepared our models for deployment, the models can become difficult to interpret, non-viable, unfair, or inconsistent. MLOps aims to ensure ML models are reproducible in production and helps us prepare data, models, and dashboards for deployment.

Safe and robust environment

MLOps ensures that computations are scalable and efficient. It ensures that ML pipelines, whether for batch-scoring or real-time predictions, are robust.

Governed

Constantly feeding our production models new data can lead to data quality and model performance issues. For this reason, we must govern data quality and model performance.
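Governing data quality can start with a simple gate on each incoming batch before it reaches the model. The following is a minimal sketch under stated assumptions: the column names, the allowed ranges, and the 5% tolerance are hypothetical, and a real project would derive them from its own data.

```python
from typing import Any, Dict, List, Tuple

# Hypothetical expectations: column name -> (minimum, maximum) allowed value.
EXPECTED_RANGES: Dict[str, Tuple[float, float]] = {
    "age": (0, 120),
    "income": (0, 1_000_000),
}


def quality_report(rows: List[Dict[str, Any]],
                   max_bad_share: float = 0.05) -> Dict[str, Any]:
    """Count rows with missing or out-of-range values and flag the batch."""
    bad = 0
    for row in rows:
        for column, (low, high) in EXPECTED_RANGES.items():
            value = row.get(column)
            if value is None or not (low <= value <= high):
                bad += 1
                break  # count each row at most once
    share = bad / len(rows) if rows else 0.0
    return {"bad_rows": bad, "bad_share": share, "passed": share <= max_bad_share}


# Made-up batch: one clean row and three rows with problems.
batch = [
    {"age": 30, "income": 50_000},
    {"age": None, "income": 1_000},  # missing value
    {"age": 200, "income": 10},      # out of range
    {"age": 40},                     # missing column
]
print(quality_report(batch))
```

A batch that fails the gate could be held back for review instead of being scored or used for retraining.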

Able to be updated

Will our model continue to meet the needs for which it was designed? Over time, it might not, so the organization must be able to update it.

Summary

Ultimately, MLOps is about making machine learning scale inside organizations by incorporating techniques and technologies, such as DevOps, and expanding them to include machine learning, data security, and governance. MLOps turbocharges the ability of organizations to go farther and faster with machine learning.

Works Cited

Mark Treveil and the Dataiku team. Introducing MLOps: How to Scale Machine Learning in the Enterprise. O’Reilly, 2020.