Tutorial | Data quality and SQL metrics

Get started

Let’s learn how to evaluate the quality of SQL datasets using SQL metrics and data quality rules.

Objectives

In this tutorial, you will learn how to create a data quality rule that warns if a foreign key doesn’t have a valid primary key match. In other words, you’ll check for referential integrity.

To do so, you will:

Build a metric that uses the output of an SQL query.

Create a data quality rule that verifies the validity of the metric.

Examine the source of data quality warnings.

Prerequisites

To reproduce the steps in this tutorial, you’ll need:

Dataiku 12.6 or later.

An SQL connection configured in Dataiku.

Knowledge of relational databases and SQL queries.

Create the project

From the Dataiku Design homepage, click + New Project.

Select Learning projects.

Search for and select Data Quality and SQL Metrics.

If needed, change the folder into which the project will be installed, and click Create.

From the project homepage, click Go to Flow (or type g+f).

Alternatively, if your instance doesn't offer Learning projects, create the project as follows:

From the Dataiku Design homepage, click + New Project.

Select DSS tutorials.

Filter by Advanced Designer.

Select Data Quality and SQL Metrics.

From the project homepage, click Go to Flow (or type g+f).

Note

You can also download the starter project from this website and import it as a zip file.

Change connections

To get this data into your SQL database, let’s change the dataset connections.

Select the orders_filtered, customers_prepared, and orders_joined datasets.

Near the bottom of the right panel’s Actions tab, select Change connection.

For the new connection, select an available SQL connection.

Click Save.

Next, build the Flow.

Click Flow Actions at the bottom right of the Flow.

Click Build all.

Keep the default settings and click Build.

Use case

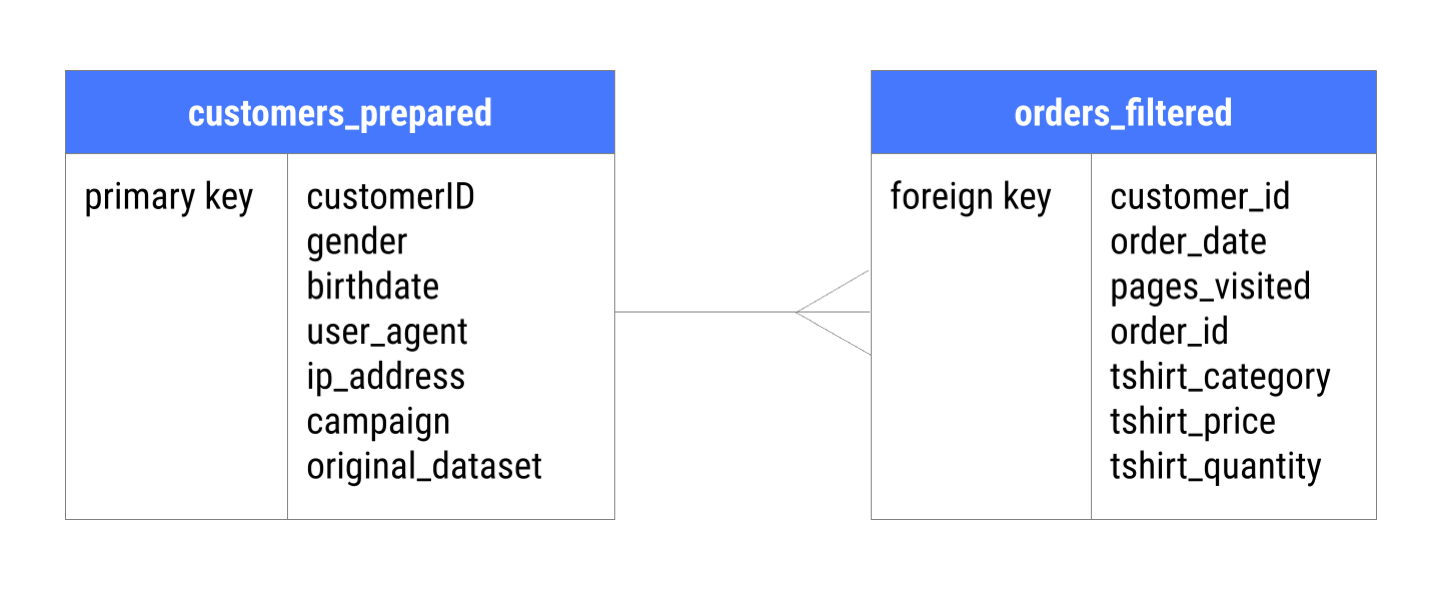

Let’s examine the orders_filtered and customers_prepared datasets in the Flow. The orders_filtered dataset tracks t-shirt orders, while customers_prepared contains customer information.

For this tutorial, one main requirement is that every customer in orders_filtered should have a corresponding entry in the customers_prepared dataset.

In other words, we need to ensure that all foreign keys in orders_filtered have matching primary keys in customers_prepared.
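The requirement amounts to a set difference: collect the primary keys from customers_prepared, then keep the orders whose foreign key is not among them. The minimal sketch below illustrates the idea in plain Python. The rows are hypothetical toy data, and only the column names customer_id and customerID come from the tutorial's query.

```python
# Minimal sketch of a referential-integrity check in plain Python.
# The rows below are hypothetical toy data, not the tutorial's datasets.


def orphaned_orders(orders, customers):
    """Return the orders whose customer_id has no matching customerID."""
    # Primary keys: every customerID present in the customers table.
    valid_ids = {c["customerID"] for c in customers}
    # Foreign keys: keep orders whose customer_id is not a known primary key.
    return [o for o in orders if o["customer_id"] not in valid_ids]


customers = [{"customerID": "c1"}, {"customerID": "c2"}]
orders = [
    {"order_id": 1, "customer_id": "c1"},
    {"order_id": 2, "customer_id": "c2"},
    {"order_id": 3, "customer_id": "c9"},  # c9 does not exist in customers
]

missing = orphaned_orders(orders, customers)
print(len(missing))  # → 1
```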

Note

This project comes with a data quality rule on orders_joined that validates this requirement. However, using an SQL metric can help you identify issues earlier in the data pipeline.

Test an SQL query

Our goal is to write an SQL query that returns the number of orders placed by customers who aren't in the customer dataset. Before creating an SQL metric, let's test the query to make sure it returns the correct result.

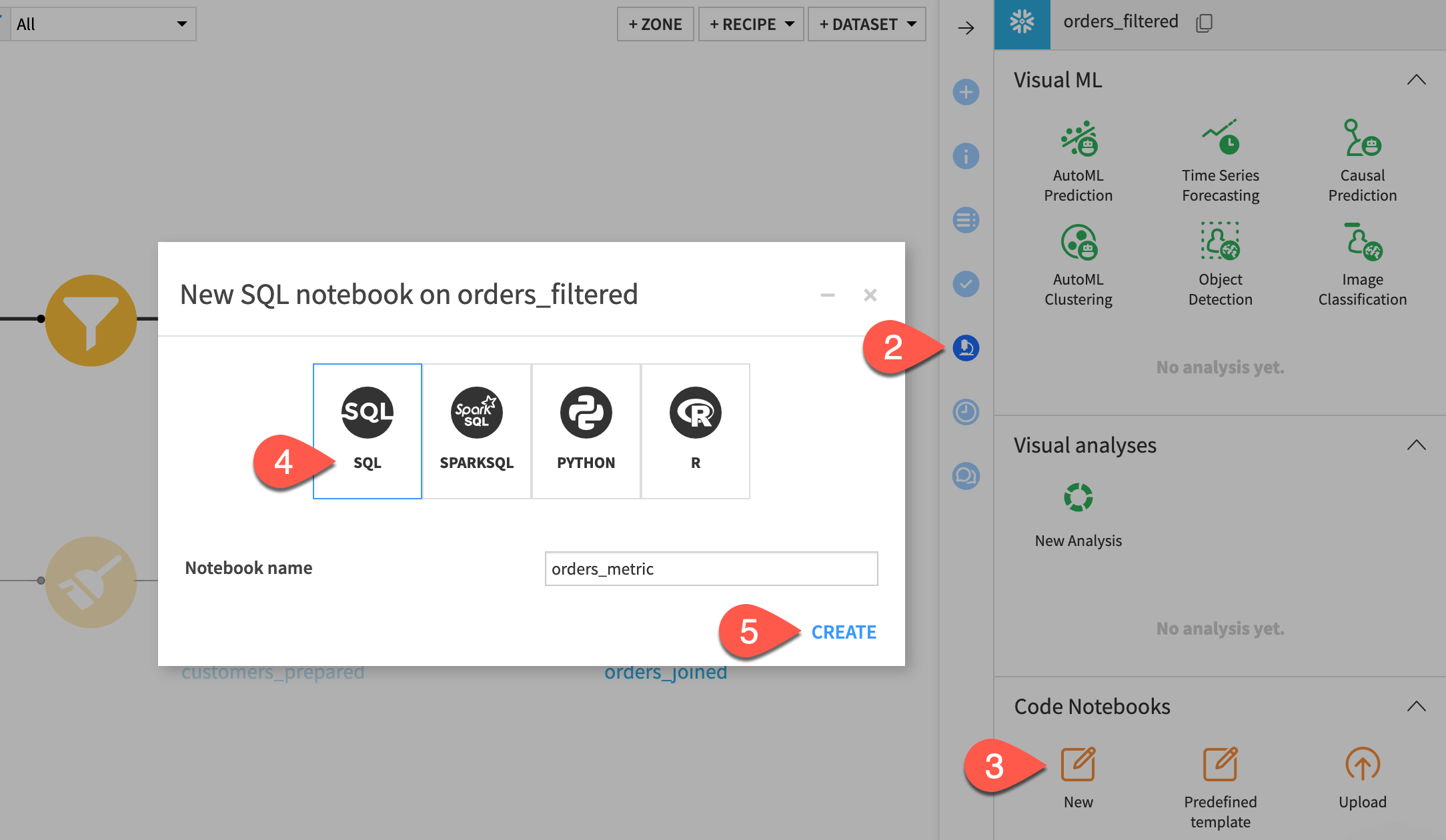

Select the orders_filtered dataset.

In the right panel, open the Lab tab.

In the Code Notebooks section, select New.

Select SQL and name it orders_metric.

Click Create.

The way you reference tables in your query will depend on the schema of your SQL database, your project key, and whether you are on Dataiku Cloud or a self-managed instance.

Testing in the notebook should help you determine the correct way to format your query.
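Because the exact table reference varies by setup, it can help to see what a fully qualified, quoted reference looks like. The sketch below fills the two placeholders of the query used in the following steps with double-quoted "schema"."table" names. The schema my_schema and the table names MYPROJECT_orders_filtered and MYPROJECT_customers_prepared are hypothetical; copy the real names from the notebook's Tables tab.

```python
# Hypothetical sketch: filling the query placeholders with quoted,
# schema-qualified table names. The schema and table names are made up.

QUERY_TEMPLATE = (
    "SELECT COUNT(*) AS missing_key_count "
    "FROM {orders} o "
    'LEFT JOIN {customers} c ON o."customer_id" = c."customerID" '
    'WHERE c."customerID" IS NULL;'
)


def quote_ident(name):
    """Double-quote an SQL identifier, escaping embedded double quotes."""
    return '"' + name.replace('"', '""') + '"'


def qualified_table(schema, table):
    """Build "schema"."table", or just "table" when no schema is given."""
    if schema:
        return quote_ident(schema) + "." + quote_ident(table)
    return quote_ident(table)


query = QUERY_TEMPLATE.format(
    orders=qualified_table("my_schema", "MYPROJECT_orders_filtered"),
    customers=qualified_table("my_schema", "MYPROJECT_customers_prepared"),
)
print(query)
```

On databases that follow standard SQL quoting, such as PostgreSQL, double-quoted identifiers are case-sensitive, so the names must match exactly. Other databases may quote differently; MySQL, for example, uses backticks by default.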

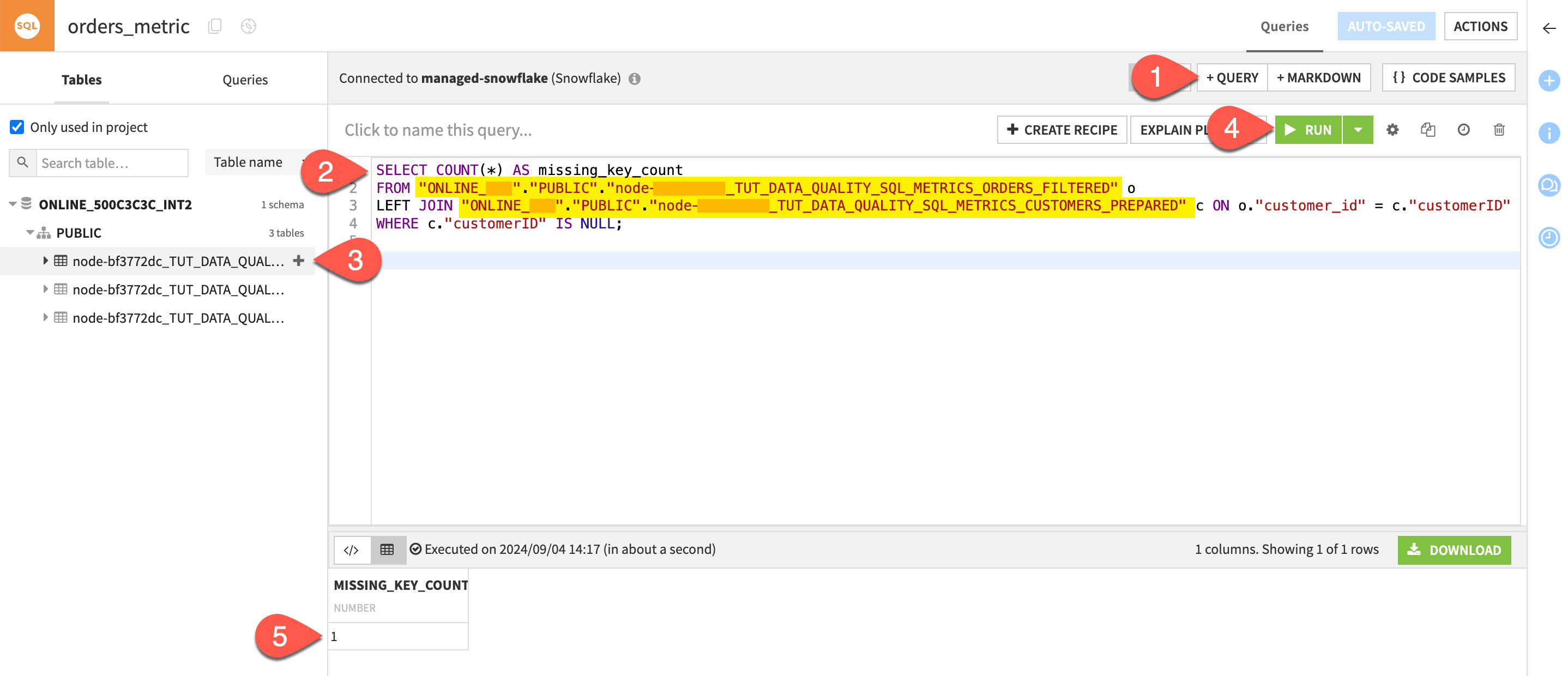

Click + Query.

Paste this query into the code editor:

```sql
SELECT COUNT(*) AS missing_key_count
FROM "{ORDERS_FILTERED_TABLE}" o
LEFT JOIN "{CUSTOMERS_PREPARED_TABLE}" c ON o."customer_id" = c."customerID"
WHERE c."customerID" IS NULL;
```

Replace "{ORDERS_FILTERED_TABLE}" and "{CUSTOMERS_PREPARED_TABLE}" with the correct table names.

Tip

You can paste the correct names into the code editor by switching to the Tables tab and clicking the plus sign next to the relevant table.

Click Run to execute the query.

Verify that the query returns 1. This means that one order in orders_filtered has a customer_id with no match in customers_prepared.
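To see why the query counts orphaned orders, you can run the same LEFT JOIN ... IS NULL anti-join against toy tables outside Dataiku. This sketch assumes Python's built-in sqlite3 module and hypothetical rows; in Dataiku, the query runs against your SQL connection instead.

```python
import sqlite3

# Two tiny in-memory tables standing in for orders_filtered and
# customers_prepared. The rows are hypothetical toy data.
conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE orders_filtered (order_id INTEGER, customer_id TEXT);
    CREATE TABLE customers_prepared ("customerID" TEXT);
    INSERT INTO customers_prepared VALUES ('c1'), ('c2');
    INSERT INTO orders_filtered VALUES (1, 'c1'), (2, 'c2'), (3, 'c9');
    """
)

# Same anti-join as the tutorial query: LEFT JOIN keeps every order,
# and the WHERE clause keeps only orders that matched no customer.
MISSING_KEY_COUNT_SQL = """
SELECT COUNT(*) AS missing_key_count
FROM "orders_filtered" o
LEFT JOIN "customers_prepared" c ON o."customer_id" = c."customerID"
WHERE c."customerID" IS NULL;
"""

(missing_key_count,) = conn.execute(MISSING_KEY_COUNT_SQL).fetchone()
print(missing_key_count)  # → 1
```

Because COUNT(*) counts rows after the join, it counts orders rather than distinct customers: a missing customer with several orders would be counted once per order.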

Create an SQL metric

Once you have validated that your query works, let’s use it to create an SQL metric.

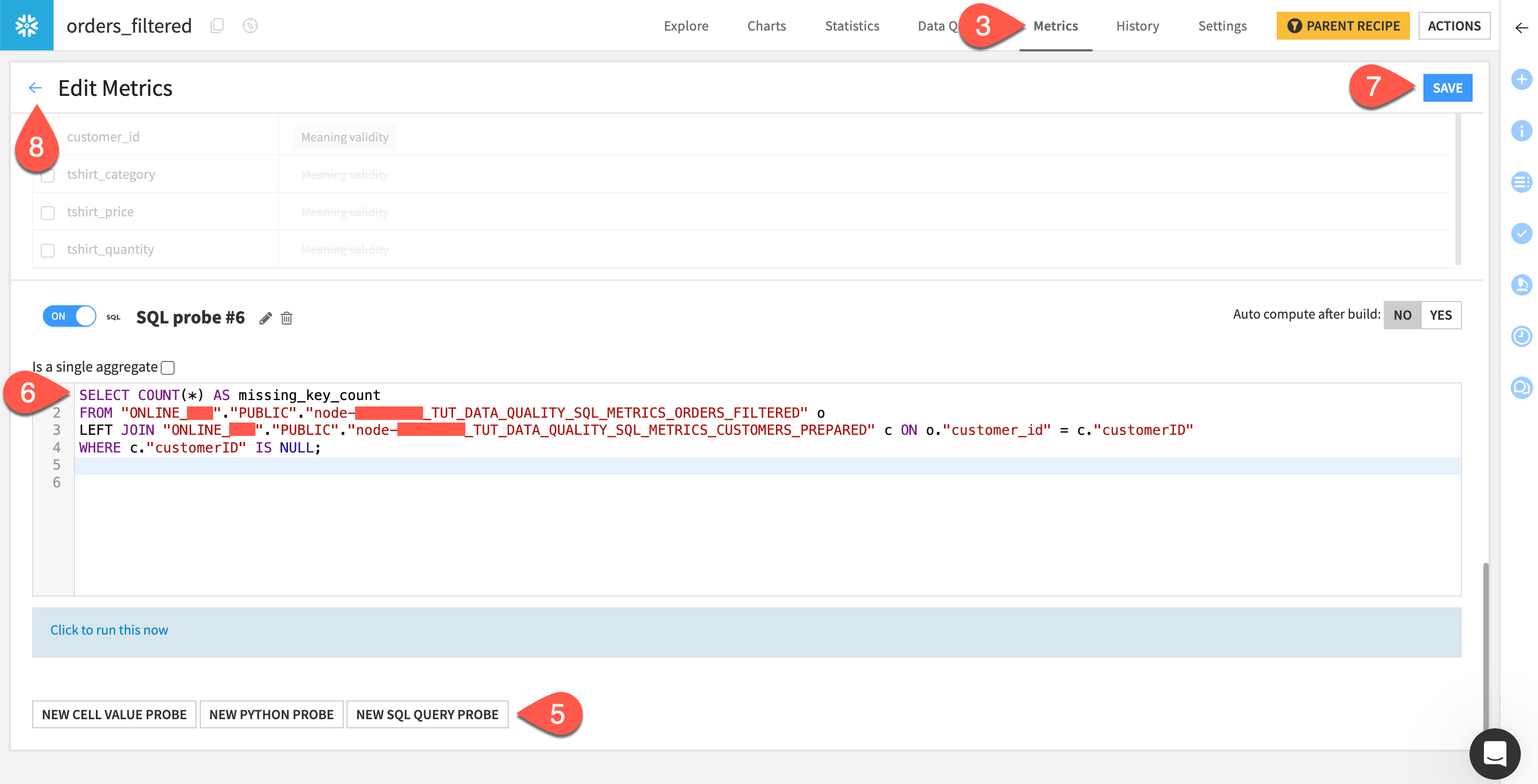

Copy your SQL query.

Return to the Flow and open the orders_filtered dataset.

Navigate to the Metrics tab.

Select Edit Metrics.

At the bottom of the page, click New SQL Query Probe and toggle your metric On.

Paste your SQL query in the code editor.

Save your changes.

Click the back arrow to return to the Metrics page.

Compute the metric

To use this new metric for a data quality rule, you’ll have to compute it first.

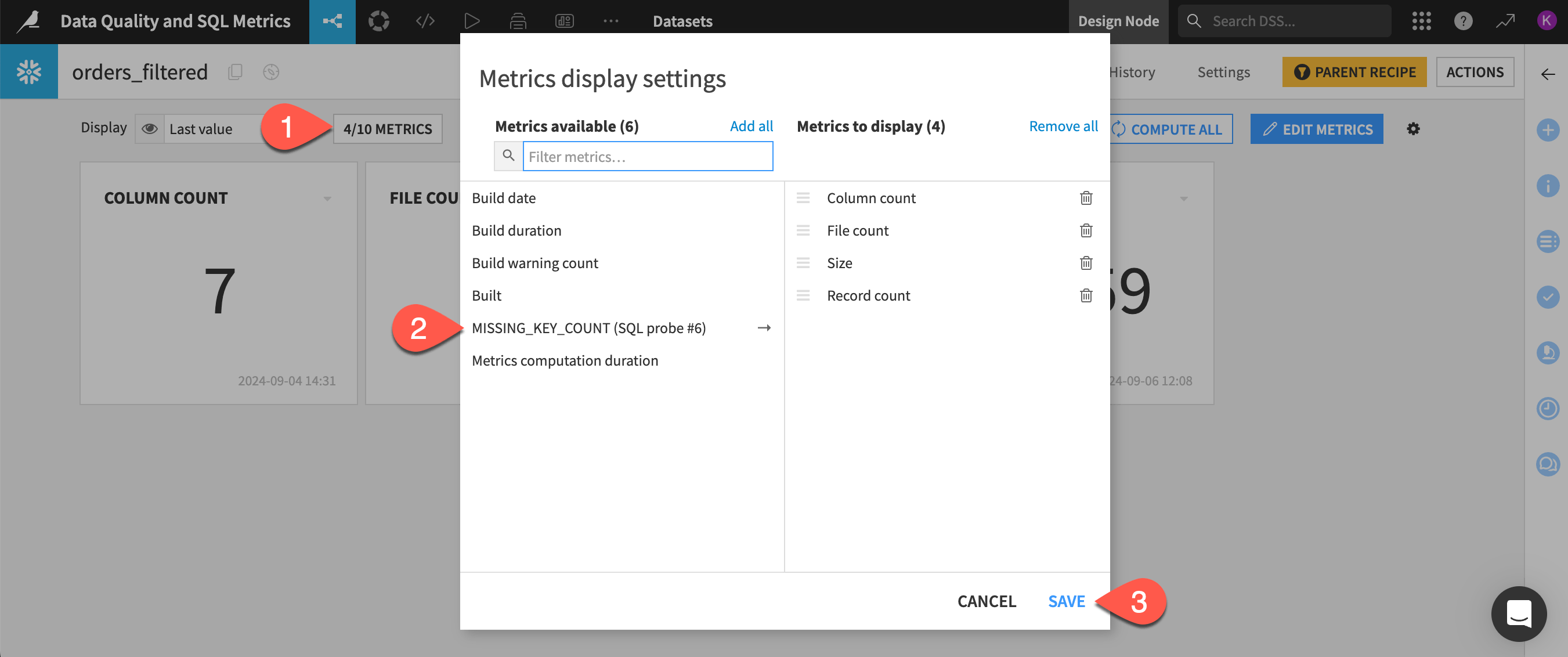

Select X/Y Metrics.

Click on your SQL metric to add it to the Metrics to display column.

Click Save.

Select Compute All to compute all metrics.

You should see a new tile that displays the value of your metric.

Add a data quality rule

Now, you’ll add a new rule that returns a warning if the value of your metric is greater than zero.

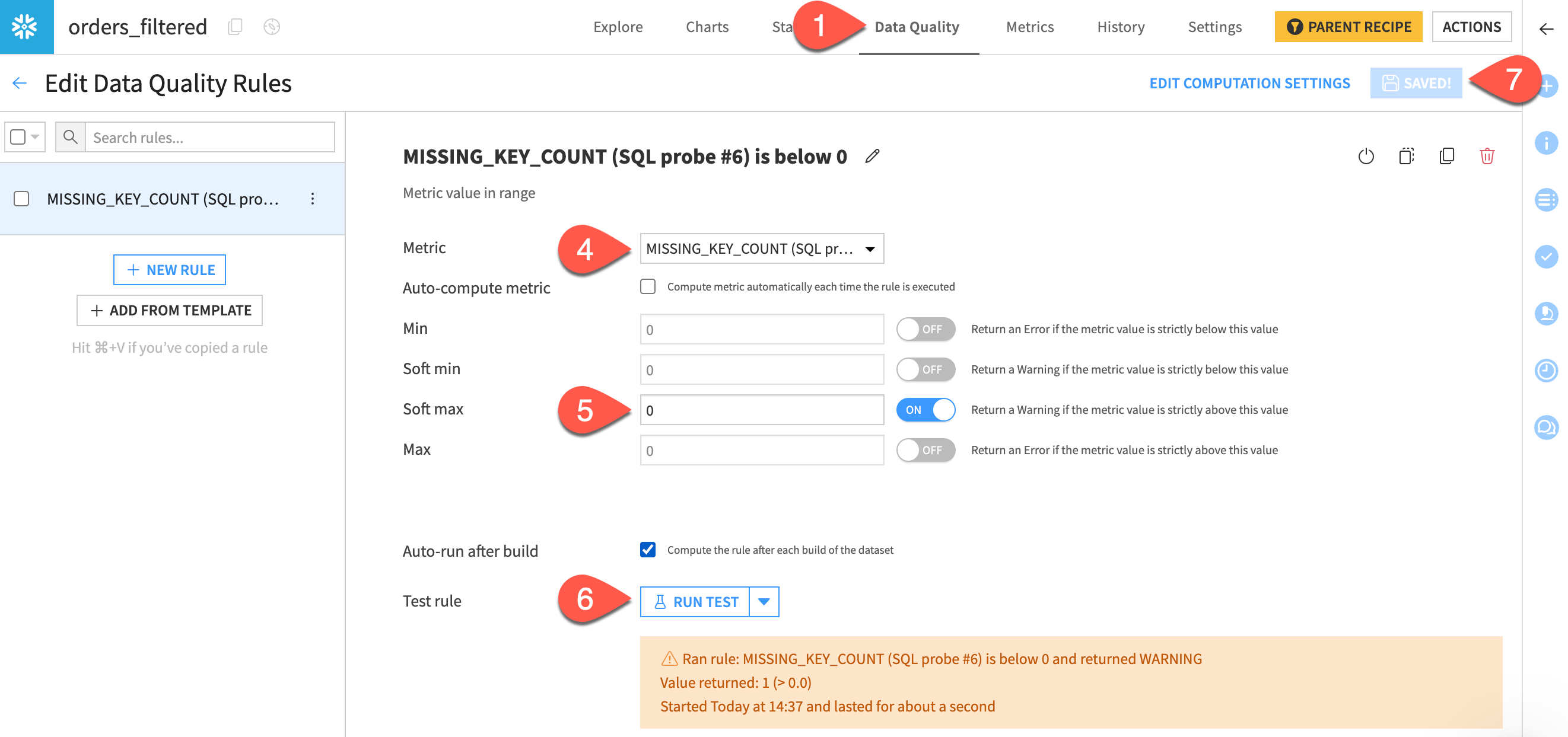

Open the Data Quality tab.

Select Edit Rules.

Click Metric value in range.

In the Metric dropdown, select your custom SQL metric.

Toggle on the Soft max option and ensure that the value is 0.

Click Run Test to quickly view the result of the rule.

Save your work.

The result is a warning that not every foreign key (a customer ID in the orders_filtered dataset) references an existing primary key (a customer ID in the customers_prepared dataset).
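The outcome of a Metric value in range rule can be modeled as a simple comparison. This is a hypothetical sketch, not Dataiku's API: it assumes that values outside the soft bounds yield a warning, that values outside the hard min/max yield an error, and that bounds are inclusive. The function name and status strings are illustrative.

```python
# Hypothetical model of a "Metric value in range" rule; not Dataiku's API.
# Assumptions: soft bounds -> WARNING, hard bounds -> ERROR, bounds inclusive.


def evaluate_range_rule(value, min_=None, soft_min=None, soft_max=None, max_=None):
    """Return "ERROR", "WARNING", or "OK" for a single metric value."""
    # Hard bounds are checked first, so they take precedence over soft bounds.
    if (min_ is not None and value < min_) or (max_ is not None and value > max_):
        return "ERROR"
    if (soft_min is not None and value < soft_min) or (
        soft_max is not None and value > soft_max
    ):
        return "WARNING"
    return "OK"


# With only Soft max = 0 enabled, as in the tutorial:
print(evaluate_range_rule(1, soft_max=0))  # → WARNING
print(evaluate_range_rule(0, soft_max=0))  # → OK
```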

Investigate the warning

In most real-world cases, you would want to investigate and resolve the source of the warning.

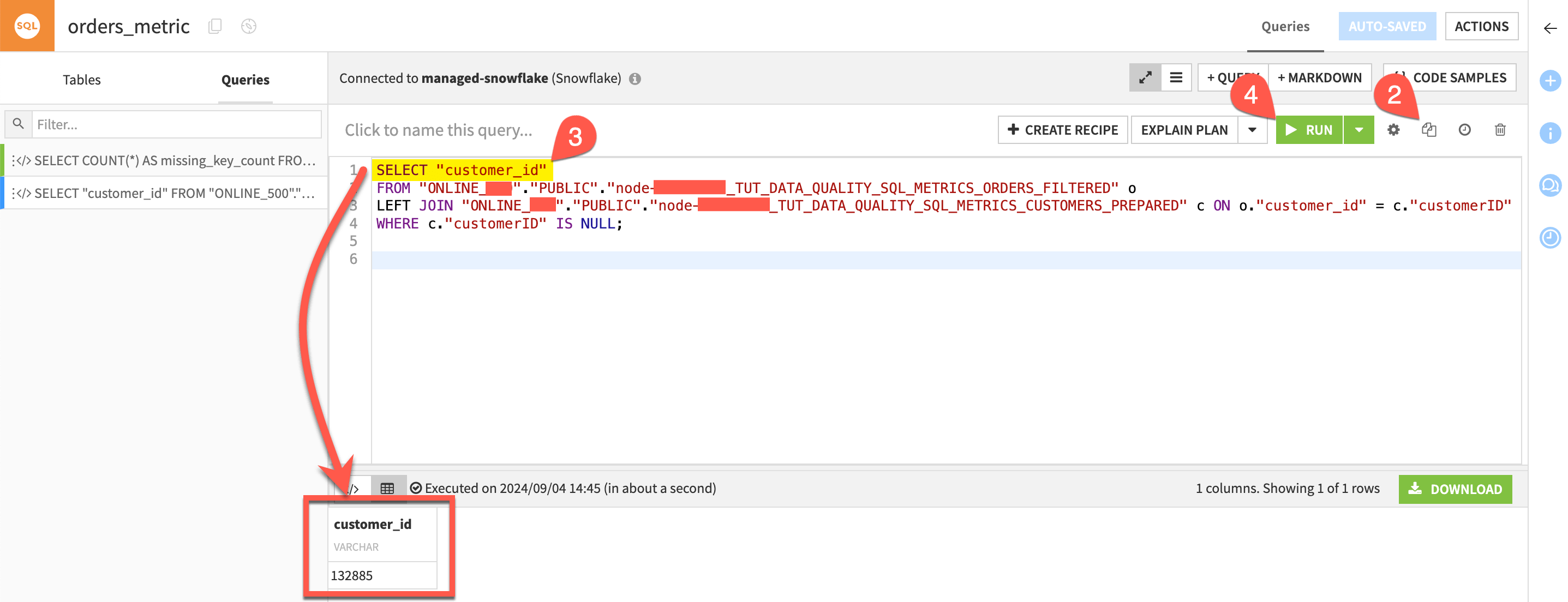

Return to your orders_metric SQL notebook.

Click the Duplicate query icon.

Replace SELECT COUNT(*) AS missing_key_count with SELECT "customer_id".

Click Run to execute the query.

The query should return the ID of the customer who is missing from customers_prepared.
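The duplicated query keeps the same anti-join but selects the unmatched key instead of counting rows. The sketch below runs it against hypothetical toy tables with Python's built-in sqlite3 module. It qualifies the column as o."customer_id" for clarity and adds DISTINCT, an optional change not in the tutorial, so that a missing customer with several orders is listed only once.

```python
import sqlite3

# Hypothetical toy data: customer c9 placed two orders but has no
# matching row in customers_prepared.
conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE orders_filtered (order_id INTEGER, customer_id TEXT);
    CREATE TABLE customers_prepared ("customerID" TEXT);
    INSERT INTO customers_prepared VALUES ('c1'), ('c2');
    INSERT INTO orders_filtered VALUES (1, 'c1'), (2, 'c2'), (3, 'c9'), (4, 'c9');
    """
)

# Same anti-join as the count query, but returning the orphaned keys.
MISSING_CUSTOMERS_SQL = """
SELECT DISTINCT o."customer_id"
FROM "orders_filtered" o
LEFT JOIN "customers_prepared" c ON o."customer_id" = c."customerID"
WHERE c."customerID" IS NULL;
"""

missing_ids = [row[0] for row in conn.execute(MISSING_CUSTOMERS_SQL)]
print(missing_ids)  # → ['c9']
```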

Tip

One way to apply your data quality rule is to use a Verify rules or run checks step in a scenario.

If the data quality rule returns a warning, you can block the rebuilding of a data pipeline during a scenario run. In this case, you could prevent the orders_joined dataset from being built if a customer is missing from the customers_prepared dataset. You could also add scenario steps that execute SQL and report the missing customer.
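The gating idea in this tip can be sketched as plain control flow. This is a hypothetical model, not Dataiku's scenario API: the RuleStatus enum, the step labels, and the block_on_warning flag are illustrative names. In Dataiku, you would configure the equivalent behavior with scenario steps rather than write this code.

```python
from enum import Enum


class RuleStatus(Enum):
    """Hypothetical rule outcomes used only for this illustration."""

    OK = "OK"
    WARNING = "WARNING"
    ERROR = "ERROR"


def plan_scenario(rule_status, block_on_warning=True):
    """Return the hypothetical steps a scenario would run after the check."""
    steps = ["verify_rules:orders_filtered"]
    # Block the downstream build on an error, and on a warning if requested.
    blocked = rule_status is RuleStatus.ERROR or (
        block_on_warning and rule_status is RuleStatus.WARNING
    )
    if blocked:
        # Report the orphaned customers instead of building orders_joined.
        steps.append("execute_sql:report_missing_customers")
    else:
        steps.append("build:orders_joined")
    return steps


print(plan_scenario(RuleStatus.WARNING))
# → ['verify_rules:orders_filtered', 'execute_sql:report_missing_customers']
print(plan_scenario(RuleStatus.OK))
# → ['verify_rules:orders_filtered', 'build:orders_joined']
```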

Next steps

In this tutorial, you learned that using data quality checks can help you maintain referential integrity by identifying and potentially handling invalid foreign key references.

Next, try to create different metrics and data quality rules to validate your SQL datasets!

See also

Find more information on Data Quality Rules in the reference documentation.